-

Notifications

You must be signed in to change notification settings - Fork 14.3k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Wait for pipeline state in Data Fusion operators #8954

Wait for pipeline state in Data Fusion operators #8954

Conversation

| pipeline_name=self.pipeline_name, | ||

| instance_url=api_url, | ||

| namespace=self.namespace, | ||

| runtime_args=self.runtime_args | ||

|

|

||

| ) | ||

| self.log.info("Pipeline started") | ||

| hook.wait_for_pipeline_state( | ||

| success_states=[PipelineStates.COMPLETED], |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This operator is name CloudDataFusionStartPipelineOperator (key word start).

IMO that means PipelineStates.RUNNING should be a success state by default.

However, it might be worth allowing the user to optionally control these success states to span more use cases.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've added success_states as an optional argument. If not provided the operator will wait for operation to be RUNNING

| runtime_args = json.loads(pipe["properties"]["runtimeArgs"]) | ||

| if runtime_args[job_id_key] == faux_pipeline_id: | ||

| return pipe["runid"] | ||

| sleep(10) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

How often do you notice the first request failing? If >50% then we expect the program run doesn't show up for some time (seconds) and we can expect first iteration to fail not work, do we expect this loop to happen at least 2-3x?

Could we move the sleep to the beginning of the loop body to increase likelihood that this loop exits on an earlier iteration? Hopefully save API calls we don't expect to yield a successful get on the program run.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Usually it's successful on 2nd loop but sometimes I get to the 3rd. I will move the sleep to the beginning as you suggested so it should decrease number of requests.

| # may not be present instantly | ||

| for _ in range(5): | ||

| response = self._cdap_request(url=url, method="GET") | ||

| if response.status != 200: |

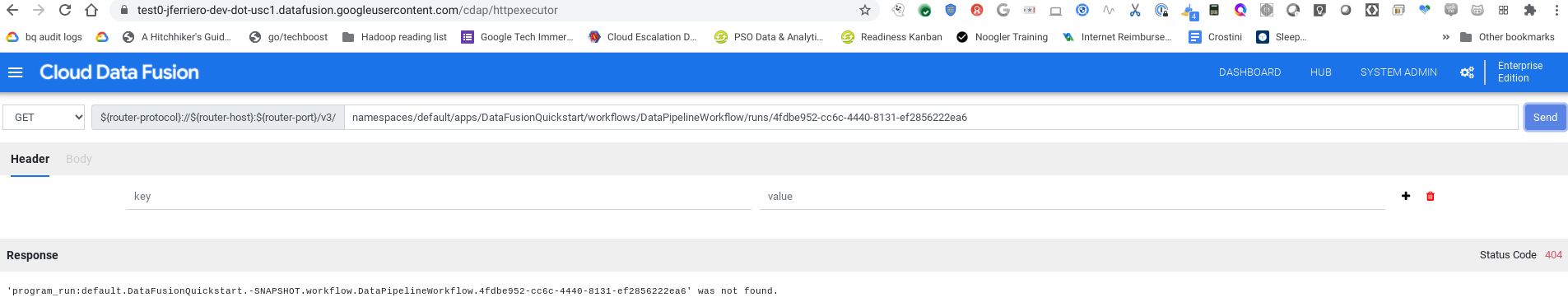

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

FYI you can get to this convenient UI by clicking system admin > configuration > Make HTTP calls

It's very useful for getting used to / testing the CDAP REST API.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

You are requesting .../runs/run-id, the code here is calling .../runs to get list of all runs because we don't know yet the proper CDAP run-id. I assume that this request should be successful unless something wrong is with API / network.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah I see, my mistake.

You'll get 200 and empty collection if there's no runs.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, so I will retry the request. I am not sure if there's anything we can do about this. When we call this method we are expecting to see some runs

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

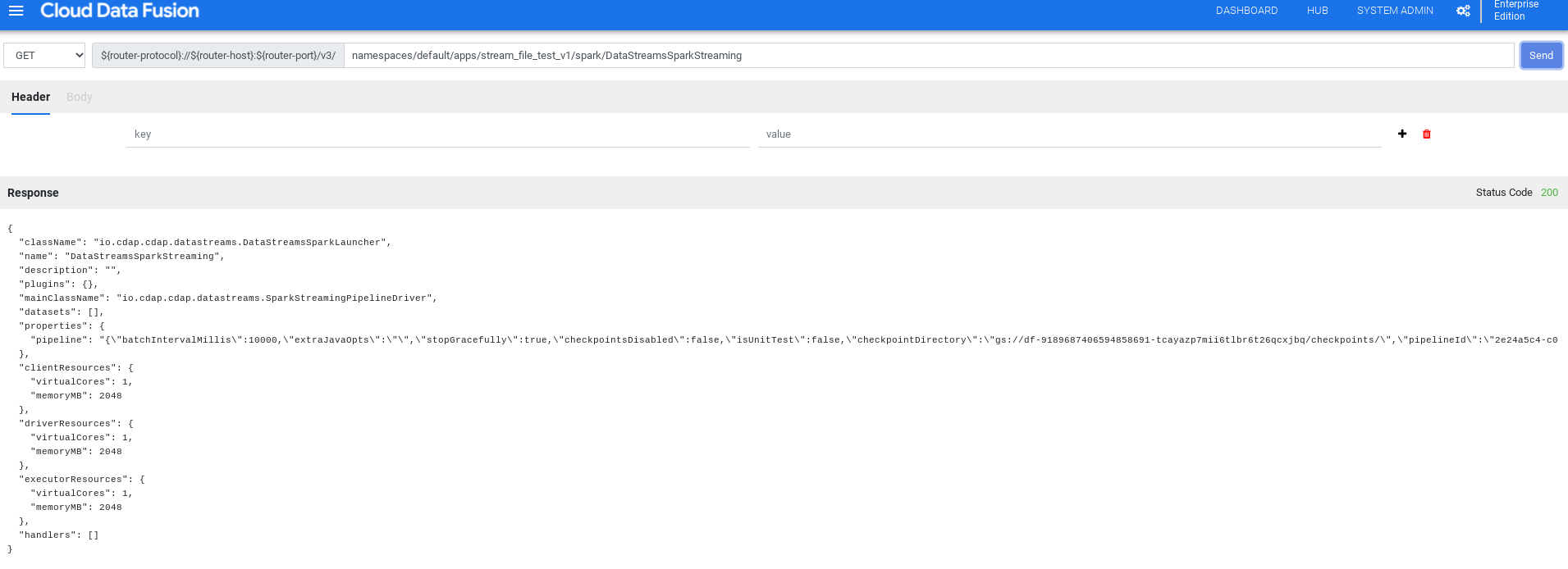

@jaketf the API that CDAP exposes is the basic building blocks of programs. Which are workflows, spark, mapreduce jobs etc. The Data Fusion pipelines use workflows for batch jobs and spark streaming jobs for realtime. The operators should wait for the batch jobs and not wait for the streaming ones.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@sreevatsanraman how can we distinguish those two types? According to Data Fusion CDAP API reference users should use the same endpoint to start both batch and streaming pipelines:

https://cloud.google.com/data-fusion/docs/reference/cdap-reference#start_a_batch_pipeline

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I think we should just handle batch pipelines in this PR (as this is implicitly all the current operator does). Also, anecdotally, I think this covers 90% of use cases for airflow. In the field i have not see a lot of streaming orchestration with airflow.

| namespace, | ||

| "apps", | ||

| pipeline_name, | ||

| "workflows", |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This CDAP API is very convoluted.

Seems like there are several program types and this is hard coding workflows and DataPipelineWorkflow .

I think DataPipelineWorkflow this will not be present for streaming pipelines instead you have to poll a spark program.

I'm not sure how many other scenarios require other program types.

It would be good to get someone from CDAP community to review this.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

CC: @sreevatsanraman

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This seems to be a bigger change because we will have to adjust each method that uses DataPipelineWorkflow in URI. So, I would say we can do this but in a follow up PR.

Btw. I was relying on Google docs: https://cloud.google.com/data-fusion/docs/reference/cdap-reference#start_a_batch_pipeline

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As long as we cover batch pipelines (with spark or MR backend I think we should be good)

|

Hi @jaketf @sreevatsanraman what should we do to move this forward? |

|

Thanks for following up @turbaszek. I've updated the threads. I agree I think we should keep this PR small and focused on patching the existing operator for starting data fusion batch pipelines. In general I think batch is more used than streaming and spark is more used than MR. |

2cdd840

to

0458a4c

Compare

| namespace: str = "default", | ||

| pipeline_timeout: int = 10 * 60, |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If the success state is COMPLETED, we will timeout before the pipeline run completes.

It can take > 5 minutes to just provision a Data Fusion pipeline run. Some pipelines can take hours to complete.

Can we increase the default timeout to 1 hour?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The contract of this operator is to start a pipeline. not wait til pipeline completion.

10 mins is reasonable timeout.

COMPLETED is just a success state in case it's a super quick pipeline that completes between polls.

We can add a sensor for waiting on pipeline completion (which should use reschedule mode if it expects to be so long).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

As part of these changes you can now pass in a parameter to have the operator wait for pipeline completion (not just pipeline start).

sensor + reschedule mode sounds like a good suggestion, thanks

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've created an issue to limit scope of this PR

#9300

| @@ -616,6 +625,9 @@ class CloudDataFusionStartPipelineOperator(BaseOperator): | |||

| :type pipeline_name: str | |||

| :param instance_name: The name of the instance. | |||

| :type instance_name: str | |||

| :param success_states: If provided the operator will wait for pipeline to be in one of | |||

| the provided states. | |||

| :type success_states: List[str] | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

missing info for new pipeline_timeout parameter

| "programId": "DataPipelineWorkflow", | ||

| "runtimeargs": runtime_args | ||

| }] | ||

| response = self._cdap_request(url=url, method="POST", body=body) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Just an FYI - this is the API request to start multiple pipelines.

There will eventually be a fix return the run Id as part of the API request to run a single pipeline.

We can revert to your original URL when this is available. For context:

https://issues.cask.co/browse/CDAP-7641

fixup! Wait for pipeline state in Data Fusion operators fixup! fixup! Wait for pipeline state in Data Fusion operators fixup! fixup! fixup! Wait for pipeline state in Data Fusion operators

f370ade

to

76ff806

Compare

* Wait for pipeline state in Data Fusion operators fixup! Wait for pipeline state in Data Fusion operators fixup! fixup! Wait for pipeline state in Data Fusion operators fixup! fixup! fixup! Wait for pipeline state in Data Fusion operators * Use quote to encode url parts * fixup! Use quote to encode url parts

Closes: #8673

Make sure to mark the boxes below before creating PR: [x]

In case of fundamental code change, Airflow Improvement Proposal (AIP) is needed.

In case of a new dependency, check compliance with the ASF 3rd Party License Policy.

In case of backwards incompatible changes please leave a note in UPDATING.md.

Read the Pull Request Guidelines for more information.