-

Notifications

You must be signed in to change notification settings - Fork 13.8k

[FLINK-30479][doc] Document flink-connector-files for local execution #21549

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

3d0a250 to

1ace9fc

Compare

| NOTE: If you use the filesystem connector for [local execution]({{< ref "docs/dev/dataset/local_execution" >}}), | ||

| for e.g. running Flink job in your IDE, you will need to add dependency. | ||

|

|

||

| ```xml |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In the PR description, we mentioned why this does not use the sql_download_table shortcodes like {{< sql_download_table "files" >}}.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think this is necessarily the best method. I don't think this should be resolved per connector, but there is more value in documenting centrally what's needed to run things locally. If we do it per connector, you need to do it for all connectors, but also for things like running things that require Hadoop etc. WDYT?

|

Yeah, documenting centrally sounds good. Maybe I'm limited by how I build the jobs with connectors - I shade all connectors (and dependencies) into the uber job jar. For other connectors (e.g. Kafka), I add the dependency to the Flink job following the Maven snippet in each connector's doc page. That works for both local execution (IDE) and remote deployment. Filesystem connector is a bit special because it's in the Flink deploy (so no need to shade) but not ready for local execution. Adding "provided" scope dependency for this connector solves my problem. I don't find other connectors dependency needs to change for local execution. I'm thinking where it would be a good central place. There is a short guide for setting Hadoop dependencies for local execution. Do you think it's a good idea to write a new section in the Connectors and Formats page or Advanced Configuration Topics page? |

What is the purpose of the change

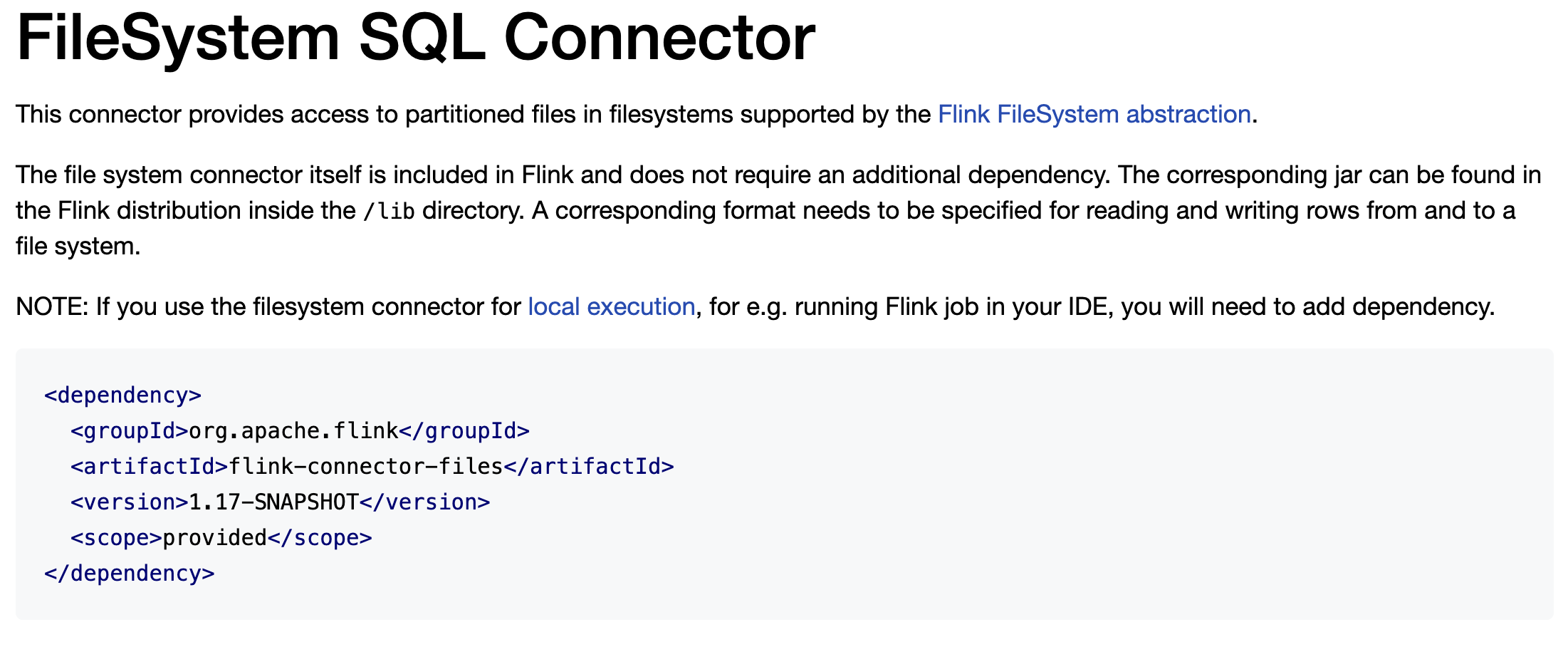

The file system SQL connector itself is included in Flink and does not require an additional dependency. However, if a user uses the filesystem connector for local execution, for e.g. running Flink job in the IDE, she will need to add dependency. Otherwise, the user will get validation exception:

Cannot discover a connector using option: 'connector'='filesystem'. This is confusing and can be documented.Brief change log

The scope of the files connector dependency should be

provided, because they should not be packaged into the job JAR file. So we do not use thesql_download_tableshortcodes like{{< sql_download_table "files" >}}. Also that shortcodes has texts saying the dependencies are required for SQL Client with SQL JAR bundles. That is not applicable to files connector as it's already shipped int he/libdirectlory.Verifying this change

This is a doc change, and I have tested it rendered locally.

Does this pull request potentially affect one of the following parts:

@Public(Evolving): (yes / no)Documentation