Don't store graph offsets for HNSW graph #536

Currently, for each node we store an offset into the graph neighbours file that says where to read that node's neighbours. We also load these offsets onto the heap. Instead of storing offsets, this patch calculates them when needed, which saves both heap space and the disk space used for offsets.

To make offsets calculable:

1. We write neighbours as Int instead of the current VInt (some extra space here).
2. For each node we allocate `(maxConn + 1) * 4` bytes for storing the node's neighbours, where `maxConn` is the maximum number of connections a node can have. If a node has fewer than `maxConn` neighbours, we add padding to fill the leftover space (some extra space here).

In big graphs most nodes have `maxConn` neighbours, so there should not be much wasted space.
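To illustrate the fixed-width layout described above, here is a minimal, self-contained sketch. It is not the code from this patch: the class and method names are hypothetical, it uses a plain `java.nio.ByteBuffer` in place of Lucene's index input/output classes, and it assumes the extra slot in `(maxConn + 1)` holds the neighbour count.

```java
import java.nio.ByteBuffer;
import java.util.Arrays;

/** Hypothetical sketch of fixed-width neighbour records; names are illustrative, not Lucene's. */
final class FixedWidthNeighbours {

  /** Bytes reserved per node: one int for the neighbour count (an assumption) plus maxConn int slots. */
  static long bytesPerNode(int maxConn) {
    return (maxConn + 1L) * Integer.BYTES;
  }

  /** The offset is computed from the node ordinal instead of being stored. */
  static long offsetOf(int node, int maxConn) {
    return node * bytesPerNode(maxConn);
  }

  /** Writes every node as a fixed-width record, padding unused neighbour slots with zeros. */
  static ByteBuffer write(int[][] neighbours, int maxConn) {
    int total = Math.toIntExact(neighbours.length * bytesPerNode(maxConn));
    ByteBuffer buf = ByteBuffer.allocate(total);
    for (int[] nbrs : neighbours) {
      if (nbrs.length > maxConn) {
        throw new IllegalArgumentException("node has more than maxConn neighbours");
      }
      buf.putInt(nbrs.length);            // neighbour count
      for (int n : nbrs) {
        buf.putInt(n);                    // each neighbour as a 4-byte int
      }
      for (int pad = nbrs.length; pad < maxConn; pad++) {
        buf.putInt(0);                    // padding so every record has the same width
      }
    }
    buf.flip();
    return buf;
  }

  /** Reads one node's neighbours by jumping straight to its computed offset. */
  static int[] read(ByteBuffer buf, int node, int maxConn) {
    int pos = Math.toIntExact(offsetOf(node, maxConn));
    int count = buf.getInt(pos);
    int[] out = new int[count];
    for (int i = 0; i < count; i++) {
      out[i] = buf.getInt(pos + Integer.BYTES * (i + 1));
    }
    return out;
  }

  public static void main(String[] args) {
    int maxConn = 4;
    int[][] graph = {{1, 2}, {0, 2, 3, 4}, {0, 1}, {1}, {1}};
    ByteBuffer buf = write(graph, maxConn);
    System.out.println(offsetOf(3, maxConn));                     // 60
    System.out.println(Arrays.toString(read(buf, 1, maxConn)));   // [0, 2, 3, 4]
  }
}
```

Because every record has the same width, no per-node offset table has to be written to disk or held on the heap; the cost is the padding and the wider Int encoding.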

lucene/core/src/java/org/apache/lucene/codecs/lucene90/Lucene90HnswVectorsReader.java

I seem to remember that when I checked (you can use |

@msokolov Thanks for the initial review, it is good to know that we are ok with this idea. I will do the comparison of size and also the |

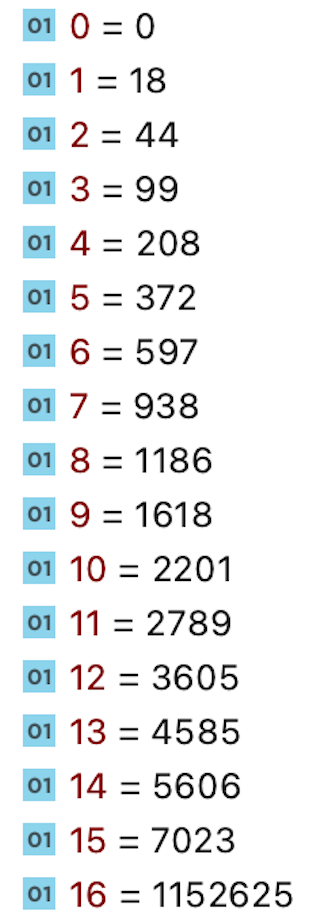

I've checked the index sizes, and the size actually increased by 4-5% on both glove-100-angular and sift-128-euclidean. With the proposed design, even though we save space by not storing offsets, we encode each node's neighbours as Int instead of the current VInt, which uses more disk space. On the upside:

glove-100-angular

sift-128-euclidean

I've also run the comparison on a bigger dataset: deep-image-96-angular of 10M docs. Disk size before the change: 4.2G; after change: 4.3G => 2% increase
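As a rough illustration of why switching from VInt to Int costs disk space, the sketch below compares the bytes needed for one node's neighbours under each encoding. The class and method names are hypothetical. The VInt length follows the usual 7-bits-per-byte variable-length scheme, and the sketch assumes the raw neighbour ordinals are what get written; it does not model the exact on-disk layout of either format.

```java
/** Hypothetical size comparison between variable-length and fixed-width neighbour encodings. */
final class NeighbourEncodingSize {

  /** Bytes used by a variable-length int: 7 payload bits per byte, high bit marks continuation. */
  static int vIntLength(int value) {
    int len = 1;
    while ((value & ~0x7F) != 0) {
      value >>>= 7;
      len++;
    }
    return len;
  }

  /** Total bytes if each neighbour ordinal is written as a variable-length int. */
  static long vIntBytes(int[] neighbours) {
    long total = 0;
    for (int n : neighbours) {
      total += vIntLength(n);
    }
    return total;
  }

  /** Bytes for a fixed-width record of (maxConn + 1) ints, regardless of how many slots are used. */
  static long fixedBytes(int maxConn) {
    return (maxConn + 1L) * Integer.BYTES;
  }

  public static void main(String[] args) {
    int[] neighbours = {5, 120, 300, 20_000, 1_000_000};
    System.out.println("VInt bytes:  " + vIntBytes(neighbours));
    System.out.println("Fixed bytes: " + fixedBytes(16));
  }
}
```

The fixed-width record is larger per node, but in exchange the per-node offsets no longer need to be stored, which is the trade-off measured in the numbers above.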

Thanks for the thorough testing, @mayya-sharipova. I think we want to minimize heap usage, and the index size cost is small; basically we are trading on-heap for on-disk/off-heap, which is always a tradeoff we like. The search time change seems like noise? So +1 from me. Also, glad to see the fanout numbers are sane :)

@mayya-sharipova this looks like a nice improvement. We should make sure it's done in a backwards compatible way though, so we can still read vectors that were written in Lucene 9.0. Here's a guide for making index format changes: https://github.com/apache/lucene/blob/main/lucene/backward-codecs/README.md. I think we'll want to create a new class |

Oh sorry, I didn't see this was merged into the |

@jtibshirani Thanks for the guide on the format change, I will study it and follow it.

Currently HNSW has only a single layer. This patch makes the HNSW graph multi-layered. This PR is based on the following PRs: apache#250, apache#267, apache#287, apache#315, apache#536, apache#416

Main changes:

- Multiple layers are introduced into HnswGraph and HnswGraphBuilder.
- A new Lucene91HnswVectorsFormat, with a new Lucene91HnswVectorsReader and Lucene91HnswVectorsWriter, is introduced to encode the graph layers' information.
- Lucene90Codec, Lucene90HnswVectorsFormat, and the reading logic of Lucene90HnswVectorsReader and Lucene90HnswGraph are moved to backward_codecs to support reading and searching graphs built in pre-9.1 versions. Lucene90HnswVectorsWriter is deleted.
- For backwards-compatibility tests, the previous Lucene90 graph reading and writing logic was copied into the test files Lucene90RWHnswVectorsFormat, Lucene90HnswVectorsWriter, Lucene90HnswGraphBuilder and Lucene90HnswRWGraph.

TODO: tests for KNN search on graphs built in pre-9.1 versions; tests for merging indices of pre-9.1 and current versions.
Currently we store for each node an offset in the graph neighbours file from where to read this node's neighbours. We also load these offsets into the heap. Instead of storing offsets, this patch calculates them when needed, which saves heap space and the disk space used for offsets.

To make offsets calculable:

1) we write neighbours as Int instead of the current VInt to have predictable offsets (some extra space here)

2) for each node we allocate ((maxConn + 1) * 4) bytes for storing the node's neighbours, where "maxConn" is the maximum number of connections the node can have. If a node has fewer than maxConn neighbours, we add extra padding to fill the leftover space (some extra space here).

In big graphs most nodes have "maxConn" neighbours, so there should not be much wasted space.