-

Notifications

You must be signed in to change notification settings - Fork 28.8k

[SPARK-34246][SQL] New type coercion syntax rules in ANSI mode #31349

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

[SPARK-34246][SQL] New type coercion syntax rules in ANSI mode #31349

Conversation

5233edb to

0faed1f

Compare

|

Note: I have added some follow-ups for this new feature https://issues.apache.org/jira/browse/SPARK-34246. |

|

Test build #134509 has started for PR 31349 at commit |

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/TypeCoercion.scala

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

... promotion to string seems not true for ansi mode?

dfabacc to

4a8ce27

Compare

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #134562 has finished for PR 31349 at commit

|

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This update is pretty nice, thanks, @gengliangwang ! Btw, could you summarize major behaviour changes from the existing one in Does this PR introduce any user-facing change? The description about the new behaivour looks pretty clear, but it is not easy to understand which implicit cast behavior will change, actually.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

private

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/AnsiTypeCoercion.scala

Outdated

Show resolved

Hide resolved

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/AnsiTypeCoercion.scala

Outdated

Show resolved

Hide resolved

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/AnsiTypeCoercion.scala

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The standard says something about this behaviour, too?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

No, this is not from the standard. It is learned from PostgreSQL. I planed to put this feature in another follow-up PR. But there were multiple test failures from the "SQLQueryTestSuite", so I did it in this PR as well.

What do you think of it?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I just updated the comment to claim that it is not from the standard.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Also, this is for passing TPCDSQueryANSISuite.

E.g. without promoting string literals, q83 will fail with

org.apache.spark.sql.AnalysisException: cannot resolve '(spark_catalog.default.date_dim.`d_date` IN ('2000-06-30', '2000-09-27', '2000-11-17'))' due to data type mismatch: Arguments must be same type but were: date != string; line 12 pos 17;

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #134595 has finished for PR 31349 at commit

|

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #134608 has finished for PR 31349 at commit

|

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/AnsiTypeCoercion.scala

Outdated

Show resolved

Hide resolved

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

What's a relationship between AnsiTypeCoercion and DecimalPrecision? In the existing DecimalPrecision, it seems all the (decimal, decimal) cases are handled in DecimalPrecision though.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Here it I am merging findWiderTypeForDecimal into findTightestCommonType, to make the logic more clear.

For the rule DecimalPrecision, it decides the result data type of various operators involving decimals, e.g. Add, Subtract.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Ah, ok. The latest change looks good.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

nit: _ @ StringType() => StringType()

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

hm, string literal=>numeric literals is allowed, but numeric literals=>string literals is disallowed?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, only string literals are specially handled.

|

Kubernetes integration test starting |

|

Kubernetes integration test status failure |

|

Test build #134809 has finished for PR 31349 at commit

|

|

Test build #134839 has finished for PR 31349 at commit

|

|

retest this please. |

|

Kubernetes integration test starting |

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/AnsiTypeCoercion.scala

Outdated

Show resolved

Hide resolved

sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/analysis/AnsiTypeCoercion.scala

Outdated

Show resolved

Hide resolved

|

|

||

| -- !query | ||

| CREATE TABLE withz USING parquet AS SELECT i AS k, CAST(i AS string) || ' v' AS v FROM (SELECT EXPLODE(SEQUENCE(1, 16, 3)) i) | ||

| CREATE TABLE withz USING parquet AS SELECT i AS k, CAST(i || ' v' AS string) v FROM (SELECT EXPLODE(SEQUENCE(1, 16, 3)) i) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I see what's going on now. This test is for the SQL WITH feature, not string concat, and we lose test coverage because we failed to create the table and following queries all fail.

Let's change it to CAST(i AS string) || ' v'.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yeah, that was my purpose.

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Kubernetes integration test starting |

|

Kubernetes integration test status success |

|

Test build #135382 has finished for PR 31349 at commit

|

|

Test build #135381 has finished for PR 31349 at commit

|

|

It looks like a valid failure. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I've checked the latest changes and it looks fine.

|

GA passes. Merging to master. |

|

Test build #135403 has finished for PR 31349 at commit

|

…ype` for backward compatibility ### What changes were proposed in this pull request? Change the definition of `findTightestCommonType` from ``` def findTightestCommonType(t1: DataType, t2: DataType): Option[DataType] ``` to ``` val findTightestCommonType: (DataType, DataType) => Option[DataType] ``` ### Why are the changes needed? For backward compatibility. When running a MongoDB connector (built with Spark 3.1.1) with the latest master, there is such an error ``` java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.analysis.TypeCoercion$.findTightestCommonType()Lscala/Function2 ``` from https://github.com/mongodb/mongo-spark/blob/master/src/main/scala/com/mongodb/spark/sql/MongoInferSchema.scala#L150 In the previous release, the function was ``` static public scala.Function2<org.apache.spark.sql.types.DataType, org.apache.spark.sql.types.DataType, scala.Option<org.apache.spark.sql.types.DataType>> findTightestCommonType () ``` After #31349, the function becomes: ``` static public scala.Option<org.apache.spark.sql.types.DataType> findTightestCommonType (org.apache.spark.sql.types.DataType t1, org.apache.spark.sql.types.DataType t2) ``` This PR is to reduce the unnecessary API change. ### Does this PR introduce _any_ user-facing change? Yes, the definition of `TypeCoercion.findTightestCommonType` is consistent with previous release again. ### How was this patch tested? Existing unit tests Closes #32493 from gengliangwang/typecoercion. Authored-by: Gengliang Wang <ltnwgl@gmail.com> Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

…ype` for backward compatibility ### What changes were proposed in this pull request? Change the definition of `findTightestCommonType` from ``` def findTightestCommonType(t1: DataType, t2: DataType): Option[DataType] ``` to ``` val findTightestCommonType: (DataType, DataType) => Option[DataType] ``` ### Why are the changes needed? For backward compatibility. When running a MongoDB connector (built with Spark 3.1.1) with the latest master, there is such an error ``` java.lang.NoSuchMethodError: org.apache.spark.sql.catalyst.analysis.TypeCoercion$.findTightestCommonType()Lscala/Function2 ``` from https://github.com/mongodb/mongo-spark/blob/master/src/main/scala/com/mongodb/spark/sql/MongoInferSchema.scala#L150 In the previous release, the function was ``` static public scala.Function2<org.apache.spark.sql.types.DataType, org.apache.spark.sql.types.DataType, scala.Option<org.apache.spark.sql.types.DataType>> findTightestCommonType () ``` After apache/spark#31349, the function becomes: ``` static public scala.Option<org.apache.spark.sql.types.DataType> findTightestCommonType (org.apache.spark.sql.types.DataType t1, org.apache.spark.sql.types.DataType t2) ``` This PR is to reduce the unnecessary API change. ### Does this PR introduce _any_ user-facing change? Yes, the definition of `TypeCoercion.findTightestCommonType` is consistent with previous release again. ### How was this patch tested? Existing unit tests Closes #32493 from gengliangwang/typecoercion. Authored-by: Gengliang Wang <ltnwgl@gmail.com> Signed-off-by: Gengliang Wang <ltnwgl@gmail.com>

### What changes were proposed in this pull request? Add documentation for the ANSI implicit cast rules which are introduced from #31349 ### Why are the changes needed? Better documentation. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Build and preview in local:      Closes #33516 from gengliangwang/addDoc. Lead-authored-by: Gengliang Wang <gengliang@apache.org> Co-authored-by: Serge Rielau <serge@rielau.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request? Add documentation for the ANSI implicit cast rules which are introduced from #31349 ### Why are the changes needed? Better documentation. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Build and preview in local:      Closes #33516 from gengliangwang/addDoc. Lead-authored-by: Gengliang Wang <gengliang@apache.org> Co-authored-by: Serge Rielau <serge@rielau.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit df98d5b) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request? Add documentation for the ANSI implicit cast rules which are introduced from apache/spark#31349 ### Why are the changes needed? Better documentation. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Build and preview in local:      Closes #33516 from gengliangwang/addDoc. Lead-authored-by: Gengliang Wang <gengliang@apache.org> Co-authored-by: Serge Rielau <serge@rielau.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com>

### What changes were proposed in this pull request? Add documentation for the ANSI implicit cast rules which are introduced from apache#31349 ### Why are the changes needed? Better documentation. ### Does this PR introduce _any_ user-facing change? No ### How was this patch tested? Build and preview in local:      Closes apache#33516 from gengliangwang/addDoc. Lead-authored-by: Gengliang Wang <gengliang@apache.org> Co-authored-by: Serge Rielau <serge@rielau.com> Signed-off-by: Wenchen Fan <wenchen@databricks.com> (cherry picked from commit df98d5b) Signed-off-by: Wenchen Fan <wenchen@databricks.com>

What changes were proposed in this pull request?

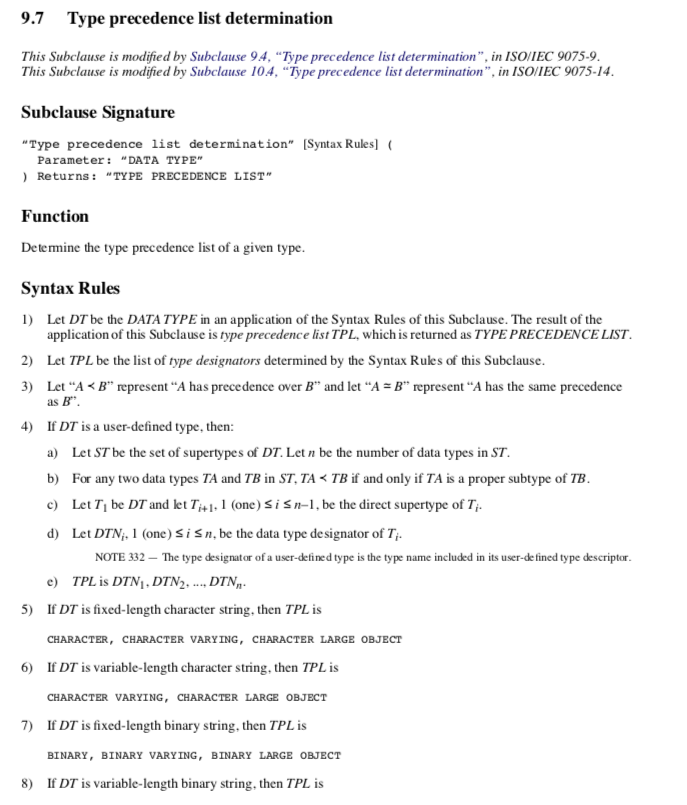

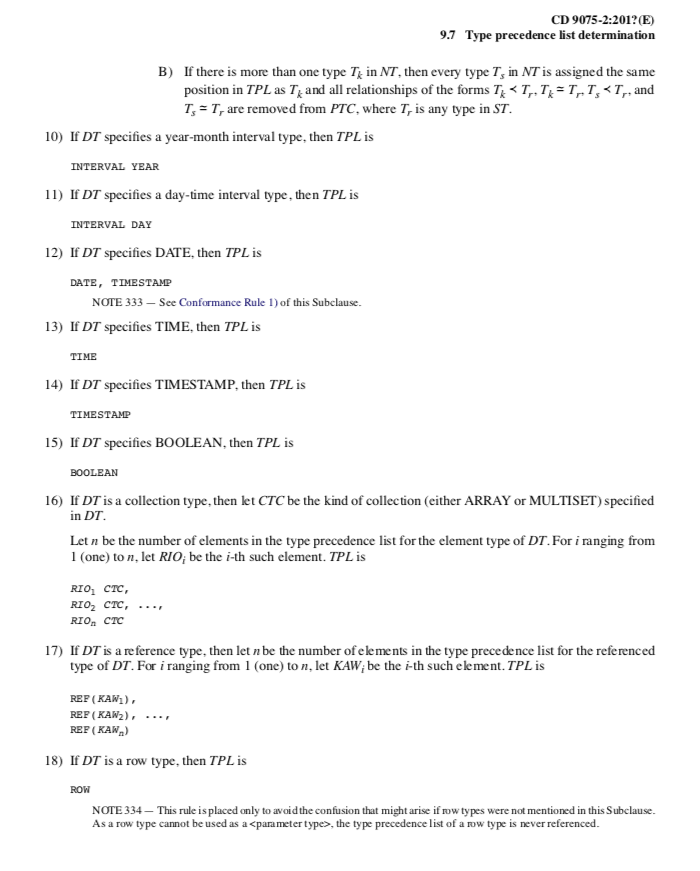

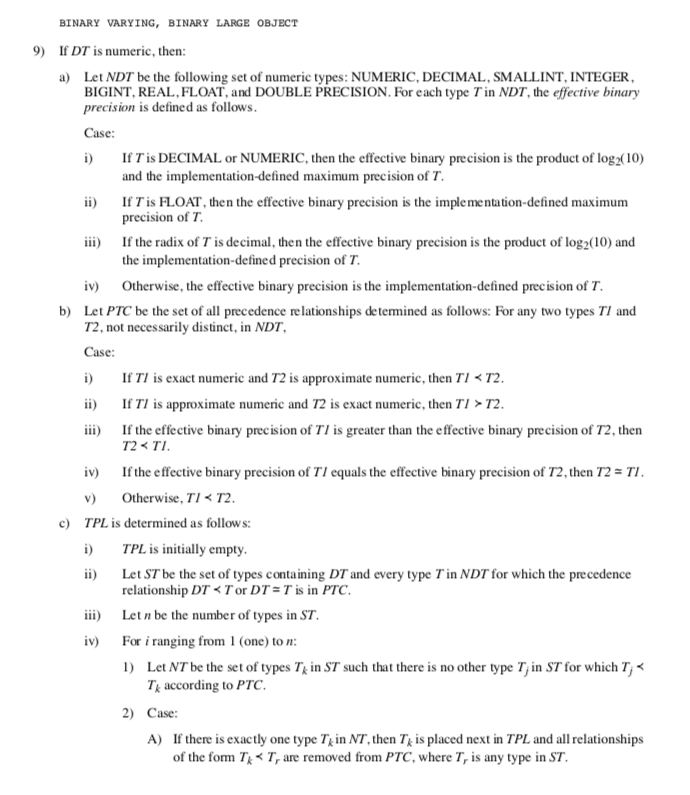

In Spark ANSI mode, the type coercion rules are based on the type precedence lists of the input data types.

As per the section "Type precedence list determination" of "ISO/IEC 9075-2:2011

Information technology — Database languages - SQL — Part 2: Foundation (SQL/Foundation)", the type precedence lists of primitive data types are as following:

As for complex data types, Spark will determine the precedent list recursively based on their sub-types.

With the definition of type precedent list, the general type coercion rules are as following:

Note: this new type coercion system will allow implicit converting String type literals as other primitive types, in case of breaking too many existing Spark SQL queries. This is a special rule and it is not from the ANSI SQL standard.

Why are the changes needed?

The current type coercion rules are complex. Also, they are very hard to describe and understand. For details please refer the attached documentation "Default Type coercion rules of Spark"

Default Type coercion rules of Spark.pdf

This PR is to create a new and strict type coercion system under ANSI mode. The rules are simple and clean, so that users can follow them easily

Does this PR introduce any user-facing change?

Yes, new implicit cast syntax rules in ANSI mode. All the details are in the first section of this description.

How was this patch tested?

Unit tests