
Closed


Description

Describe the bug

I cannot start a `FrameworkProcessor` (e.g. `XGBoostProcessor`) Processing Job that uses an S3 bucket encrypted with a custom KMS key.

I think the problem stems from this method here: it does not inherit the provided KMS key.
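For illustration, "inheriting" the key would mean that every object uploaded on the job's behalf carries the SSE-KMS request parameters that the bucket policy checks. The following is a minimal sketch, not the SDK's code. `sse_kms_extra_args` and `upload_with_kms` are hypothetical helpers, and `s3_client` is assumed to be a boto3 S3 client, whose `upload_file` accepts `ExtraArgs` containing `ServerSideEncryption` and `SSEKMSKeyId`.

```python
from typing import Any, Dict, Optional


def sse_kms_extra_args(kms_key: Optional[str]) -> Dict[str, str]:
    """Build upload ExtraArgs that ask S3 to encrypt with one specific KMS key (hypothetical helper)."""
    if not kms_key:
        # No key given: no SSE parameters are sent, so S3 applies the bucket's default encryption.
        return {}
    return {"ServerSideEncryption": "aws:kms", "SSEKMSKeyId": kms_key}


def upload_with_kms(
    s3_client: Any, local_path: str, bucket: str, key: str, kms_key: Optional[str]
) -> None:
    """Upload a file, passing the KMS key through (assumes a boto3 S3 client)."""
    s3_client.upload_file(local_path, bucket, key, ExtraArgs=sse_kms_extra_args(kms_key))
```

Under a policy like the one below, an upload that drops the key ends up with `ExtraArgs={}`, and that is the request that gets rejected.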

To reproduce

- Create a KMS key

- Add an S3 bucket policy that only allows the `s3:PutObject` action with the above KMS key:

```json
{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "RestrictToDefaultOrKMS",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": "<bucket-arn>/*",
            "Condition": {
                "Null": {
                    "s3:x-amz-server-side-encryption": "false"
                },
                "StringNotEquals": {
                    "s3:x-amz-server-side-encryption": "aws:kms"
                }
            }
        },
        {
            "Sid": "Restrict_KMS_Key",
            "Effect": "Deny",
            "Principal": "*",
            "Action": "s3:PutObject",
            "Resource": "<bucket-arn>/*",
            "Condition": {
                "StringNotEqualsIfExists": {
                    "s3:x-amz-server-side-encryption-aws-kms-key-id": "<kms-key-arn>"
                }
            }
        }
    ]
}
```
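The effect of the two Deny statements can be modelled locally. This is a simplified sketch that assumes only these two statements apply and that the request's condition keys arrive as a plain dict. The function and constant names are hypothetical. Because `StringNotEqualsIfExists` evaluates to true when the key is absent, any `PutObject` that does not explicitly name the allowed KMS key is denied. That includes an upload that relies on the bucket's default encryption.

```python
from typing import Mapping

# Condition keys used by the bucket policy (values compared as plain, case-sensitive strings)
SSE_KEY = "s3:x-amz-server-side-encryption"
KMS_KEY_ID_KEY = "s3:x-amz-server-side-encryption-aws-kms-key-id"


def is_put_denied(context: Mapping[str, str], allowed_key_arn: str) -> bool:
    """Return True if either Deny statement matches this PutObject request context."""
    sse = context.get(SSE_KEY)
    # RestrictToDefaultOrKMS: Null=false means "header present"; combined with
    # StringNotEquals, it denies an explicit encryption type other than aws:kms.
    if sse is not None and sse != "aws:kms":
        return True
    # Restrict_KMS_Key: the IfExists operator is true when the key is missing,
    # so both "no key id" and "a different key id" are denied.
    key_id = context.get(KMS_KEY_ID_KEY)
    if key_id is None or key_id != allowed_key_arn:
        return True
    return False
```

This model ignores other policy statements, identity-based policies, and any normalisation AWS applies to key identifiers. It only shows why an upload without the KMS key fails under this policy.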

- Start a SageMaker Processing Job with `FrameworkProcessor`, based on the processing step here:

```python
import boto3
import sagemaker
from sagemaker.processing import ProcessingOutput
from sagemaker.xgboost import XGBoostProcessor

# Placeholders: replace with your own bucket, KMS key ARN, and execution role ARN
default_bucket = "<bucket-name>"
kms_key = "<kms-key-arn>"
role = "<execution-role-arn>"

region = sagemaker.Session().boto_region_name
sm_client = boto3.client("sagemaker")
boto_session = boto3.Session(region_name=region)

# Session whose default bucket is the KMS-restricted bucket
sagemaker_session = sagemaker.session.Session(
    boto_session=boto_session,
    sagemaker_client=sm_client,
    default_bucket=default_bucket,
)

processor = XGBoostProcessor(
    framework_version="1.3-1",
    py_version="py3",
    instance_type="ml.m5.xlarge",
    instance_count=1,
    sagemaker_session=sagemaker_session,
    role=role,
    output_kms_key=kms_key,  # KMS key for the job's outputs
)

processor_run_args = processor.run(
    kms_key=kms_key,  # KMS key for the uploaded user code
    outputs=[
        ProcessingOutput(
            output_name="train",
            source="/opt/ml/processing/train",
            destination=f"s3://{default_bucket}/PreprocessAbaloneDataForHPO",
        ),
        ProcessingOutput(
            output_name="validation",
            source="/opt/ml/processing/validation",
            destination=f"s3://{default_bucket}/PreprocessAbaloneDataForHPO",
        ),
        ProcessingOutput(
            output_name="test",
            source="/opt/ml/processing/test",
            destination=f"s3://{default_bucket}/PreprocessAbaloneDataForHPO",
        ),
    ],
    code="code/preprocess.py",
    arguments=["--input-data", "s3://sagemaker-sample-files/datasets/tabular/uci_abalone/abalone.csv"],
)
```

Expected behavior

The job starts and runs normally.

Screenshots or logs

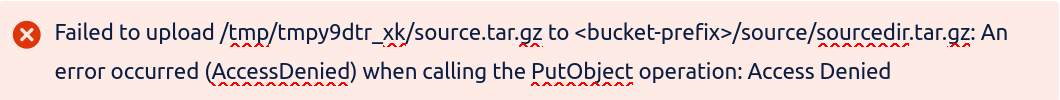

The error that I get

System information


- SageMaker Python SDK version: 2.135.1

- Framework name (e.g. PyTorch) or algorithm (e.g. KMeans): XGBoostProcessor (or any FrameworkProcessor)

- Framework version: 1.3-1

- Python version: py3

- CPU or GPU: cpu

- Custom Docker image (Y/N): No
