
Description

System Information

- Framework (e.g. TensorFlow) / Algorithm (e.g. KMeans): conda_mxnet_p36 (for evaluation)

- Python Version: 3.6

Describe the problem

I'm using the built-in image classification algorithm supplied by AWS SageMaker.

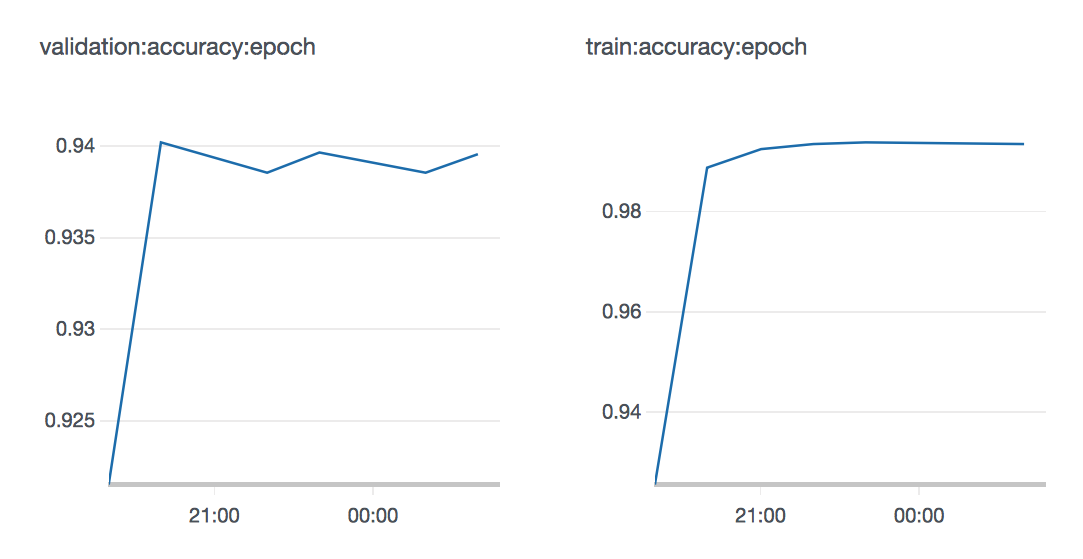

I have a binary image classification problem for which I've launched training jobs both from the console and from Jupyter notebook instances. I define training and validation data channels and supply a rec file for each. Training runs to completion. By the end of the training job, the graphs on the training job page report a high validation accuracy of over 90%.
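For reference, rec files are typically packed from a `.lst` file whose rows are tab-separated: an integer index, a label, and an image path relative to the image root. The position of each probability in the model's output corresponds to these label values, so they decide which name belongs at each position of a category list used at prediction time. The sketch below writes such a file. It assumes that images live in one subfolder per class and that labels are assigned in sorted folder-name order. `write_lst` is a hypothetical helper, not MXNet's `im2rec.py`, so compare it against the `.lst` file actually used to build the rec files.

```python
import os


def write_lst(root, lst_path, exts=(".jpg", ".jpeg", ".png")):
    """Hypothetical helper: write an MXNet-style .lst file for images stored
    as root/<class_name>/<image> and return the class-name -> label mapping."""
    # Assumption: labels follow sorted class-folder order.
    classes = sorted(
        d for d in os.listdir(root) if os.path.isdir(os.path.join(root, d))
    )
    label_of = {name: i for i, name in enumerate(classes)}

    lines = []
    for name in classes:
        for fname in sorted(os.listdir(os.path.join(root, name))):
            if fname.lower().endswith(exts):
                rel = os.path.join(name, fname)
                # Columns: integer index <TAB> label <TAB> relative path
                lines.append(f"{len(lines)}\t{label_of[name]}\t{rel}")

    with open(lst_path, "w") as f:
        f.write("\n".join(lines) + "\n")
    return label_of
```

With this ordering, the category list used when decoding predictions would need to be in sorted folder-name order for `object_categories[index]` to name the right class.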

To better understand this accuracy, I open a Jupyter notebook, reload the model using `sagemaker.estimator.Estimator.attach()`, and deploy it to an endpoint. I then bring in my folder of validation images from S3 (the same images used to create the validation channel's rec file) and add them to a list. I loop over this list, evaluate each image using the code from `Image-classification-transfer-learning-highlevel.ipynb`, and collect the results.

Here's the code for that.

```python
import json

import numpy as np

# working_model (the deployed predictor) and dataset are defined earlier in the notebook
object_categories = ['CATEGORY_1', 'CATEGORY_2']
working_model.content_type = 'application/x-image'

predictions = []

# test_files is a list of all the image paths in the validation set
for file_name in test_files:
    with open(file_name, 'rb') as f:
        payload = bytearray(f.read())

    # the result will output the probabilities for all classes
    result = json.loads(working_model.predict(payload))

    # find the class with maximum probability and record the class index
    index = int(np.argmax(result))

    file = file_name.split('/')[-1]
    predictions.append([file, dataset, result, index, object_categories[index]])
```

When I compare these predictions against the ground-truth labels, I get an accuracy of around 50%.
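A short sketch of that comparison that also counts (true, predicted) pairs. With two classes, these counts make it easy to see whether the model predicts only one class or whether the category names appear swapped relative to the training labels. `evaluate` and `true_category` (a dict from file name to true category name) are hypothetical names. The rows are assumed to have the `[file, dataset, result, index, category]` shape appended in the loop above.

```python
from collections import Counter


def evaluate(predictions, true_category):
    """Hypothetical helper: compare predicted category names with ground truth.

    predictions: rows shaped like [file, dataset, result, index, category].
    true_category: dict mapping file name -> true category name (assumed).
    """
    pairs = [(true_category[row[0]], row[4]) for row in predictions]
    correct = sum(t == p for t, p in pairs)
    accuracy = correct / len(pairs)
    # Count (true, predicted) pairs. A model that always predicts one class
    # produces counts under a single predicted category only.
    confusion = Counter(pairs)
    return accuracy, confusion
```

If most counts fall on mismatched pairs, the order of `object_categories` is a likely first thing to check.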

What could be the reason for this discrepancy? Am I misunderstanding the graphs reporting validation accuracy on the training job page? (I've attached a sample graph.)

There are a lot of moving pieces to this question, so please let me know what additional, specific information would be useful in answering it.

Thanks!