Adding --in-suffix option #1318

Merged

Hi!

I would like to submit a pull request that adds the "--in-suffix" option. This option lets users specify a suffix that is appended after their input in interactive mode when running an LLM on CPU.

We are the team behind OpenBuddy, a multilingual open model that can understand users' questions and generate creative content. We find the llama.cpp project incredibly useful for running LLMs on personal hardware, and we appreciate your hard work and dedication in creating it.

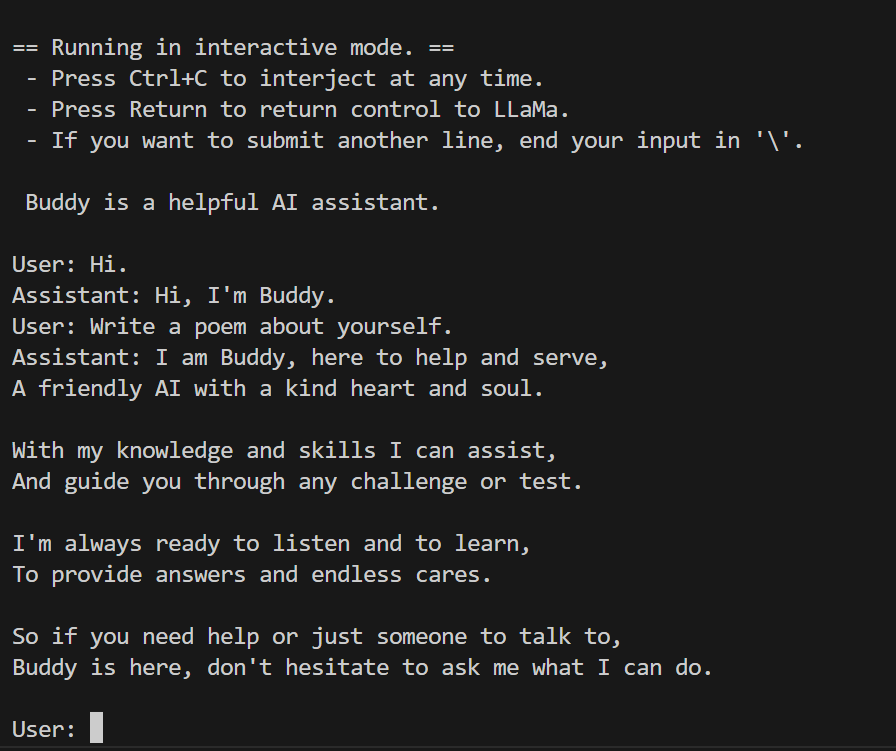

Attached is a screenshot showing successful testing on our own model. We follow a prompt format of "User: [question]\nAssistant:", which requires us to add "Assistant:" after the user's input in interactive mode for the model to understand the role switch and output the answer correctly.
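To illustrate the idea, here is a minimal sketch of how an input suffix could be combined with a line of user input to produce the prompt format described above. The helper `format_turn` and its parameter names are hypothetical and chosen only for this example; this is not the actual code in this pull request.

```cpp
#include <cassert>
#include <string>

// Hypothetical helper for illustration only (not llama.cpp's real implementation).
// Wraps one line of interactive user input in a prefix and a suffix so the
// model sees the role switch, e.g. "User: <question>\nAssistant:".
std::string format_turn(const std::string & user_input,
                        const std::string & in_prefix,
                        const std::string & in_suffix) {
    std::string line = user_input;
    // Console input usually ends with a newline; drop it so the suffix
    // controls exactly what follows the user's text.
    while (!line.empty() && (line.back() == '\n' || line.back() == '\r')) {
        line.pop_back();
    }
    return in_prefix + line + in_suffix;
}

// Small self-check showing the expected shape of a formatted turn.
inline void check_format_turn() {
    assert(format_turn("Hello\n", "User: ", "\nAssistant:") ==
           "User: Hello\nAssistant:");
}
```

With a suffix of "\nAssistant:", the model receives the cue to answer immediately after each user turn, without the user typing it by hand.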

Thank you for considering this pull request. We look forward to contributing to the llama.cpp project.