-

Notifications

You must be signed in to change notification settings - Fork 1.2k

ssh: increase jobs and parallelize batch_exists #2131

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

please, take a look at the codeclimate issues. Some of those probably can be addresses. Especially cognitive complexity. |

dvc/remote/ssh/connection.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

does it make sense to set the CRITICAL level globally? do we care about the logs from paramiko at all?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

If we don't do this, it will log something like: Secsh channel open FAILED: open failed: Administratively prohibited when trying to figure out the max possible connections

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes, so my question is - do we need to see their logs at all? Can we set it to critical globally?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I'm not sure if it would be a good idea, if something goes wrong, then we will be clueless

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

but making it silent here we are already going that path, right? if we think there is some value paramiko can provide in terms of logging, why don't we switch it on when -v is passed?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

agree, @shcheklein , I'll add support for -v

|

@shcheklein codeclimate has code complexity threshold set too low. Usual value is 10, I persornaly consider 12-15 is ok. Cap at 5, which codeclimate has as default, will lead to a spaghetti of funcs/methods not doing much each. We've discussed this with @efiop and decided to raise that limit. UPDATE I read that up and looks like they are using something they call Cognitive complexity and not Cyclomatic one, which we discussed. So we should consider it again. |

|

@Suor it's not exactly "cyclomatic complexity" you are referring to re the common threshold, it's a cognitive complexity - a different beast. I don't know where did they take 5 for it. Saying that, I agree that 5 might be a little bit too aggressive. My personal take - usually it's hard to test (no matter func tests or unit) and read methods with a CC complexity of 10 and usually there is a clear path for improvement, sometimes it even means that design is bad (like take a look at the batch_exists - it's quite complicated already with all this nesting, embedded method, etc). In this specific case: try:

smt

except IOError as exc:

if exc.errno != errno.ENOENT:

raise

smththis single piece adds probably almost all CC errors - duplication, cognitive complexity, etc ... I'm not sure if there is a nice way to deal with this in Python |

dvc/remote/ssh/connection.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

are we exhausting all SSH connections on the server this way? or just a number of channels per connections/

my questions is - how this greedy aggressive strategy affects other potential SSH clients on the server?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

good question, @shcheklein!

how this greedy aggressive strategy affects other potential SSH clients on the server?

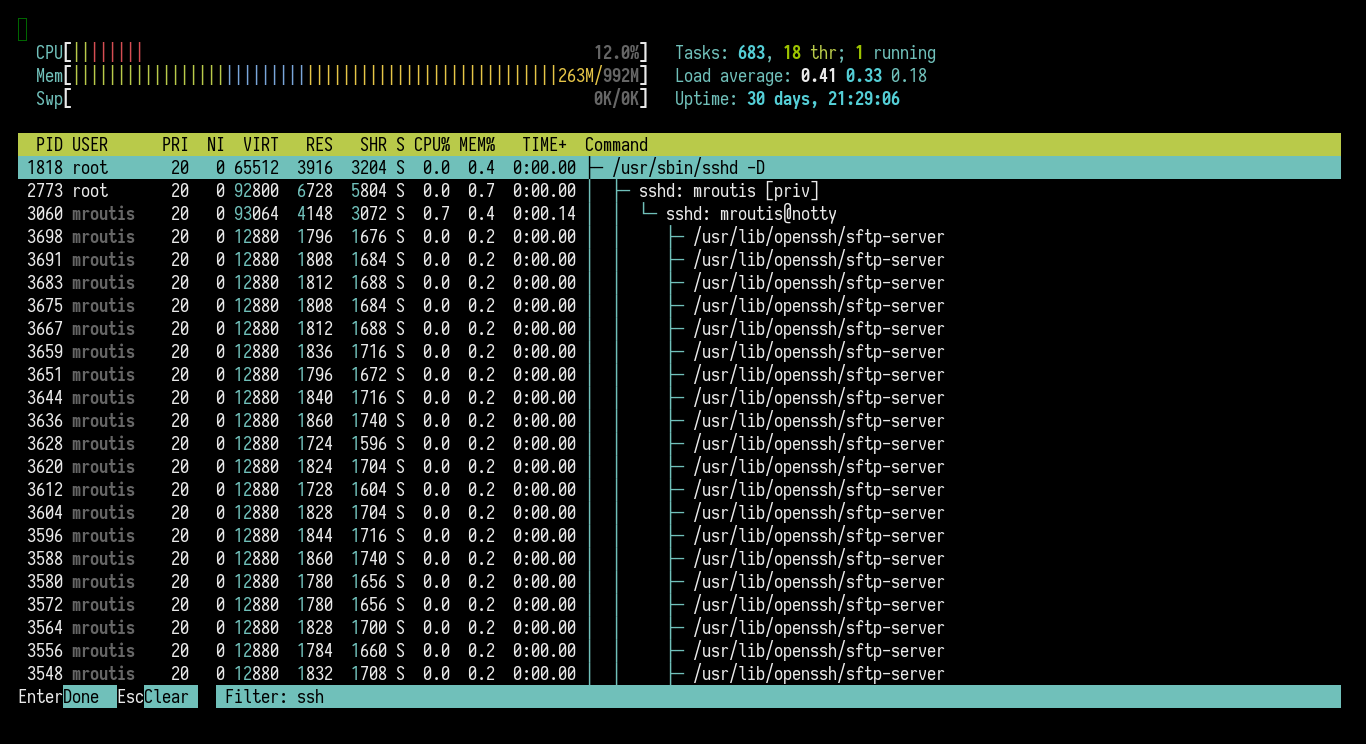

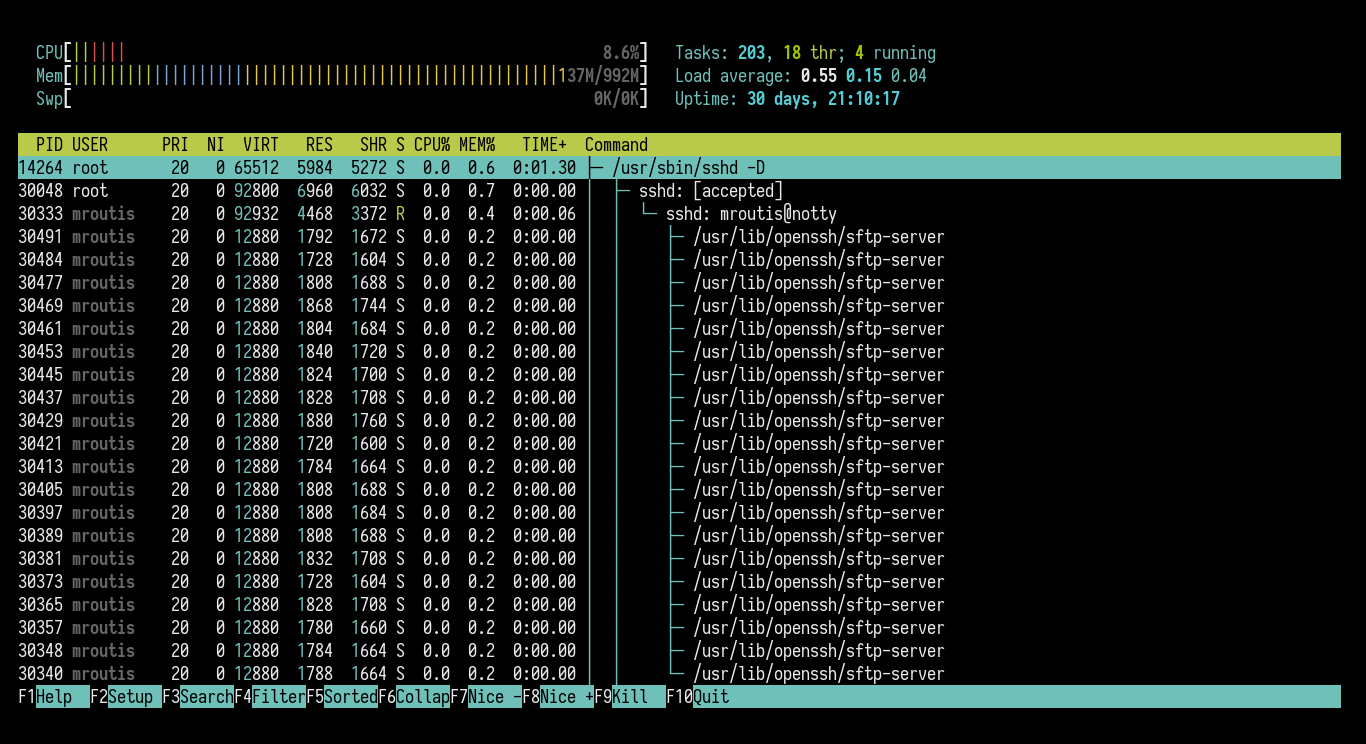

Running htop on the server while running dvc status -r -ssh showed no significant resource consumption:

And I could still open more SSH and SFTP connections without any error.

So, based on this observations, I'd say that it is not that aggressive to become a potential problem. Although, it raises the question of why paramiko is limiting us to create more than 10 jobs 🤔

dvc/remote/ssh/__init__.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

are we reusing the same single connection? what are the limits in terms of how many channels one can open per a connection?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

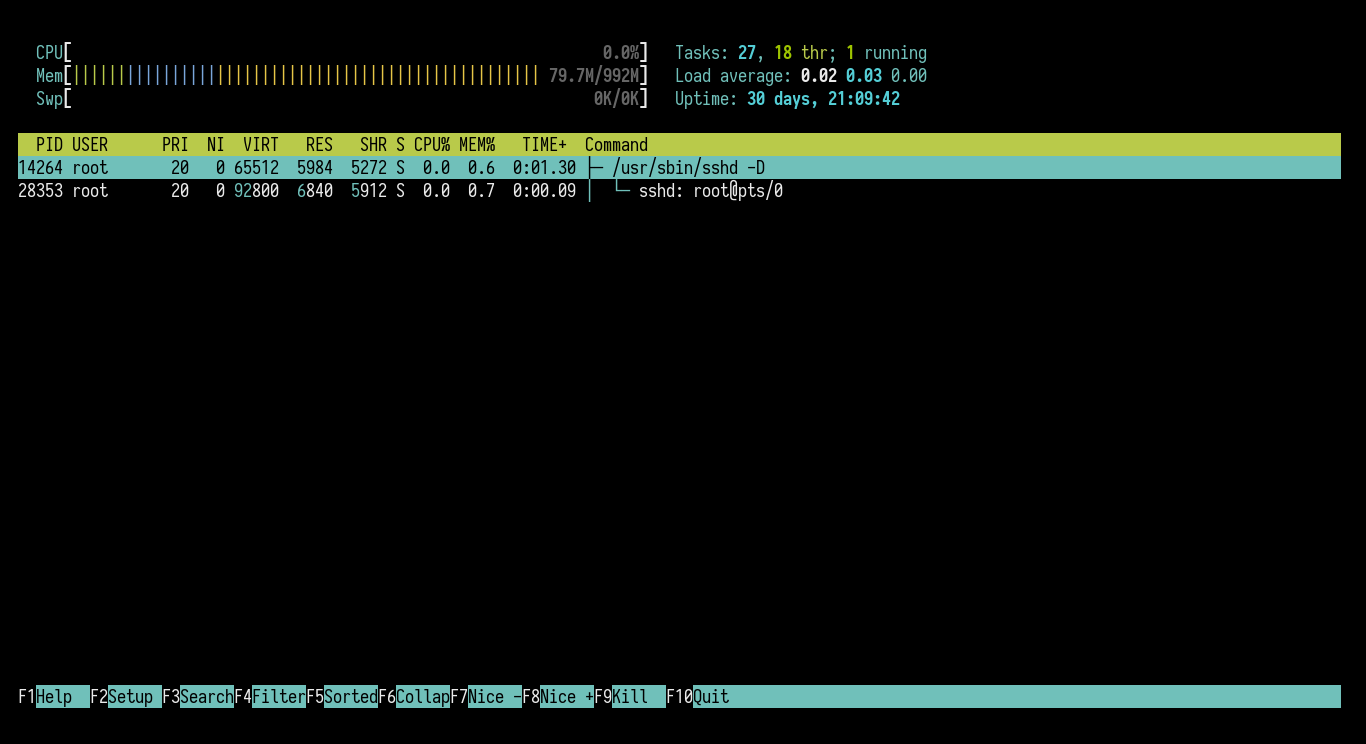

The limit of how many SFTP channels we can have per connections is set with the MaxSessions option.

It is important to note that the performance increase is not linear, for example, here, the MaxSessions is set to 80, creating a lot of tasks on the remote server:

The performance is worst than when it was set to 40.

The sweet-spot I found was setting it to 20 (running the whole operation in ~2m, compared to ~3m) when comparing 100K files (it might depend on the load of files)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I would seriously think into healing ENOENT cancer) Can we use a context manager or a higher order function to do that?

dvc/remote/ssh/__init__.py

Outdated

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why do we ignore the result of channel.stat() here? We don't in .exists().

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There's no such thing as channel.exists and we can't call self / ssh exists because SSH is not thread safe (using the same connection in different threads).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't understand the answer. The channel is an sftp instance, so why we don't examine what .stat() returns the same way we do for single exists?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't know why I answered that, @Suor, it doesn't make sense at all! my bad 😅

We are ignoring the result because we only care about knowing whether the file exists or not.

@Suor , I agree, although, this would not be a problem when we move to Python 3, since |

Tried with the context manager approach and it doesn't fit situations when we are returning an specific value or continue the execution of a loop only if the file wasn't found, it kind of obscures the implementation and is not that a hassle to deal with. I won't address this on this PR, if you think is really important, @Suor, let's create another issue

|

@Suor , I'll do it but just for |

- SSH number of jobs increased from 4 to 8. - `batch_exists` now opens all the SFTP sessions available to distribute the work between them. Number of sessions is restricted by the `MaxSessions` option configurable by the `sshd_config`. - Fix #2104 With the optimizations mentioned above, we increased the speed by 8x (using the default `MaxSessions` configuration -- 10). Shout-outs to @efiop, @shcheklein, and @dmpetrov for suggesting the optimization techniques.

Have you followed the guidelines in our

Contributing document?

Does your PR affect documented changes or does it add new functionality

that should be documented? If yes, have you created a PR for

dvc.org documenting it or at

least opened an issue for it? If so, please add a link to it.

SSH number of jobs increased from 4 to 8.

batch_existsnow opens all the SFTP sessions available todistribute the work between them. Number of sessions is restricted

by the

MaxSessionsoption configurable by thesshd_config.Fix ssh: check IOError key code #2104

With the optimizations mentioned above, we increased the speed by 8x

(using the default

MaxSessionsconfiguration -- 10).Shout-outs to @efiop, @shcheklein, and @dmpetrov for suggesting the

optimization techniques.