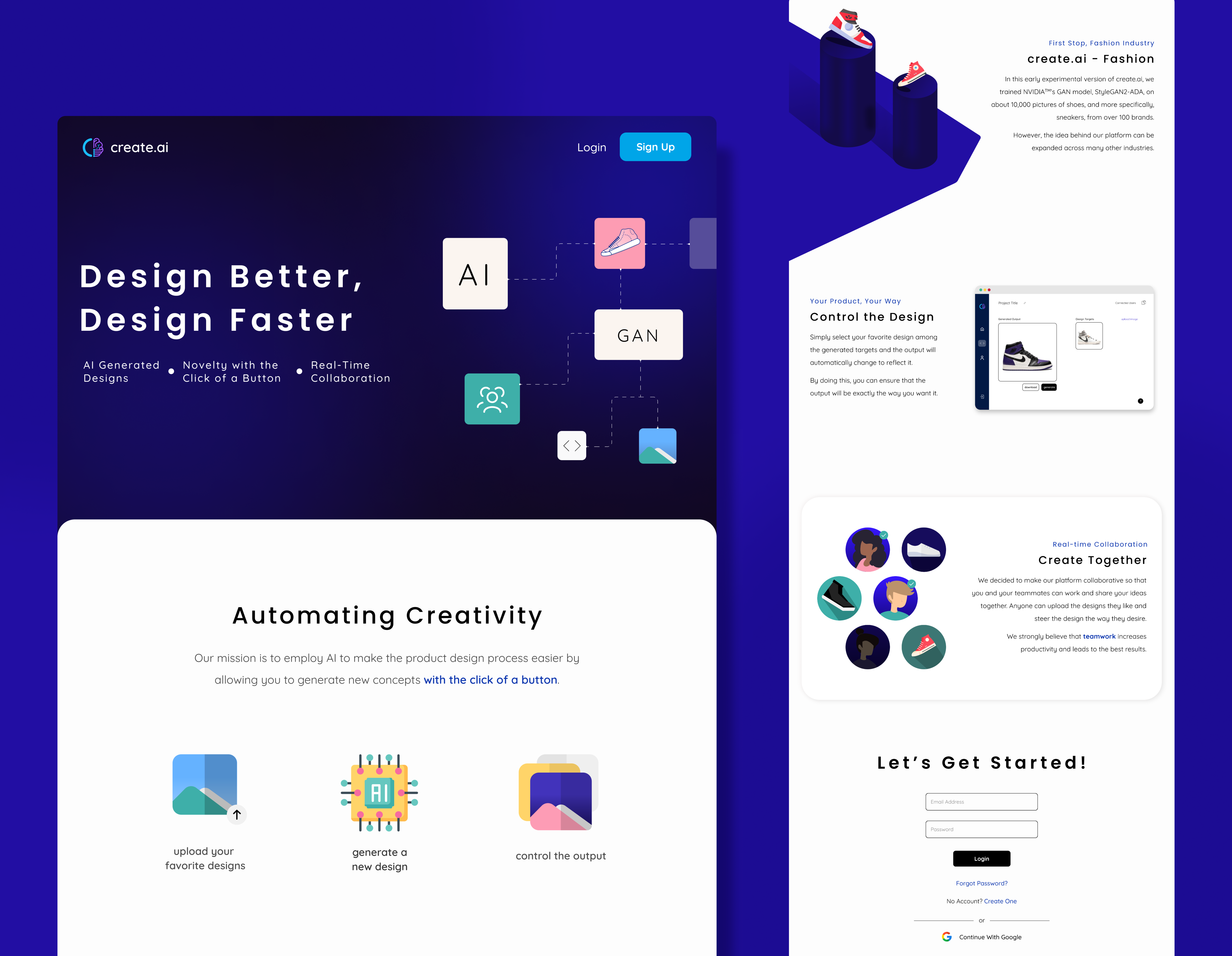

An experimental product design platform where users can collaborate on the final design of a product with the help of AI.

Overview • Built With • How It Works • Training the GAN Model • System Design

In this project, we exploit the ability of Generative Adversarial Networks (GANs) to generate novel content by integrating them into a collaborative platform where they can assist users in designing their product.

The goal is to explore how GANs, used as design tools, can change the design process, and how combining deep neural networks with human curation can give artists who lack inspiration a place to start.

Main tools and frameworks used:

- Next.js - a React framework

- Tailwind CSS - utility-first CSS framework

- HeadlessUI - unstyled, fully accessible UI components, designed to integrate beautifully with Tailwind CSS

- styled-components - a CSS-in-JS library

- Firebase - Google’s Backend-as-a-Service (BaaS)

- AWS Lambda - an event-driven, serverless computing platform provided by Amazon as a part of Amazon Web Services

- Amazon API Gateway - an AWS service for creating, publishing, maintaining, monitoring, and securing REST, HTTP, and WebSocket APIs at any scale

- Amazon S3 - an AWS service that provides cloud object storage

- Google Compute Engine - customizable compute service that lets you create and run virtual machines on Google's infrastructure

Since the GAN model cannot be trained on multiple product types at once, we decided to test the idea on sneakers. The most important part of the platform is the GAN workspace, which can be accessed once the user creates a file inside a project (or a draft file). Here, users start by generating six design targets: shoe images produced by the GAN model that are later used to control the final design. The targets can be reshuffled until the user is satisfied with them.

Once the design targets are present, clicking the generate button produces an output that is equidistant from all of the targets and therefore resembles each of them. Clicking a target then steers the final design toward that target.
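One way to picture this step is in the generator's latent space. The sketch below assumes that each target is represented by a latent vector, that the "equidistant" output is approximated by the mean of the target vectors (the mean is only strictly equidistant for symmetric arrangements), and that clicking a target moves the current vector a fixed fraction of the way toward it. These are illustrative assumptions rather than the platform's confirmed implementation; `centroid`, `steer`, and the `step` value are hypothetical.

```python
from typing import List, Sequence

Vector = List[float]


def centroid(targets: Sequence[Sequence[float]]) -> Vector:
    """Return the element-wise mean of the target latent vectors."""
    if not targets:
        raise ValueError("at least one target is required")
    dim = len(targets[0])
    if any(len(t) != dim for t in targets):
        raise ValueError("all targets must have the same dimension")
    return [sum(t[i] for t in targets) / len(targets) for i in range(dim)]


def steer(current: Sequence[float], target: Sequence[float], step: float = 0.25) -> Vector:
    """Move `current` a fraction `step` of the way toward `target` (linear interpolation)."""
    if not 0.0 <= step <= 1.0:
        raise ValueError("step must be in [0, 1]")
    return [c + step * (t - c) for c, t in zip(current, target)]


# Toy 2-D example; StyleGAN2's default latent size is 512.
targets = [[0.0, 0.0], [4.0, 0.0], [0.0, 4.0], [4.0, 4.0], [2.0, 0.0], [2.0, 4.0]]
design = centroid(targets)          # starting point between all six targets
design = steer(design, targets[1])  # the user clicks the second target
```

Repeated clicks on the same target would move the design progressively closer to it, while a click on a different target pulls it back toward the middle.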

To give users control over which designs to keep as targets and which ones to update, we've added a simple lock feature that locks a target and prevents it from changing when the targets are reshuffled.
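The lock behaviour can be sketched as a reshuffle that only replaces unlocked targets. The `Target` class, its `seed` field, and the `reshuffle` function are hypothetical names chosen for illustration; the sketch assumes each target is identified by the random seed used to generate it.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Target:
    seed: int             # hypothetical: seed used to generate this target image
    locked: bool = False  # locked targets survive a reshuffle


def reshuffle(targets: List[Target], rng: Optional[random.Random] = None) -> List[Target]:
    """Return a new list: unlocked targets get fresh seeds, locked ones are kept."""
    rng = rng or random.Random()
    return [
        t if t.locked else Target(seed=rng.randrange(2**32))
        for t in targets
    ]


targets = [Target(seed=s) for s in range(6)]
targets[2].locked = True                        # the user locks the third target
targets = reshuffle(targets, random.Random(0))  # only the other five are replaced
```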

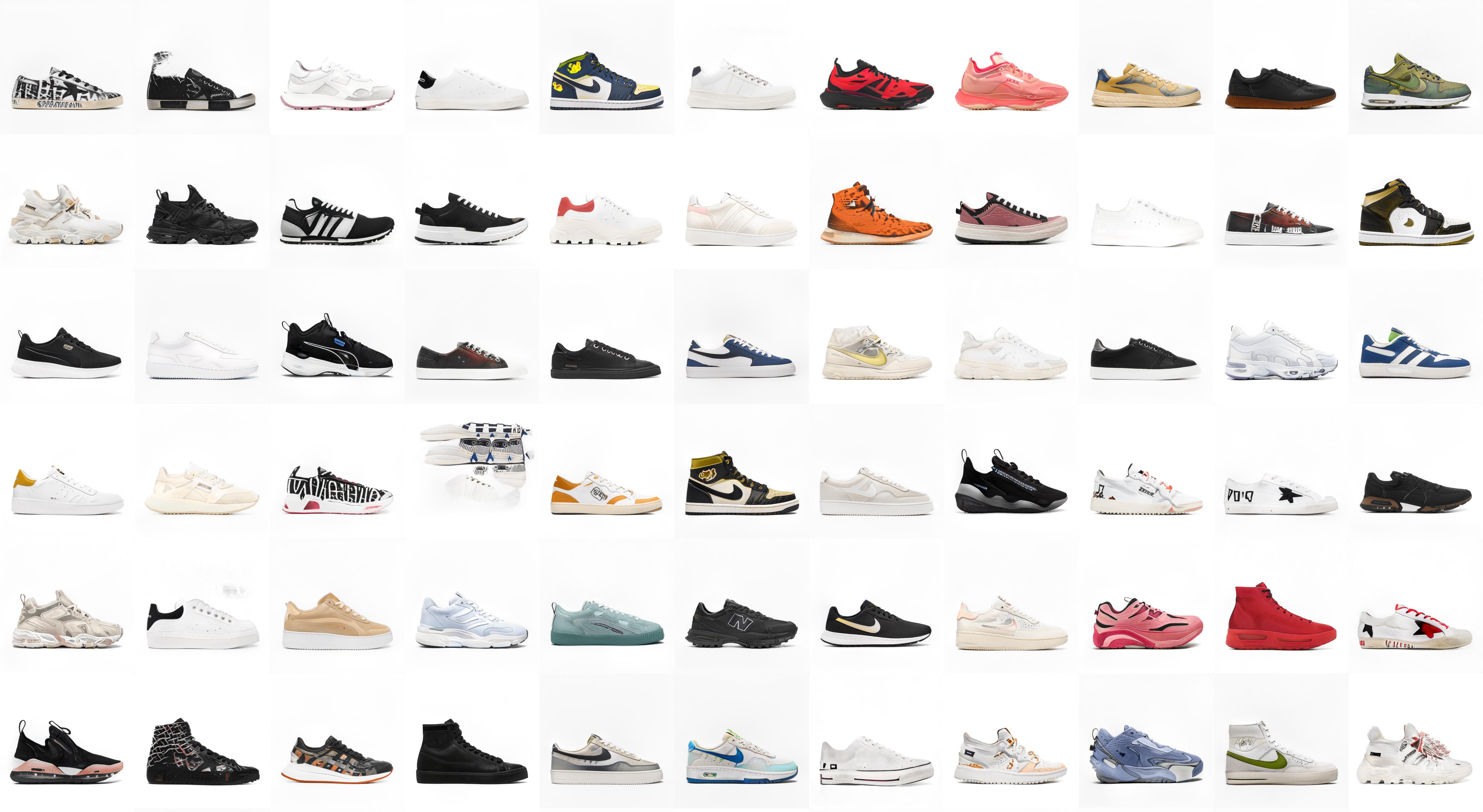

We trained the StyleGAN2-ADA model (see official repository) on 9,171 high-quality sneaker images from over 100 brands, manually collected from around the web. The images were then preprocessed using the OpenCV Python library so that all the sneakers are aligned to the center of the image and have a light background.

StyleGAN2-ADA requires at least one high-end NVIDIA GPU for training. The model was trained on a Google Compute Engine VM instance with 8 vCPUs, 30 GB of RAM, 200 GB of persistent disk storage, and one NVIDIA V100 GPU. Training was stopped after 5 days at 3,000 kimg (FID: 5.94). Below you can see the final results of training the GAN model.

After training, the model was tested on Google Colab for variety, level of detail, latent space interpolation, and so on.
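As a rough sanity check on the training figures reported above, the short calculation below converts them into more familiar quantities. It relies on the StyleGAN2-ADA convention that one kimg is a thousand real images shown to the discriminator, assumes the run lasted exactly five days, and ignores any dataset augmentation such as mirroring, so the results are approximate.

```python
dataset_size = 9_171   # training images
total_kimg = 3_000     # training length, in thousands of images shown
training_days = 5      # approximate wall-clock duration

images_shown = total_kimg * 1_000
passes_over_dataset = images_shown / dataset_size
seconds_per_kimg = training_days * 24 * 60 * 60 / total_kimg

print(f"images shown: {images_shown:,}")
print(f"passes over the dataset: {passes_over_dataset:.0f}")
print(f"seconds per kimg: {seconds_per_kimg:.0f}")
```

Under these assumptions the discriminator saw three million images, which is roughly 327 passes over the dataset at about 144 seconds per kimg.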

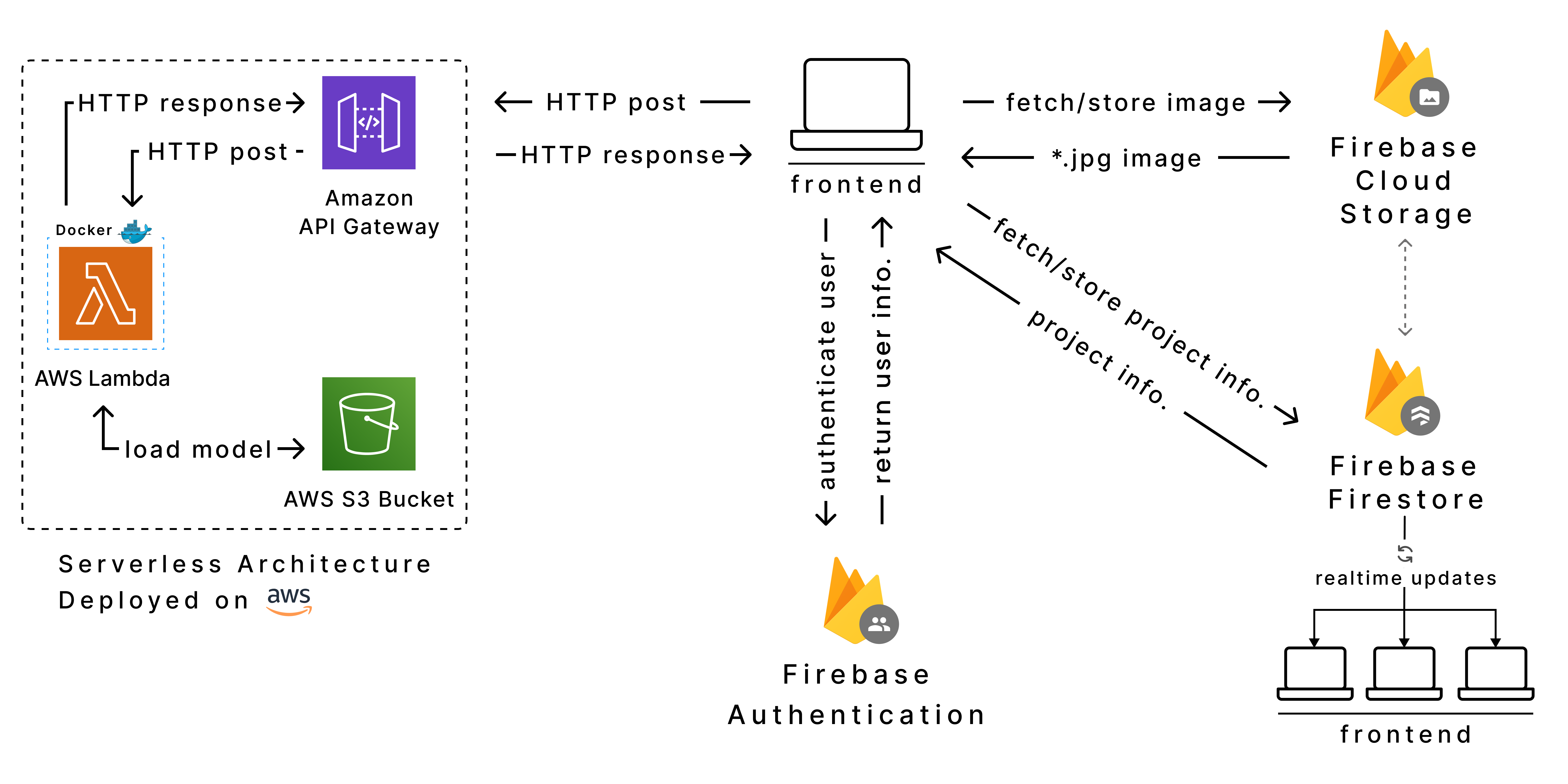

The above figure provides an abstract view of the components that make the system work. The system consists of three main parts: the frontend, the backend, and the GAN model.

The frontend is a Next.js application where authenticated users can interact with the platform and make various requests and operations.

As opposed to traditional web applications, the backend relies on Firebase, Google’s Backend-as-a-Service (BaaS). Most communication with the service happens client-side and is protected by security rules that prevent unauthenticated users from interacting with the database.

Three Firebase services are most important to the system: Firebase Authentication, Cloud Storage, and Cloud Firestore. Users are authenticated with Firebase Authentication, and Firestore is used to store and fetch user-generated data related to users, projects, teams, files, and file data. Large files such as images and thumbnails, on the other hand, are stored in Cloud Storage.

The platform supports real-time updates, so any change to a file on which a team is collaborating is visible to all team members instantly.

The GAN model is deployed on Amazon Web Services (AWS) using a serverless architecture consisting of Amazon API Gateway, AWS Lambda, and an Amazon S3 bucket. Any request to the GAN model goes through API Gateway, which triggers a Lambda function. The function loads the model from the S3 bucket and runs inference. Finally, the output of the generator is sent back to the client to be displayed and stored in Firebase’s storage services. To adopt this approach, however, we first had to adjust the generator model to run on a CPU rather than a GPU.
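The request flow described above can be sketched as a Lambda-style handler. This is a minimal illustration under stated assumptions, not the project's actual function: `make_handler`, `load_model`, `run_inference`, and the `seed` request field are hypothetical, and the model loading and inference steps are passed in as callables so the sketch runs without AWS or the real generator. It assumes API Gateway's Lambda proxy integration, where the request body arrives as a string and the response is a dictionary with `statusCode`, `headers`, and `body`.

```python
import base64
import json
from typing import Any, Callable, Dict


def make_handler(
    load_model: Callable[[], Any],
    run_inference: Callable[[Any, int], bytes],
) -> Callable[[Dict[str, Any], Any], Dict[str, Any]]:
    """Build a Lambda-style handler that loads the model once per container."""
    cache: Dict[str, Any] = {}

    def handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
        # Load on the first (cold) invocation only; warm invocations reuse it.
        if "model" not in cache:
            cache["model"] = load_model()  # e.g. fetch the weights from S3

        # With proxy integration the request body is a JSON string (or None).
        body = json.loads(event.get("body") or "{}")
        seed = int(body.get("seed", 0))  # hypothetical request field

        image_bytes = run_inference(cache["model"], seed)
        return {
            "statusCode": 200,
            "headers": {"Content-Type": "application/json"},
            "body": json.dumps(
                {"image": base64.b64encode(image_bytes).decode("ascii")}
            ),
        }

    return handler


# Stand-ins so the sketch runs locally.
load_calls = []


def fake_load() -> str:
    load_calls.append(1)
    return "generator"


def fake_inference(model: str, seed: int) -> bytes:
    return f"{model}:{seed}".encode()


handler = make_handler(fake_load, fake_inference)
first = handler({"body": json.dumps({"seed": 7})}, None)
second = handler({"body": None}, None)  # reuses the cached model
```

Caching the model between invocations matters here because loading generator weights from object storage is likely the slowest part of a cold start.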