
using pre trained VGG16 for another classification task #4465

One way to do this is to not include the fully-connected layers at the top of the network. The convolutional layers will be initialized with weights learned by training on the ImageNet dataset, but you then have to train the whole network for your 8 classes. This training will train the new fully-connected layers and fine-tune the convolutional layers. (You can also freeze the convolutional layers to keep the same feature extractors.)
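A minimal sketch of this approach, assuming TensorFlow 2.x and its bundled `tf.keras` (the thread predates it). The 256-unit hidden layer, the helper name `build_finetune_model` and its `freeze_base` flag are illustrative choices, not taken from the comment above.

```python
from tensorflow import keras


def build_finetune_model(num_classes=8, input_shape=(224, 224, 3),
                         weights="imagenet", freeze_base=True):
    """VGG16 convolutional base plus a new, trainable fully-connected head."""
    # include_top=False drops fc1, fc2 and the 1000-way ImageNet softmax.
    base = keras.applications.VGG16(weights=weights, include_top=False,
                                    input_shape=input_shape)
    # Freezing keeps the ImageNet feature extractors fixed; pass
    # freeze_base=False to fine-tune the convolutional layers as well.
    base.trainable = not freeze_base

    inputs = keras.Input(shape=input_shape)
    x = base(inputs)
    x = keras.layers.Flatten()(x)
    x = keras.layers.Dense(256, activation="relu")(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

Training then uses the usual `model.fit(x, y)` with one-hot labels of shape `(n_samples, 8)`.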

If you want to change only the last layer:
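One way to replace only the last layer, sketched under the assumption of `tf.keras`: keep the full network (`include_top=True`), take the output of the second fully-connected layer (named `fc2` in Keras's VGG16), and attach a new 8-way softmax. The helpers `replace_last_layer` and `vgg16_with_new_head` are hypothetical names.

```python
from tensorflow import keras


def replace_last_layer(model, feature_layer="fc2", num_classes=8,
                       freeze_features=True):
    """Return `model` truncated at `feature_layer`, plus a fresh softmax layer."""
    features = model.get_layer(feature_layer).output
    outputs = keras.layers.Dense(num_classes, activation="softmax",
                                 name="new_predictions")(features)
    new_model = keras.Model(model.inputs, outputs)
    if freeze_features:
        # Train only the new softmax layer; reused layers keep their weights.
        for layer in new_model.layers[:-1]:
            layer.trainable = False
    return new_model


def vgg16_with_new_head(num_classes=8, weights="imagenet"):
    # include_top=True keeps the ImageNet-trained fc1/fc2 (4096 units each).
    vgg16 = keras.applications.VGG16(weights=weights, include_top=True)
    return replace_last_layer(vgg16, "fc2", num_classes)
```

Compile and fit the returned model as usual; you can later set `trainable = True` on `fc1`/`fc2` (and recompile) to fine-tune them too.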

Thank you very much!

Thank you JGuillaumin!

For training, do you just use the `model.compile()` and `model.fit(data, labels)` commands?

@JGuillaumin In your reply, when we only want to change the last layer, did you mean `vgg16 = VGG16(weights='imagenet', include_top=True)` instead of `vgg16 = VGG16(weights=None, include_top=True)`?

@howardya, actually not.

Just a small correction: it should be `shape=(200, 200, 3)` (channels last). Otherwise, you will get this error:

@JGuillaumin Thank you very much!

Hi @JGuillaumin and everyone, I really appreciate your help, guys. Kamal

@KamalOthman I am also trying to do the same thing. Please post an update if you find a solution.

Hi @chauhan-utk Cheers

Should the input images be normalised in some specific way in order to make the best use of the features?
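For reference, `keras.applications.vgg16.preprocess_input` applies the "caffe" convention the ImageNet weights were trained with: convert RGB to BGR, then subtract the per-channel ImageNet mean, with no scaling to [0, 1]. The NumPy re-implementation below is only for illustration (prefer the library function in practice); `vgg16_preprocess` is a hypothetical name.

```python
import numpy as np

# Per-channel ImageNet means in BGR order, as used by the Caffe-trained VGG16.
IMAGENET_MEAN_BGR = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def vgg16_preprocess(images_rgb):
    """images_rgb: array of shape (..., H, W, 3) with RGB values in [0, 255]."""
    x = np.asarray(images_rgb, dtype=np.float32)
    x = x[..., ::-1]  # RGB -> BGR
    return x - IMAGENET_MEAN_BGR  # zero-center each channel; no scaling
```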

@LindaSt Is your problem solved?

@DanqingZ Yes, I solved my problem by freezing the layers in the base model.

@LindaSt @DanqingZ I am using the conv layers of VGG16 for feature extraction. I tried freezing the VGG16 layers, but accuracy on both the training and validation sets still remains at 10%. Can you please help?

@LindaSt @DanqingZ @aniket03 BTW, the ResNet50 model from `keras.applications` works well on the CIFAR-10 dataset when the images are upsampled to 197×197.

|

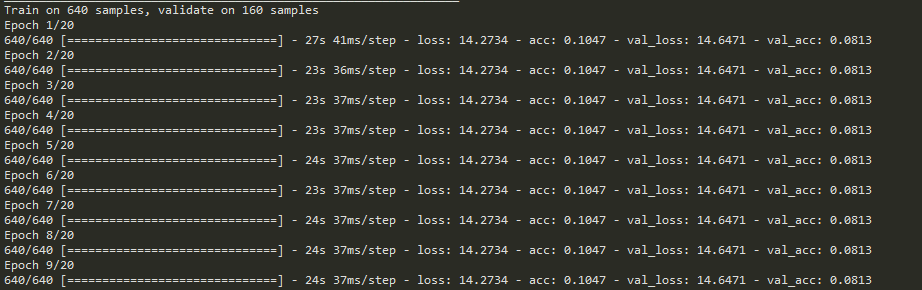

here is the output: here is the code: """

Adapted from keras example cifar10_cnn.py

Train ResNet-18 on the CIFAR10 small images dataset.

GPU run command with Theano backend (with TensorFlow, the GPU is automatically used):

THEANO_FLAGS=mode=FAST_RUN,device=gpu,floatX=float32 python cifar10.py

"""

from __future__ import print_function

from keras.datasets import cifar10

from keras.preprocessing.image import ImageDataGenerator

from keras.utils import np_utils

from keras.callbacks import ReduceLROnPlateau, CSVLogger, EarlyStopping

from scipy.misc import toimage, imresize

import numpy as np

#import resnet

from keras.applications.vgg16 import VGG16

from keras.preprocessing import image

from keras.applications.vgg16 import preprocess_input

from keras.layers import Input, Flatten, Dense

from keras.models import Model

import numpy as np

from keras.callbacks import ModelCheckpoint

from keras import backend as K

#K.set_image_dim_ordering('th')

# fix random seed for reproducibility

seed = 7

np.random.seed(seed)

lr_reducer = ReduceLROnPlateau(factor=np.sqrt(0.1), cooldown=0, patience=5, min_lr=0.5e-6)

early_stopper = EarlyStopping(min_delta=0.001, patience=20)

csv_logger = CSVLogger('./results/vgg16imagenetpretrained_upsampleimage_cifar10_data_argumentation.csv')

batch_size = 32

nb_classes = 10

nb_epoch = 200

data_augmentation = True

# input image dimensions

img_rows, img_cols = 197, 197

I_R = 64

# The CIFAR10 images are RGB.

img_channels = 3

# The data, shuffled and split between train and test sets:

(X_train_original, y_train), (X_test_original, y_test) = cifar10.load_data()

# Convert class vectors to binary class matrices.

Y_train = np_utils.to_categorical(y_train, nb_classes)

Y_test = np_utils.to_categorical(y_test, nb_classes)

X_train_original = X_train_original.astype('float32')

X_test_original = X_test_original.astype('float32')

# upsample it to size 64X64X3

X_train = np.zeros((X_train_original.shape[0],I_R,I_R,3))

for i in range(X_train_original.shape[0]):

X_train[i] = imresize(X_train_original[i], (I_R,I_R,3), interp='bilinear', mode=None)

X_test = np.zeros((X_test_original.shape[0],I_R,I_R,3))

for i in range(X_test_original.shape[0]):

X_test[i] = imresize(X_test_original[i], (I_R,I_R,3), interp='bilinear', mode=None)

# subtract mean and normalize

mean_image = np.mean(X_train, axis=0)

X_train -= mean_image

X_test -= mean_image

X_train /= 128.

X_test /= 128.

print(X_train.shape)

#model = resnet.ResnetBuilder.build_resnet_18((img_channels, img_rows, img_cols), nb_classes)

#model =get_vgg_pretrained_model()

#Get back the convolutional part of a VGG network trained on ImageNet

model_vgg16_conv = VGG16(input_shape=(I_R,I_R,3),weights='imagenet', include_top=False,pooling=max)

model_vgg16_conv.summary()

#print("ss")

#Create your own input format (here 3x200x200)

input = Input(shape=(I_R,I_R,3),name = 'image_input')

#print("ss2")

#Use the generated model

output_vgg16_conv = model_vgg16_conv(input)

print("ss3")

#Add the fully-connected layers

x = Flatten(name='flatten')(output_vgg16_conv)

x = Dense(512, activation='relu', name='fc1')(x)

x = Dense(128, activation='relu', name='fc2')(x)

x = Dense(10, activation='softmax', name='predictions')(x)

#Create your own model

my_model = Model(input=input, output=x)

#In the summary, weights and layers from VGG part will be hidden, but they will be fit during the training

my_model.summary()

my_model.compile(loss='categorical_crossentropy',

optimizer='adam',

metrics=['accuracy'])

# serialize model to JSON

model_json = my_model.to_json()

with open("./results/model_data_argumentation.json", "w") as json_file:

json_file.write(model_json)

print(my_model.summary())

if not data_augmentation:

print('Not using data augmentation.')

# checkpoint

# filepath="./results/weights-improvement-{epoch:02d}-{val_acc:.2f}.hdf5"

# checkpoint = ModelCheckpoint(filepath, monitor='val_acc', verbose=1, save_best_only=True, mode='max')

# callbacks_list = [checkpoint]

# Fit the model

#model.fit(X, Y, validation_split=0.33, epochs=150, batch_size=10, callbacks=callbacks_list, verbose=0)

my_model.fit(X_train, Y_train,

batch_size=batch_size,

nb_epoch=nb_epoch,

validation_data=(X_test, Y_test),

shuffle=True,

callbacks=[lr_reducer, early_stopper, csv_logger])

else:

print('Using real-time data augmentation.')

# This will do preprocessing and realtime data augmentation:

datagen = ImageDataGenerator(

featurewise_center=False, # set input mean to 0 over the dataset

samplewise_center=False, # set each sample mean to 0

featurewise_std_normalization=False, # divide inputs by std of the dataset

samplewise_std_normalization=False, # divide each input by its std

zca_whitening=False, # apply ZCA whitening

rotation_range=0, # randomly rotate images in the range (degrees, 0 to 180)

width_shift_range=0.1, # randomly shift images horizontally (fraction of total width)

height_shift_range=0.1, # randomly shift images vertically (fraction of total height)

horizontal_flip=True, # randomly flip images

vertical_flip=False) # randomly flip images

# Compute quantities required for featurewise normalization

# (std, mean, and principal components if ZCA whitening is applied).

datagen.fit(X_train)

# Fit the model on the batches generated by datagen.flow().

my_model.fit_generator(datagen.flow(X_train, Y_train, batch_size=batch_size),

steps_per_epoch=X_train.shape[0] // batch_size,

validation_data=(X_test, Y_test),

epochs=nb_epoch, verbose=1, max_q_size=100,

callbacks=[lr_reducer, early_stopper, csv_logger])

my_model.save_weights("./results/vgg16_pretrained_upsample_model_data_argumentation.h5")

print("Saved model to disk")

How do you get all the known classes of the pretrained VGG16 model? Thanks

Hi everyone,

This is the output I am seeing:

Model summary:

This is the code I am using:

Hi, your final layers are very big!

I think it does not train because you use

Hi Julien,

Because softmax is not an element-wise activation function. Here is the formula for a vector $x$ of dimension $K$:

$$\operatorname{softmax}(x)_j = \frac{e^{x_j}}{\sum_{k=1}^{K} e^{x_k}}, \qquad j = 1, \dots, K.$$

When $K = 1$ (your case), this is $e^{x_1} / e^{x_1} = 1$ whatever your network outputs. That's why your loss is constant!
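A quick NumPy check of the point above (`softmax` and `sigmoid` here are small hypothetical helpers, not Keras functions): with K = 1 the output is always 1. For a single-output binary classifier, the usual alternatives are one `sigmoid` unit with `binary_crossentropy`, or two `softmax` units with `categorical_crossentropy`.

```python
import numpy as np


def softmax(x):
    """Softmax over the last axis of x (shifted by the max for stability)."""
    z = np.exp(x - np.max(x, axis=-1, keepdims=True))
    return z / np.sum(z, axis=-1, keepdims=True)


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


# K = 1: every logit maps to exactly 1.0, so the loss cannot change.
single_unit = softmax(np.array([[-3.0], [0.0], [42.0]]))

# K = 2: softmax over logits (0, z) gives sigmoid(z) for the second class,
# so a two-unit softmax and a single sigmoid unit carry the same information.
two_units = softmax(np.array([[0.0, 2.0]]))
one_sigmoid = sigmoid(np.array([2.0]))
```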

Awesome, thank you for this! 👍

Sorry for the bump, but would retraining those layers, as described here, improve the results for my domain-specific feature comparison (i.e. finding similar images)? I compare images by the cosine distance between their features: the 4096-element activations of the last fully-connected layer (fc2) of VGG16. Most of the images I compare are related to each other, but I suspect I could get better results by training on my own large dataset (250,000 images that can easily be divided into 6 categories), i.e.

@JGuillaumin How do I train a model whose input images have 5 channels, using a pretrained VGG16?

The key lies in the `by_name` parameter of the Keras `load_weights` API. If `by_name` is True, weights are loaded into layers only if they share the same name. This is useful for fine-tuning or transfer-learning models where some of the layers have changed. This is my model with 4 channels; you can do something like this.
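A related but different technique (not the `by_name` trick described above): build the multi-channel network with `weights=None`, copy each pretrained layer's weights across, and give the first convolution a kernel widened from 3 to N input channels. The NumPy part of that is sketched below. Initializing the extra channels with the mean of the RGB filters is one common heuristic, assumed here rather than taken from this thread; `extend_input_channels` is a hypothetical helper.

```python
import numpy as np


def extend_input_channels(kernel, n_channels):
    """Widen a conv kernel from (kh, kw, c_in, filters) to (kh, kw, n_channels, filters).

    The original input channels keep their pretrained weights; each extra
    channel is initialized with the mean over the original channels.
    """
    kh, kw, c_in, filters = kernel.shape
    if n_channels < c_in:
        raise ValueError("n_channels must be >= the kernel's input channels")
    mean = kernel.mean(axis=2, keepdims=True)  # shape (kh, kw, 1, filters)
    extra = np.repeat(mean, n_channels - c_in, axis=2)
    return np.concatenate([kernel, extra], axis=2)
```

In Keras, the pretrained kernel and bias come from `vgg.get_layer('block1_conv1').get_weights()`, and the widened pair can be written into the 5-channel model with `set_weights([new_kernel, bias])`; the other layers can be copied unchanged because their shapes do not depend on the number of input channels.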

Hi @xuzhengxiao, how are you? I am new to neural networks and Python, and since I'm studying alone, I have some doubts. Your idea seems very interesting, but I did not understand some of the commands. Why did you use `base_model_i4.layers[2].name = 'new_conv1/conv'`? What does `'new_conv1/conv'` mean? How did you know it was `layers[2]` whose name should be replaced? In the command `f['conv1']['conv']['conv1']['conv']['kernel:0'].value`, how did you discover this conv/conv1 sequence? And what does `['kernel:0']` mean? Thank you for your attention.

@gledsonmelotti

2. Which layer should be changed depends on your needs and on the pretrained model. In my case, I want to replace the input layer with a 4-channel one. Use `model.summary()` to check the pretrained model's structure (see this screenshot: https://user-images.githubusercontent.com/9840937/50503586-e84f4680-0aa2-11e9-8648-a54cce1406e2.png). In the pretrained model, the input layer has 3 channels, so the first conv layer has 3 input channels, which doesn't meet my custom need (4 input channels). So `model.layers[2]` should be changed.
3. For `f['conv1']['conv']['conv1']['conv']['kernel:0'].value`, you actually need to inspect the h5 file to find the weights you need.
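To see which paths exist in an `.h5` weights file (point 3 above), you can walk it with `h5py`. A minimal sketch; `list_datasets` is a hypothetical helper. Note that `Dataset.value` was removed in h5py 3.0, so with current h5py an array is read with `dataset[()]` instead.

```python
import h5py


def list_datasets(path):
    """Return {dataset_path: shape} for every dataset in an HDF5 file."""
    found = {}

    def visit(name, obj):
        # visititems calls this for every group and dataset below the root.
        if isinstance(obj, h5py.Dataset):
            found[name] = obj.shape

    with h5py.File(path, "r") as f:
        f.visititems(visit)
    return found
```

For example, `list_datasets('weights.h5')` (a hypothetical file name) prints paths such as `conv1/conv/conv1/conv/kernel:0`, and that array can then be read with `f['conv1/conv/conv1/conv/kernel:0'][()]`.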

@nabsabraham #Use the generated model #Add the fully-connected layers

Hi, I am also trying to create my model with a pretrained VGG16 model, but I am getting the following error: The code files are attached for reference.

If my input is in "channels_first" format, what changes are needed?
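One option, sketched in NumPy: transpose channels-first data `(samples, channels, height, width)` to the channels-last layout `(samples, height, width, channels)` that `keras.applications.VGG16` uses by default, and declare the input as `shape=(height, width, 3)`. The helper names are hypothetical.

```python
import numpy as np


def to_channels_last(batch):
    """(N, C, H, W) -> (N, H, W, C)."""
    return np.transpose(batch, (0, 2, 3, 1))


def to_channels_first(batch):
    """(N, H, W, C) -> (N, C, H, W), the inverse of to_channels_last."""
    return np.transpose(batch, (0, 3, 1, 2))
```

Transposing the data once keeps the model definition in Keras's default format, which is usually simpler than switching the global image data format.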

Thank you very much, @xuzhengxiao. I really liked your explanation.

Worked very well. I used the same model as @xuzhengxiao and had the same need to add a 4th channel. If anyone tries this, remember to set the layer name back so that you can save/load the model afterwards without any problems.

@DanqingZ please help me out.

I had the same issue with VGG16, and it can be solved by using BatchNormalization layers between the dense layers.
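A sketch of that suggestion, assuming `tf.keras` and a 10-class problem such as CIFAR-10: BatchNormalization between the dense layers of the new head, on top of a frozen VGG16 convolutional base. The layer sizes mirror the script earlier in the thread, and `build_bn_head_model` is a hypothetical name.

```python
from tensorflow import keras


def build_bn_head_model(num_classes=10, input_shape=(64, 64, 3), weights="imagenet"):
    base = keras.applications.VGG16(weights=weights, include_top=False,
                                    input_shape=input_shape, pooling="max")
    base.trainable = False  # keep the pretrained convolutional features fixed

    inputs = keras.Input(shape=input_shape)
    x = base(inputs)
    # Dense -> BatchNormalization -> ReLU for each hidden layer.
    for units in (512, 128):
        x = keras.layers.Dense(units)(x)
        x = keras.layers.BatchNormalization()(x)
        x = keras.layers.Activation("relu")(x)
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```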

How can I use the new `keras.applications.VGG16` class to start my training with the weights in the H5 file, but for a new task with only 8 classes?

I couldn't figure out how to pop the softmax layer and replace it with one that has only 8 perceptrons.
