-

Notifications

You must be signed in to change notification settings - Fork 50

Description

This is an attempt to spot all geometry issues in VIPS resizing at once.

Pillow 7.0 has been released today (no SIMD version yet) and I've implemented a new resizing method called reduce(). It allows very fast reducing an image by integer times in both dimensions and could be used as a first step before true-convolution resampling. I know VIPS also works the same way. So, I borrowed this idea and come to share some ideas, test cases, and bugs in VIPS resizing. I hope this will be useful.

At first, let's learn the tools we have.

In Pillow

im.reduce()— fast image shrinking by integer times. Each result pixel is just an average value of particular source pixels.im.resize()— convolution-based resampling with adaptive kernel size.im.resize(reducing_gap=2.0)— 2-step resizing where the first step isim.reduce()and second step is convolution-based resampling. The intermediate size is not less than two times bigger than the requested size.

In VIPS

im.shrink()— fast image shrinking by integer times and unknown algorithm for fractional scales.im.reduce()— convolution-based resampling with adaptive kernel size. The documentation says "This will not work well for shrink factors greater than three", it is not clear for me why.im.resize()— 2-step resizing where the first step isim.shrink()and second step is convolution-based resampling withim.reduce(). The documentation says "How much is done by vips_shrink() vs. vips_reduce() varies with the kernel setting" but I pretty sure for this is equivalent of Pillow'sreducing_gap=2.0at least for current cases.

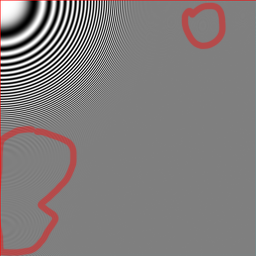

The test image

It has the following features:

- Quite large, could be used for massive downscaling.

- Has very contrast lines with variable widths and different angles.

- Average color on large scale is uniform gray. The closer implementation to true convolution resampling, the flatter will be resized image.

- Size of the image is 2049 × 2047, which allows test different rounding behavior.

- It has a pixel-width red border which allows test edges handling correctness.

The test code

import sys

import timeit

import pyvips

from PIL import Image

image = sys.argv[1]

number, repeat = 10, 3

pyvips.cache_set_max_mem(0)

im = pyvips.Image.new_from_file(image)

print(f'\nSize: {im.width}x{im.height}')

for scale in [8, 9.4, 16]:

for method, call in [

('shrink', lambda im, scale: im.shrink(scale, scale)),

('reduce', lambda im, scale: im.reduce(scale, scale, centre=True)),

('resize', lambda im, scale: im.resize(1/scale, vscale=1/scale)),

]:

fname = f'{scale}x_vips_{method}.png'

call(im, scale).write_to_file(fname)

time = min(timeit.repeat(

lambda: call(im, scale).write_to_memory(),

repeat=repeat, number=number)) / number

print(f'{fname}: {time:2.6f}s')

im = Image.open(image)

print(f'\nSize: {im.width}x{im.height}')

for scale in [8, 9.4, 16]:

for method, call in [

('reduce', lambda im, scale: im.reduce((scale, scale))),

('resize', lambda im, scale:

im.resize((round(im.width / scale), round(im.height / scale)), Image.LANCZOS)),

('resize_gap', lambda im, scale:

im.resize((round(im.width / scale), round(im.height / scale)),

Image.LANCZOS, reducing_gap=2.0)),

]:

try:

fname = f'{scale}x_pillow_{method}.png'

call(im, scale).save(fname)

time = min(timeit.repeat(

lambda: call(im, scale),

repeat=repeat, number=number)) / number

print(f'{fname}: {time:2.6f}s')

except TypeError:

# reduce doesn't work with float scales

passPillow reduce() vs VIPS shrink()

I will use only 8x versions. It is better to compare Images by opening in adjacent browsers tabs and fast switch.

The results are really close. The only noticeable difference is the last column. Indeed, we are scaling by a factor of 8 and 2049 / 8 is 256.125 pixels. So VIPS strips the last column, while Pillow expands the last column of red pixels to the whole last column of the resulting image. At first glance VIPS's behavior looks more correct since the last pixel should be 0.125 pixels width in the resulting image and this is close to 0 than to 1. But in practice, we lost the information from the last column completely for further processing. While in Pillow's version we still can take into account the impact of the last column in further processing.

The same behavior as Pillow shows libjpeg when you are using DCT scaling. If you are opening a 2049 × 2047 jpeg image with scale = 8, you get 257×256 image where the last source column is expanded on the whole column of the resulting image.

Pillow resize() vs VIPS reduce()

There are some problems with VIPS version. The whole image is shifted by about 0.5 pixels to the bottom right. As a result, top and left red lines are too bold while the bottom and right one are completely lost. This looks like a rounding error at some stage. Very likely this is the same issue as in libvips/libvips#703. The Pillow result, as the original image, has tiny rose border which looks equally on different sides.

Another Issue is some additional moire where it shouldn't be. This looks like a precision error.

Pillow resize(reducing_gap=2.0) vs VIPS resize()

Surprisingly, all of these issues don't affect resizing too much. Both images have moire due to precision loss on the first step which is ok. The locations of rings are different which is also probably is ok. But VIPS losts the right red line completely.

How this works in Pillow

The main invention in Pillow is box argument for resizing. box is an additional bunch of coordinates in the source image which are used as pixels source instead of the whole image. At first glance, this looks like crop + resize sequence, but the main advantage what box is not used for size calculation, it only used for resizing coefficient calculations and this is why coordinates could be floats.

So, what happens when we need to resize 2049×2047 image to 256×256 size with reducing_gap=2?

- We need to calculate how much we can reduce the image. Since we have

reducing_gap, it will be 2049 / 256 / 2 = 4.001953125 times for width and 2047 / 256 * 2 = 3.998046875 times for height. The real reduction will be in (4, 3) times (4 for width, 3 for height). - As a result, we have a reduced image with size 2049 / 4 = 512.25 by 2047 / 3 = 682.333 which both rounded up to 513 by 683 pixels image. But also we have the box! It is coordinates of the source image in the reduced image coordinate system and this box is (0, 0, 512.25, 682.333).

- All we need is to resize the reduced 513×683 pixels image to 256×256 using (0, 0, 512.25, 682.333) box.

Performance issues

Also, I see some unexpected results for the performance of resizing.

$ VIPS_CONCURRENCY=1 python ./shrink.py ../imgs/radial.rgb.png

Size: 2049x2047

8x_vips_shrink.png: 0.014493s

8x_vips_reduce.png: 0.016885s

8x_vips_resize.png: 0.028791s

9.4x_vips_shrink.png: 0.016348s

9.4x_vips_reduce.png: 0.050876s

9.4x_vips_resize.png: 0.028615s

16x_vips_shrink.png: 0.009454s

16x_vips_reduce.png: 0.065079s

16x_vips_resize.png: 0.014835s

Size: 2049x2047

8x_pillow_reduce.png: 0.002971s

8x_pillow_resize.png: 0.045327s

8x_pillow_resize_gap.png: 0.010557s

9.4x_pillow_resize.png: 0.046137s

9.4x_pillow_resize_gap.png: 0.006636s

16x_pillow_reduce.png: 0.002880s

16x_pillow_resize.png: 0.043109s

16x_pillow_resize_gap.png: 0.004624s

Shrink has comparable time fox 8x and 16x which is ok. But if you look at reduce, it performs almost 4 times faster for 8x reducing than for 16x. This shouldn't happen, I think. Despite this fact, resize itself works 2 times slower for 8x resizing which is also very strange. It works even slower than true-convolution reduce.