Evaluating language knowledge of ELL students from grades 8-12

- Exploratory data analysis (EDA) was conducted on the training data, which has 3,911 unique entries, so it is not a large dataset. Given its size, simple traditional NLP approaches such as `Bag-of-Words`, `tf-idf`, etc., could work surprisingly well. Another popular approach is to fine-tune pre-trained large language models such as `DeBERTa-V3-Base` (86M), which have learned deep human language patterns from huge training datasets and store these patterns in tens of millions or even billions of parameters.

- 7 different types of machine learning models were trained and submitted to Kaggle, with architectures ranging from simple to complex, sizes from small to large, and scores from poor to very close to the top ones (0.433+). My best score so far is 0.440395, which would rank around 1,108 of 2,654 teams.
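The scores quoted here are MCRMSE values (mean columnwise root mean squared error), where lower is better. A minimal NumPy sketch of the metric, together with the column-means baseline used by the n1v1 submission, assuming the target scores are stored as an array of shape (n_essays, n_targets); the toy numbers are made up, not competition data:

```python
import numpy as np


def mcrmse(y_true: np.ndarray, y_pred: np.ndarray) -> float:
    """Mean columnwise root mean squared error (lower is better).

    y_true, y_pred: arrays of shape (n_essays, n_targets).
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    # RMSE of each target column, then the mean over the columns
    rmse_per_column = np.sqrt(np.mean((y_true - y_pred) ** 2, axis=0))
    return float(np.mean(rmse_per_column))


def column_mean_baseline(y_train: np.ndarray, n_test: int) -> np.ndarray:
    """Predict the training mean of every target column for every test essay."""
    means = np.asarray(y_train, dtype=float).mean(axis=0)
    return np.tile(means, (n_test, 1))


# Toy scores: 4 training essays and 2 validation essays, 2 targets each
y_train = np.array([[3.0, 2.5], [3.5, 3.0], [2.5, 3.5], [4.0, 3.0]])
y_valid = np.array([[3.0, 3.0], [4.0, 2.0]])
baseline = column_mean_baseline(y_train, len(y_valid))
print(mcrmse(y_valid, baseline))
```

Any model that cannot beat this baseline (0.644 on the private leaderboard for n1v1) is a sign that something is wrong with the model or the data handling.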

- Among the 7 models,

  - 5 models utilized a scikit-learn (sklearn) pipeline, and 2 utilized a regular neural network training class. The sklearn pipeline combines

    - manually engineered features such as `unigrams count` (reflecting the English learners' vocabulary), `line breaks count` (because essays with lower scores tend to have too few or too many line breaks), `I vs. i` and `bad punctuation` (because weaker essays usually don't follow capitalization and punctuation rules), `tf-idf` (a widely used statistical method), etc.
    - a feature engineered with fastText, such as `english score`, which measures how likely an essay is to be classified as English (because essays with lower scores were written by non-native English speakers who tend to use more non-English words), etc.
    - the output of a state-of-the-art natural language model, in this case the pre-trained transformer-based DeBERTa-V3-Base model, used as a "feature"

    and feeds the combined features into relatively "traditional", simple machine learning regressors, such as `linear`, `xgboost`, `LightGBM` (lgb), and a vanilla neural network with 2 fully connected layers (nn).

  - 2 models each utilized a fine-tuned custom pre-trained `DeBERTa` model, which consists of the deberta-v3-base model, a pooling layer, and one or two fully connected layers.
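A minimal sketch of how hand-crafted text features and tf-idf can be combined with scikit-learn's `FeatureUnion` and fed to a simple regressor. The feature functions (`count_unigrams`, `count_line_breaks`, `count_lowercase_i`) are hypothetical stand-ins for the project's pipes, `Ridge` stands in for the `linear` regressor, and the essays and scores are made up:

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import Ridge
from sklearn.pipeline import FeatureUnion, Pipeline
from sklearn.preprocessing import FunctionTransformer


def count_unigrams(texts):
    # number of distinct lower-cased whitespace tokens per essay (rough vocabulary proxy)
    return np.array([[len(set(t.lower().split()))] for t in texts], dtype=float)


def count_line_breaks(texts):
    return np.array([[t.count("\n")] for t in texts], dtype=float)


def count_lowercase_i(texts):
    # standalone lowercase "i" used as a pronoun, e.g. "i think"
    return np.array([[t.split().count("i")] for t in texts], dtype=float)


features = FeatureUnion([
    ("unigrams_count", FunctionTransformer(count_unigrams)),
    ("line_breaks_count", FunctionTransformer(count_line_breaks)),
    ("i_vs_I", FunctionTransformer(count_lowercase_i)),
    ("tf-idf", TfidfVectorizer(ngram_range=(1, 2))),
])

model = Pipeline([
    ("features", features),             # dense counts + sparse tf-idf, stacked side by side
    ("regressor", Ridge(alpha=1.0)),    # Ridge handles multi-output targets natively
])

essays = [
    "i think school is good.\n\ni like it",
    "I believe that homework helps students learn.\nIt builds discipline.",
    "school good i go every day",
    "In my opinion, online classes have advantages and disadvantages.",
]
scores = np.array([[2.5, 3.0], [3.5, 3.5], [2.0, 2.5], [4.0, 4.0]])
model.fit(essays, scores)
print(model.predict(["i like online classes\nthey are good"]).shape)
```

Swapping the `regressor` step (for example, to an XGBoost or LightGBM regressor) leaves the feature side untouched, which is what makes this layout convenient for comparing regressors.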

- With such a design, different types of models can be trained, evaluated, tested, and submitted with similar APIs.

E.g.

- The scikit-learn pipeline

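```python
from sklearn.pipeline import FeatureUnion

# The individual pipes (number_of_unigrams_pipe, ..., tf_idf_pipe), the factory
# functions (make_english_score_pipe, make_deberta_pipe) and MSFTDeBertaV3Config
# are defined elsewhere in the repo.


def make_features_pipeline(fastext_model_path,
                           deberta_config: MSFTDeBertaV3Config):
    # hand-crafted, fastText and tf-idf features are always included
    pipes = [
        ("unigrams_count", number_of_unigrams_pipe),
        ("line_breaks_count", number_of_line_breaks_pipe),
        ("english_score", make_english_score_pipe(fastext_model_path)),
        ("i_vs_I", i_pipe),
        ("bad_punctuation", bad_punctuation_pipe),
        ("tf-idf", tf_idf_pipe),
    ]

    # optionally append the DeBERTa embeddings as extra features
    if deberta_config.using_deberta:
        print("using deberta embedding")
        pipes += [("deberta_embedding", make_deberta_pipe(deberta_config))]
    else:
        print("not using deberta embedding")

    # FeatureUnion applies every pipe to the same input and concatenates the outputs
    features_pipeline = FeatureUnion(pipes)
    return features_pipeline
```

- The trainer classes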

inheritance relationship:

ModelTrainer

|--> SklearnTrainer

|--> SklearnRegressorTrainer (dummy, linear, xgb, lgb)

|--> NNTrainer (nn)

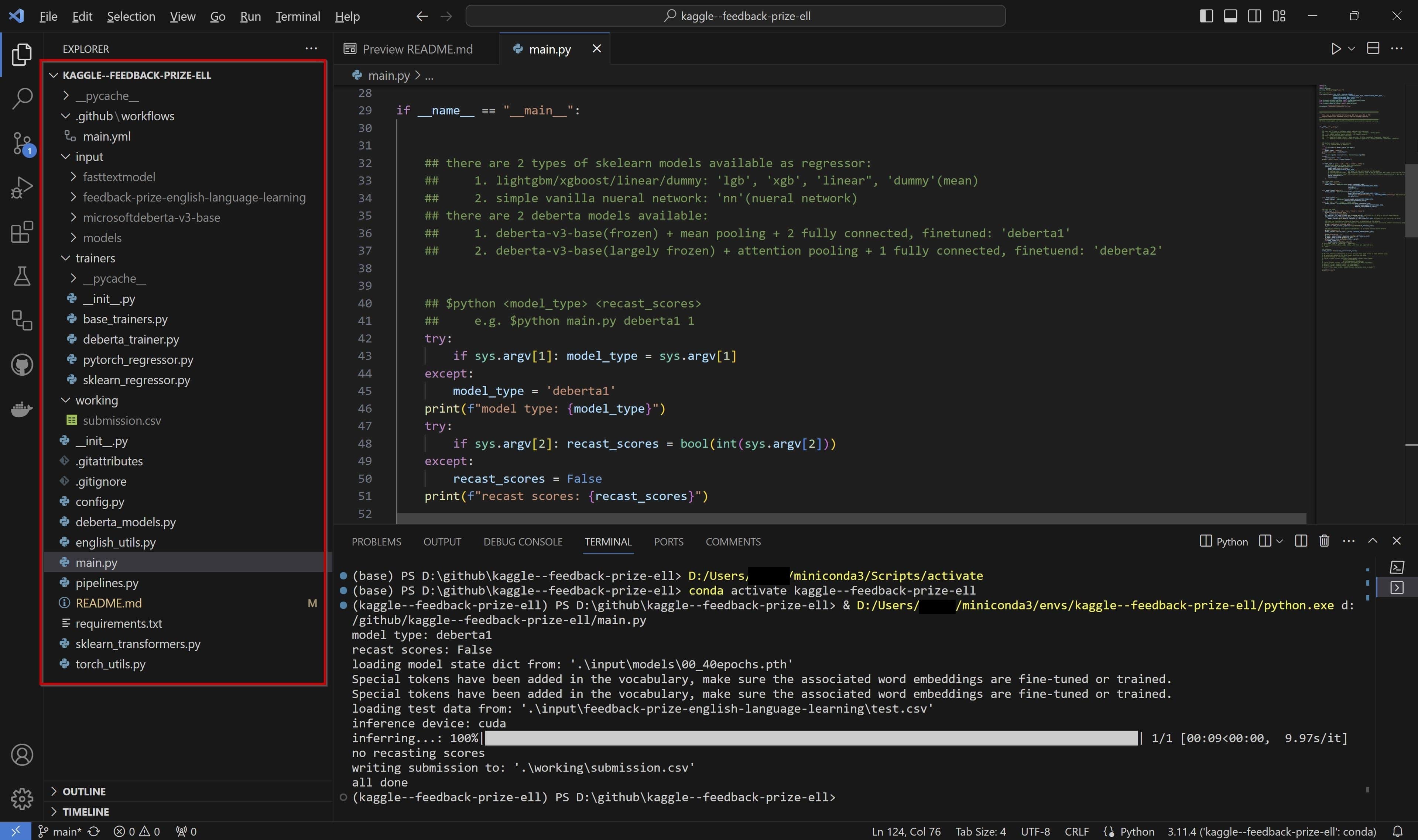

|--> DebertaTrainer (deberta1, deberta2)- A better workflow was established with GitHub Actions, which enables code firstly to be written in a local IDE, then committed to GitHub and automatically uploaded to Kaggle as a "dataset" (if the commit message title contains the string "upload to kaggle"), and finally imported to a Kaggle Notebook and executed. In the Kaggle Notebook, I just needed to type

`$ python main.py <model_type> <recast_scores> <using_deberta>` to choose which model to run. With this workflow, I could quickly iterate on the code and test out different models with different hyperparameters. (The Kaggle dataset uploading public APIs are not very user-friendly.)
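A minimal sketch, under stated assumptions, of how a `main.py` entry point could map `<model_type>` to one of the trainer classes listed earlier and expose a common interface. The `train()`/`predict()` method names, the `TRAINERS` mapping, the parsing of `<recast_scores>` and `<using_deberta>` as booleans, and the print-only method bodies are hypothetical placeholders, not the project's actual code; `recast_scores` is simply passed through because its meaning is not described here.

```python
import argparse
from abc import ABC, abstractmethod


class ModelTrainer(ABC):
    """Hypothetical common interface shared by all trainers."""

    def __init__(self, model_type: str, recast_scores: bool, using_deberta: bool):
        self.model_type = model_type
        self.recast_scores = recast_scores
        self.using_deberta = using_deberta

    @abstractmethod
    def train(self) -> None: ...

    @abstractmethod
    def predict(self) -> None: ...


class SklearnTrainer(ModelTrainer):
    def train(self) -> None:
        print(f"fitting sklearn pipeline for {self.model_type}")

    def predict(self) -> None:
        print(f"predicting with {self.model_type}")


class SklearnRegressorTrainer(SklearnTrainer):
    pass


class NNTrainer(SklearnTrainer):
    pass


class DebertaTrainer(ModelTrainer):
    def train(self) -> None:
        print(f"fine-tuning {self.model_type}")

    def predict(self) -> None:
        print(f"inferring with {self.model_type}")


# model_type -> trainer class, following the inheritance relationship above
TRAINERS = {
    "dummy": SklearnRegressorTrainer,
    "linear": SklearnRegressorTrainer,
    "xgb": SklearnRegressorTrainer,
    "lgb": SklearnRegressorTrainer,
    "nn": NNTrainer,
    "deberta1": DebertaTrainer,
    "deberta2": DebertaTrainer,
}


def str_to_bool(value: str) -> bool:
    if value.lower() in {"true", "1", "yes"}:
        return True
    if value.lower() in {"false", "0", "no"}:
        return False
    raise argparse.ArgumentTypeError(f"expected a boolean, got {value!r}")


def parse_args(argv=None) -> argparse.Namespace:
    parser = argparse.ArgumentParser(description="Train and/or submit one model type.")
    parser.add_argument("model_type", choices=sorted(TRAINERS))
    parser.add_argument("recast_scores", type=str_to_bool)
    parser.add_argument("using_deberta", type=str_to_bool)
    return parser.parse_args(argv)


def main(argv=None) -> ModelTrainer:
    args = parse_args(argv)
    trainer_cls = TRAINERS[args.model_type]
    trainer = trainer_cls(args.model_type, args.recast_scores, args.using_deberta)
    trainer.train()
    trainer.predict()
    return trainer
```

For example, `main(["lgb", "false", "true"])` builds a `SklearnRegressorTrainer`. In a real `main.py`, `main()` would typically be called under an `if __name__ == "__main__":` guard so that the arguments are read from the command line.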


- For successful Kaggle submissions, I also had to figure out how to install Python libraries and import the deberta-v3-base model without Internet access (as the competition required), and how to load the model checkpoints that were fine-tuned and saved in Google Colab or locally on my laptop (Kaggle's weekly GPU quota is 30 hours, and mine was used solely for submissions). It turned out that all these files can be uploaded to Kaggle as "datasets"; then you can click `Add Data` in a Kaggle Notebook, and these "datasets" will be added under different folders in the `input` directory.

E.g.

- upload the wheel files as a Kaggle dataset named `python`, then `Add Data` in the notebook, and install the library `sklego` from the Kaggle directory by executing `$ pip install sklego --no-index --find-links=file:///kaggle/input/python`.

- What can be improved? A lot of code refactoring can be done in the future, to make the training/evaluating/testing APIs and the training hyperparameters more unified, and the whole framework more flexible and automated. MLOps platforms such as Weights & Biases could be integrated, for better tracking and analysing of the training processes. And perhaps I could deploy one of the models somewhere on the Internet, and use it to score my own writing... 🤞

P.S.

- Kaggle leaderboard
- Project Proposal【google docs】
- Exploratory Data Analysis【notebook】
- Code in 【GitHub repo】, or as 【Kaggle dataset】
- Prototype and fine-tune DeBERTa (with Accelerate and W&B)【notebook】V1, V2
- GitHub Actions enable auto uploading repo to Kaggle【how-to doc】 【github code】【notebook for debugging】
- Kaggle submissions

| Notebook Version | Run | Private Score (MCRMSE) | Public Score (MCRMSE) | Notes |
|---|---|---|---|---|
| n1v1 - baseline | 23.0s | 0.644705 | 0.618673 | column means as baseline |
| n1v20 - dummy | 260.8s - GPU T4 x2 | 0.644891 | 0.618766 | train and infer on GPU |
| n1v21 - linear | 498.6s - GPU T4 x2 | 1.266085 | 1.254728 | train and infer on GPU |
| n1v27 - linear, no deberta embedding | 85.5s - GPU T4 x2 | 0.778257 | 0.769154 | train and infer |
| n1v25 - xgb | 467.2s - GPU T4 x2 | 0.467965 | 0.471593 | train and infer on GPU |
| n1v28 - xgb, no deberta embedding | 107.7s - GPU T4 x2 | 0.540446 | 0.531599 | train and infer |
| n1v23 - lgb | 323.2s - GPU T4 x2 | 0.458964 | 0.459379 | train and infer on GPU |
| n1v26 - lgb, no deberta embedding | 71.9s - GPU T4 x2 | 0.540557 | 0.528224 | train and infer |
| n1v6 - nn | 270.8s - GPU T4 x2 | 0.470323 | 0.466773 | train and infer on GPU |
| n1v7 - nn | 7098.2s | 0.470926 | 0.465280 | train and infer on CPU |
| n1v29 - nn, no deberta embedding | 99.8s - GPU T4 x2 | 0.527629 | 0.515268 | train and infer on GPU |
| n1v12 - deberta1 (invalid) | 80.9s - GPU T4 x2 | 0.846934 | 0.836776 | fine-tuned custom deberta-v3-base, 7 epochs, with Accelerate, infer only |
| n1v19 - deberta1 | 86.9s - GPU T4 x2 | 0.474335 | 0.478700 | fine-tuned custom deberta, 30 epochs, infer only |
| n2v1 - deberta2 | 9895.7s - GPU P100 | 0.440395 | 0.441242 | fine-tuned custom, 5 epochs, Multilabel Stratified 4-Fold, train and infer |
| n1v18 - deberta2 | 80.8s - GPU T4 x2 | 0.444191 | 0.443768 | fine-tuned custom, 5 epochs, infer only |

Note:

1. From the n1v7 Kaggle execution log, the time to train the simple one-hidden-layer neural network on CPU is 4816 - 4806 = 10 s, similar to that on GPU. This means almost all of the time was spent transforming the training and test data, because of the size of the DeBERTa model. Hence there is no need to test training on GPU and inferring on CPU, which would be as slow as running both processes on CPU.

2. From n1v12 to n1v13, training for a few more epochs gave a small improvement. However, the scores (above 0.8) are far worse than the column-mean baseline score of 0.644 (lower is better), which indicated a problem with the model or the training. It turned out that shuffling the test data caused the problem.

3. Submissions n2v1 and n1v18 used a different custom model, which has one attention pooling layer and only one fully connected layer. Several techniques were combined during training, such as gradient accumulation, layer-wise learning rate decay, Automatic Mixed Precision, Multilabel Stratified K-Fold, and the Fast Gradient Method. These techniques greatly improved the final score: starting from a pre-trained model and training for only 5 epochs (under 10,000 seconds), we could get very close to the top scores.
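Layer-wise learning rate decay, mentioned in note 3, gives layers closer to the output larger learning rates than layers near the input, so the general-purpose lower layers change less during fine-tuning. A minimal pure-Python sketch of the schedule, assuming the 12 encoder layers of deberta-v3-base, a decay factor of 0.9, and the learning rates shown; these values and the group names are illustrative assumptions, not the settings used in the submissions:

```python
from typing import Dict, Optional


def layerwise_learning_rates(
    num_layers: int,
    base_lr: float,
    decay: float,
    head_lr: Optional[float] = None,
) -> Dict[str, float]:
    """Learning rate per parameter group under layer-wise learning rate decay.

    The top encoder layer gets base_lr; each layer below it is multiplied by
    decay once more, and the embeddings get the smallest rate. The pooling
    layer and regression head ("head") use head_lr (base_lr if not given).
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay should be in (0, 1]")
    rates = {"head": base_lr if head_lr is None else head_lr}
    for layer in range(num_layers - 1, -1, -1):
        depth_from_top = num_layers - 1 - layer
        rates[f"encoder.layer.{layer}"] = base_lr * decay ** depth_from_top
    rates["embeddings"] = base_lr * decay ** num_layers
    return rates


rates = layerwise_learning_rates(num_layers=12, base_lr=2e-5, decay=0.9, head_lr=1e-3)
for name in ("head", "encoder.layer.11", "encoder.layer.0", "embeddings"):
    print(f"{name:>18}: {rates[name]:.2e}")
```

With PyTorch, each entry would typically become one optimizer parameter group, e.g. `{"params": [...], "lr": rate}`, collecting the matching parameters from `model.named_parameters()`.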

- Code repo structure explained

  - `main.py` - entry point, provides a command-line interface for the package
  - `config.py` - all the configurations
  - `sklearn_transformers.py` - generate fastText and DeBERTa features
  - `english_utils.py` - fastText functions, text processing functions
  - `torch_utils.py` - dataset classes, neural network pooling layer classes
  - `pipelines.py` - all the scikit-learn pipelines (for model types: dummy, linear, xgb, lgb, nn)
  - `deberta_models.py` - custom DeBERTa models
  - `trainers` folder - all the training classes
  - `input` and `working` folders - simulate the Kaggle folders
    - the Kaggle data files and sample submission files are stored in `input/feedback-prize-english-language-learning`
    - the Kaggle Notebook working and output directory is `working`
    - your own or someone else's datasets (which might include repos, Python library wheel files, models, etc. uploaded to Kaggle) will be linked as sub-directories under the `input` directory (by clicking the `Add Data` button)
  - `main.yml` - GitHub Actions workflow to upload the GitHub code to Kaggle

- The architecture of the BERT family models and how to train them【notebook】

- Weights & Biases MLOPS-001【notebooks】

- Scikit-lego mega model example code【notebook】

- Loading HuggingFace models【notebook】

- Scikit-learn CountVectorizer, csr_matrix, np.matrix.A【notebook】

- Connect Google Colab with a local runtime on a docker image【notebook】

- Improve your Kaggle workflow with GitHub Actions【Google Docs】

- Kaggle dataset uploading public APIs【notebook】

- Python Protocol or Abstract Base Class【notebook】

... or check this Google Drive folder

2023-09-21

- project submitted to Udacity

2023-09-19

- added another fine-tuned custom deberta-v3-base model (model_type='deberta2'), which consists of deberta-v3-base (with layer-wise learning rate decay) + attention pooling + a single fully connected layer, trained for 5 epochs on a Google Colab T4 GPU

- bug fix: there was a large discrepancy between the training score (around 0.45) and the testing score (around 0.8). I went through the `deberta_trainer.py` code part by part and finally figured out that the problem was caused by the data loader: for the testing data, `shuffle=False` should be configured.
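A minimal pure-Python illustration (no PyTorch needed) of why a shuffled test loader ruins the submission even when the model itself is fine: predictions come back in the shuffled order, but they are written next to the essay ids in the original order. The function name and toy data are hypothetical; in PyTorch the fix is passing `shuffle=False` to the test `DataLoader`.

```python
import random


def predict_in_loader_order(scores_by_id, ids, shuffle, seed=0):
    """Simulate a test loader: return predictions in the order essays are served."""
    order = list(range(len(ids)))
    if shuffle:
        random.Random(seed).shuffle(order)
    # a perfect "model": it returns the true score of whichever essay it is given
    return [scores_by_id[ids[i]] for i in order]


ids = ["essay_a", "essay_b", "essay_c", "essay_d", "essay_e", "essay_f"]
true_scores = {"essay_a": 2.0, "essay_b": 3.0, "essay_c": 3.5,
               "essay_d": 4.0, "essay_e": 4.5, "essay_f": 2.5}

for shuffle in (True, False):
    preds = predict_in_loader_order(true_scores, ids, shuffle)
    # the submission pairs predictions with ids in the ORIGINAL order
    submission = dict(zip(ids, preds))
    total_error = sum(abs(submission[i] - true_scores[i]) for i in ids)
    print(f"shuffle={shuffle}: total absolute error = {total_error}")
```

With `shuffle=False` the error is zero; with shuffling, a perfect model is scored against the wrong essays, which matches the symptom of a good training score but a test score worse than the baseline.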

2023-09-11

- added a fine-tuned deberta-v3-base model (model_type='deberta1'), which consists of deberta-v3-base (frozen) + mean pooling + 2 fully connected layers with ReLU and dropout layers in between, trained on a Google Colab T4 GPU. 7 epochs can reach a score of around 0.48. Typically for transfer learning, a single wide fully connected layer is enough, while more layers might be suboptimal; ReLU and dropout might also reduce the performance.

- train with Accelerate and Weights & Biases
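deberta1 uses mean pooling and deberta2 uses attention pooling to turn per-token embeddings into one vector per essay. A minimal NumPy sketch of both, assuming last-hidden-state arrays of shape (batch, seq_len, dim) and a 0/1 attention mask. The attention variant scores each token with a single vector `w` (a common simple formulation; the project's layer may use a different scorer, such as a small MLP), and padding tokens get zero weight in both functions:

```python
import numpy as np


def masked_mean_pooling(hidden: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Average token embeddings, ignoring padding tokens."""
    mask = mask[..., None].astype(hidden.dtype)            # (batch, seq_len, 1)
    summed = (hidden * mask).sum(axis=1)                   # (batch, dim)
    counts = np.clip(mask.sum(axis=1), 1e-9, None)         # avoid division by zero
    return summed / counts


def attention_pooling(hidden: np.ndarray, mask: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Softmax-weighted average of token embeddings, with token scores hidden @ w.

    w has shape (dim,); in a real model it would be a trainable layer.
    Assumes every row of mask has at least one real token.
    """
    scores = hidden @ w                                    # (batch, seq_len)
    scores = np.where(mask.astype(bool), scores, -np.inf)  # padding gets zero weight
    scores = scores - scores.max(axis=1, keepdims=True)    # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=1, keepdims=True)
    return (weights[..., None] * hidden).sum(axis=1)       # (batch, dim)


rng = np.random.default_rng(0)
hidden = rng.normal(size=(2, 4, 3))
mask = np.array([[1, 1, 1, 0], [1, 1, 0, 0]])
w = rng.normal(size=3)
print(masked_mean_pooling(hidden, mask).shape)   # (2, 3)
print(attention_pooling(hidden, mask, w).shape)  # (2, 3)
```

With `w` set to zeros, every real token gets the same weight, so attention pooling reduces to masked mean pooling; training `w` lets the model emphasize more informative tokens.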

2023-09-10

- minor refactoring, bug fix, all done

- initial training: using all the current features with a simple vanilla neural network (hidden dimension [64]) as the regressor yields the best result so far (MCRMSE 0.47+ at 200 epochs; the top score on the leaderboard is 0.43+). Refer to the training log.

- fastText and DeBERTa (pre-trained, not fine-tuned) are used for feature extraction; the hyperparameters of the regressor could be tuned for better results

- added `requirements.txt` (`pip freeze > requirements.txt`)

- submitted notebooks to Kaggle
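The project's `nn` regressor is trained by its own `NNTrainer`, which is not shown here. As a stand-in under that assumption, scikit-learn's `MLPRegressor` with one hidden layer of 64 units (that is, two fully connected layers) sketches the same idea; `max_iter=200` corresponds to 200 epochs for the default `adam` solver. The feature matrix and targets are synthetic, not competition data:

```python
import numpy as np
from sklearn.neural_network import MLPRegressor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(42)
X = rng.normal(size=(200, 20))            # stand-in for the engineered feature matrix
true_weights = rng.normal(size=(20, 2))
# two toy targets around 3.0 with a little noise
y = 3.0 + 0.3 * X @ true_weights + rng.normal(scale=0.1, size=(200, 2))

regressor = make_pipeline(
    StandardScaler(),                     # neural networks train better on scaled inputs
    MLPRegressor(hidden_layer_sizes=(64,), max_iter=200, random_state=0),
)
regressor.fit(X[:150], y[:150])
pred = regressor.predict(X[150:])

# MCRMSE on the held-out rows: RMSE per target, then the mean
rmse_per_target = np.sqrt(((y[150:] - pred) ** 2).mean(axis=0))
print("MCRMSE on held-out rows:", rmse_per_target.mean())
```

scikit-learn may emit a `ConvergenceWarning` if 200 epochs are not enough for the optimizer to settle; the predictions are still usable.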

2023-09-07

- forked then bug-fixed the github action (issue and pull request)

- updated the kaggle python api version in the action, from 1.5.12 to 1.5.16

- the upload workflow will only be triggered if string "upload to kaggle" is found in the commit message (main.yml)

- Kaggle Notebook, Using 🤗 Transformers for the first time | Pytorch by @BRYAN SANCHO

- Kaggle Notebook, DeBERTa-v3-base | 🤗️ Accelerate | Finetuning by @SHREYAS DANIEL GADDAM

- Kaggle Notebook, FB3 English Language Learning by @张HONGXU

- Kaggle Notebook, 0.45 score with LightGBM and DeBERTa feature by @FEATURESELECTION

- GitHub Actions repo, jaimevalero/push-kaggle-dataset

- GitHub repo, https://github.com/microsoft/DeBERTa
