Machine learning with TensorFlow (RNN) to implement an English-to-French language translator.

Translates English sentences to French using an LSTM-based RNN architecture. Model accuracy: 99.45%

A recurrent neural network (RNN) is a class of artificial neural networks where connections between nodes form a directed graph along a temporal sequence. This allows it to exhibit temporal dynamic behavior. Derived from feedforward neural networks, RNNs can use their internal state (memory) to process variable-length sequences of inputs. This makes them applicable to tasks such as unsegmented, connected handwriting recognition or speech recognition.

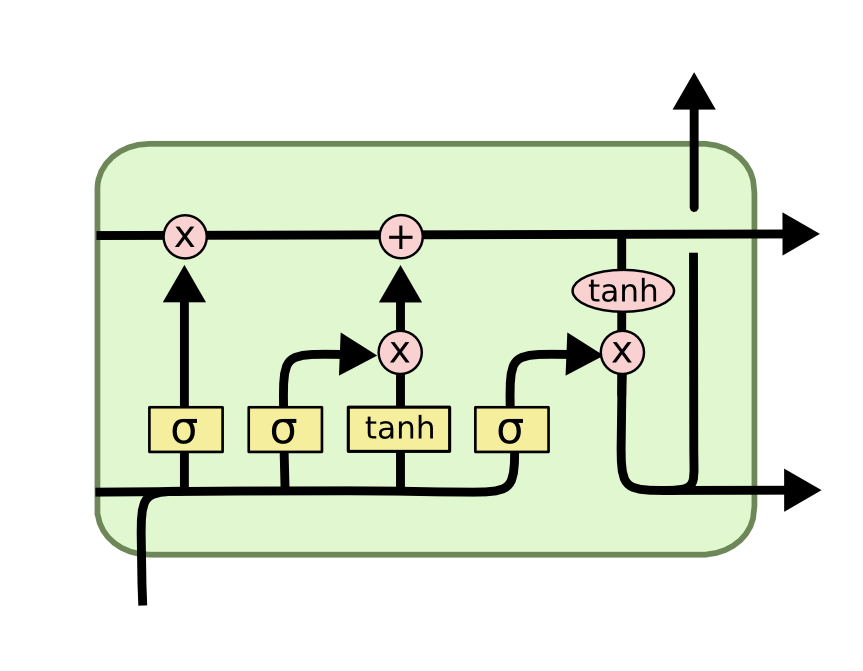
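
To make the idea of internal state concrete, here is a minimal sketch of a single-unit recurrent step in plain Python. It is not the model used in this project: the function names (`rnn_step`, `run_rnn`) and the weight values are assumptions chosen only for illustration. It shows how the same weights are reused at every time step, so sequences of any length can be processed.

```python
import math

def rnn_step(x, h, w_x, w_h, b):
    """One step of a single-unit RNN: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x + w_h * h + b)

def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence of any length through the same cell.

    The weights are illustrative constants, not trained values.
    Returns the final hidden state, which summarizes the whole sequence.
    """
    h = 0.0  # the internal state (memory) starts empty
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
    return h

# The same cell handles inputs of different lengths.
short_state = run_rnn([1.0, 0.0])
long_state = run_rnn([1.0, 0.0, 0.0, 1.0, -1.0])
print(short_state, long_state)
```

Because the hidden state is carried forward, the order of the inputs matters: `run_rnn([1.0, 0.0])` and `run_rnn([0.0, 1.0])` give different results.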

Long short-term memory (LSTM) is an artificial recurrent neural network architecture used in the field of deep learning. Unlike standard feedforward neural networks, LSTM has feedback connections. It can process not only single data points but also entire sequences of data.
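
As a rough illustration of those feedback connections, the sketch below implements one scalar LSTM cell in plain Python using the standard gate equations (forget, input, and output gates plus a candidate value). It is an assumption-laden toy, not this project's TensorFlow code: the names `lstm_step`, `run_lstm`, and `PARAMS`, and the constant weights of 0.5, are hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x, h_prev, c_prev, p):
    """One step of a single-unit LSTM cell with scalar weights in dict p."""
    f = sigmoid(p["wf"] * x + p["uf"] * h_prev + p["bf"])    # forget gate
    i = sigmoid(p["wi"] * x + p["ui"] * h_prev + p["bi"])    # input gate
    o = sigmoid(p["wo"] * x + p["uo"] * h_prev + p["bo"])    # output gate
    g = math.tanh(p["wg"] * x + p["ug"] * h_prev + p["bg"])  # candidate value
    c = f * c_prev + i * g   # cell state: keep part of the old, add part of the new
    h = o * math.tanh(c)     # hidden state, fed back in at the next step
    return h, c

def run_lstm(sequence, params):
    """Process a single value or a whole sequence with the same cell."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h, c

# Illustrative constant weights (hypothetical, not trained).
PARAMS = {name: 0.5 for name in (
    "wf", "uf", "bf", "wi", "ui", "bi", "wo", "uo", "bo", "wg", "ug", "bg")}

h, c = run_lstm([1.0, -1.0, 0.5], PARAMS)
print(h, c)
```

The separate cell state `c` is what distinguishes the LSTM from the plain recurrent step: the forget gate decides how much of it survives each time step, which helps the cell retain information over longer sequences.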

- Working on improving the quality of the dataset.

- Working on a better architecture.

- Training was done on a smaller dataset because of usage limits on Google Colab.