
Description

🐛 Bug

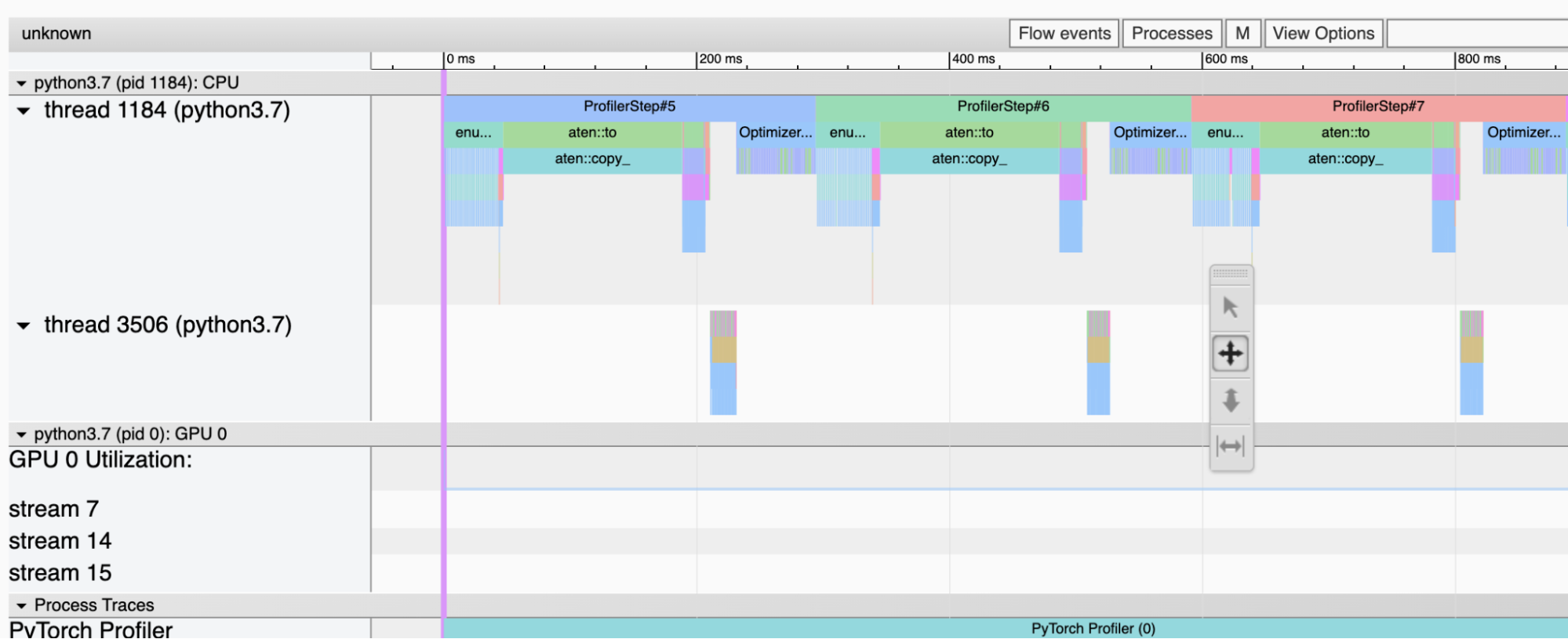

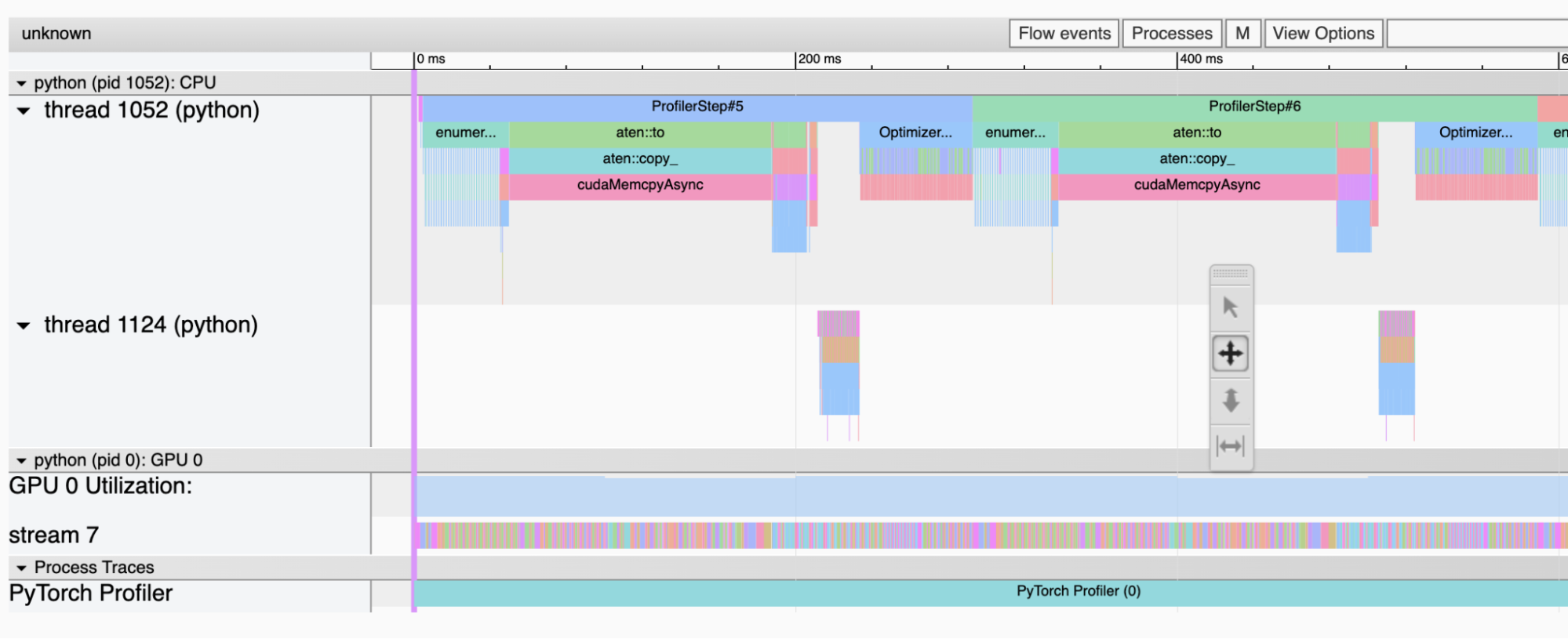

The PyTorch profiler doesn't produce a GPU trace when I build PyTorch with Bazel.

To Reproduce

Steps to reproduce the behavior:

- Add Kineto to the PyTorch Bazel build.

  In `pytorch/BUILD.bazel`, add the following to `COMMON_COPTS`:

  ```python
  "-DUSE_KINETO",
  "-DEDGE_PROFILER_USE_KINETO",
  ```

  and add `"@libkineto//:libkineto",` to the `deps` of the `caffe2` and `torch` `cc_library` targets.

  Build Kineto with the following `libkineto.BUILD`:

  ```python
  cc_library(
      name = "libkineto",
      srcs = glob(
          [
              "src/*.cpp",
              "src/*.h",
          ],
      ),
      hdrs = glob([
          "include/*.h",
          "src/*.tpp",
      ]),
      copts = [
          "-DKINETO_NAMESPACE=libkineto",
          "-DHAS_CUPTI",
      ],
      includes = [
          "include",
      ],
      deps = [
          "@cuda//:cuda_headers",
          "@cuda//:cupti",
          "@cuda//:cupti_headers",
          "@cuda//:nvperf_host",
          "@cuda//:nvperf_target",
          "@fmt",
      ],
  )
  ```

- Run the example script https://github.com/pytorch/kineto/blob/main/tb_plugin/examples/resnet50_profiler_api.py

- Observe that no GPU trace appears in TensorBoard.
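To tell whether the GPU activity is missing from the trace file itself, rather than only from the TensorBoard view, the trace JSON can be inspected directly. The sketch below is hypothetical: the helpers `count_gpu_events` and `check_log_dir` are illustrative names, not part of PyTorch or Kineto. It assumes the profiler wrote Chrome-trace files named `*.pt.trace.json` (as produced via `torch.profiler.tensorboard_trace_handler`), that events are stored under `traceEvents`, and that device-side activity uses the category names listed in `GPU_CATEGORIES`. These names differ between Kineto versions, so treat the set as an assumption. The default `./result` directory is also an assumption about where the example script writes its traces.

```python
import glob
import json
import os
from collections import Counter

# Assumption: device-side events use categories such as "Kernel"/"Memcpy"/"Memset"
# (older Kineto releases) or "kernel"/"gpu_memcpy"/"gpu_memset" (newer releases).
# Matching is case-insensitive, so both spellings are covered.
GPU_CATEGORIES = {"kernel", "memcpy", "memset", "gpu_memcpy", "gpu_memset"}


def count_gpu_events(trace: dict) -> Counter:
    """Count events per GPU category in a parsed Chrome trace (hypothetical helper)."""
    counts = Counter()
    for event in trace.get("traceEvents", []):
        category = str(event.get("cat", "")).lower()
        if category in GPU_CATEGORIES:
            counts[category] += 1
    return counts


def check_log_dir(log_dir: str) -> dict:
    """Map each *.pt.trace.json file in log_dir to its GPU event counts (hypothetical helper)."""
    results = {}
    for path in sorted(glob.glob(os.path.join(log_dir, "*.pt.trace.json"))):
        with open(path) as f:
            results[path] = count_gpu_events(json.load(f))
    return results


if __name__ == "__main__":
    import sys

    # Assumed default: the directory passed to tensorboard_trace_handler.
    log_dir = sys.argv[1] if len(sys.argv) > 1 else "./result"
    for path, counts in check_log_dir(log_dir).items():
        status = "has GPU events" if counts else "NO GPU events"
        print(f"{path}: {status} {dict(counts)}")
```

If a trace file from the Bazel build reports no GPU events while one from the pre-built PyTorch does, the device activity is being lost before the trace is written, not in TensorBoard.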

Expected behavior

A GPU trace is produced. I was able to get the trace when running the same script with a pre-built PyTorch.

Environment

```
PyTorch version: 1.9.0
Is debug build: False
CUDA used to build PyTorch: 10.2
ROCM used to build PyTorch: N/A

OS: Ubuntu 18.04.6 LTS (x86_64)
GCC version: (Ubuntu 7.5.0-3ubuntu1~18.04) 7.5.0
Clang version: Could not collect
CMake version: Could not collect
Libc version: glibc-2.10

Python version: 3.7.3 | packaged by conda-forge | (default, Jul 1 2019, 21:52:21) [GCC 7.3.0] (64-bit runtime)
Python platform: Linux-5.4.0-1053-gcp-x86_64-with-debian-buster-sid
Is CUDA available: True
CUDA runtime version: Could not collect
GPU models and configuration:
GPU 0: Tesla T4
GPU 1: Tesla T4

Nvidia driver version: 460.73.01
cuDNN version: Could not collect
HIP runtime version: N/A
MIOpen runtime version: N/A
```

Additional context

cc @ilia-cher @robieta @chaekit @gdankel @bitfort @ngimel @orionr @nbcsm @guotuofeng @guyang3532 @gaoteng-git