Make cuDNN use the current stream #2984

Merged

Summary

The cuDNN bindings were missing calls to cudnnSetStream, so cuDNN functions did not run on the current stream. This change adds those calls so that cuDNN functions use the current stream. See #2523.
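To illustrate why the missing call matters, here is a minimal toy model in plain Python. It is not the actual binding code from this PR: `FakeCudnnHandle`, `set_stream`, `convolution_forward`, and the two `conv_*` helpers are hypothetical names, and the string "default" stands in for the stream a handle uses when none has been set. The sketch only assumes that a handle launches work on whichever stream it was last given.

```python
# Toy model only: every name here is a hypothetical stand-in,
# not the real cuDNN API or the bindings touched by this PR.


class FakeCudnnHandle:
    """Mimics a library handle that launches work on the stream it was last given."""

    def __init__(self):
        # Assumption: a fresh handle starts on the default stream.
        self.stream = "default"

    def set_stream(self, stream):
        # Plays the role of cudnnSetStream(handle, stream).
        self.stream = stream

    def convolution_forward(self):
        # Returns the stream the work would be launched on.
        return self.stream


def conv_before_fix(handle, current_stream):
    # Bug: the handle is never told about the current stream,
    # so the current_stream argument is ignored.
    return handle.convolution_forward()


def conv_after_fix(handle, current_stream):
    # Fix: bind the handle to the current stream before the call.
    handle.set_stream(current_stream)
    return handle.convolution_forward()


handle = FakeCudnnHandle()
print(conv_before_fix(handle, "side_stream"))
print(conv_after_fix(handle, "side_stream"))
```

In this model the first call reports the handle's original stream and the second reports the caller's stream, which mirrors the before/after behaviour described in the test plan.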

Test plan

Create a test file (adopted from here)

Ran it through

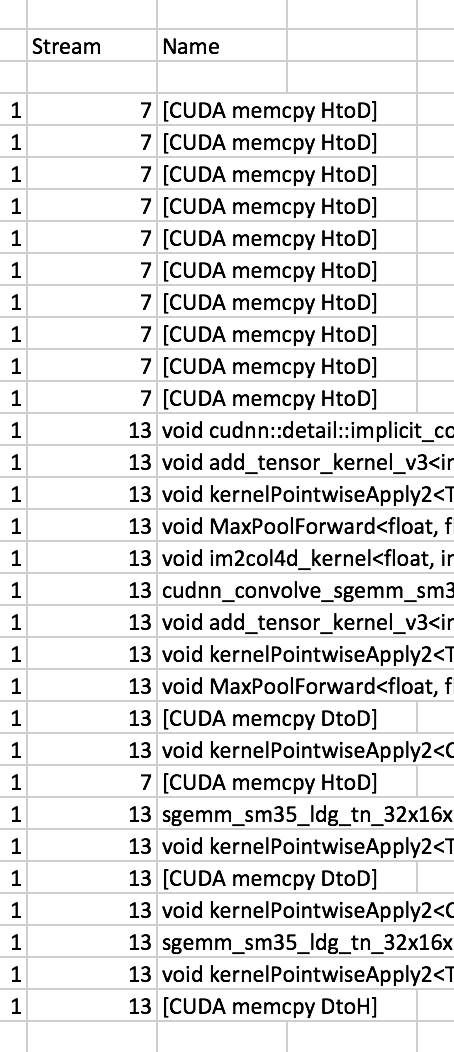

nvprof. Asserted that the cudnn functions did not use the current stream before changes. Asserted that the cudnn functions did use the current stream after changes.Before:

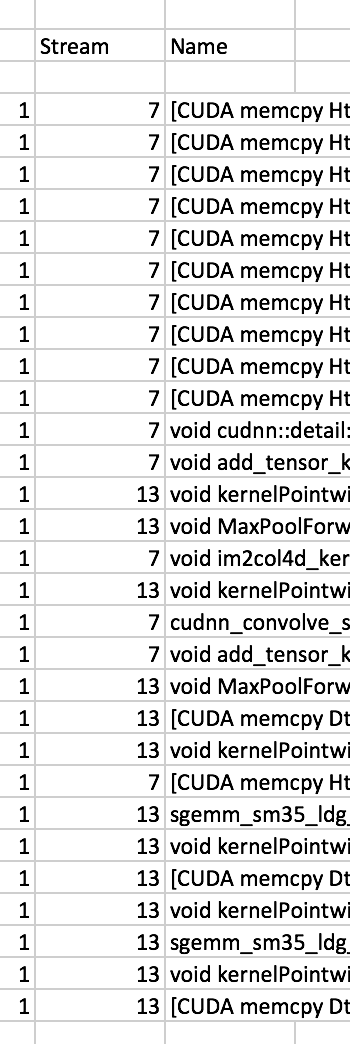

After: