grid_sampler_2d: removed lowering #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 [ghstack-poisoned]

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: d8f7d54 Pull Request resolved: #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: 7ff6b8a Pull Request resolved: #1134

make_fallback(aten._embedding_bag)
make_fallback(aten._embedding_bag_forward_only)
make_fallback(aten._fused_moving_avg_obs_fq_helper)
make_fallback(aten.grid_sampler_2d_backward)

I don't think this PR resolves that, no? Since we're currently applying decompositions after autograd.

You are right! Thanks, fixed now.
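To illustrate the point raised in this review thread, here is a toy model in plain Python. It is not PyTorch or TorchInductor internals: the tables, op lists, and function names are all made up for illustration. It only sketches why, if decompositions run after autograd has already recorded the backward op, decomposing the forward op does not remove the need for a fallback on `grid_sampler_2d_backward`.

```python
# Toy model only: names and op lists below are hypothetical, not real internals.
DECOMPOSITIONS = {"grid_sampler_2d": ["floor", "mul", "add", "index"]}
FALLBACKS = {"grid_sampler_2d_backward"}


def autograd_trace(forward_ops):
    """Pretend autograd: record one '<op>_backward' node per forward op."""
    return forward_ops + [f"{op}_backward" for op in reversed(forward_ops)]


def decompose(ops):
    """Expand every op that has an entry in the decomposition table."""
    expanded = []
    for op in ops:
        expanded.extend(DECOMPOSITIONS.get(op, [op]))
    return expanded


# Decomposition is applied to the graph that autograd already produced.
joint_graph = decompose(autograd_trace(["grid_sampler_2d"]))
needs_fallback = [op for op in joint_graph if op in FALLBACKS]
print(joint_graph)
print(needs_fallback)
```

In this toy ordering the forward op is expanded, but the backward node recorded by the tracing step survives untouched, so it still has to be handled some other way.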

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: 3e6559d Pull Request resolved: #1134

The test failures are in

@fdrocha yeah they're not caused by this PR, but by updating the pin.

Thanks @Chillee. I was able to get the tests to go green by replacing "fake_result" with "val" in Should I make those changes? I don't understand those parts of the code base, but it seems like the right thing... There are also a bunch of other instances of "fake_result" in the torch repo that should probably be changed to "val", FWIW.
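As a rough sketch of the rename discussed here: `torch.fx` nodes carry a `meta` dict, and the comment above suggests the key holding the fake value changed from "fake_result" to "val". The helper below is hypothetical (it is not part of PyTorch) and uses plain dicts as stand-ins for `node.meta`, so it only shows one way code could tolerate both key names while the rename propagates.

```python
def fake_value(meta, keys=("val", "fake_result")):
    """Return the first entry found under any of `keys`, else None.

    `meta` stands in for a torch.fx node's `meta` dict; the key names come
    from the discussion above, and the helper itself is hypothetical.
    """
    for key in keys:
        if key in meta:
            return meta[key]
    return None


# Plain dicts standing in for node.meta before and after the rename.
new_style = {"val": "FakeTensor(size=(2, 3))"}
old_style = {"fake_result": "FakeTensor(size=(2, 3))"}

print(fake_value(new_style))
print(fake_value(old_style))
```

Preferring "val" first means the new key wins when both are present; whether such a compatibility shim is wanted at all, as opposed to a plain rename, is a separate decision.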

@fdrocha Yeah we just need to get a PR in updating all the uses (also see pytorch/pytorch#84432).

I see. I guess I will wait for that PR to be merged before trying to merge this one.

We could also just skip the tests until the upstream breakage is fixed.

Yeah let's do that and land this.
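One way to skip a test temporarily, sketched with the standard library's `unittest.skip` decorator. The test class and test name are hypothetical; the actual tests and the skip mechanism used in this repository may differ.

```python
import unittest


class GridSamplerTests(unittest.TestCase):
    # Hypothetical test; the reason string records why it is skipped.
    @unittest.skip("upstream breakage, see pytorch/pytorch#84432")
    def test_grid_sampler_2d(self):
        self.fail("would fail until the upstream rename lands")


def run_suite():
    """Run the test case and return the collected result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(GridSamplerTests)
    result = unittest.TestResult()
    suite.run(result)
    return result


result = run_suite()
print(len(result.skipped), result.wasSuccessful())
```

Keeping the reason string next to the decorator makes it easier to find and re-enable the test once the upstream fix is in.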

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: c91a2c5 Pull Request resolved: #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: 986b35a Pull Request resolved: #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: adef10f Pull Request resolved: #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: 4f2951f Pull Request resolved: #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: 872e1f1 Pull Request resolved: #1134

There is now a decomposition in pytorch that seems to have better performance, see benchmarks at pytorch/pytorch#84350 ghstack-source-id: 93522ab Pull Request resolved: #1134

This looks like it was not properly merged @fdrocha -- the merge button doesn't work with ghstack

Drat. I did use Any idea on how to fix this?

@ezyang what permissions do we need to land ghstack PRs?

This is landed now. Apparently you need to be repo admin lol

oops actually not yet

@fdrocha we should start sending PRs without ghstack then :(

@ezyang this is not merged yet, right?

Ok, never mind then!

…On Mon, Sep 19, 2022 at 10:22 AM Mario Lezcano Casado < ***@***.***> wrote:

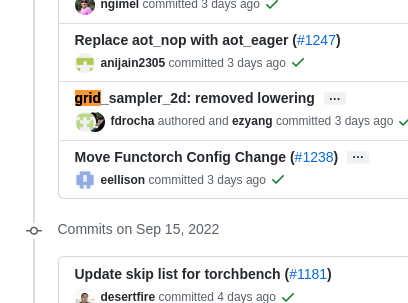

I think this was merged a couple days ago:

[image: image]

<https://user-images.githubusercontent.com/3291265/190987637-f4b3a3a1-ff7b-49c1-85b9-c3f15724f7d8.png>

I was talking to @ezyang and his opinion was that "we should just ask him to merge ghstack PRs if we want them landed" (for now).

Stack from ghstack (oldest at bottom):

There is now a decomposition in PyTorch that seems to have better performance; see benchmarks at pytorch/pytorch#84350.
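For readers unfamiliar with the term, a decomposition expresses one operator through simpler ones. The sketch below is not the decomposition from pytorch/pytorch#84350; it is a minimal pure-Python illustration, assuming bilinear interpolation with zeros padding on a single-channel image and a single sample point, and following what I understand to be the coordinate conventions of `torch.nn.functional.grid_sample`. The function names are hypothetical.

```python
import math


def unnormalize(coord, size, align_corners=False):
    """Map a normalized coordinate in [-1, 1] to a pixel index (may be fractional)."""
    if align_corners:
        return (coord + 1.0) / 2.0 * (size - 1)
    return ((coord + 1.0) * size - 1.0) / 2.0


def bilinear_sample(image, x, y, align_corners=False):
    """Sample one point from a 2D list `image` using only elementwise arithmetic."""
    h, w = len(image), len(image[0])
    ix = unnormalize(x, w, align_corners)
    iy = unnormalize(y, h, align_corners)
    x0, y0 = math.floor(ix), math.floor(iy)
    x1, y1 = x0 + 1, y0 + 1
    wx1, wy1 = ix - x0, iy - y0          # weights of the right / bottom neighbors
    wx0, wy0 = 1.0 - wx1, 1.0 - wy1      # weights of the left / top neighbors

    def pixel(r, c):
        # Zeros padding: out-of-bounds neighbors contribute nothing.
        return image[r][c] if 0 <= r < h and 0 <= c < w else 0.0

    return (pixel(y0, x0) * wy0 * wx0 + pixel(y0, x1) * wy0 * wx1
            + pixel(y1, x0) * wy1 * wx0 + pixel(y1, x1) * wy1 * wx1)


img = [[1.0, 2.0], [3.0, 4.0]]
print(bilinear_sample(img, 0.0, 0.0))
print(bilinear_sample(img, -1.0, -1.0, align_corners=True))
```

Written this way, the sampler is just floors, multiplies, adds, and bounds-checked indexing, which is the kind of form a compiler can fuse with neighboring elementwise work.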