
Change locator reconnection #134


- Fixes: #133
- Remove the `PublishingIdStrategy` interface. It is not necessary; the idea was to create a generic interface for getting the publishingId.
- Use `Interlocked` for `_publishingId` in `ReliableProducer` to make it thread-safe
- Add `GetLastPublishingId` to the `Producer` class
- Use `GetLastPublishingId` in the `ReliableProducer` class

Signed-off-by: Gabriele Santomaggio <G.santomaggio@gmail.com>
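The thread-safety change can be illustrated with a small sketch. It assumes the producer keeps its last publishing id in a `long` field that is only updated through `System.Threading.Interlocked`; `publishingId`, `NextPublishingId` and this `GetLastPublishingId` are hypothetical stand-ins, not the actual `ReliableProducer` or `Producer` code from this PR.

```csharp
using System;
using System.Linq;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical stand-in for the producer's _publishingId field (not the real client code).
long publishingId = 0;

// Atomically increments the counter and returns the new publishing id.
ulong NextPublishingId() => (ulong)Interlocked.Increment(ref publishingId);

// Atomically reads the last assigned publishing id.
ulong GetLastPublishingId() => (ulong)Interlocked.Read(ref publishingId);

// Simulate many threads publishing concurrently.
var ids = new ulong[1000];
Parallel.For(0, ids.Length, i => ids[i] = NextPublishingId());

// Every id is unique and the last one equals the number of publishes.
Console.WriteLine($"last id: {GetLastPublishingId()}, unique ids: {ids.Distinct().Count()}");
// prints "last id: 1000, unique ids: 1000"
```

`Interlocked.Read` is used for the getter because a plain 64-bit read is not guaranteed to be atomic on 32-bit platforms.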


@ricsiLT Can you please try it?

Codecov Report

| Coverage Diff | main | #134 | +/- |
|---|---|---|---|
| Coverage | 91.52% | 91.71% | +0.18% |
| Files | 77 | 77 | |
| Lines | 5828 | 5815 | -13 |
| Branches | 358 | 358 | |
| Hits | 5334 | 5333 | -1 |
| Misses | 406 | 394 | -12 |
| Partials | 88 | 88 | |



Force-pushed from 2cf66c9 to be63005.


On it :)


…am-dotnet-client into reconnect_locator


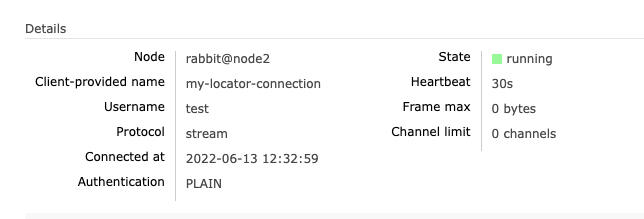

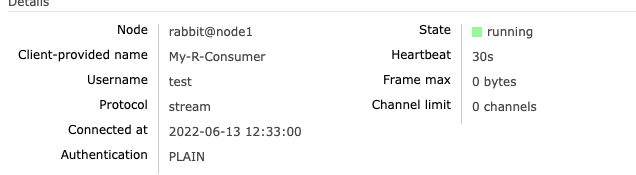

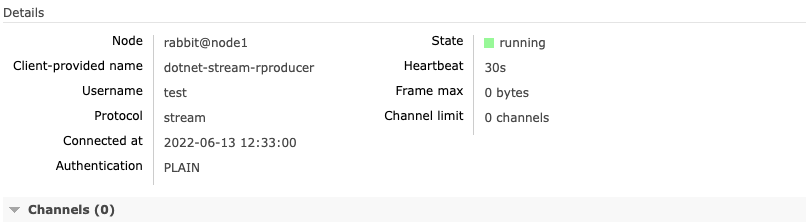

Result of testing :( It did raise exceptions on the server side, though. If it's of any importance, the exceptions were raised on nodes that are not the leader. Also, weirdly, the heartbeat was not set on either the locator or the producer connection.

What error?

That's weird.

The heartbeat is set correctly. My connection setup is:

```csharp
using System;
using System.Collections.Generic;
using System.Net;
using RabbitMQ.Stream.Client;

Console.WriteLine("Reliable .NET Producer");

// 192.168.56.10:5553 is the proxy (load balancer) address
var addressResolver = new AddressResolver(IPEndPoint.Parse("192.168.56.10:5553"));

var config = new StreamSystemConfig()
{
    Heartbeat = TimeSpan.FromSeconds(30),
    AddressResolver = addressResolver,
    UserName = "test",
    Password = "test",
    ClientProvidedName = "my-locator-connection",
    Endpoints = new List<EndPoint>() { addressResolver.EndPoint }
};
```


Force-pushed from 5544300 to 4e28b7a.

…am-dotnet-client into reconnect_locator


Yeah, will do the upgrade in a few hours/tomorrow :(


FYI: if you want, you can find me on https://rabbitmq-slack.herokuapp.com/ for faster interaction.


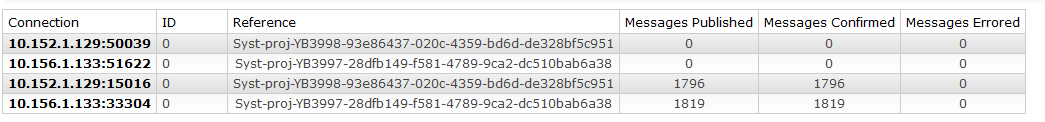

Another issue we see is a doubling of connections, so to speak: both seem to be alive (in this case, reference for publisher is …). Again, tomorrow we should have our shiny new cluster with RHEL 8 / RabbitMQ 3.10.x, so I can see whether that's the only issue.


@lukebakken it is enough to configure a cluster with a load balancer.

Vagrantfile:

```ruby
# -*- mode: ruby -*-
# vi: set ft=ruby :

# All Vagrant configuration is done below. The "2" in Vagrant.configure
# configures the configuration version (we support older styles for
# backwards compatibility). Please don't change it unless you know what
# you're doing.
BOX_IMAGE = "ubuntu/bionic64"
NODE_COUNT = 3

Vagrant.configure("2") do |config|
  config.vm.define "node0" do |subconfig|
    subconfig.vm.box = BOX_IMAGE
    subconfig.vm.hostname = "node0"
    subconfig.vm.network :private_network, ip: "192.168.56.10"
  end

  (1..NODE_COUNT).each do |i|
    config.vm.define "node#{i}" do |subconfig|
      subconfig.vm.box = BOX_IMAGE
      subconfig.vm.hostname = "node#{i}"
      subconfig.vm.network :private_network, ip: "192.168.56.#{i + 10}"
    end
  end

  # Add /etc/hosts entries for node0..node2 on every machine
  config.vm.provision "shell", inline: <<-SHELL
    sudo echo "192.168.56.11 node1 " >> /etc/hosts
    sudo echo "192.168.56.12 node2 " >> /etc/hosts
    sudo echo "192.168.56.10 node0 " >> /etc/hosts
  SHELL
end
```

Envoy:

```yaml
admin: {"accessLogPath":"/dev/null","address":{"socketAddress":{"address":"0.0.0.0","portValue":9901}}}
static_resources:
  listeners:
    - name: stream-ingress
      per_connection_buffer_limit_bytes: 4096
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 5553
      filter_chains:
        - filters:
            - name: envoy.filters.network.tcp_proxy
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.tcp_proxy.v3.TcpProxy
                stat_prefix: ingress
                cluster: stream-cluster
    - name: management-ui
      address:
        socket_address:
          address: 0.0.0.0
          port_value: 15673
      filter_chains:
        - filters:
            - name: envoy.filters.network.http_connection_manager
              typed_config:
                "@type": type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager
                codec_type: auto
                stat_prefix: ingress_http
                route_config:
                  name: local_route
                  virtual_hosts:
                    - name: service
                      domains:
                        - "*"
                      routes:
                        - match:
                            prefix: "/"
                          route:
                            cluster: management-cluster
                http_filters:
                  - name: envoy.filters.http.router
                    typed_config:
                      "@type": type.googleapis.com/envoy.extensions.filters.http.router.v3.Router
  clusters:
    - name: stream-cluster
      connect_timeout: 0.25s
      type: STRICT_DNS
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: stream
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: node0
                      port_value: 5552
              - endpoint:
                  address:
                    socket_address:
                      address: node1
                      port_value: 5552
              - endpoint:
                  address:
                    socket_address:
                      address: node2
                      port_value: 5552
    - name: management-cluster
      connect_timeout: 0.25s
      type: STRICT_DNS
      lb_policy: ROUND_ROBIN
      load_assignment:
        cluster_name: management1
        endpoints:
          - lb_endpoints:
              - endpoint:
                  address:
                    socket_address:
                      address: node0
                      port_value: 15672
              - endpoint:
                  address:
                    socket_address:
                      address: node1
                      port_value: 15672
              - endpoint:
                  address:
                    socket_address:
                      address: node2
                      port_value: 15672
```

Then kill the locator connection and the consumer/producer connections.


@ricsiLT did you have a chance to test it?


Will come back with results today, sorry for the delay :(


Merged per conversation with @ricsiLT! Thanks for your help!
