jupyter/ipython experiment containers and utils for profiling and reclaiming GPU and general RAM, and detecting memory leaks.

This module's main purpose is to help calibrate hyperparameters in deep learning notebooks to fit the available GPU and general RAM, but it can be useful in any other setting where memory limits are a constant issue. It is also useful for detecting memory leaks in your code. Over time, other goodies that help with running machine learning experiments have been added.

This package is slowly evolving into a suite of helper modules designed to help diagnose memory leaks and make debugging them easy.

Currently the package contains several modules:

- IpyExperiments - a smart container for ipython/jupyter experiments (documentation / demo)
- CellLogger - per cell memory profiler and more features (documentation / demo)
- ipythonutils - workarounds for ipython memory leakage on exception (documentation)
- memory debugging and profiling utils (documentation)

Using this framework you can run multiple consecutive experiments without restarting the kernel each time, especially when you run out of GPU memory and hit the all-too-familiar "cuda: out of memory" error. When this happens, you just go back to the notebook cell where you started the experiment, change the hyperparameters, and re-run the updated experiment until it fits the available memory. This is much more efficient and less error-prone than constantly restarting the kernel and re-running the whole notebook.

As a bonus you get access to the memory consumption data, so you can use it to automate the discovery of hyperparameters that suit your hardware's unique memory limits.
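One way such automation could look is sketched below. The names `largest_fitting` and `fits_in_memory` are hypothetical and not part of this package, and the memory model is a made-up linear estimate standing in for numbers you would collect from real experiment runs.

```python
from typing import Callable, Optional, Sequence


def largest_fitting(candidates: Sequence[int], fits: Callable[[int], bool]) -> Optional[int]:
    """Return the largest candidate for which fits() is True, or None.

    Assumes candidates are sorted ascending and that memory use grows with
    the candidate value, so fits() flips from True to False only once.
    """
    lo, hi = 0, len(candidates) - 1
    best = None
    while lo <= hi:
        mid = (lo + hi) // 2
        if fits(candidates[mid]):
            best = candidates[mid]  # fits: try something bigger
            lo = mid + 1
        else:
            hi = mid - 1            # too big: try something smaller
    return best


# Hypothetical stand-in for a real measurement: pretend the model costs
# 2000 MB plus 50 MB per sample, with 8000 MB of GPU RAM available.
def fits_in_memory(batch_size: int, budget_mb: int = 8000) -> bool:
    return 2000 + 50 * batch_size <= budget_mb


batch_sizes = [8, 16, 32, 64, 128, 256]
best_batch_size = largest_fitting(batch_sizes, fits_in_memory)
print(best_batch_size)  # 64 under the made-up memory model above
```

In practice the `fits` callback would run one short experiment and compare the recorded peak memory against the budget, which is far cheaper than a kernel restart per attempt.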

The idea behind this module is very simple: it behaves like a python function, whose local variables get destroyed at the end of its run, giving us memory back, except that it works across multiple jupyter notebook cells (or ipython commands). In addition, it runs gc.collect() to immediately release badly behaved variables with circular references and to reclaim general and GPU RAM. It also helps to discover memory leaks and performs various other useful things behind the scenes.
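The function analogy can be seen in plain Python. The following is a minimal sketch of the underlying behavior in CPython, not of this package's internals; `Blob`, `run_experiment` and `run_leaky_experiment` are illustrative names only.

```python
import gc
import weakref


class Blob:
    """Stand-in for a large object such as a model or a batch of tensors."""


def run_experiment():
    blob = Blob()                 # local variable, lives only inside the call
    return weakref.ref(blob)      # weak reference: does not keep blob alive


def run_leaky_experiment():
    a, b = Blob(), Blob()
    a.other, b.other = b, a       # circular reference between the two objects
    return weakref.ref(a)


gc.disable()                      # keep the automatic collector out of the demo

ref = run_experiment()
freed_on_return = ref() is None   # True in CPython: refcount dropped to zero

cycle_ref = run_leaky_experiment()
alive_before_gc = cycle_ref() is not None   # True: the cycle keeps both alive
gc.collect()                                # the cycle collector frees them
freed_after_gc = cycle_ref() is None        # True

gc.enable()
print(freed_on_return, alive_before_gc, freed_after_gc)
```

The second case is why an explicit gc.collect() matters: objects caught in a reference cycle survive the end of their scope until the collector runs.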

If you need more fine-grained memory profiling, the CellLogger sub-system reports RAM usage per cell when used with jupyter, or per line of code in ipython. You get the resource usage report automatically as soon as a command or a cell finishes executing. It includes other features, such as resetting the RNG seed in python/numpy/pytorch if you need a reproducible result when re-running the whole notebook or just one cell.
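To illustrate what resetting the RNG seed across python/numpy/pytorch involves, here is a hand-rolled sketch. `reset_seeds` is a hypothetical helper, not CellLogger's API, and it skips any library that is not installed.

```python
import random


def reset_seeds(seed: int = 42) -> None:
    """Seed every RNG that is available in this environment."""
    random.seed(seed)
    try:
        import numpy as np
        np.random.seed(seed)      # numpy's global legacy generator
    except ImportError:
        pass                      # numpy not installed: skip it
    try:
        import torch
        torch.manual_seed(seed)   # pytorch's default generator
    except ImportError:
        pass                      # pytorch not installed: skip it


reset_seeds(42)
first = [random.random() for _ in range(3)]
reset_seeds(42)
second = [random.random() for _ in range(3)]
print(first == second)  # True: same seed, same sequence
```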

Currently this sub-system logs GPU RAM, general RAM and execution time, but it can be expanded to track other important things. While there are various similar loggers out there, the main focus of this implementation is tracking GPU usage, where the main scarce resource is GPU RAM.
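For a rough idea of what logging time and memory around a piece of code means, here is a stdlib-only sketch. It is an assumption-laden stand-in rather than how CellLogger is implemented: `log_usage` is a hypothetical name, and tracemalloc only sees allocations made through Python's allocator, not GPU RAM.

```python
import time
import tracemalloc
from contextlib import contextmanager


@contextmanager
def log_usage(report: dict):
    """Fill `report` with wall time and Python-level memory use of the block."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        yield report
    finally:
        report["seconds"] = time.perf_counter() - start
        current, peak = tracemalloc.get_traced_memory()
        tracemalloc.stop()
        report["current_bytes"] = current   # still allocated at the end
        report["peak_bytes"] = peak         # highest point during the block


usage = {}
with log_usage(usage):
    data = [0] * 1_000_000        # a list of one million references
    del data                      # released again before the block ends

print(usage)
```

The gap between the peak and the final figure is the interesting number: it separates memory that was only needed temporarily from memory that stays consumed after the cell finishes.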

pypi:

pip install ipyexperiments

conda:

conda install -c fastai -c stason ipyexperiments

dev:

pip install git+https://github.com/stas00/ipyexperiments.git

Here is an example using code from the fastai v1 library, spread out over 8 jupyter notebook cells:

# cell 1

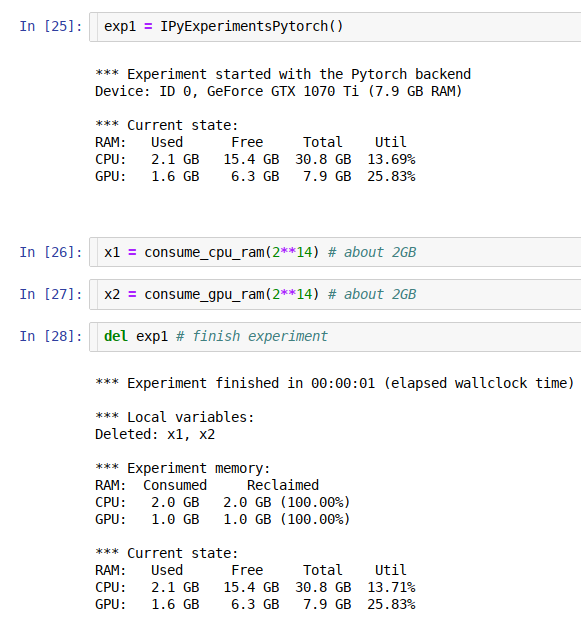

from ipyexperiments import IPyExperimentsPytorch
exp1 = IPyExperimentsPytorch() # new experiment

# cell 2

learn1 = language_model_learner(data_lm, bptt=60, drop_mult=0.25, pretrained_model=URLs.WT103)

# cell 3

learn1.lr_find()

# cell 4

del exp1

# cell 5

exp2 = IPyExperimentsPytorch() # new experiment

# cell 6

learn2 = language_model_learner(data_lm, bptt=70, drop_mult=0.3, pretrained_model=URLs.WT103)

# cell 7

learn2.lr_find()

# cell 8

del exp2

See the demo notebook for a walk-through of how this system works.

Up-to-date nvidia-ml-py3: in pytorch mode on NVIDIA GPUs this project relies on nvidia-ml-py3, which hasn't been released on pip since 2017. If this library doesn't work for you, you may need to install a newer version directly from github, using:

pip install git+https://github.com/nicolargo/nvidia-ml-py3
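A quick way to check whether the installed library can talk to your GPU is to query its memory directly. This is a minimal sketch: `gpu_memory_mb` is a hypothetical helper written for this check, built on the `pynvml` module that nvidia-ml-py3 provides, and it returns None when the module, the driver or the GPU is missing.

```python
from typing import Optional


def gpu_memory_mb(index: int = 0) -> Optional[dict]:
    """Return used/free/total memory in MB for one GPU, or None if unavailable."""
    try:
        import pynvml            # provided by the nvidia-ml-py3 package
    except ImportError:
        return None
    try:
        pynvml.nvmlInit()
    except pynvml.NVMLError:
        return None              # no NVIDIA driver or NVML library found
    try:
        handle = pynvml.nvmlDeviceGetHandleByIndex(index)
        info = pynvml.nvmlDeviceGetMemoryInfo(handle)
        mb = 1024 ** 2
        return {
            "used": info.used // mb,
            "free": info.free // mb,
            "total": info.total // mb,
        }
    except pynvml.NVMLError:
        return None              # e.g. no GPU at this index
    finally:
        pynvml.nvmlShutdown()


print(gpu_memory_mb())
```

If this prints None on a machine that does have an NVIDIA GPU, the library or driver setup is the likely culprit.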

Please see CONTRIBUTING.md.

A detailed history of changes can be found here.