- API using FastAPI + SQLAlchemy + pydantic

- API using Flask + Protobuf

- Plotly Dash + API and Docker

- ETL Pipelines

- Airflow + Docker

This project is a minimalistic implementation of a FastAPI HTTP REST service that only returns serialized stock time-series data stored in a sqlite database. We will be using SQLAlchemy as the ORM and pydantic for serialization and validation for our endpoints.

Project path: datatools/api_fastapi

Further information: datatools/api_fastapi/README.md

This project contains the following:

- a basic synchronous HTTP server using FastAPI, a modern, high-performance web framework for building APIs

- use FastAPI's swagger documentation to access the app's endpoints.

- how to use SQLAlchemy to model and query the data stored in a local SQLite

- how to create data schemas to enforce type hints at runtime using pydantic

Using a simple ETL script datatools/api_fastapi/load_db.py

extracted all ticker data from the parquet files in data/all-tickers

and stored it in a single prices table in api_fastapi/sync/app.db.

(venv) ➜ cd datatools/api_fastapi/sync

(venv) ➜ uvicorn app.main:app --reload

INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)

INFO: Started reloader process [29317] using statreload

INFO: Started server process [29319]

INFO: Waiting for application startup.

INFO: Application startup complete.You can use the command line to test the tickers endpoint

curl -X 'GET' 'http://localhost:8000/tickers/' -H 'accept: application/json'

[

{

"ticker": "AAPL"

},

{

"ticker": "AMZN"

},

{

"ticker": "FB"

},

{

"ticker": "IBM"

},

{

"ticker": "MSFT"

}

]Or you can navigate to the api documentation page at http://127.0.0.1:8000/docs and execute the same query

- implement an async api - which is what really makes FastAPI's performance comparative to node, etc.

- customize the swagger documentation to use less generic text and placeholders

- dockerize the application

This project is a minimalistic implementation of a flask HTTP REST service that only returns serialized stock time-series data originally stored in a local parquet file. Protobuf is only used for the serialization/deserialization of messages over HTTP.

Project path: datatools/api

Further information: datatools/api/README.md

This project contains the following:

- a basic HTTP server using Flask Restful

- an implementation of Protobuf to serialize and send structured data over HTTP

- how to use Dask on the backend to read/manipulate data from a local parquet file and serialize using protobuf

The data served is stored in a parquet in the datatools/api/data directory which

was generated via an ETL pipeline, found in datatools/pipelines/jobs.

Protobuf was only used for message structuring and serialization. In future efforts,

I will look to implement a full gRPC service using the grpcio library as it provides lower

latency bi-directional streaming, which are better suited for distributed systems.

However, for the scope of this project, the combination of Flask and Protobuf allows me to implement a satisfactory

solution for demonstrative purposes much faster.

Dask was used to read data from the local parquet file for a number of reasons. Firstly, while Pandas is simple to use, it needs to read the full parquet file before filtering. This is too memory intensive and only works on single workloads.

Secondly, PySpark has a performance overhead since every time you need to access data you need to initiate a spark session. Since this project does not work with large distributed datasets and the requests are pretty simple, pySpark is not best suited.

So then you have Dask. It is built purely in python and leverages numpy and pandas but is designed to work with distributed loads. Dask does not have the lag of pyspark but allows you to specify the dataframe operations/filtering in advance, so it is not as memory heavy as pandas.

- Upgrade the API to be a fully implemented RPC framework (maybe create a second project to run comparisons)

- Add some more useful meaningful services

A very minimalist dashboard has been built using plotly Dash, which displays a stock return series and a daily returns distribution plot.

Project path: datatools/dashboard

Further information: datatools/dashboard/README.md

The dashboard is used in tandem with the datatools/api so in order to facilitate smooth simulation

both the dashboard and api have been dockerized and orchestrated using docker-compose.

To run the dashboard and API:

(venv) ➜ git clone https://github.com/rjdscott/datatools.git

(venv) ➜ cd datatools/dashboard

(venv) ➜ docker-compose build

(venv) ➜ docker-compose up -d --force-recreate

Then navigate to http://localhost:8050 to see the dashboard.

Project path: datatools/pipelines

Further information: datatools/pipelines/README.md

This project showcases the implementation of a simplified ETL pipeline using pySpark, Dask, Pandas and Parquet.

What's covered:

- extract csv files from

datatools/data/using a predefined schema - transform and clean the data by adding some basic analytics

- store the data in a parquet file in

datatools/data/

In this example I decided to go with a static pipeline that takes stock data from CSVs, transforms then loads into a parquet file. Although there are many tools available for ETL jobs, you will find an implementation of the same pipeline using PySpark, Dask and Pandas.

The goal of having the same pipeline implemented in different ways is to allow for direct comparison of use and ease of implementation. On one end, you have the simplicity of Pandas, which can be used for lighter and simpler workloads. On the other you have tools that cna be scaled up to thousand core clusters for computation on terabytes of data with ease. You will also see how easy it is to implement a parallel pipeline using Dask.

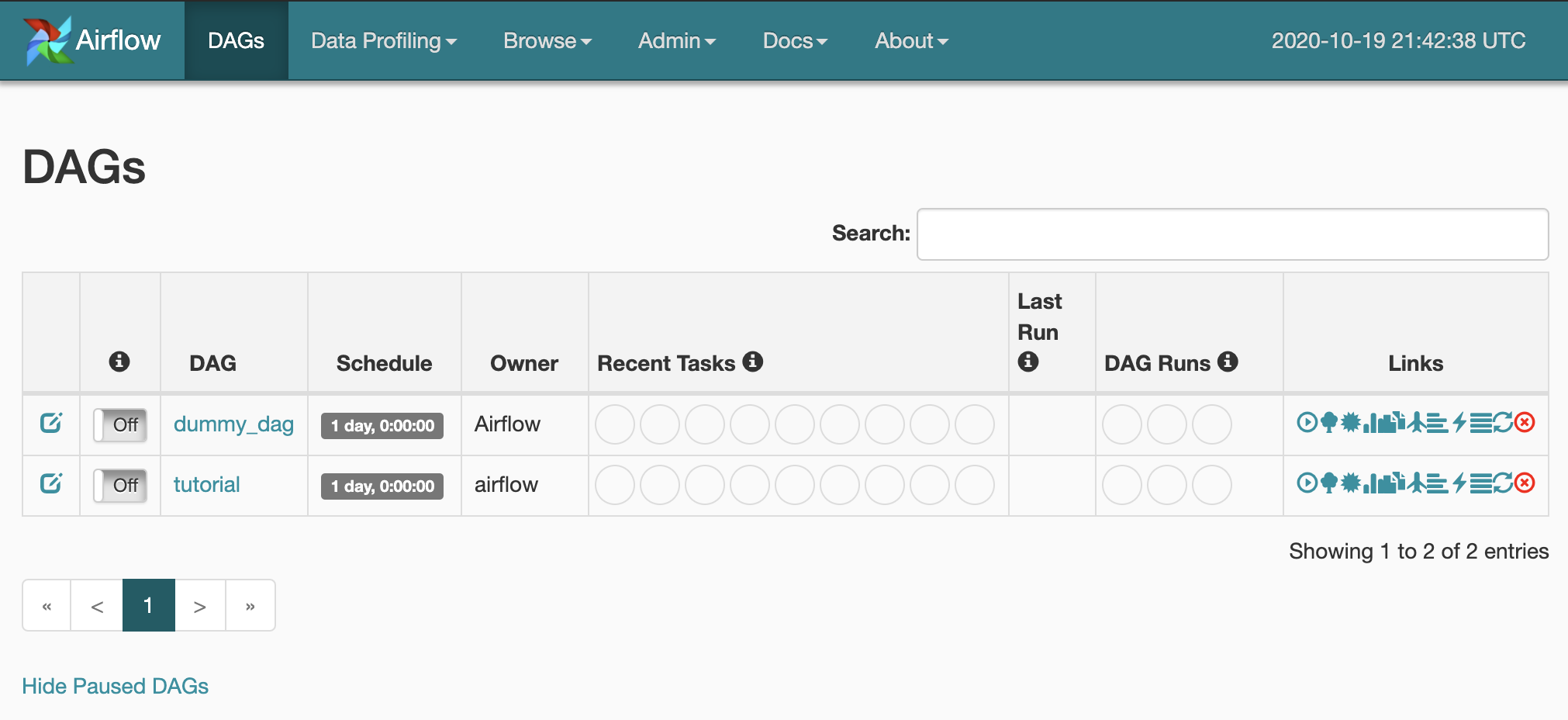

A minimalist implementation of airflow with docker.

Project path: datatools/airflow

Further information: datatools/airflow/README.md

To use:

- create your own DAG in the

airflow/dags - run

docker-compose up -dyou can add--buildarg to build all images - view the dashboard available at http://localhost:8080