-

Notifications

You must be signed in to change notification settings - Fork 342

Complex dot() #1106

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Complex dot() #1106

Conversation

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Good. I guess we need to document somewhere that what gemm operations we support with matrixmultiply vs blas

|

Fwiw, in the absence of BLAS, falling back to four matrix multiplications with |

|

Good point! I can add that as well if you want. |

|

If you decide to do so, PR #1029 would make it easier and is useful functionality on its own anyway. Feel free to take over work on that if you'd like; I'm very busy right now. |

|

I actually could use that functionality too (I implemented it myself for my own uses, but want to upstream things...) so I will go ahead and take over that PR and tackle it first! |

src/linalg/impl_linalg.rs

Outdated

| #[cfg(feature = "blas")] | ||

| use crate::dimension::offset_from_low_addr_ptr_to_logical_ptr; | ||

| use crate::imp_prelude::*; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

(We don't need to change anything, just talking). It's just weird that tools would move down the implementation prelude import. They are wrong, that import should clearly be first. (Probably accomplished by having it with a blank line after).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Personally, I have always liked that Rustfmt sorts use statements. Whether sorting them alphabetically is good or a bad is a reasonable discussion to have, but as usual with aesthetics, just the fact that the decision is automatic seems to bring almost all of the benefit, i.e. no more tedious discussions on how to sort here or there or writing up rules as a company-wide guideline.

Additionally, I find the ecosystem-wide readability resulting from the common application of Rustfmt's defaults highly beneficial to upstream collaboration.

(Especially since the workaround using a blank line really seems like a good idea if the import actually is special among the others next to it.)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yeah I agree, it is a bit strange. Perhaps so other imports don't silently clobber items brought in from the prelude?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Sounds good @adamreichold. You'll notice that I'm not yet very fond of rustfmt, unfortunately. I'd be happier if it could accept "multiple good ways" to format the same code.

|

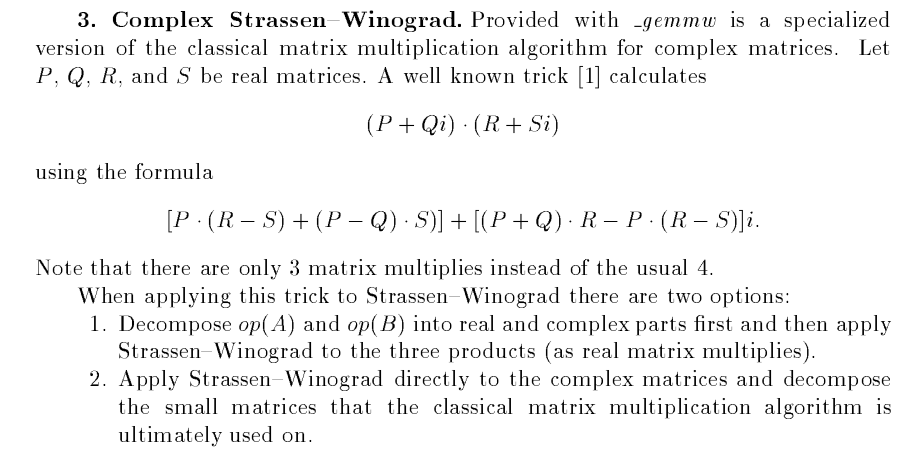

I think I want to add fallback implementations of complex gemm into matrixmultiply. That might get a ball rolling and should perform ok-ish to start with. Reusing dgemm seems like good way to go too, but in practice I think it has a problem with the general matrix multiply and it's alpha muliplier? What's to be done if imag(alpha) != 0? Then it needs extra steps after calling dgemm, if I'm not mistaken. For some reason I came across this, that some libraries reuse their dgemm by using a multiplication reduction algorithm. That's interesting but seems like quite the project. (paper link). Just using it naively has massive memory overhead. |

|

This PR would be fine without addressing matrixmultply or fallback much at all, of course. Just saying that anything extra can be a separate PR. |

|

Yeah I think I will leave the followup to another PR. I've fixed the small mutability issue so I think this is ready for another round of review. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

looks good to me, only minor comments

|

Matrixmult complex wip is at bluss/matrixmultiply#58 performance for f32 + FMA with auto vectorization is not too bad (maybe it reaches 1/2-1/3 of the potential :). Matrixmultiply as a crate doesn't need to be so important. |

|

I edited the description to say it's not WIP. I think it's ready. I'd usually say we need to edit the PR description before merge, but it seems like github has gotten to the point where it doesn't even include it in the merge in git, so it's not so important anymore (did github change here?) (I pushed to fix merge conflicts and do very small edits) |

Great! I can try to take a look at that a bit later.

I think(?) the message is only added if there is a single commit or depending on the kind of merge you do maybe. |

|

Thanks for finally closing this one :) I think the use dgemm-for-zgemm idea still can give good benefits compared with matrixmultiply (in its current state). Handling the general case with complex alpha, beta is a bit tricky, I don't know what the best performing solution is. But alpha != 1 or beta != 0 is the uncommon case. |

This uses BLAS zgemm/cgemm to do complex matrix matrix multiplication.

I know there probably should be many more tests (the ones I added were more just a brief correctness check if nothing else) but I thought I'd open this to get feedback on whether or not something like this approach seems reasonable.

I'm mainly focusing on matrix matrix products as that is what I am using, but I can follow up with matrix vector and vector vector product implementation if there is interest.

Fixes #158