Welcome to the Knowledge Base App, a professional-grade solution designed to serve as a comprehensive knowledge base for a specific domain. This application features a robust backend for efficient document ingestion and storage within a vector database. The frontend leverages the power of GPT-4 and LangChain to deliver a sophisticated chatbot interface for interacting with the stored documents.


The backend component of our software encompasses a suite of cutting-edge features carefully developed to ensure optimal performance and reliability. Key components of our SaaS system include:

Our system excels at efficiently ingesting large volumes of reference data into the vector database. This reference data, which remains static and unmodifiable, forms the bedrock of our application, providing a foundational dataset for all subsequent operations.

We have meticulously designed our SaaS system using a multi-tenant architecture to enable concurrent access for multiple users. Each user enjoys secure and separate access to their uploaded documents while retaining the ability to search and interact with the reference data. This architecture ensures scalability, optimal performance, and robust data privacy for all users.

To deliver the extensive functionality described above, our solution incorporates the following essential components:

- Pinecone - A powerful vector database specifically tailored for storing documents as embeddings. Our system utilizes separate namespaces for reference data and each user, guaranteeing efficient and secure document storage.

- Search API - The Search API forms the backbone of our system's search capabilities, enabling users to perform comprehensive searches across both the reference data and their uploaded documents. This API ensures lightning-fast and accurate search results.

- Concurrent Access - The Knowledge Base App boasts a highly concurrent system that efficiently handles simultaneous access from multiple users. This capability guarantees an exceptional user experience, even during peak usage periods.
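The namespace layout described above can be sketched roughly as follows. This is only an illustration, not the app's actual code: the `reference` namespace name, the `user-` prefix, and all three helper functions are hypothetical.

```typescript
// Hypothetical naming scheme: one shared namespace for reference data,
// plus one namespace per user. The names are assumptions for illustration.
const REFERENCE_NAMESPACE = "reference";

function userNamespace(userId: string): string {
  if (userId.trim() === "") {
    throw new Error("userId must not be empty");
  }
  return `user-${userId}`;
}

// A search on behalf of a user covers the shared reference data and that
// user's own uploads, and never another user's namespace.
function namespacesForSearch(userId: string): string[] {
  return [REFERENCE_NAMESPACE, userNamespace(userId)];
}

// Uploads are written only to the uploading user's namespace,
// so the reference data is never modified by user activity.
function namespaceForUpload(userId: string): string {
  return userNamespace(userId);
}
```

Keeping the namespace decision in one place like this would make it harder for a query to reach another user's documents by accident.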

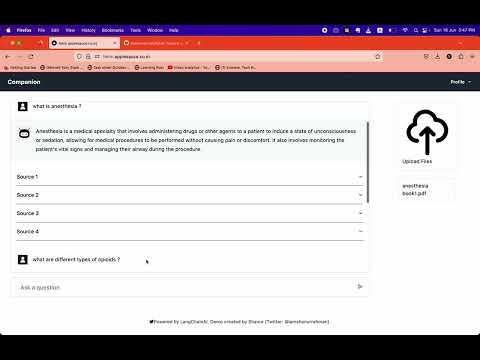

The frontend of our application presents an intuitive and user-friendly interface, seamlessly integrating GPT-4 and LangChain to deliver a state-of-the-art chatbot experience.

How to Use:

- Add PDF files: Simply drag and drop your PDF files into the designated area.

- Ingest Documents: Click on the "Ingest" button to process and embed the uploaded documents.

- Search and Interact: Use the chat interface to ask questions and interact with the knowledge base.

The Knowledge Base App is built on the technologies named throughout this README, including GPT-4, LangChain, Pinecone, Next.js, NestJS, Nginx, and Docker Compose.

For any troubleshooting needs or technical difficulties, kindly refer to the issues section of this repository.

To streamline the deployment process, we provide a Docker Compose file located in the `backend` directory of this repository. Additionally, a deploy script is available to facilitate the deployment of the code to a virtual machine. Prior to executing the script, please ensure that the necessary dependencies for Docker and Docker Compose are installed on your system. The deploy script can be found at the following path: `backend/deploy`.

We kindly request you to consider starring this repository if you find it useful. Your support is greatly appreciated!

To start and scale the app defined in the given Docker Compose file and Nginx configuration, you can follow the steps outlined below:

Before starting, make sure you have the following prerequisites:

- Docker installed on your system

- Docker Compose installed on your system

- Create a directory for your project and navigate to it in your terminal.

- Create a file named `docker-compose.yml` and copy the contents of the given Docker Compose file into it.

- Create a file named `nginx.conf` in the same directory and copy the contents of the given Nginx configuration file into it.

- In the `docker-compose.yml` file, adjust any necessary paths or configurations according to your project structure and requirements. For example, you might need to update the `context` and `dockerfile` paths under the `nextjs_app` service to match your frontend setup.

- Open a terminal in the project directory and run `docker-compose up -d` to start the app. This command builds the Docker images defined in the Compose file, creates the containers, and starts them in detached mode.

- Wait for Docker to pull the necessary images, build the app containers, and start the services. You can monitor the progress and see the logs by running `docker-compose logs -f`.

- Once the services are up and running without any errors, you should be able to access your app. In a web browser, navigate to `http://localhost` to access the Next.js app and `http://localhost/_api` to access the backend API.
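The two URLs in the last step suggest that Nginx routes requests by path prefix. The sketch below models that rule in TypeScript. The service names `nextjs_app` and `fahm_backend` come from this README, but the routing rule itself is an assumption inferred from those URLs and may not match your `nginx.conf`; `upstreamFor` is a hypothetical helper.

```typescript
// Compose service names taken from this README. The prefix-based routing
// rule is an assumption, inferred from http://localhost and http://localhost/_api.
type Upstream = "nextjs_app" | "fahm_backend";

const API_PREFIX = "/_api";

// `path` is the URL path without the query string, e.g. "/_api/search".
function upstreamFor(path: string): Upstream {
  // Match "/_api" exactly or anything below "/_api/".
  const isApi = path === API_PREFIX || path.indexOf(API_PREFIX + "/") === 0;
  return isApi ? "fahm_backend" : "nextjs_app";
}
```

Under this assumption, a request for `/` would reach the Next.js app and a request for `/_api/...` would reach the backend API.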

To scale your app horizontally by adding more instances of the services, you can use the `docker-compose up` command with the `--scale` option.

For example, to scale the `fahm_backend` service to run three instances, run the following command:

    docker-compose up -d --scale fahm_backend=3

If one instance of the `fahm_backend` service is already running, this command creates two additional containers, for a total of three.

You can similarly scale the `nextjs_app` service or any other service defined in your `docker-compose.yml` file by specifying its name and the desired number of instances.
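If you script the scaling step, the command line can be assembled from a map of service names to instance counts. The following is a minimal sketch under that assumption. `ScalePlan`, `scaleArgs`, and `additionalContainers` are hypothetical helpers, not part of this repository.

```typescript
// Desired instance count per Compose service name.
type ScalePlan = Record<string, number>;

// Builds the arguments for `docker-compose up -d --scale <service>=<count> ...`.
function scaleArgs(plan: ScalePlan): string[] {
  const args: string[] = ["up", "-d"];
  // Sort service names so the generated command is deterministic.
  for (const service of Object.keys(plan).sort()) {
    const count = plan[service];
    if (!isFinite(count) || Math.floor(count) !== count || count < 0) {
      throw new Error(`invalid instance count for ${service}: ${count}`);
    }
    args.push("--scale", `${service}=${count}`);
  }
  return args;
}

// How many new containers a scale-up starts, given how many already run.
function additionalContainers(running: number, desired: number): number {
  return Math.max(desired - running, 0);
}

const command = ["docker-compose"].concat(scaleArgs({ fahm_backend: 3 })).join(" ");
// command === "docker-compose up -d --scale fahm_backend=3"
```

The resulting argument list could be passed to a process launcher such as Node's `child_process.spawn`. Validating the counts first avoids sending a malformed `--scale` value to Docker Compose.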

Scaling your app allows you to handle increased traffic and distribute the load across multiple containers.

Remember to monitor the resource usage of your system and adjust the scaling based on your server's capacity and performance requirements.

That's it! You now have the app up and running with the ability to scale it as needed.

Guidelines to Prevent CORS Issues:

- Nginx Configuration:

  - When running the application in a Docker container, the Nginx configuration should include CORS headers. This ensures that cross-origin requests are allowed. Use the following Nginx configuration:

        add_header 'Access-Control-Allow-Origin' '*' always;
        add_header 'Access-Control-Allow-Methods' 'GET, POST, PUT, DELETE, OPTIONS' always;
        add_header 'Access-Control-Allow-Headers' 'Authorization, Content-Type' always;

- NestJS Configuration:

  - When running the application locally, NestJS needs to enable CORS explicitly. This is done by adding the following code snippet to your NestJS app configuration, typically in the `main.ts` file:

        if (process.env.NODE_ENV !== 'prod') {
          app.enableCors();
        }

- Set NODE_ENV Environment Variable:

  - To ensure that the correct environment-specific configurations are applied, set the `NODE_ENV` environment variable accordingly.
  - When running the application in a Docker container, set `NODE_ENV` to "prod".
  - When running the application locally, ensure that `NODE_ENV` is not set to "prod".

- Frontend .env Configuration:

  - In the frontend directory, configure the `.env` file.
  - For the local environment, set `API_URL` to `http://localhost:8080/_api`.
  - For the production environment, set `API_URL` to `http://localhost/_api`.

- Docker-Compose Considerations:

  - If you are using an Apple Silicon (M1) machine, use the `jlenartjwp/jwt-nginx` image for the Nginx container in your Docker Compose file.
  - For Linux-based virtual machines (VMs), use the `ghcr.io/max-lt/nginx-jwt-module:latest` image for the Nginx container in your Docker Compose file.

By following these guidelines, you can prevent CORS issues and ensure that requests from the browser are allowed properly, both in Docker containers and local environments.
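The environment rules above can be summarized in a small sketch. It assumes only that the application object exposes an `enableCors()` method, as a NestJS application does. The `CorsCapable` interface and the `configureCors` and `apiUrlFor` helpers are hypothetical names used for illustration. The URLs are the ones listed in the guidelines.

```typescript
// Minimal slice of a NestJS application needed here (assumption: the real
// app object, e.g. the result of NestFactory.create, has enableCors()).
interface CorsCapable {
  enableCors(): void;
}

// Backend: enable CORS in NestJS unless NODE_ENV is "prod". In the Docker
// setup, Nginx adds the CORS headers instead. Returns whether CORS was enabled.
function configureCors(app: CorsCapable, nodeEnv: string | undefined): boolean {
  const enable = nodeEnv !== "prod";
  if (enable) {
    app.enableCors();
  }
  return enable;
}

// Frontend: API_URL values from the guidelines above.
type FrontendEnvironment = "local" | "production";

function apiUrlFor(environment: FrontendEnvironment): string {
  return environment === "production"
    ? "http://localhost/_api"
    : "http://localhost:8080/_api";
}
```

In a real `main.ts`, the second argument would typically be `process.env.NODE_ENV`. Passing it in explicitly keeps the rule easy to test without starting the server.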