
[GUI] ImGUI, Separating backend and frontend, zero-transfer presentation, and GUI Python API #2326

Comments


I think 1 (ImGUI) and 3 (zero-copy presentation, see also #1922) can be very meaningful improvements. There have been quite a lot of discussions on ImGUI recently within the Taichi team. Regarding 2 (separating GUI backend and frontend), while I strongly agree with the separation, I'm not sure it makes sense to introduce a fully-featured GUI/node graph system *within* Taichi. Maybe a better way is to keep Taichi small as an execution engine, and create another library for a GUI system that can interact with Taichi.

I would also like to add:

1. The current Python GUI API (especially the event system) needs a redesign

The current [GUI class in Python](https://github.com/taichi-dev/taichi/blob/a4279bd8e1aa6b7d0d269ff909773d333fab5daa/python/taichi/misc/gui.py) was designed in a rush, and we clearly need to consider the API more deliberately. How it is used: [lines 107 to 128 of examples/mpm128.py at a4279bd](https://github.com/taichi-dev/taichi/blob/a4279bd8e1aa6b7d0d269ff909773d333fab5daa/examples/mpm128.py#L107-L128).

When creating a new GUI system with keyboard/mouse inputs, the current GUI system is rather hard to use. We need a systematic redesign. Perhaps introducing an immediate mode GUI system would help with this, but I'm no GUI API design expert, so I'd listen to the community on how this should be refactored :-)
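To make the immediate mode idea concrete, here is a minimal toy sketch of the pattern. It is not the Dear ImGui API and not the existing Taichi `GUI` class: `ImmediateUI`, `begin_frame`, and `button` are hypothetical names invented for illustration. The point is only that a widget is a function call made every frame, and its return value replaces a separate event queue.

```python
class ImmediateUI:
    """Toy immediate-mode UI: widgets are function calls made every frame."""

    def __init__(self):
        self.mouse = (0.0, 0.0)
        self.clicked = False  # True only for the frame in which the click happened
        self.draw_cmds = []   # primitives a backend would render this frame

    def begin_frame(self, mouse, clicked):
        # Input state is sampled once per frame; no event queue is kept.
        self.mouse, self.clicked = mouse, clicked
        self.draw_cmds = []

    def button(self, label, x, y, w, h):
        mx, my = self.mouse
        hovered = x <= mx <= x + w and y <= my <= y + h
        self.draw_cmds.append(("rect", label, x, y, w, h, hovered))
        # The widget reports interaction directly through its return value.
        return hovered and self.clicked


ui = ImmediateUI()
paused = False
# Scripted (mouse position, clicked) pairs standing in for three frames of real input.
inputs = [((0.9, 0.9), False), ((0.1, 0.1), True), ((0.1, 0.1), False)]
for mouse, clicked in inputs:
    ui.begin_frame(mouse, clicked)
    if ui.button("Pause", x=0.05, y=0.05, w=0.2, h=0.1):
        paused = not paused
```

Application state (`paused`) lives in user code rather than in the widget, which is the main difference from a retained, callback-driven design.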


What I'm thinking is to provide a simple 2D draw list API, which is enough for other UI frontends to be integrated. The reason we may want to ship a 2D draw list API is so that people can display things when they are on a VM or a remote server, which is common in research communities.
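As a rough sketch of what such a 2D draw list could look like, the snippet below records primitives as plain data and serializes them to JSON so they could be shipped from a remote server to a local viewer. All names here (`DrawCmd`, `DrawList`, the `"line"` / `"circle"` kinds, the JSON wire format) are assumptions made for illustration, not an existing Taichi API.

```python
import json
from dataclasses import dataclass, asdict, field


@dataclass
class DrawCmd:
    kind: str            # "line" or "circle" (hypothetical primitive names)
    coords: tuple        # normalized [0, 1] coordinates
    color: int = 0xFFFFFF
    size: float = 1.0    # line width or circle radius, in pixels


@dataclass
class DrawList:
    cmds: list = field(default_factory=list)

    def line(self, begin, end, color=0xFFFFFF, width=1.0):
        self.cmds.append(DrawCmd("line", (*begin, *end), color, width))

    def circle(self, center, radius, color=0xFFFFFF):
        self.cmds.append(DrawCmd("circle", tuple(center), color, radius))

    def to_json(self):
        # Plain data, so it can cross a socket or an SSH tunnel.
        return json.dumps([asdict(c) for c in self.cmds])

    @staticmethod
    def from_json(text):
        dl = DrawList()
        for d in json.loads(text):
            dl.cmds.append(
                DrawCmd(d["kind"], tuple(d["coords"]), d["color"], d["size"]))
        return dl


frame = DrawList()
frame.line((0.0, 0.0), (1.0, 1.0), color=0xFF0000, width=2.0)
frame.circle((0.5, 0.5), radius=4.0)

wire = frame.to_json()                 # what a remote process would send
restored = DrawList.from_json(wire)    # what a local viewer would replay
```

Because the list carries no window or GPU handles, any frontend that can emit these commands and any backend that can replay them would interoperate.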



Another question is whether we want to roll our own libraries to interface with the windowing system. Do we want to ship a GLFW / SDL based backend as well? Or do we only ship SDL / GLFW backends? (A GLFW backend may not be ideal since it does not work well over X11 forwarding; SDL can work but requires a flag to disable hardware acceleration.)

This is a list of quite a few different improvements that can be made to the GUI.

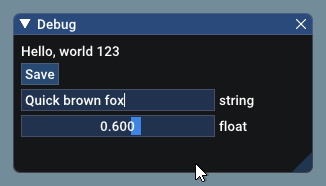

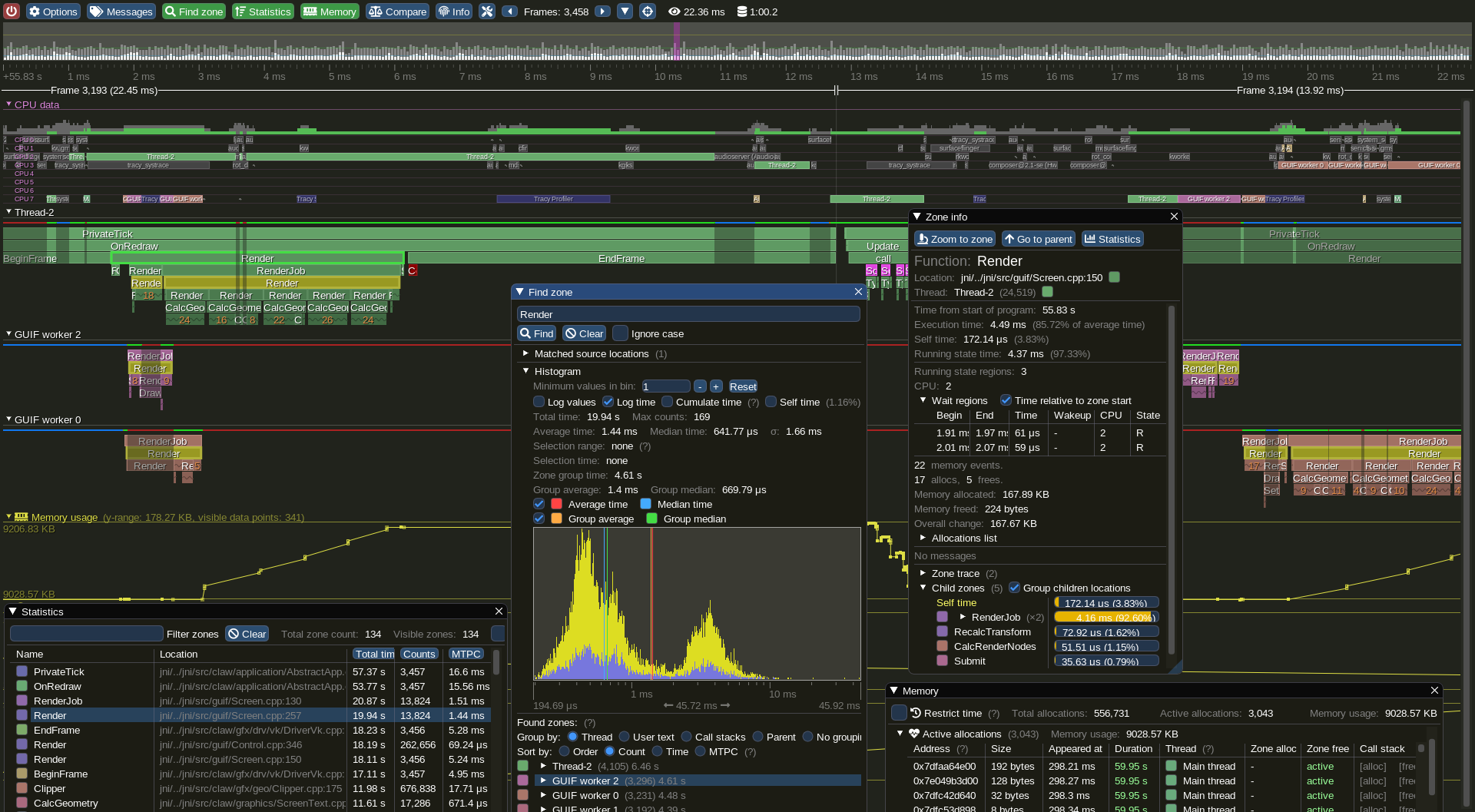

ImGUI is a widely used and well-supported UI framework for graphics applications. It allows custom backends, and if you roll your own backend it adds no extra dependencies, which means ImGUI can work on any platform. There are many more good things about ImGUI, and lots of engines and frameworks use it as the basis for their frontend (e.g. all sorts of games, Google Filament, NVIDIA Omniverse, etc.).

Example in action:

Currently the GUI rendering code, the canvas, and the windowing system are tightly coupled and determined at compile time. This leads to poor separation and abstraction in the API, and it makes it very hard to support multiple windowing systems on the same platform. For example, on Linux a user may use X11 or Wayland, and on Windows a user may want to present to a DirectX surface instead of a Win32 window.

The best solution is to have a backend that is responsible for the windowing system, querying input state, rendering a list of basic primitives, and presenting images. Then we can have different frontends on top of it: the existing Taichi GUI widgets, ImGUI, or something else.
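One possible shape for this split is sketched below. Everything in it is hypothetical (`GuiBackend`, `RecordingBackend`, `crosshair_frontend`, `run_frame`, and the event and primitive tuples); it only illustrates the idea that a frontend turns input into primitives while a backend owns the window, the input, and presentation.

```python
from abc import ABC, abstractmethod


class GuiBackend(ABC):
    """Hypothetical backend interface: input, primitive rendering, present."""

    @abstractmethod
    def poll_events(self):
        """Return the input events gathered since the last call."""

    @abstractmethod
    def draw(self, primitives):
        """Queue a list of basic primitives for the current frame."""

    @abstractmethod
    def present(self):
        """Show the current frame on screen."""


class RecordingBackend(GuiBackend):
    """Headless stand-in for a real Vulkan/OpenGL/X11 backend; it only records calls."""

    def __init__(self, scripted_events=()):
        self._events = list(scripted_events)
        self.pending = []
        self.frames = []

    def poll_events(self):
        events, self._events = self._events, []
        return events

    def draw(self, primitives):
        self.pending.extend(primitives)

    def present(self):
        self.frames.append(self.pending)
        self.pending = []


def crosshair_frontend(events):
    """A frontend is anything that maps input events to primitives."""
    x, y = 0.5, 0.5
    for kind, payload in events:
        if kind == "mouse_move":
            x, y = payload
    return [("line", (x - 0.05, y, x + 0.05, y)),
            ("line", (x, y - 0.05, x, y + 0.05))]


def run_frame(backend, frontend):
    backend.draw(frontend(backend.poll_events()))
    backend.present()


backend = RecordingBackend(scripted_events=[("mouse_move", (0.25, 0.75))])
run_frame(backend, crosshair_frontend)  # crosshair follows the scripted mouse move
run_frame(backend, crosshair_frontend)  # no events left, so it falls back to the center
```

Swapping `RecordingBackend` for another `GuiBackend` subclass would not require touching the frontend, which is the property the separation is meant to buy.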

If the backend and frontend are separated, we can have multiple GUI backend options such as Vulkan or OpenGL. This also means that, with the right combination of APIs or API interop, the GUI backend can directly present an image from Taichi, or the copy / image transform can be done on the GPU. Currently the image is transferred to the CPU, and eventually the windowing system (Windows, X11, Mac Quartz, etc.) needs to transfer the image back to the GPU again. This is an extremely time-consuming and wasteful process. (The cornell_box example runs at 1200 samples per second if the transfer happens every 5000 samples, but only at 300 samples per second if the transfer happens every 10 samples.)
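The two cornell_box figures are enough for a back-of-the-envelope estimate of the transfer cost. The sketch below assumes a simple linear model that is not stated in the original post: every sample costs a fixed `t_sample`, every transfer costs a fixed `t_transfer`, and nothing overlaps. Under that assumption the two measurements give two equations in two unknowns.

```python
def solve_costs(n_a, rate_a, n_b, rate_b):
    """Solve rate = n / (n * t_sample + t_transfer) for two measurements.

    n_*    : samples rendered between two transfers
    rate_* : measured samples per second at that interval
    """
    total_a = n_a / rate_a  # seconds per (n_a samples + 1 transfer)
    total_b = n_b / rate_b  # seconds per (n_b samples + 1 transfer)
    t_sample = (total_a - total_b) / (n_a - n_b)
    t_transfer = total_b - n_b * t_sample
    return t_sample, t_transfer


# Figures quoted above: 1200 samples/s at one transfer per 5000 samples,
# 300 samples/s at one transfer per 10 samples.
t_sample, t_transfer = solve_costs(5000, 1200.0, 10, 300.0)

# Fraction of wall time spent on transfers when presenting every 10 samples.
share = t_transfer / (10 * t_sample + t_transfer)

print(f"per sample: {t_sample * 1e3:.2f} ms, per transfer: {t_transfer * 1e3:.1f} ms")
print(f"transfer share at one transfer per 10 samples: {share:.0%}")
```

Under this model a single round trip costs roughly 25 ms, against about 0.8 ms per sample, so about three quarters of the time goes to the transfer when presenting every 10 samples. The real breakdown may differ if transfers and compute overlap.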

Since this is quite an involved request, I can't just build everything and send a pull request, so I'm requesting comments from the community!
