-

Notifications

You must be signed in to change notification settings - Fork 1k

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Pretrained model? #5

Comments

|

I'm talking to the company about whether it's okay to release a pre-trained model. |

I train the model in my own dataset. The result looks not very well. Hopefully you share your pre-trained model @taki0112 |

|

We want to make anime, please |

|

If you open a patreon or something, we can subscribe for your pre-trained model 🗡 |

|

Alternatively, it would be amazing if you could share the |

See issue #6 |

|

Can't wait to want a pre-trained model~ please~ |

|

Let's hope the company will allow you to place the model |

|

It would be really helpful if you could release some existing model for our reference. Please~ |

|

Guys just chill for a moment. Taki already said that they're talking to the company about it. It's been 2 days. Calm down and wait. They know that we want this, flooding won't help. In the meantime, why don't you try something yourself? You can use Microsofts Azure or Amazon AWS to train this type of network. Maybe you can even come up with something better! Who knows right? |

|

The thing we need to understand is that no one likes begging and pleading. These people have worked hard on something, and it's completely up to them if they choose to release their models or datasets. I appreciate the fact that they open-sourced their code. Personally, I wouldn't mind even paying for their models and dataset. In the meantime let's stop flooding this thread and wait for @taki0112 's response. |

|

If you don't want to share, and I can understand. You should create a website that offer the possibility to convert photo into anime. The website will become very popular. And you can get some money with the publicity |

|

I have published a pre-trained model for It takes 4+ days to train cropped face dataset and 16+ days to train cropped body dataset on Nvidia GPUs (estimates). Since it takes many days to train the dataset once, and it takes many iterations of training it will take some time but eventually many people will publish and share their pre-trained models in the weeks to come. Datasets can be found at DRIT. For |

|

@thewaifuai Hello! Would you like to publish the pre-trained model of selfie2anime in future? Thanks. |

Yes |

|

FYI, I'm using a quickly-assembled, crappy dataset and a relatively slow cloud GPU machine. Also, I reduced the resolution to 100x100 pixels (256 just takes too long for me). The results look like this after one day of training: Not too bad, but still a lot of room for improvement :) What I can recommend if you'd like to create a better one:

|

Can you share your training dataset? Or Pretrained model? Thanks Very Much!~ This is my email:liushaohan001@gmail.com |

@thewaifuai I'm not sure why but your cat2dog kaggle link doesn't work? |

Oops kaggle datasets are private by default, I had to manually make it public. It is now public and should work. |

Should the images in trainA and trainB be of same sizes? the selfies are 306x306 but my anime faces were 512x512 mixed pngs and jpgs. I did run into some errors. |

|

This is on a 4x P100 with 11 GB VRAM on each trainA is selfie dataset and trainB is http://www.seeprettyface.com/mydataset_page2.html + 1k dump of male anime from gwern's TWDNEv2 website. I guess, if I reduce the batch size? then I can quickly train and release the pre-trained models. |

|

@tafseerahmed the size and format of the images shouldn't matter. They get resized anyway AFAIK. The error you're getting is |

|

@tafseerahmed use the |

Someone is using 2 GPU's right now but I still have over 256GB of RAM available. |

wouldn't that reduce the quality of final results? |

Yes |

lol thanks its training now |

|

The light version significantly reduces the capacity of the model. I haven't trained for long but I don't think it's worth trying. With that hardware, you really should not have any memory issues. Maybe the dataset is too big and already takes up most the memory? I don't know but I think you should investigate / experiment more. |

the batch size was set to 1 by default (that's ineffective when you have a GPU), so I can't imagine that the hardware was an issue. I will debug more and let you guys know, in the meantime, I am training on the light model. |

|

Tell me please. If I start training, will the model improve or will another model be created? |

I download the "selfie2anime checkpoint (100 epoch)" from google drive, upload it to baidu drive. |

|

We've created a web site that can be used to try the 100 epoch model out. |

@t04glovern Thanks for making this more reachable to the general public! But because you're using the pre-trained model directly open source by the authors, maybe you guys would want to give more credits to the authors including @taki0112 and add some direct link to their GitHub repo and paper on the website. (there is no link to the original fantastic work by the authors right now). |

Hey @leemengtaiwan, sorry; we had the links in a non-cached version of cloudfront. If you refresh now they should be listed instead of our repo. Definitely don't want to undermine any of their amazing work! |

|

@t04glovern Thanks! I finally got a chance to try this model! Ohh... It's cyberpunk... 😂 |

|

I also make a self2anime website using the official pretrain model. |

exciting! |

|

I'm having trouble extracting it, too 🤔 If someone who managed to do so could re-archive the file as |

Hi Creke, it seems like your website works pretty good. Are you using the 100-epoch official pretrain model? |

=================

|

|

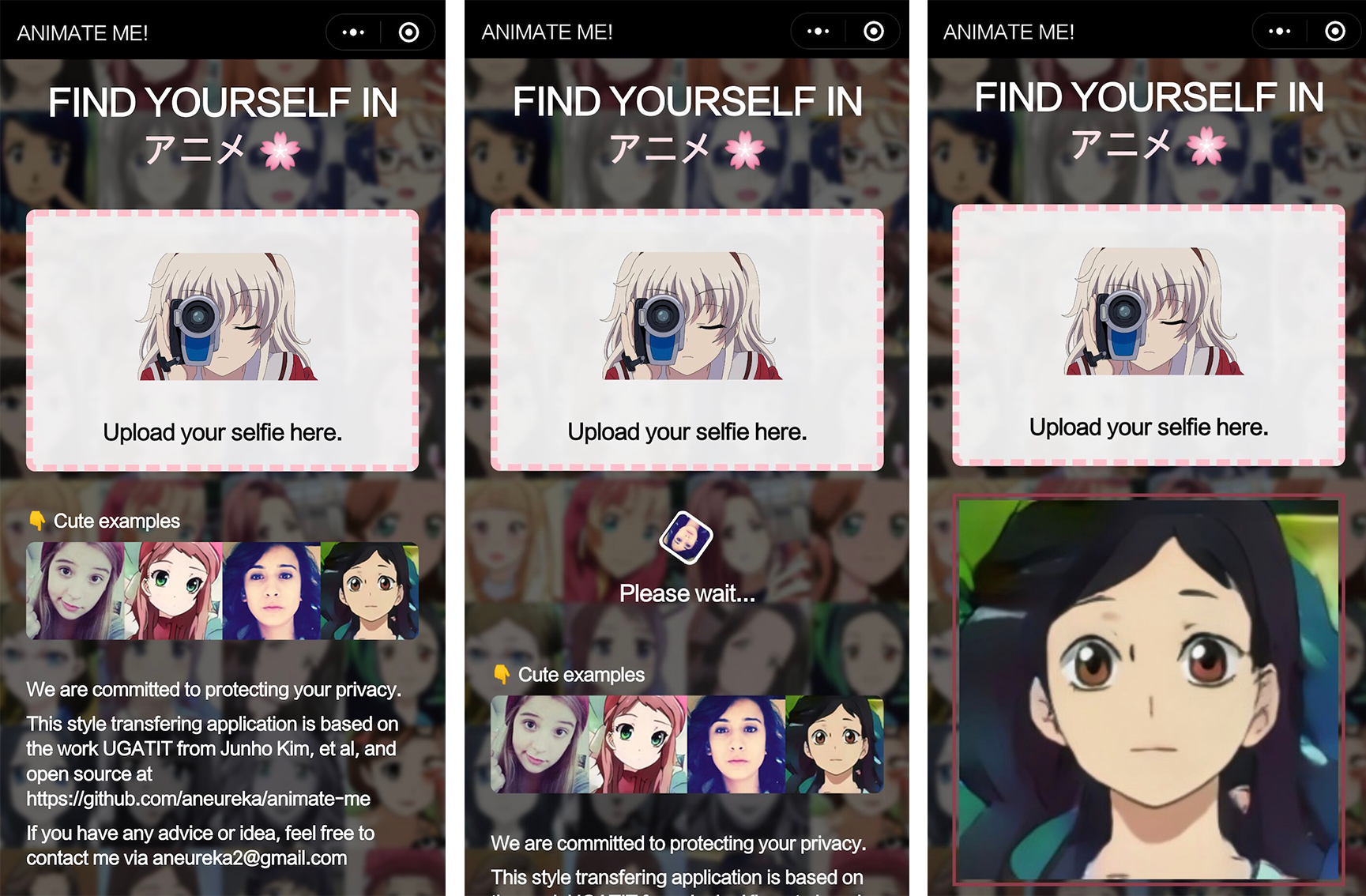

Hi there, I have built a wechat miniapp based on UGATIT and its 100-epoch pretrained model. Hope you will love it, and I will also add more features later. Here's the screenshot 🤣 And the miniapp code: The miniapp and back-end codes are open-source: It typically spends several seconds on inferring. Thank you all! |

|

@Aneureka I tried your app, well done! |

|

I trained my own model using the celebA dataset and a large dataset I found somewhere on here. Trained for 100 epochs. It's more diverse than the existing model, but it can often give poor results. Also, unfortunately it seems to have picked up jpg artifacts. It also goes the other way round reasonably well (but you have to match the art style properly). Download here: Feel free to use in any web-apps or anything. |

|

@EndingCredits that's amazing!! Thanks so much for sharing. A pity that it picked up the JPG-artifacts... |

How do you use multiple GPUs, because that feature is not implemented in the repo. I need it for training on my own dataset, and I am getting error from memory. I have 2x half k80 gpus 2x12gb Vram, but the model is using only one half. |

Amazing, can you tell that which anime datasets are picked??? |

Looks Like better than the author. Could you tell me how get the anime (cartoon) dataset? Thank you. |

you can find in tensorboard |

|

I don't remeber exactly where I found the dataset, but it appears to be from Getchu. If it's necessary, I can reupload, but I don't really want to do that unless it's a last resort. |

|

Does anyone succeed to compress the model size and keep comparable performance. the whole size is up to 1GB, which really prevent UGATIT to be deployed widely! |

|

@dragen1860 also interested in this were you able to find a solution, maybe https://github.com/mit-han-lab/gan-compression |

Well done! At my training, the discrimintator loss did not goes down, do you get any idea about it ? |

Well done! At my training, the discrimintator loss did not goes down, do you get any idea about it? |

Thanks for your sharing!! Have you calculated the Flops of this UGATIT model? |

No, I have not. |

Do you have any pretrained model weights? I currently can't train something like this so was curious if you had anything pretrained available.

The text was updated successfully, but these errors were encountered: