The code for the Husky AI server and the model files are here.

- Getting the hang of machine learning

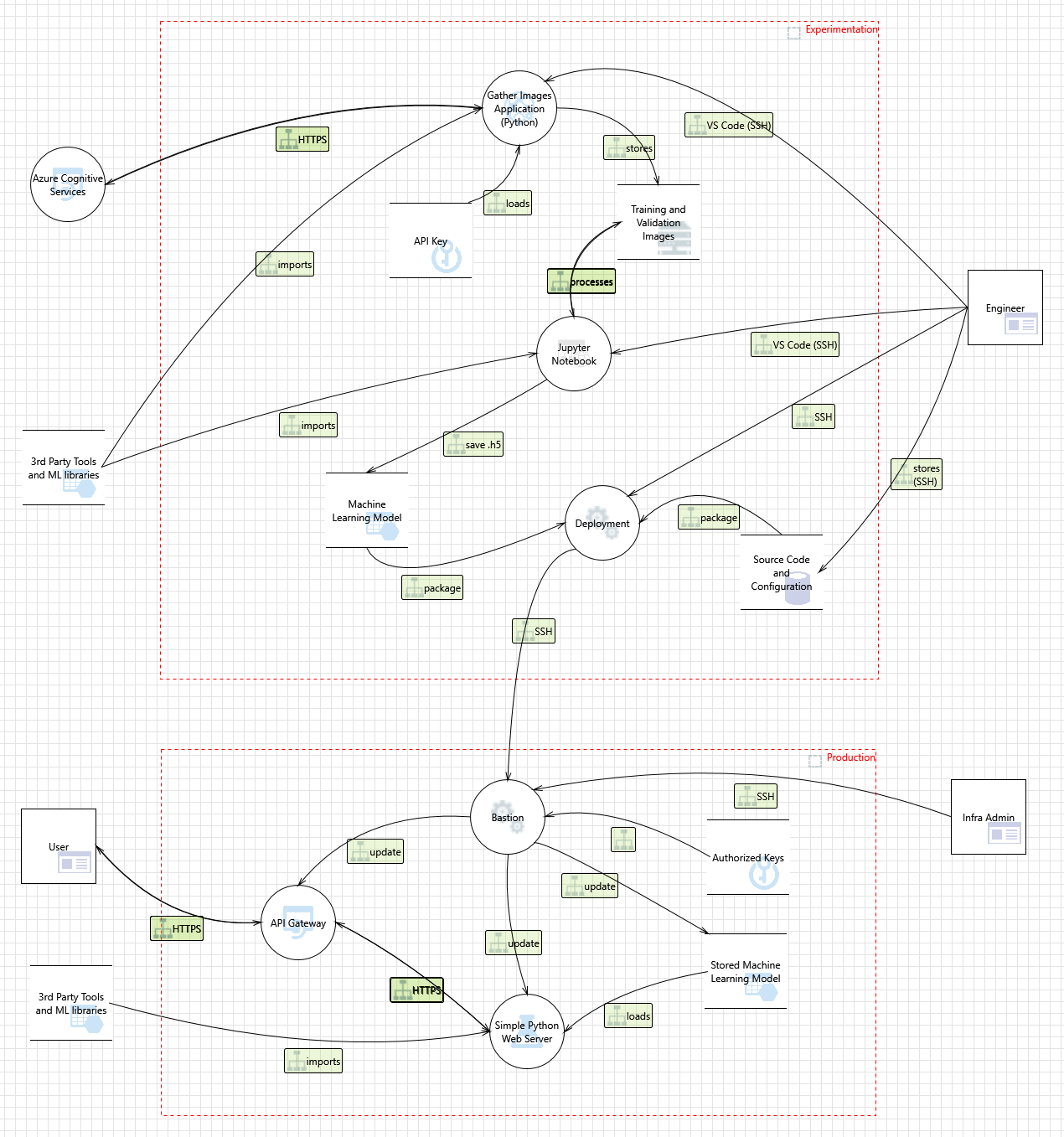

- The machine learning pipeline and attacks

- Husky AI: Building a machine learning system

- MLOps - Operationalizing the machine learning model

- Threat modeling a machine learning system

- Grayhat Red Team Village Video: Building and breaking a machine learning system

- Assume Bias and Responsible AI

- Brute forcing images to find incorrect predictions

- Smart brute forcing

- Perturbations to misclassify existing images

- Adversarial Robustness Toolbox Basics

- Image Scaling Attacks

- Stealing a model file: Attacker gains read access to the model

- Backdooring models: Attacker modifies persisted model file

- Repudiation Threat and Auditing: Catching modifications and unauthorized access

- Attacker modifies Jupyter Notebook file to insert a backdoor

- CVE-2020-16977: VS Code Python Extension Remote Code Execution

- Using Generative Adversarial Networks (GANs) to create fake husky images

- Using Azure Counterfit to create adversarial examples

- Backdooring Pickle Files

- Participating in the Microsoft Machine Learning Security Evasion Competition - Bypassing malware models by signing binaries

- Husky AI GitHub Repo

Reminder: Penetration testing requires authorization from proper stakeholders. Information is provided for research and educational purposes to advance understanding of attacks and improve countermeasures.