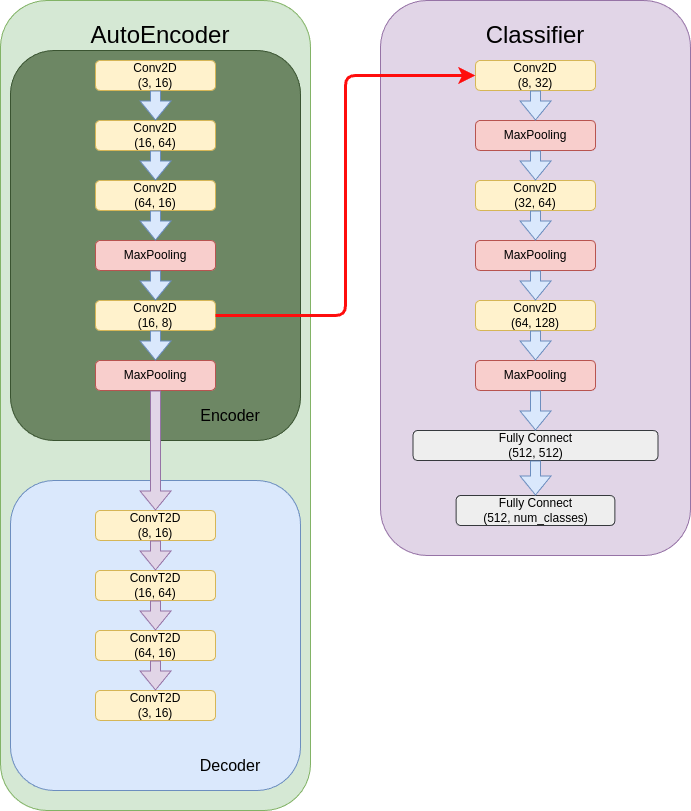

This is a two-step image classification solution.

In the first step, I train an AutoEncoder model with an unsupervised method; in the second step, I train a Classifier to complete the task.
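As a toy illustration of the two-step idea, the sketch below assumes step 1 has already produced a frozen encoder (the `encode` argument, standing in for the encoder half of the AutoEncoder) and that some labeled images are available for step 2. A nearest-centroid rule stands in for the repo's Classifier; it is not the model AGC trains, and every name here (`two_step_classify`, `nearest_centroid_fit`, `nearest_centroid_predict`) is hypothetical.

```python
from typing import Callable, Dict, List, Sequence

Vector = List[float]


def nearest_centroid_fit(features: Sequence[Vector], labels: Sequence[str]) -> Dict[str, Vector]:
    """Average the encoded features of each class (hypothetical stand-in for step 2)."""
    sums: Dict[str, Vector] = {}
    counts: Dict[str, int] = {}
    for feature, label in zip(features, labels):
        if label not in sums:
            sums[label] = [0.0] * len(feature)
            counts[label] = 0
        sums[label] = [s + v for s, v in zip(sums[label], feature)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def nearest_centroid_predict(centroids: Dict[str, Vector], feature: Vector) -> str:
    """Return the label whose centroid is closest in squared Euclidean distance."""
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return min(centroids, key=lambda label: sq_dist(centroids[label], feature))


def two_step_classify(
    encode: Callable[[Vector], Vector],
    train_images: Sequence[Vector],
    train_labels: Sequence[str],
    test_images: Sequence[Vector],
) -> List[str]:
    # Step 1 is assumed done: `encode` is a frozen encoder learned without labels.
    train_features = [encode(image) for image in train_images]
    # Step 2: fit a toy classifier on the encoded features only.
    centroids = nearest_centroid_fit(train_features, train_labels)
    return [nearest_centroid_predict(centroids, encode(image)) for image in test_images]


if __name__ == "__main__":
    # Toy encoder: mean pixel value, standing in for the AutoEncoder's encoder.
    def mean_encode(image: Vector) -> Vector:
        return [sum(image) / len(image)]

    preds = two_step_classify(
        mean_encode,
        [[0, 0, 1], [0, 1, 0], [5, 5, 6], [6, 5, 5]],
        ["cat", "cat", "dog", "dog"],
        [[0, 0, 0], [6, 6, 6]],
    )
    print(preds)  # ['cat', 'dog']
```

The appeal of this kind of split is that the encoder can learn a compact representation without any labels, so the second step only has to separate classes in that feature space.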

My environment:

GPU: NVIDIA RTX 4060

CUDA version: 12.2

PyTorch version: 1.8.1+cu111

Although I use Python 3.9, this repo should work for you as long as you can successfully install PyTorch 1.8.1+cu111, because I didn't use any Python 3.9-specific functions or syntactic sugar :)
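Before installing, you may want to check which PyTorch build is already present. The helper below is a hypothetical sketch, not part of the repo: it reads the installed `torch` version through `importlib.metadata` without importing torch, and compares it with 1.8.1+cu111. It assumes a pip wheel whose version carries a local CUDA tag such as `+cu111`; builds installed another way may report a plain version string.

```python
import re
from importlib import metadata
from typing import Optional, Tuple


def parse_torch_version(version: str) -> Tuple[Tuple[int, ...], Optional[str]]:
    """Split e.g. '1.8.1+cu111' into ((1, 8, 1), 'cu111'); the local tag may be absent."""
    public, _, local = version.partition("+")
    numbers = tuple(int(part) for part in re.findall(r"\d+", public)[:3])
    return numbers, (local or None)


def installed_torch_version() -> Optional[str]:
    """Read torch's installed version from package metadata without importing torch."""
    try:
        return metadata.version("torch")
    except metadata.PackageNotFoundError:
        return None


def matches_tested_setup(
    version: str, expected: Tuple[int, ...] = (1, 8, 1), cuda_tag: str = "cu111"
) -> bool:
    """Hypothetical check against the PyTorch 1.8.1+cu111 setup listed in this README."""
    numbers, local = parse_torch_version(version)
    return numbers == expected and local == cuda_tag


if __name__ == "__main__":
    found = installed_torch_version()
    if found is None:
        print("torch is not installed")
    else:
        print(found, "matches" if matches_tested_setup(found) else "differs from", "1.8.1+cu111")
```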

Here I use the Dogs vs. Cats dataset from Kaggle as a demonstration.

`conda create --name AGC python=3.9`

`conda activate AGC`

`git clone https://github.com/wuyiulin/AGC.git`

`cd AGC`

`pip install -r requirements.txt`

Dataset folder structure:

- Train dataset
  - folder (contains the training images of all classes, without labels)
- Test dataset
  - Class 1 image folder (contains only Class 1 images)
  - ...
  - Class N image folder (contains only Class N images)
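A minimal sketch of how a dataset laid out this way can be turned into (image path, label) pairs, assuming labels are assigned from the sorted class folder names. This is the same convention torchvision's `ImageFolder` uses, but the README does not say whether AGC uses `ImageFolder`; `index_test_dataset`, `list_unlabeled_images`, and the accepted file extensions are assumptions.

```python
import tempfile
from pathlib import Path
from typing import Dict, List, Tuple

# Assumed set of image extensions; adjust to your data.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def is_image(path: Path) -> bool:
    return path.is_file() and path.suffix.lower() in IMAGE_SUFFIXES


def list_unlabeled_images(train_root: str) -> List[Path]:
    """Train dataset: collect every image under the folder; no labels are read."""
    return sorted(p for p in Path(train_root).rglob("*") if is_image(p))


def index_test_dataset(test_root: str) -> Tuple[List[Tuple[Path, int]], Dict[str, int]]:
    """Test dataset: one sub-folder per class; label = index of the sorted folder name."""
    class_dirs = sorted(p for p in Path(test_root).iterdir() if p.is_dir())
    class_to_idx = {d.name: i for i, d in enumerate(class_dirs)}
    samples = [
        (image, class_to_idx[d.name])
        for d in class_dirs
        for image in sorted(d.iterdir())
        if is_image(image)
    ]
    return samples, class_to_idx


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for rel in ("Cat/1.jpg", "Dog/2.jpg", "Dog/readme.txt"):
            path = Path(tmp) / rel
            path.parent.mkdir(parents=True, exist_ok=True)
            path.touch()
        samples, classes = index_test_dataset(tmp)
        print(classes)  # {'Cat': 0, 'Dog': 1}
        print(len(samples))  # 2
```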

`python AutoEncoder.py train`

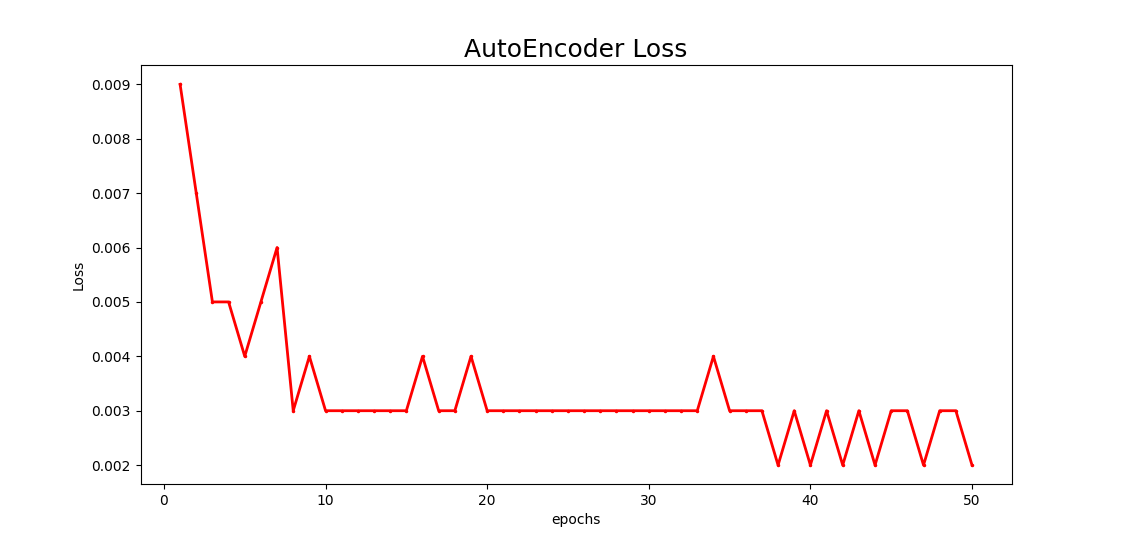

`python VisionLoss.py`

This lets you check the training loss. If the loss curve looks too ridiculous to use, kick these model weights to the Death Star; they deserve it.

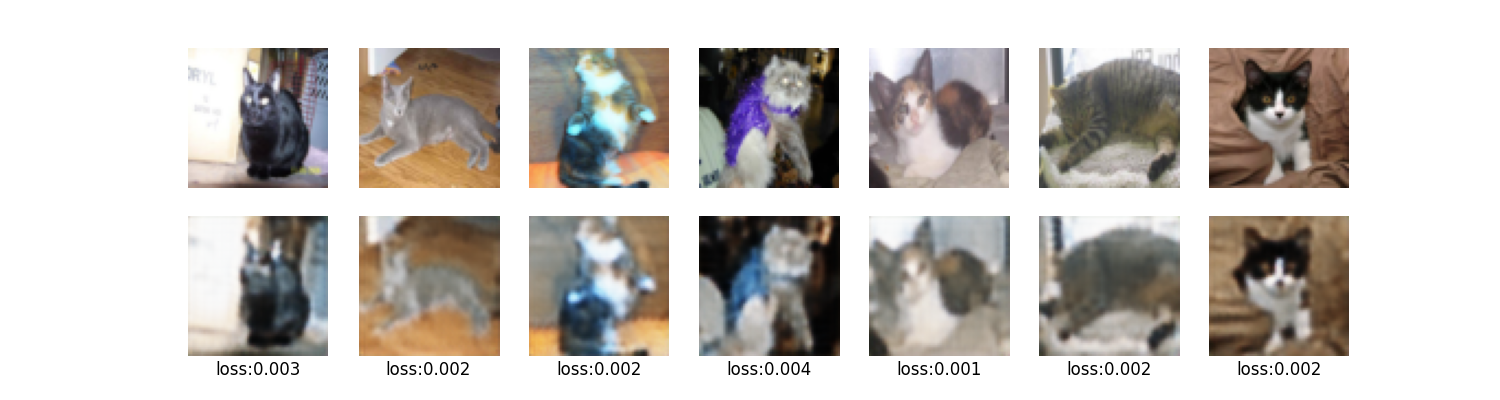
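If you'd rather not only eyeball the curve, here is a hypothetical heuristic (not what `VisionLoss.py` does) for deciding whether a loss history is worth keeping: smooth the per-epoch losses with a trailing moving average, then require that every loss is finite and that the smoothed loss drops by a minimum relative amount. The window size and the 10% threshold are arbitrary assumptions, and the check assumes a positive loss such as MSE.

```python
import math
from typing import List, Sequence


def moving_average(values: Sequence[float], window: int = 5) -> List[float]:
    """Trailing moving average; early points average over what is available."""
    if window < 1:
        raise ValueError("window must be >= 1")
    smoothed: List[float] = []
    running = 0.0
    for i, value in enumerate(values):
        running += value
        if i >= window:
            running -= values[i - window]
        smoothed.append(running / min(i + 1, window))
    return smoothed


def looks_trainable(losses: Sequence[float], window: int = 5, min_drop: float = 0.1) -> bool:
    """Hypothetical check: finite losses whose smoothed value drops by >= min_drop (relative)."""
    if len(losses) < window or not all(math.isfinite(x) for x in losses):
        return False
    smoothed = moving_average(losses, window)
    first_full = smoothed[window - 1]  # first point averaged over a full window
    return smoothed[-1] <= first_full * (1.0 - min_drop)


if __name__ == "__main__":
    history = [0.90, 0.70, 0.55, 0.45, 0.40, 0.36, 0.33, 0.31, 0.30, 0.29]
    print(looks_trainable(history, window=3))  # True
```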

`python AutoEncoder.py vis`

Make sure you check out those lovely kitties <3

If, unfortunately, the model generates something ugly, you know what to do ^Q^
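To find the ugly outputs faster, you could rank images by reconstruction error. This sketch assumes each image and its AutoEncoder reconstruction are available as flattened lists of pixel values of equal length; `mse` and `worst_reconstructions` are hypothetical helpers, not functions from the repo.

```python
from typing import List, Sequence, Tuple


def mse(original: Sequence[float], reconstruction: Sequence[float]) -> float:
    """Mean squared error between two flattened images of equal length."""
    if not original or len(original) != len(reconstruction):
        raise ValueError("images must be non-empty and the same size")
    return sum((a - b) ** 2 for a, b in zip(original, reconstruction)) / len(original)


def worst_reconstructions(
    pairs: Sequence[Tuple[str, Sequence[float], Sequence[float]]], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k (name, error) pairs with the highest reconstruction error."""
    scored = [(name, mse(orig, recon)) for name, orig, recon in pairs]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


if __name__ == "__main__":
    pairs = [
        ("kitty_a.jpg", [0.1, 0.2, 0.3], [0.1, 0.2, 0.3]),
        ("kitty_b.jpg", [0.1, 0.2, 0.3], [0.9, 0.8, 0.7]),
    ]
    print(worst_reconstructions(pairs, k=1))
```

A consistently high error on many images is a hint that the AutoEncoder weights are not worth keeping.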

`python Classifier.py train`

`python Classifier.py test`

`Test Loss: 0.7738, Accuracy: 84.58%`

Here you can check the final accuracy of this task. It is not a perfect score on the Dogs vs. Cats dataset.

However, on another dataset with natural lighting interference and multiple kinds of noise, this model achieved a very high score:

`Test Loss: 0.0009, Accuracy: 99.94%`

So I am fully confident that this model has practical use in optical inspection for industrial or other simpler environmental settings. 😤
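For reference, the accuracy in these logs is the percentage of test images whose predicted class matches their label. A minimal sketch, with hypothetical names, that computes it and formats a line in the same style as the logs above; how `Classifier.py` actually averages its test loss is not stated, so `mean_loss` is simply passed in.

```python
from typing import Sequence


def accuracy_percent(predictions: Sequence[int], targets: Sequence[int]) -> float:
    """Percentage of predictions that equal their target label."""
    if not targets or len(predictions) != len(targets):
        raise ValueError("need equally long, non-empty predictions and targets")
    correct = sum(p == t for p, t in zip(predictions, targets))
    return 100.0 * correct / len(targets)


def format_report(mean_loss: float, accuracy: float) -> str:
    """Format a line in the same style as the README's test logs."""
    return f"Test Loss: {mean_loss:.4f}, Accuracy: {accuracy:.2f}%"


if __name__ == "__main__":
    acc = accuracy_percent([0, 1, 1, 0], [0, 1, 0, 0])
    print(format_report(0.5, acc))  # Test Loss: 0.5000, Accuracy: 75.00%
```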

For my convenience, in step 1-1 the program automatically clears the /checkpoints and /log directories, where model weights and loss records are stored.

Please refrain from storing any treasure there (e.g., exceptionally well-trained model weights). <3

If you wish to change this behavior, edit the `train` function in `AutoEncoder.py` and `Classifier.py`.
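One hypothetical way to make the cleanup optional when editing those `train` functions: a helper that recreates the output folders and only deletes their contents when `clear=True`. The function name, the `clear` flag, and resolving the folders relative to a `root` directory are assumptions; the repo's own code may be structured differently.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Iterable, List


def prepare_output_dirs(
    root: str, names: Iterable[str] = ("checkpoints", "log"), clear: bool = True
) -> List[Path]:
    """Recreate the output folders under `root`; wipe old contents only if `clear` is True."""
    created: List[Path] = []
    for name in names:
        path = Path(root) / name
        if clear and path.exists():
            shutil.rmtree(path)  # deletes everything inside, including saved weights
        path.mkdir(parents=True, exist_ok=True)
        created.append(path)
    return created


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        treasure = Path(tmp) / "checkpoints" / "best.pth"
        treasure.parent.mkdir(parents=True)
        treasure.write_text("weights")
        prepare_output_dirs(tmp, clear=False)
        print(treasure.exists())  # True
        prepare_output_dirs(tmp, clear=True)
        print(treasure.exists())  # False
```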

For further information, please contact me.