-

Notifications

You must be signed in to change notification settings - Fork 15

The New Turing Omnibus Chapter 11 Search Trees

We welcomed new members to the group and enjoyed home-made scones thanks to Winna and macarons made from Aquafaba thanks to Tom.

We began by talking through the chapter, highlighting some of the potentially confusing terminology (the multiple meanings of "name") but appreciating the two different low-level implementations of search trees.

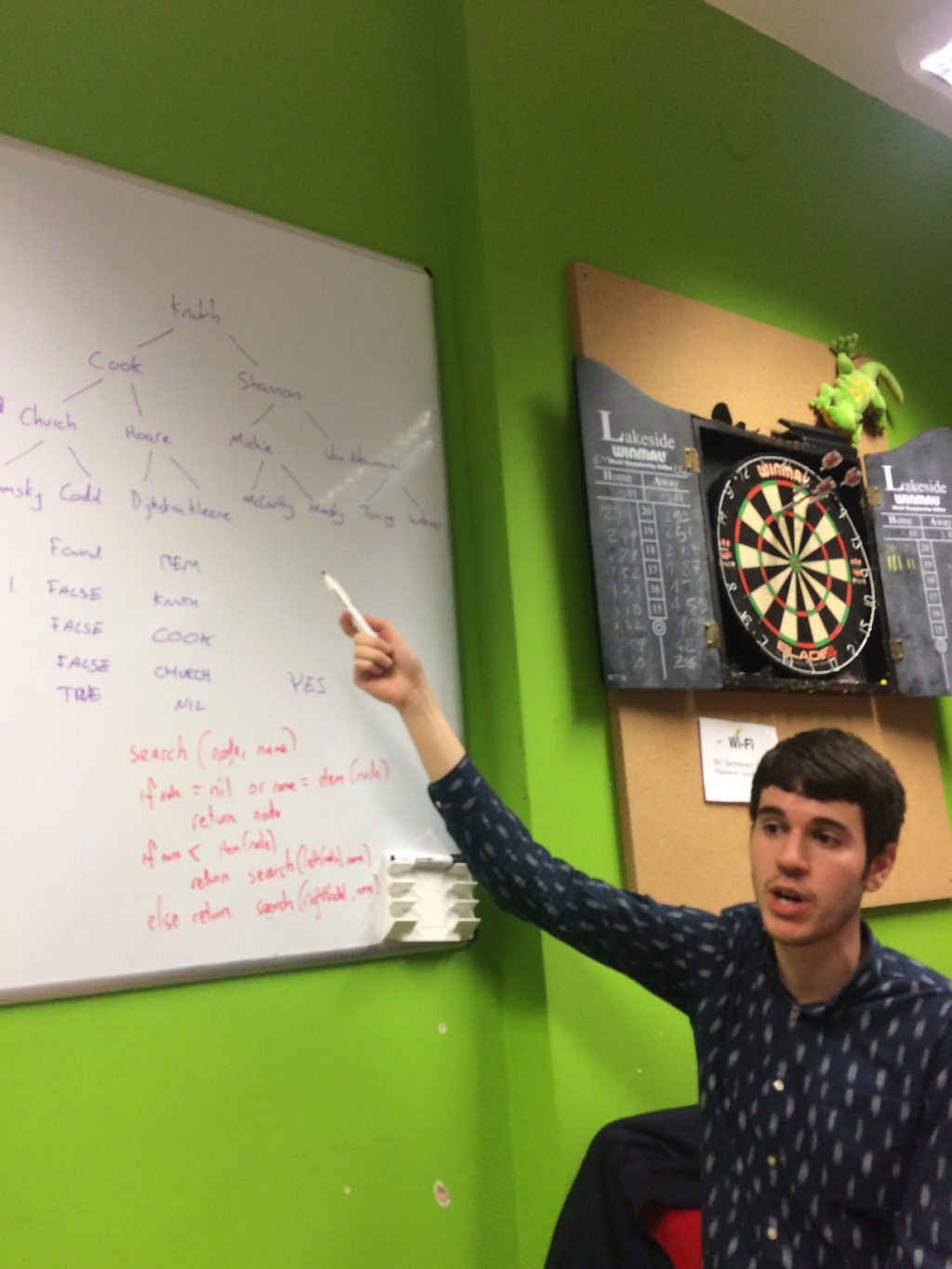

We discussed the search algorithm described in the text with Chris L stepping through an example on the whiteboard. Paul then offered an alternate, recursive algorithm from Cormen et al's "Introduction to Algorithms" which we translated to fit the book's notation:

def search(node, name)

return node if node == nil || name == item(node)

if name < item(node)

search(left(node), name)

else

search(right(node), name)

end

endWe then turned to the first exercise in the chapter: what would be the worst shape for a binary search tree?

apple

\

banana

\

cherry

\

durian

\

elderberry

\

fig

We quickly decided the answer would be a tree (as above) that leans entirely to one side (e.g. with each node to the right of its parent), giving us a worst-case O(n) complexity when searching.

The second part of the exercise asked us to develop an expression for the average complexity (in Big O notation) to search for any item in any arbitrary tree. We were a little confused by this and decided to discuss the best-case complexity given in the chapter in some detail:

There were varying levels of familiarity with Big O notation and computation complexity theory so we attempted a brief summary but felt it might deserve dedicating a future meeting to the topic (particularly as it is a later chapter in the book). We touched on the common complexities:

-

O(1): algorithm takes constant time regardless of size of input -

O(n): algorithm grows linearly with the size of input -

O(log n): algorithm grows increasingly slowly with the size of input -

O(n^2): algorithm grows exponentially with the size of input

(We didn't touch on O(n log n).)

With that in mind, we turned back to the best-case complexity given in the book:

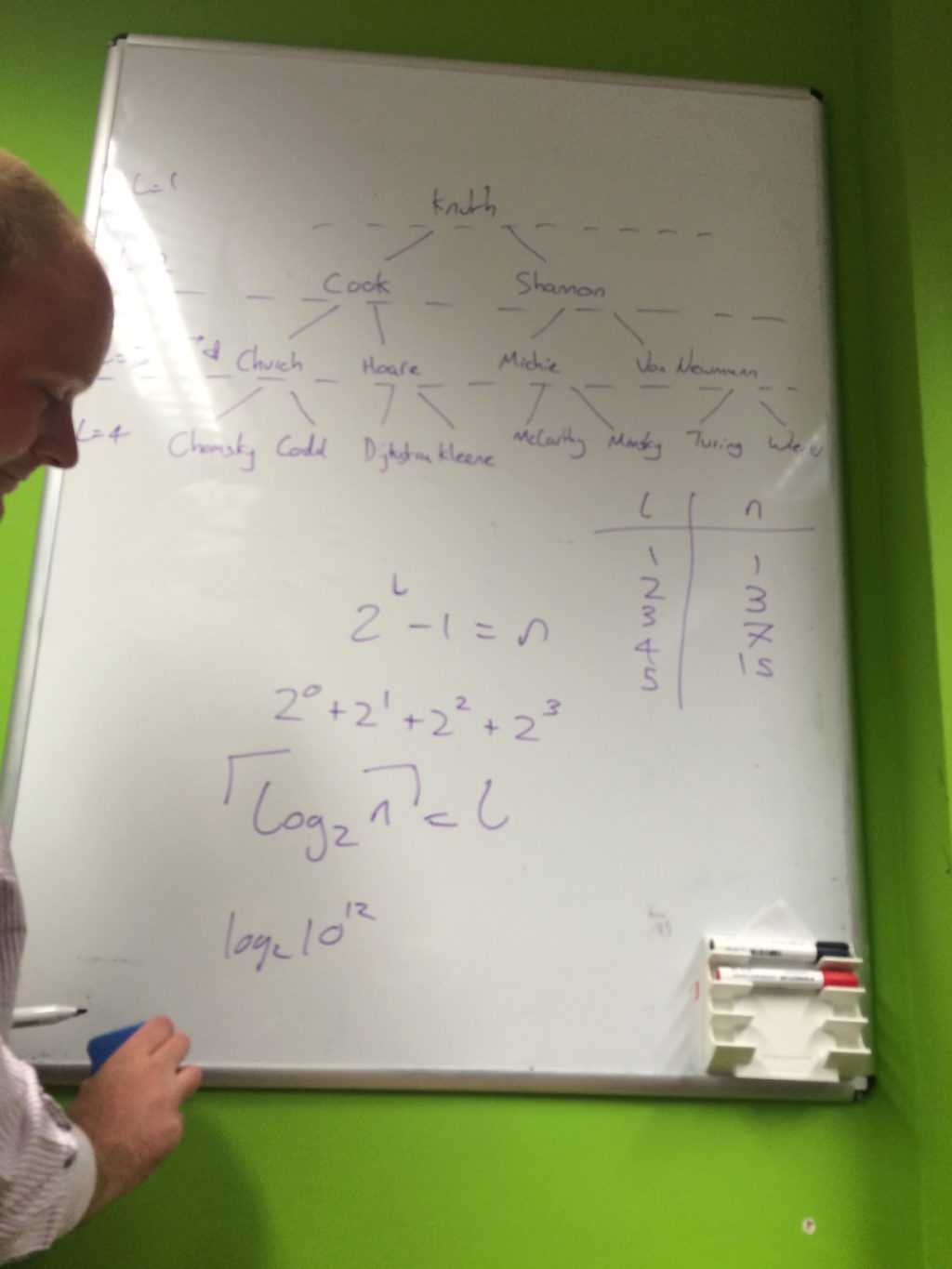

l = ceil(log2 n)

Here, l is the number of levels of the tree and n is the number of nodes in the tree. Given that this only described full, balanced trees, we tried to understand this relationship between levels and nodes and why the ceil is required.

Chris drew up the following table to explore this relationship further and Tom expanded it into the sum of powers in the rightmost column.

| number of levels (l) | number of nodes (n) | |

|---|---|---|

| 1 | 1 | 20 |

| 2 | 3 | 20 + 21 |

| 3 | 7 | 20 + 21 + 22 |

| 4 | 15 | 20 + 21 + 22 + 23 |

As this began to take up quite a bit of time (and involved quite a bit of frantic whiteboard action), we decided to move on to the insertion and deletion algorithms.

Insertion was regarded as relatively straightforward as we could use the search algorithm from earlier but modify the last visited node, setting the new node to the left or right appropriately. We worked through this on the whiteboard and then turned to the rather trickier case of deletion.

We worked through a deletion example together on the whiteboard, trying to enumerate the different cases when deleting a node:

- The node is a leaf (viz. has no left or right subtrees)

- The node has only one subtree (either the left or right)

- The node has both left and right subtrees

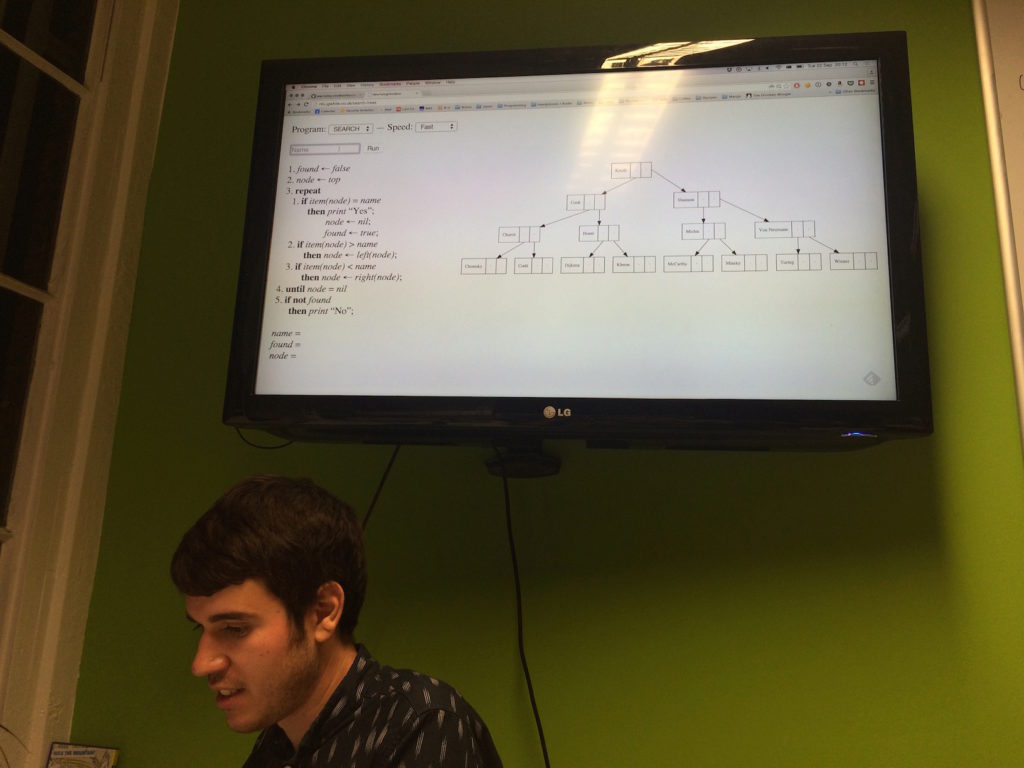

We talked through the cases and then decided to turn to Jamie's excellent visual implementation to help our understanding.

This allowed us to see arbitrary searches, insertions and (some) deletions occurring in real-time, comparing the program's progress with the state of the tree.

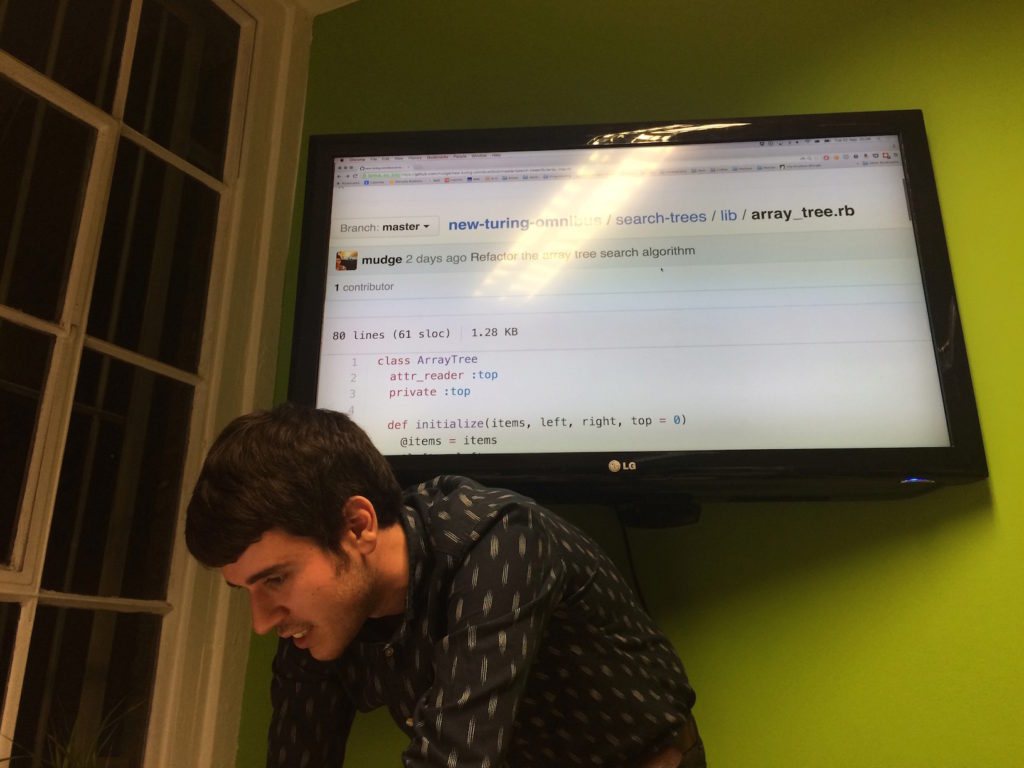

Paul then compared this with his own implementation in Ruby, attempting to use the method described in the book of using three arrays (item, left and right) to store the entire tree.

Paul also discussed an possible immutable version of a tree where insertions and deletions return whole new trees rather than modifying the original. While searching, depth-first and breadth-first ordering were relatively straightforward, the challenge of efficiently returning a new tree on insertion actually requires an exploration of persistent data structures (e.g. techniques such as path copying) which we might revisit in the future.

Following the last book's retrospective, we made sure to discuss how the meeting had gone and whether we wanted to make any changes. Overall, the focus on understanding and tying our reading to the specific exercises was a good thing and we were right to be mindful of not overly criticising the material (unless offering a constructive alternative).

As there were clearly many follow-on topics to discuss, we wondered whether to dive deeper into the subject but a good argument was made to explore more varied subjects in future meetings. After all, one of the reasons we chose this book was for its breadth of topics and we wanted to make the club easier to drop in and out of.

Thanks to Leo and Geckoboard for hosting, to Jamie for his visual implementation of search trees and to Winna and Tom for sharing their baked goods.

- Home

- Documentation

- Choosing a Topic

- Shows & Tells

- Miscellaneous

- Opt Art

- Reinforcement Learning: An Introduction

- 10 Technical Papers Every Programmer Should Read (At Least Twice)

- 7 More Languages in 7 Weeks

- Lua, Day 1: The Call to Adventure

- Lua, Day 2: Tables All the Way Down

- Lua, Day 3

- Factor, Day 1: Stack On, Stack Off

- Factor, Day 2: Painting the Fence

- Factor, Day 3: Balancing on a Boat

- Elm, Day 1: Handling the Basics

- Elm, Day 2: The Elm Architecture

- Elm, Day 3: The Elm Architecture

- Elixir, Day 1: Laying a Great Foundation

- Elixir, Day 2: Controlling Mutations

- Elixir, Day 3: Spawning and Respawning

- Julia, Day 1: Resistance Is Futile

- Julia, Day 2: Getting Assimilated

- Julia, Day 3: Become One With Julia

- Minikanren, Days 1-3

- Minikanren, Einstein's Puzzle

- Idris Days 1-2

- Types and Programming Languages

- Chapter 1: Introduction

- Chapter 2: Mathematical Preliminaries

- Chapter 3: Untyped Arithmetic Expressions

- Chapter 4: An ML Implementation of Arithmetic Expressions

- Chapter 5: The Untyped Lambda-Calculus

- Chapters 6 & 7: De Bruijn Indices and an ML Implementation of the Lambda-Calculus

- Chapter 8: Typed Arithmetic Expressions

- Chapter 9: The Simply-Typed Lambda Calculus

- Chapter 10: An ML Implementation of Simple Types

- Chapter 11: Simple Extensions

- Chapter 11 Redux: Simple Extensions

- Chapter 13: References

- Chapter 14: Exceptions

- Chapter 15: Subtyping – Part 1

- Chapter 15: Subtyping – Part 2

- Chapter 16: The Metatheory of Subtyping

- Chapter 16: Implementation

- Chapter 18: Case Study: Imperative Objects

- Chapter 19: Case Study: Featherweight Java

- The New Turing Omnibus

- Errata

- Chapter 11: Search Trees

- Chapter 8: Random Numbers

- Chapter 35: Sequential Sorting

- Chapter 58: Predicate Calculus

- Chapter 27: Perceptrons

- Chapter 9: Mathematical Research

- Chapter 16: Genetic Algorithms

- Chapter 37: Public Key Cryptography

- Chapter 6: Game Trees

- Chapter 5: Gödel's Theorem

- Chapter 34: Satisfiability (also featuring: Sentient)

- Chapter 44: Cellular Automata

- Chapter 47: Storing Images

- Chapter 12: Error-Correcting Codes

- Chapter 32: The Fast Fourier Transform

- Chapter 36: Neural Networks That Learn

- Chapter 41: NP-Completeness

- Chapter 55: Iteration and Recursion

- Chapter 19: Computer Vision

- Chapter 61: Searching Strings

- Chapter 66: Church's Thesis

- Chapter 52: Text Compression

- Chapter 22: Minimum spanning tree

- Chapter 64: Logic Programming

- Chapter 60: Computer Viruses

- Show & Tell

- Elements of Computing Systems

- Archived pages