C++: isChiForAllAliasedMemory recursion through inexact Phi operands

#2917



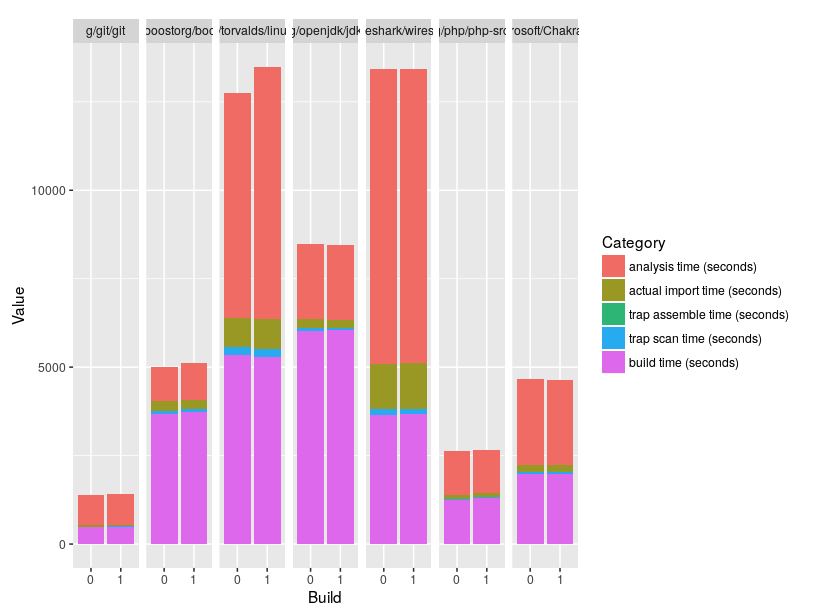

On some projects (notably git/git), a `PhiInstruction` can have inexact operands (see https://github.com/github/codeql-c-analysis-team/issues/30). This causes `isChiForAllAliasedMemory` to fail, because it expects the `Chi` chain to have exact operands for `PhiInstruction`s.

The real fix is most likely to avoid this situation altogether. As an easy workaround, this PR modifies the definition of `isChiForAllAliasedMemory` to allow recursion through inexact `PhiInstruction` operands. I haven't observed any need to modify the case for `ChiInstruction`s, so that case still recurses only through exact operands.
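To illustrate the change, here is a simplified C++ model of the recursion. It is not the actual predicate, which is written in QL. The types `Kind`, `Operand`, and `Instr` and the `followInexactPhiInputs` flag are hypothetical. The sketch assumes that a `Chi` has a single total operand, that one qualifying `Phi` input is enough, and that the chain bottoms out at an `AliasedDefinition`. Passing `false` mimics the old behavior and `true` mimics this workaround.

```cpp
#include <cassert>
#include <unordered_set>
#include <vector>

// Hypothetical toy IR; these are NOT CodeQL's real IR classes.
enum class Kind { AliasedDefinition, Chi, Phi, Other };

struct Instr;

struct Operand {
  const Instr* def;  // instruction that defines this operand
  bool exact;        // true if the definition exactly overlaps the use
};

struct Instr {
  Kind kind;
  std::vector<Operand> operands;  // Chi: {total}; Phi: one input per predecessor
};

// Depth-first search; the visited set makes loop-carried Phi cycles terminate.
static bool isChiForAllAliasedMemoryImpl(const Instr* instr, bool followInexactPhiInputs,
                                         std::unordered_set<const Instr*>& visited) {
  if (!visited.insert(instr).second) return false;  // already explored or on the stack
  if (instr->kind == Kind::Chi) {
    assert(instr->operands.size() == 1);  // model assumption: one total operand
    const Operand& total = instr->operands[0];
    if (!total.exact) return false;  // Chi case: exact total operands only (unchanged)
    if (total.def->kind == Kind::AliasedDefinition) return true;  // base case
    return isChiForAllAliasedMemoryImpl(total.def, followInexactPhiInputs, visited);
  }
  if (instr->kind == Kind::Phi) {
    for (const Operand& input : instr->operands) {
      // Old behavior skips inexact inputs; the workaround follows them too.
      if (!input.exact && !followInexactPhiInputs) continue;
      if (isChiForAllAliasedMemoryImpl(input.def, followInexactPhiInputs, visited)) return true;
    }
  }
  return false;
}

bool isChiForAllAliasedMemory(const Instr* instr, bool followInexactPhiInputs) {
  std::unordered_set<const Instr*> visited;
  return isChiForAllAliasedMemoryImpl(instr, followInexactPhiInputs, visited);
}
```

For example, a `Phi` whose only input is an inexact use of a `Chi` over `AliasedDefinition` is rejected with the flag off and accepted with it on, while a `Chi` with an inexact total operand is rejected either way. QL evaluates recursive predicates as a least fixpoint, so cycles through loop `Phi`s need no special handling there. The C++ model needs the explicit visited set instead.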