Image processing requires lots of memory #5220

This belongs in the Forum or in the Hugo Themes repo Issues tracker if this theme is listed on the website. It is recommended that you commit the

@onedrawingperday Why does this belong in the forum? I just used my shortcode to demonstrate a general issue that is present in Hugo. It is not template-specific. It is specific to the image generation process and the way it frees resources.

In addition: if you have a page with, e.g., 100 images and generate multiple versions of each image, you will not get to the point where you can commit the resources directory, at least not if Hugo has to generate all of them in a single run. This will not work on machines with less than XX GB of memory.

I'm pretty sure we're not doing that, but it's worth checking. That said, we do

So, if you start out with lots of big images, it will be memory hungry even if Go's garbage collector gets to do its job properly. But I will have a look at this.
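
To put "lots of big images" into numbers: once a JPEG or PNG is decoded, its compressed file size no longer matters; the bitmap costs roughly width × height × bytes per pixel. The Go sketch below measures this with the standard library `image` package for an 8-bit-per-channel RGBA image. It assumes an RGBA representation; the in-memory format Hugo actually uses for a given image may differ (decoded JPEGs, for example, are often YCbCr), so treat the result as an order-of-magnitude estimate. `decodedRGBABytes` is a hypothetical helper.

```go
package main

import (
	"fmt"
	"image"
)

// decodedRGBABytes allocates an 8-bit-per-channel RGBA image of the
// given size and returns the length of its pixel buffer in bytes.
// This is the cost of an image once decoded into memory, regardless
// of how small the compressed file on disk was.
func decodedRGBABytes(width, height int) int {
	img := image.NewRGBA(image.Rect(0, 0, width, height))
	return len(img.Pix) // 4 bytes (R, G, B, A) per pixel
}

func main() {
	// A 24-megapixel camera photo.
	n := decodedRGBABytes(6000, 4000)
	fmt.Printf("6000x4000 RGBA: %d bytes (~%d MB)\n", n, n/1_000_000)
	// prints "6000x4000 RGBA: 96000000 bytes (~96 MB)"
}
```

Several such bitmaps decoded and resized at the same time, plus their resized copies, add up quickly, even when every one of them is eventually garbage-collected.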

@bep This sounds reasonable. So maybe the parallel processing is the problem, then. In that case it might be a good idea to have a setting where you can limit/configure this, so the generation works on small machines. FYI: I tried to automate the build process using Google Cloud Build. The standard machines have only 2 GB of memory.

@Noki This issue was re-opened, obviously. But there is a technique for low-memory machines; I am using one for my 1-core VM and posted about it here. I am working with image-intensive sites, so I know what you're talking about. But whether this can be addressed in Hugo or comes from upstream, I don't know.

We currently do this in parallel with number of goroutines = I will consider adding a more flexible setting for this.

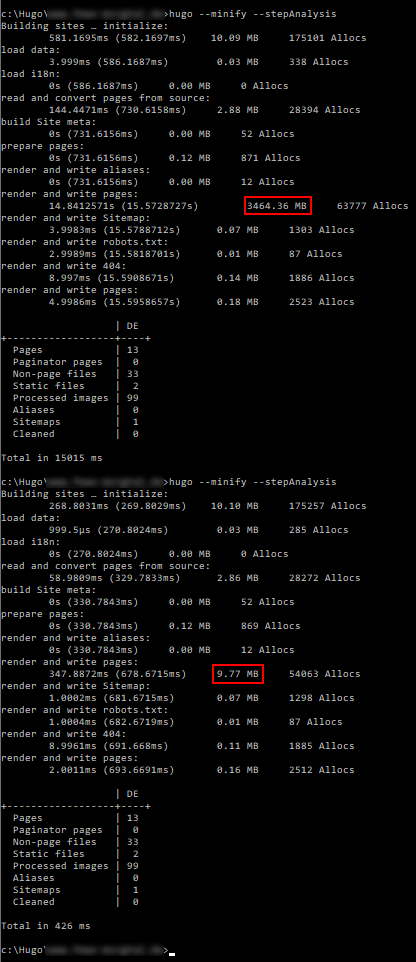

@bep Any update on this one? I think it would really help to have an image processing queue and a flag to limit the number of images processed in parallel. Here is a screenshot showing the memory usage on my machine.

@Noki I don't think the

▶ gtime -f '%M' hugo --quiet

670793728

▶ rm -rf resources && gtime -f '%M' hugo --quiet

918601728

The Hugo docs site isn't "image heavy", but the above should be an indicator. The numbers show the "maximum resident set size of the process during its lifetime, in Kbytes." I will try to add a "throttle" to the image processing and see if that has an effect. Will report back.
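
The `%M` figures above come from the `ru_maxrss` field of `getrusage`, whose unit depends on the OS: kilobytes on Linux, but bytes on macOS. If, as the `gtime` name (the usual Homebrew install of GNU time) suggests, these runs were on macOS, the two results read as roughly 670 MB and 920 MB rather than hundreds of gigabytes. The Go sketch below, for Unix-like systems only, reads the same counter from inside a process and normalizes it to bytes; `peakRSSBytes` is a hypothetical helper, not part of Hugo.

```go
package main

import (
	"fmt"
	"runtime"
	"syscall"
)

// peakRSSBytes returns the maximum resident set size of the current
// process in bytes. getrusage reports ru_maxrss in kilobytes on Linux
// (and the BSDs) but already in bytes on macOS.
func peakRSSBytes() (int64, error) {
	var ru syscall.Rusage
	if err := syscall.Getrusage(syscall.RUSAGE_SELF, &ru); err != nil {
		return 0, err
	}
	maxrss := int64(ru.Maxrss)
	if runtime.GOOS == "darwin" {
		return maxrss, nil // bytes on macOS
	}
	return maxrss * 1024, nil // kilobytes elsewhere
}

func main() {
	// Touch 64 MiB so that it actually becomes resident.
	buf := make([]byte, 64<<20)
	for i := range buf {
		buf[i] = 1
	}
	peak, err := peakRSSBytes()
	if err != nil {
		panic(err)
	}
	fmt.Printf("peak RSS: %.0f MB\n", float64(peak)/1e6)
}
```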

I have added a PR that I will merge soon that will boldly close this issue as "fixed" -- please shout if it doesn't.

@bep Thanks for fixing this! Image processing now works for me with a peak memory usage of ~230 MB. This is great and allows me to build on small cloud instances. As expected, the build time was quite long (>60 seconds), and I had to increase the timeout setting in the Hugo config to a value higher than this. Before I increased the timeout setting, Hugo did not finish building; it got stuck and I had to terminate the process manually. Turning on debug showed the following as the last lines before it got stuck: So there seems to be an additional problem here. Expected behaviour would be to quit instead of getting stuck. In addition, the new color highlighting of "WARN" matches "WARN" in "WARNING" as well. ;-)

If you could do a When it is stuck, the above should provide a stack trace. Also, when you say "building on small cloud instances", I have to double-check: you know that you can commit the processing result in (and I will fix the WARN vs. WARNING issue)

Here is the stacktrace:

I know I could. But it would increase the size of my git repo by a lot, because I currently generate 4 additional versions of each image. In addition, I don't consider this to be best practice. I only want to keep the original images in my repo.

This issue has been automatically locked since there has not been any recent activity after it was closed. Please open a new issue for related bugs.

Hi!

I'm currently using Hugo 0.48. I created my own figure shortcode that generates a number of different image sizes for responsive images. I noticed that using the shortcode increased memory usage by a lot. Generating a couple of simple pages with 10-20 images each uses a couple of gigabytes of memory.

Here is my shortcode: https://github.com/Noki/optitheme/blob/master/layouts/shortcodes/figure.html

It looks like Hugo keeps all images in memory while creating them instead of freeing memory once an image is created. The only way to generate the website on machines with small amounts of memory is to cancel the generation process and restart it multiple times. Each time, a couple of images are written to the resources directory. Once all images are there, the generation works fine.

Expected behaviour would be that I could easily generate a website with a couple of hundred images and my shortcode on a small machine with 1 GB of memory.

Best regards

Tobias