Persistent memory and hierarchical compression for development environments

Continuo is a persistent memory system that provides semantic search and storage for development workflows. By separating reasoning (the LLM) from long-term memory (a vector database plus hierarchical compression), it retains knowledge indefinitely rather than being bounded by the LLM's context window.

- Persistent Memory - Store and retrieve development knowledge across sessions

- Semantic Search - Find relevant information using natural language queries

- Hierarchical Compression - N0 (chunks) → N1 (summaries) → N2 (meta-summaries)

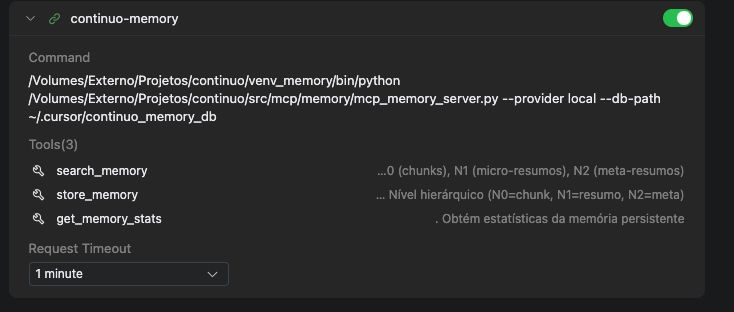

- MCP Integration - Seamless integration with IDEs via Model Context Protocol

- Cost Effective - 100% local (free) or hybrid (low-cost) deployment options

- FastMCP - Built on the modern MCP server framework

- Clone the repository and run the setup script:

git clone https://github.com/GtOkAi/continuo-memory-mcp-memory-mcp.git continuo

cd continuo

./scripts/setup_memory.sh

- Start the memory server:

./scripts/run_memory_server.sh

- Configure your IDE (Qoder/Cursor):

Create .qoder/mcp.json (or .cursor/mcp.json):

{
  "mcpServers": {
    "continuo-memory": {
      "command": "/absolute/path/to/continuo/venv_memory/bin/python",
      "args": [
        "/absolute/path/to/continuo/src/mcp/memory/mcp_memory_server.py",
        "--provider",
        "local",
        "--db-path",
        "/absolute/path/to/memory_db"
      ]
    }
  }
}

- Use in your IDE:

@continuo-memory search_memory("authentication implementation")

@continuo-memory store_memory("Fixed JWT validation bug", {"file": "auth.py"})

@continuo-memory get_memory_stats()
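For readers curious about what travels over the wire, an MCP client turns an invocation like the ones above into a JSON-RPC 2.0 `tools/call` request. The sketch below builds such a request for `search_memory`. The helper name `build_tool_call` and the id counter are illustrative only, and exactly how Qoder or Cursor assembles the message is an assumption.

```python
import json
from itertools import count

# JSON-RPC ids only need to be unique per connection; a counter is enough here.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request (hypothetical helper, not part of Continuo)."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


message = build_tool_call(
    "search_memory",
    {"query": "authentication implementation", "top_k": 5},
)
print(message)
```

The argument names (`query`, `top_k`) mirror the tool signatures documented in this README.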

IDE Chat ──► MCP Adapter ──► Memory Server ──► ChromaDB
   ▲              ▲                │              │
   │              └──── tools ◄────┘              │
   └───── response ◄──── context ◄────────────────┘

- Memory Server - ChromaDB + sentence-transformers for embeddings

- MCP Adapter - FastMCP server exposing search_memory and store_memory tools

- Hierarchical Compression - Multi-level context optimization (N0/N1/N2)

- Autonomous Mode - Optional automation with Observe → Plan → Act → Reflect cycle

Run with local embeddings:

python src/mcp/memory/mcp_memory_server.py \
  --provider local \
  --db-path ./memory_db

Run with OpenAI embeddings:

python src/mcp/memory/mcp_memory_server.py \
  --provider openai \
  --api-key sk-your-key \
  --db-path ./memory_db

search_memory(query: str, top_k: int = 5, level: str | None = None) -> str

- Semantic search in persistent memory

- Returns relevant documents with similarity scores

store_memory(text: str, metadata: dict | None = None, level: str = "N0") -> str

- Store content in persistent memory

- Supports metadata tagging and hierarchical levels

get_memory_stats() -> str

- Get memory statistics (total documents, levels, etc.)
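To make the three tool contracts concrete, here is a minimal in-memory stand-in with the same signatures. It is a sketch, not the server's implementation: the bag-of-words cosine similarity replaces real embeddings, the Python list replaces ChromaDB, and the JSON shape of the returned strings is an assumption.

```python
from __future__ import annotations

import json
import math
import re
from collections import Counter

_store: list[dict] = []  # in-memory stand-in for the vector database


def _vectorize(text: str) -> Counter:
    # Toy bag-of-words "embedding"; the real server uses learned embeddings.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def store_memory(text: str, metadata: dict | None = None, level: str = "N0") -> str:
    _store.append({"text": text, "metadata": metadata or {}, "level": level})
    return json.dumps({"stored": True, "total": len(_store)})


def search_memory(query: str, top_k: int = 5, level: str | None = None) -> str:
    q = _vectorize(query)
    # Optionally restrict the search to one hierarchy level (N0, N1, or N2).
    candidates = [d for d in _store if level is None or d["level"] == level]
    hits = [
        {"text": d["text"], "level": d["level"],
         "score": round(_cosine(q, _vectorize(d["text"])), 3)}
        for d in candidates
    ]
    hits.sort(key=lambda hit: hit["score"], reverse=True)
    return json.dumps(hits[:top_k])


def get_memory_stats() -> str:
    levels = Counter(d["level"] for d in _store)
    return json.dumps({"total_documents": len(_store), "levels": dict(levels)})


store_memory("Fixed JWT validation bug", {"file": "auth.py"})
store_memory("Refactored database connection pool")
print(search_memory("JWT bug", top_k=1))
print(get_memory_stats())
```

Returning JSON strings keeps the sketch aligned with the `-> str` return type in the signatures above; the actual response format may differ.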

- N0 - Raw chunks (code snippets, conversations)

- N1 - Micro-summaries (5-10 chunks compressed)

- N2 - Meta-summaries (5-10 summaries compressed)
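The level structure above can be sketched as repeated grouping: every run of chunks is folded into one summary, and the same step is applied again to the summaries. This is an illustration under stated assumptions, not Continuo's compression code. `compress_level` and `naive_summarize` are hypothetical names, the group size of 5 is taken from the low end of the 5-10 range, and the truncating summarizer is a placeholder for whatever summarization the project actually uses.

```python
from typing import Callable


def compress_level(
    items: list[str],
    summarize: Callable[[list[str]], str],
    group_size: int = 5,
) -> list[str]:
    """Fold each run of `group_size` items into a single summary string."""
    return [
        summarize(items[i:i + group_size])
        for i in range(0, len(items), group_size)
    ]


def naive_summarize(group: list[str]) -> str:
    # Placeholder: keep the first 40 characters of each item.
    # A real system would presumably call an LLM or extractive summarizer here.
    return " | ".join(text[:40] for text in group)


n0 = [f"chunk {i}: details about change {i}" for i in range(25)]  # raw chunks
n1 = compress_level(n0, naive_summarize)  # micro-summaries
n2 = compress_level(n1, naive_summarize)  # meta-summaries

print(len(n0), len(n1), len(n2))  # 25 5 1
```

With 25 chunks and a group size of 5, the sketch yields 5 micro-summaries and 1 meta-summary, which shows how each level shrinks the amount of text a query has to consider.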

See the examples/memory/ directory:

- basic_usage.py - Simple store/retrieve operations

- hierarchical_demo.py - Multi-level compression examples

- auto_mode_demo.py - Autonomous mode demonstration

- Setup Guide - Detailed installation instructions

- Architecture Specification - Complete technical documentation

- Code of Conduct - Community guidelines

- Python 3.9+ - Core implementation

- ChromaDB - Vector database for embeddings

- Sentence Transformers - Local embedding generation (all-MiniLM-L6-v2)

- FastMCP - MCP server framework

- Model Context Protocol - IDE integration standard

| Provider | Storage | Search | Monthly cost (1,000 queries) |
|---|---|---|---|
| Local (sentence-transformers) | Free | Free | $0 |
| OpenAI embeddings | Free | ~$0.0001/query | ~$0.10 |
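The monthly figure in the table is just query volume times the per-query price. The snippet below reproduces that arithmetic so it can be rerun for other volumes. The function name is illustrative, and the ~$0.0001 per-query price is the approximate figure from the table, not a quoted rate.

```python
def monthly_embedding_cost(queries_per_month: int, cost_per_query: float) -> float:
    """Estimated monthly spend on hosted embeddings (storage is free in both setups)."""
    return queries_per_month * cost_per_query


# Figures from the table: ~$0.0001 per query, 1,000 queries per month.
print(f"${monthly_embedding_cost(1_000, 0.0001):.2f}")   # $0.10
print(f"${monthly_embedding_cost(50_000, 0.0001):.2f}")  # $5.00
```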

| Use Case | License | Cost |
|---|---|---|
| Individual/Research | AGPL v3 | Free |
| Startup (<$1M, <10 employees) | AGPL v3 | Free |
| Non-profit/Education | AGPL v3 | Free |
| Commercial (≥$1M OR ≥10 employees) | Commercial | From $2,500/year |

See COMMERCIAL_LICENSE.md for details.

Contributions are welcome! Please read CONTRIBUTING.md for guidelines.

Continuo Memory System is dual-licensed:

AGPL v3 (open source) - FREE for:

- ✅ Individual developers and researchers

- ✅ Non-profit organizations and educational institutions

- ✅ Companies with <$1M revenue AND <10 employees

- ✅ Development, testing, and evaluation

- ✅ Open source projects (AGPL-compatible)

Requirements: Share source code of modifications under AGPL v3

See LICENSE for full AGPL v3 terms.

Commercial license - REQUIRED for:

- ❌ Companies with ≥$1M revenue OR ≥10 employees

- ❌ Proprietary/closed-source products

- ❌ SaaS offerings without source disclosure

Benefits:

- ✅ No AGPL copyleft obligations

- ✅ Proprietary use rights

- ✅ Priority support (optional)

- ✅ Custom deployment assistance (optional)

Pricing: From $2,500/year (Bronze) to custom Enterprise

See COMMERCIAL_LICENSE.md for pricing and details.

Why dual licensing:

- Sustainable Development: Commercial users fund ongoing maintenance

- Open Source Protection: AGPL prevents proprietary forks

- Fair Use: Small teams and non-profits use free indefinitely

- Community First: Core features always open source

Contact: gustavo@shigoto.me for commercial inquiries

Built using:

- Model Context Protocol - Protocol specification

- MCP Python SDK - MCP implementation

- ChromaDB - Vector database

- Sentence Transformers - Embedding models

Authors: D.D. & Gustavo Porto

Note: This project implements the architecture described in continuo.markdown. For academic context and detailed specifications, refer to that document.