-

Notifications

You must be signed in to change notification settings - Fork 6.4k

Removing autocast for 35-25% speedup. (autocast considered harmful).

#511

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

4bc7ee5 to

70abbb7

Compare

|

The documentation is not available anymore as the PR was closed or merged. |

| prompt = "a photo of an astronaut riding a horse on mars" | ||

| with autocast("cuda"): | ||

| image = pipe(prompt).images[0] | ||

| image = pipe(prompt).images[0] |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Are you sure that this is faster? Using autocast gives currently (before this PR) a 2x boost in terms of generation speed.

Will also test a bit locally on a GPU tomorrow

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This is extremely surprising but I am also measuring a 2x speedup with autocast on f32.

I am looking into it, I see to copies but not nearly the same amount, there's probably a device-to-host /host-to-device somewhere that kills performance but I haven't found it yet.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Okay, I figure it out.

autocast will actually use fp16 for some ops by doing some heuristics. https://pytorch.org/docs/stable/amp.html#cuda-op-specific-behavior

So it's faster because it's running on fp16 even if the model was loaded in f32.

So without it it's slower just because it's actually running f32.

If we enable real fp16 with a big performance boost I feel like we shouldn't need it f32 (but that does make it slower but also "more" correct.). Some ops are kind of dangerous to actual run in fp16 but we should be able to tell them apart (and for now it seems the generations are actually still good enough even when running everything in f16)

But it's still a nice way to get f16 running "for free". The heuristics they use seem OK (but as they mention, they probably wouldn't work for gradients, I guess because fp16 overflows faster)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Commented extensively here: #511

Should we maybe change the example to load native fp16 weights then?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

So replace:

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", use_auth_token=True)by

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16, revision="fp16")

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

That seems OK. Is fp16 supported by enough GPU cards at this point ? That would be my only point of concern.

But given the speed difference, advocating for fp16 is definitely something we should do !

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes I think fp16 is widely supported now

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Overall this looks very good to me, I'm not 100% sure whether we're getting a big speed-up though if everything is in fp32 - will test tomorrow

|

Do you think it would be practical to take something from I've always been reluctant to try to write performance tests when CI is made out of The Cloud, but if you can trust the tests will run somewhere with a consistent execution environment, then I think it could be very informative. |

100% agree, I was not thinking about performance, merely correctness (the pipeline works in fp16 without autocast. Edit: Added a slow test. |

|

I've been successfully using some code based on this patch. The one change I've had to make is to the stochastic schedulers such as DDIM. Their use of |

|

I think it included most of the changes here sorry I'm also laggy on my GH notifications. For I still advocate removing |

|

@NouamaneTazi do you time by any change to rebase this PR on current main and see if there is anything we forgot to add in your PR: #371? Also, I agree with @Narsil that we should updated the docs / readmes to not have "autocast" if pure |

| device=latents_device, | ||

| dtype=text_embeddings.dtype, | ||

| ) | ||

| if self.device.type == "mps": |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

@pcuenca could you take a look here?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes, I commented in the other conservation, I think it's ok like this.

| diff = np.abs(image_chunked.flatten() - image.flatten()) | ||

| # They ARE different since ops are not run always at the same precision | ||

| # however, they should be extremely close. | ||

| assert diff.mean() < 2e-2 |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

this test tolerance is a bit high to me... => will play around with it a bit!

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

It's roughly exactly the same difference as in #371 for pure fp16 run.

Running some ops in f32 directly instead of f16 (which autocast will do) does change some patches.

This is less than 2% total variance in images, so it really doesn't show visually. (Much less than some other changes in #371 where I think some visual differences were visible).

|

@Narsil I checked ran a quick benchmark which I think is in line with your findings: The following script: #!/usr/bin/env python3

from torch import autocast

from diffusers import StableDiffusionPipeline

import torch

from time import time

pipe_fp32 = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4").to("cuda")

pipe_fp16 = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16, revision="fp16").to("cuda")

prompt = 4 * ["Dark, eerie forest with beautiful sunshine."]

def benchmark(name, do_autocast, pipe, generator):

print(name)

start = time()

if not do_autocast:

image = pipe(prompt, generator=generator).images[0]

else:

with autocast("cuda"):

image = pipe(prompt, generator=generator).images[0]

image.save(name + ".png")

end = time()

print("time", end - start)

print(50 * "-")

torch_device = "cuda"

name = "FP32 - Autocast"

generator = torch.Generator(device=torch_device).manual_seed(0)

benchmark(name, True, pipe_fp32, generator)

name = "FP32"

generator = torch.Generator(device=torch_device).manual_seed(0)

benchmark(name, False, pipe_fp32, generator)

name = "FP16 - Autocast"

generator = torch.Generator(device=torch_device).manual_seed(0)

benchmark(name, True, pipe_fp16, generator)

name = "FP16"

generator = torch.Generator(device=torch_device).manual_seed(0)

benchmark(name, False, pipe_fp16, generator)gives these results on a TITAN RTX: The saved images are all identical - in general it doesn't seem like fp16 is hurting performance really (see: https://huggingface.co/datasets/patrickvonplaten/images/tree/main/to_delete) => so should we maybe change all of the docs to advertise native FP16 indeed meaning we'll advertise the following in all our examples: from diffusers import StableDiffusionPipeline

import torch

from time import time

pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", torch_dtype=torch.float16, revision="fp16").to("cuda")

image = pipe(prompt).images[0]It seems to make most sense given that we already advertise everywhere that it should be run on GPU no? What do you think @Narsil @pcuenca @patil-suraj @anton-l ? |

|

@patrickvonplaten I agree! But just to be on the safe side I think we need to throw a custom informative error when users try to use the |

Perfectly in line. The other kind of optimization |

|

Merging now and then updating the docs in a follow up PR |

|

For the Testla T4, on Google Colab, this results in a 2-second gain in time, from 28s to 30s (this timing the actual process itself, not the time the pipe gives you). Using PNDM, 50 steps, guidance scale 13.5 (usual I use), resolution 512x768 (Portrait) |

|

@WASasquatch Most of the speedups were already included in #371 as their was some duplicated efforts here. If you're interested you can even try https://github.com/huggingface/diffusers/tree/optim_attempts where we went a bit further. |

I'll check it out. The speed I got after Attention Slicing PR (over a month ago) was 28 seconds, all through-out these changes (I use current github branch and make changes as they happen). With this PR it's increased to 30 seconds. Could it be that |

Most likely. Attention slicing is for memory reduction, not inference speed. You could also try using pytorch profiler to see what's wrong. Maybe the slow part lies somewhere else. |

@Narsil Thanks for that. I assume I'm looking at the profiler bit. Thanks again. |

…ful). (huggingface#511) * Removing `autocast` for `35-25% speedup`. * iQuality * Adding a slow test. * Fixing mps noise generation. * Raising error on wrong device, instead of just casting on behalf of user. * Quality. * fix merge Co-authored-by: Nouamane Tazi <nouamane98@gmail.com>

…ful). (huggingface#511) * Removing `autocast` for `35-25% speedup`. * iQuality * Adding a slow test. * Fixing mps noise generation. * Raising error on wrong device, instead of just casting on behalf of user. * Quality. * fix merge Co-authored-by: Nouamane Tazi <nouamane98@gmail.com>

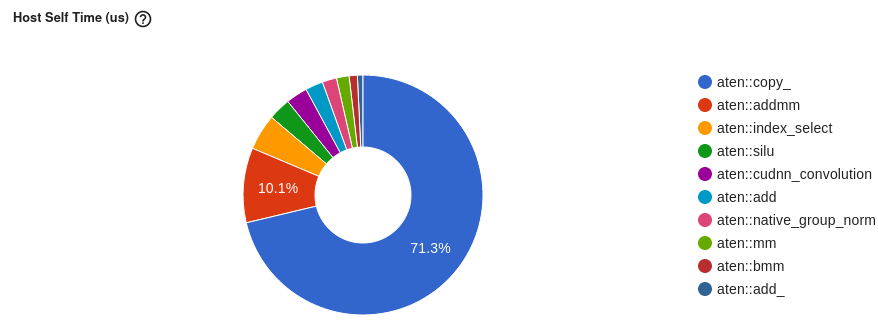

While investigating performance of

diffusersI figured out a relatively simple way to get 35% speedups on the default stable AI.Simply by removing

autocast.Happy to add any test that might be worthwhile, or move some of the casting to different places.

Ran on Titan RTX:

Before

After

The reason why

autocastadds so much overhead, si that a few tensors were still infp32and EVERY single operation afterwards would downcast to fp16 for the op, and upcast back tofp32afterwards leading to insane amounts of copies of tensors.Since it seems very easy to have inefficient code with it I took the liberty of removing it in the lib itself.

If that's ok with the maintainers I would like to remove it from the docs too.