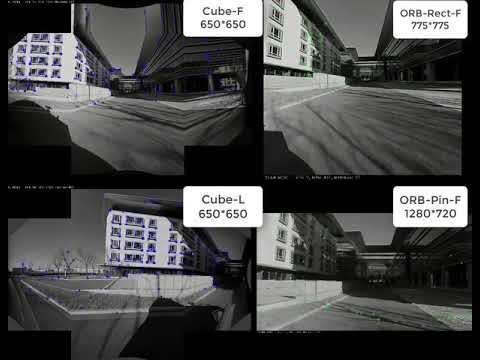

CubemapSLAM is a real-time SLAM system for monocular fisheye cameras. The system incorporates the cubemap model into the state-of-the-art feature based SLAM system ORB-SLAM to utilize the large FoV of the fisheye camera without introducing the distortion. The system is able to compute the camera trajectory and recover a sparse structure of the environment. It is also able to detect loops and relocalize the camera in real time. We provide examples on the Lafida dataset and the self-collected data sequences. Like ORB-SLAM, we also provide a GUI to change between a SLAM Mode and Localization Mode, please refer to the document for details.

CubemapSLAM is released under a GPLv3 license. For a list of all code/library dependencies (and associated licenses), please see Dependencies.md.

If you use CubemapSLAM in your work, please consider citing:

@inproceedings{wang2018cubemapslam,

title={CubemapSLAM: A Piecewise-Pinhole Monocular Fisheye SLAM System},

author={Wang, Yahui and Cai, Shaojun and Li, Shi-Jie and Liu, Yun and Guo, Yangyan and Li, Tao and Cheng, Ming-Ming},

booktitle={Asian Conference on Computer Vision},

pages={34--49},

year={2018},

organization={Springer}

}

@article{murTRO2015,

title={{ORB-SLAM}: a Versatile and Accurate Monocular {SLAM} System},

author={Mur-Artal, Ra\'ul, Montiel, J. M. M. and Tard\'os, Juan D.},

journal={IEEE Transactions on Robotics},

volume={31},

number={5},

pages={1147--1163},

doi = {10.1109/TRO.2015.2463671},

year={2015}

}

@article{murORB2,

title={{ORB-SLAM2}: an Open-Source {SLAM} System for Monocular, Stereo and {RGB-D} Cameras},

author={Mur-Artal, Ra\'ul and Tard\'os, Juan D.},

journal={IEEE Transactions on Robotics},

volume={33},

number={5},

pages={1255--1262},

doi = {10.1109/TRO.2017.2705103},

year={2017}

}

@article{urban2016multicol,

title={MultiCol-SLAM-a modular real-time multi-camera slam system},

author={Urban, Steffen and Hinz, Stefan},

journal={arXiv preprint arXiv:1610.07336},

year={2016}

}

The system has been tested in Ubuntu 16.04, but it should be easy to compile in other platforms. A powerful computer will ensure real-time performance and provide more stable and accurate results. Since we use the new thread and chrono functionalities, it should be compiled with C++11.

We use Pangolin for visualization and user interface. Dowload and install instructions can be found at: https://github.com/stevenlovegrove/Pangolin.

We use OpenCV to manipulate images and features. Download and install instructions can be found at: http://opencv.org. We have tested our system with OpenCV 2.4.11 and OpenCV 3.2.

Required by g2o (see below). Download and install instructions can be found at: http://eigen.tuxfamily.org. Required at least 3.1.0.

We use the modified versions from ORB-SLAM of the DBoW2 library to perform place recognition and g2o library to perform non-linear optimizations. Both modified libraries (which are BSD) are included in the Thirdparty folder.

Clone the repository:

git clone https://github.com/nkwangyh/CubemapSLAM

We provide a script build.sh to build the Thirdparty libraries and CubemapSLAM. Please make sure you have installed all required dependencies (see section 2). Execute:

cd CubemapSLAM

chmod +x build.sh

./build.sh

This will create libCubemapSLAM.so at lib folder and the executables cubemap_fangshan, cubemap_lafida in bin folder.

-

Download the sequences from https://www.ipf.kit.edu/lafida_datasets.php

-

Substitude the data paths of the scripts in the Scripts folder and run the script.

cd Scripts

sudo chmod +x ./runCubemapLafida.sh

./runCubemapLafida.sh

-

We currently release the loop2 and the parkinglot sequences. Download the sequences from Google drive: loop2_front, loop2_left, parkinglot_front and parkinglot_left.

-

Substitude the data paths of the scripts in the Scripts folder and run the scripts, for example,

cd Scripts

sudo chmod +x ./runCubemapParkinglotFront.sh

./runCubemapParkinglotFront.sh

To process your own sequences, you should first create your own setting file like the others in the Config folder. To do that, you should calibrate your fisheye camera with the toolbox from Prof. Davide Scaramuzza and fill in the intrinsic parameters as well as the image resolution and camera FoV. You can also change the face resolution of the cubemap. After that, you need to create an image name list to feed the system. Besides, a mask is needed in the feature extraction process to guarantee the performance.

You can change between the SLAM and Localization mode using the GUI of the map viewer.

This is the default mode. The system runs in parallal three threads: Tracking, Local Mapping and Loop Closing. The system localizes the camera, builds new map and tries to close loops.

This mode can be used when you have a good map of your working area. In this mode the Local Mapping and Loop Closing are deactivated. The system localizes the camera in the map (which is no longer updated), using relocalization if needed.