
Apply resources limits to wls 14.1.1.0 to solve the evicted pod issue. #103


Signed-off-by: galiacheng <haixia.cheng@microsoft.com> Changes to be committed: new file: weblogic-azure-aks/src/main/arm/scripts/applyGuaranteedQos.sh modified: weblogic-azure-aks/src/main/bicep/mainTemplate.bicep new file: weblogic-azure-aks/src/main/bicep/modules/_deployment-scripts/_ds-apply-guaranteed-qos.bicep Fix script update update update update introspectorJobActiveDeadlineSeconds debug update script remove debug code fix script fix timestamp fix domain uid fix interval create global const for JVM args. Signed-off-by: galiacheng <haixia.cheng@microsoft.com> Changes to be committed: modified: weblogic-azure-aks/src/main/arm/scripts/common.sh modified: weblogic-azure-aks/src/main/arm/scripts/genDomainConfig.sh

Signed-off-by: galiacheng <haixia.cheng@microsoft.com> Changes to be committed: modified: weblogic-azure-aks/pom.xml

    version="${version##*\#version\#\:}" # match #version#:, this is a special mark for the version output, please do not change it.
    echo_stdout ${version}

    if [ "${version#*WebLogic Server 14.1.1.0}" != "$version" ]; then


I have not tried to fully understand this script so I could be wrong, but even so, I have two comments:

- To future-proof, assuming the problem could be in all future 14.x versions, should the version check be a "greater than or equal"? I think you can leverage `sort --version-sort` for this.

- Would the check be better off running before deploying the domain resource? E.g. a `kubectl run` equivalent of `docker run SOME_IMAGE_NAME sh -c 'source $ORACLE_HOME/wlserver/server/bin/setWLSEnv.sh > /dev/null 2>&1 && java weblogic.version'`.
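A minimal sketch of the "greater than or equal" check suggested in the first point, assuming GNU `sort` is available in the script's environment. The function name `version_ge` and the `minAffectedVersion` threshold are hypothetical and not part of the PR.

```shell
#!/usr/bin/env bash

# Returns 0 when version $1 is greater than or equal to version $2.
# Relies on GNU sort's --version-sort; other sort implementations may differ.
version_ge() {
    local lowest
    # Sort both versions and take the smallest; $1 >= $2 exactly when the smallest is $2.
    lowest="$(printf '%s\n%s\n' "$1" "$2" | sort --version-sort | head -n 1)"
    [ "$lowest" = "$2" ]
}

# Hypothetical threshold, chosen only for illustration.
minAffectedVersion="14.1.1.0"

for v in "12.2.1.4" "14.1.1.0" "14.1.2.0"; do
    if version_ge "$v" "$minAffectedVersion"; then
        echo "$v: apply workaround"
    else
        echo "$v: skip"
    fi
done
```

Compared with the substring match in the diff, this would also cover later 14.x releases, which is exactly the trade-off being discussed in this thread.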


Thanks for your review, @tbarnes-us. Wishing you a Merry Christmas, though it's a late greeting.

For #1, you are right that we should consider future versions. For now, though, I would like to apply the workaround only to 14.1.1.0: we cannot be sure the issue will occur in future versions, and we may resolve it with other configuration once we find the root cause. Also cc @edburns

For #2, we can check the version before deploying the domain resource. However, because data source setup happens post-deployment, we separate basic cluster setup from the other configuration that is applied via ConfigMap and requires a restart. The current workflow is:

- Deploy the basic cluster without a data source.

- [Optional, if the customer selects a data source] Deploy the ConfigMap for the data source and patch the cluster; this causes a restart.

- Apply resource limits to WLS 14c.

Applying resource limits makes the domain deployment much slower (from 2 min/pod to 8 min/pod), so we apply the setting only after no further restart (rolling update) is expected.

Getting the version with docker run is a great idea, since it avoids executing the script with kubectl exec.

However, we would have to run the command on a machine with a Docker daemon. I will check and add another commit.


For #2 I was thinking of kubectl run. This implicitly launches a temporary pod in the k8s cluster, and so has the advantage of using the same docker image cache as the k8s cluster. One issue is that it may need a pull secret - not sure how that'd be done.
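One possible way to handle the pull secret is the `--overrides` flag of `kubectl run`, which accepts inline JSON merged into the generated pod spec. The sketch below only builds and prints the command, and executes it only when `RUN_KUBECTL=1` is set. The pod name, image, secret name, and namespace are all hypothetical placeholders, and this has not been validated against the PR's deployment scripts.

```shell
#!/usr/bin/env bash

# Builds a kubectl run command that starts a temporary pod from the WebLogic
# image and prints the server version. The result is stored in the global
# array probeCmd. All argument values used below are illustrative assumptions.
build_version_probe_cmd() {
    local image="$1" pullSecret="$2" namespace="$3"
    local overrides
    # Inline JSON override that attaches an image pull secret to the pod.
    overrides=$(printf '{"apiVersion":"v1","spec":{"imagePullSecrets":[{"name":"%s"}]}}' "$pullSecret")
    probeCmd=(kubectl run wls-version-probe
        --namespace "$namespace"
        --image "$image"
        --restart=Never --rm -i
        --overrides "$overrides"
        --command -- sh -c
        'source $ORACLE_HOME/wlserver/server/bin/setWLSEnv.sh > /dev/null 2>&1 && java weblogic.version')
}

build_version_probe_cmd "example.azurecr.io/wls:14.1.1.0" "regcred" "sample-ns"

# Show the command that would run; execute it only on explicit request.
printf '%q ' "${probeCmd[@]}"; echo
if [ "${RUN_KUBECTL:-0}" = "1" ]; then
    "${probeCmd[@]}"
fi
```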


For #2:

> while I would like to only apply the workaround to 14.1.1.0 now

That's fine by me - as long as one of us remembers in the case that we still need the work-around once 14.1.2 comes out.


> For #2:
>
> > while I would like to only apply the workaround to 14.1.1.0 now
>
> That's fine by me - as long as one of us remembers in the case that we still need the work-around once 14.1.2 comes out.

We can create an issue to track that, let's discuss with Ed when he is back next week.


> For #2 I was thinking of `kubectl run`. This implicitly launches a temporary pod in the k8s cluster, and so has the advantage of using the same docker image cache as the k8s cluster. One issue is that it may need a pull secret - not sure how that'd be done.

The domain is already running when the shell script applies the resource limits, so I enhanced the script to use java weblogic.version; please see 5523347.
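A rough sketch of how the version could be extracted from `java weblogic.version` output and tested against 14.1.1.0. The sample output line is an assumption about the command's format, and `extract_wls_version` and `is_wls_14110` are hypothetical helper names; only the parameter-expansion test mirrors the check shown in the diff.

```shell
#!/usr/bin/env bash

# Prints the third whitespace-separated field of the first line that starts
# with "WebLogic Server", which is assumed to hold the version number.
extract_wls_version() {
    awk '/^WebLogic Server/ { print $3; exit }'
}

# Returns 0 when the version string contains "14.1.1.0".
# "${version#*14.1.1.0}" strips everything up to and including the first
# match, so the result differs from the input only if the substring occurs.
is_wls_14110() {
    local version="$1"
    [ "${version#*14.1.1.0}" != "$version" ]
}

# Assumed sample of what `java weblogic.version` prints; not taken from the PR.
sampleOutput='
WebLogic Server 14.1.1.0.0  Thu Mar 26 03:15:09 GMT 2020 2000885
'

version="$(printf '%s\n' "$sampleOutput" | extract_wls_version)"
echo "WebLogic Server version: ${version}"

if is_wls_14110 "$version"; then
    echo "14.1.1.0 detected: resource limits would be applied"
fi
```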

Signed-off-by: galiacheng <haixia.cheng@microsoft.com>

6ab60f0 to 5523347

Signed-off-by: galiacheng <haixia.cheng@microsoft.com>

    version=${stringArray[2]}
    echo_stdout "WebLogic Server version: ${version}"

    if [ "${version#*14.1.1.0}" != "$version" ]; then


Please include this comment:

# Temporary workaround for https://github.com/oracle/weblogic-kubernetes-operator/issues/2693

    resources:
      requests:
        cpu: "${cpu}m"
        memory: "${memoryRequest}"


As far as I can tell, we are not modifying the memoryRequest from its previous value, retrieved on line 64, though we are modifying the cpu value. Is that what we want? I thought the essence of the workaround was to increase the cpu and memory requests and limits?


Hello @edburns, per my observations 1.5Gi is enough, so this workaround will not increase the memory.
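For context, Kubernetes assigns the Guaranteed QoS class only when every container's CPU and memory limits equal its requests. The sketch below builds a JSON merge patch with identical requests and limits. The `spec.serverPod.resources` path is an assumption about where the operator's Domain resource carries these settings, the function name is hypothetical, and the 500m CPU value is purely illustrative; only the 1.5Gi memory figure comes from this thread.

```shell
#!/usr/bin/env bash

# Prints a JSON merge patch that sets requests and limits to the same values.
#   $1: CPU in millicores (number only), $2: memory quantity such as 1.5Gi
build_guaranteed_qos_patch() {
    local cpu="$1" memory="$2"
    printf '{"spec":{"serverPod":{"resources":{"requests":{"cpu":"%sm","memory":"%s"},"limits":{"cpu":"%sm","memory":"%s"}}}}}\n' \
        "$cpu" "$memory" "$cpu" "$memory"
}

# Illustrative values: the CPU figure is an assumption, not the PR's setting.
patch="$(build_guaranteed_qos_patch 500 1.5Gi)"
echo "$patch"

# The patch could then be applied to the domain resource with a merge patch,
# for example through kubectl patch with --type merge (not executed here).
```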

    export constClusterName='cluster-1'
    export constClusterT3AddressEnvName="T3_TUNNELING_CLUSTER_ADDRESS"
    export constDefaultJavaOptions="-Dlog4j2.formatMsgNoLookups=true -Dweblogic.StdoutDebugEnabled=false" # the java options will be applied to the cluster
    export constDefaultJVMArgs="-Djava.security.egd=file:/dev/./urandom -XX:MinRAMPercentage=25.0 -XX:MaxRAMPercentage=50.0 " # the JVM options will be applied to the cluster


Are we sure we want to do this for all wls versions? The other script only applies to 14c, but this change applies unconditionally. Is that what we want?


Yes, you are right; we should specify -Xms256m -Xmx512m for lower versions. Thanks for catching that.
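A sketch of how the JVM arguments might be chosen per version, following this exchange: percentage-based heap sizing for 14.1.1.0 and fixed `-Xms256m -Xmx512m` otherwise. The function name `select_jvm_args` is hypothetical, and keeping the `-Djava.security.egd` option for lower versions is an assumption, not something the thread confirms.

```shell
#!/usr/bin/env bash

# Prints the JVM arguments for the given WebLogic Server version.
select_jvm_args() {
    local version="$1"
    # Assumed to be shared by all versions; the thread does not confirm this.
    local common="-Djava.security.egd=file:/dev/./urandom"
    if [ "${version#*14.1.1.0}" != "$version" ]; then
        # 14.1.1.0: size the heap relative to the container memory limit.
        echo "${common} -XX:MinRAMPercentage=25.0 -XX:MaxRAMPercentage=50.0"
    else
        # Lower versions: fixed heap bounds, as proposed in the reply above.
        echo "${common} -Xms256m -Xmx512m"
    fi
}

select_jvm_args "14.1.1.0.0"
select_jvm_args "12.2.1.4.0"
```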

    }

    /*
     * Apply resource limits to WebLogic Server 14.1.1.0.


Include this in the comment.

* Temporary workaround for https://github.com/oracle/weblogic-kubernetes-operator/issues/2693


Please review my comments before merging. I assume you will consider them and apply them if necessary.

Signed-off-by: galiacheng <haixia.cheng@microsoft.com>

…56m -Xmx512m. Signed-off-by: galiacheng <haixia.cheng@microsoft.com>

…zure into fix-wls14c-evicted

Signed-off-by: galiacheng <haixia.cheng@microsoft.com> Changes to be committed: modified: weblogic-azure-aks/src/main/arm/scripts/applyGuaranteedQos.sh modified: weblogic-azure-aks/src/main/bicep/mainTemplate.bicep modified: weblogic-azure-aks/src/main/bicep/modules/_deployment-scripts/_ds-apply-guaranteed-qos.bicep

Hello @edburns @tbarnes-us, there was a bug: querying the version from the admin pod fails because there is no admin pod for the slim image. However, there is always at least one managed server pod, since the UI definition requires at least 1 replica. I fixed it by running the command in one of the managed server pods; see 0082b26.

Description

This PR is a workaround for the pod eviction issue on WLS 14c. We discussed it in this Slack thread.

The WLS 14c admin pod is evicted after 2 to 3 days with an error like:

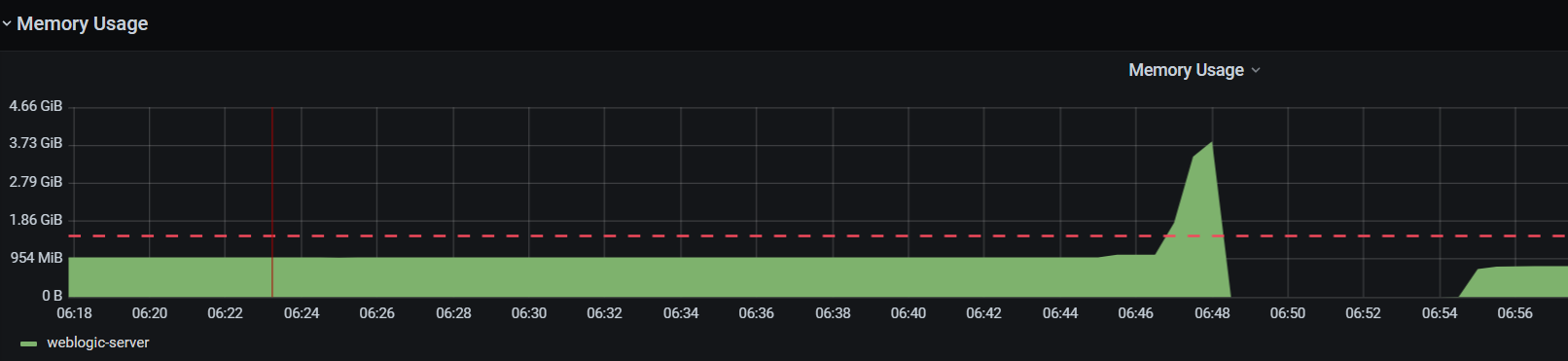

Container weblogic-server was using 3300040Ki, which exceeds its request of 1610612736.

The admin pod's memory usage suddenly exceeded the request; see the monitoring screenshot.
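The two figures in the eviction message use different units (KiB for usage, bytes for the request), so a quick conversion helps compare them. This is plain shell arithmetic on the numbers quoted above; the variable names are illustrative.

```shell
#!/usr/bin/env bash

usedKi=3300040            # usage reported in the eviction message, in KiB
requestBytes=1610612736   # memory request reported in the message, in bytes

usedBytes=$(( usedKi * 1024 ))
usedMi=$(( usedKi / 1024 ))                  # integer MiB, rounded down
requestMi=$(( requestBytes / 1024 / 1024 ))  # 1536 MiB, i.e. 1.5Gi

echo "used:    ${usedBytes} bytes (~${usedMi} MiB)"
echo "request: ${requestBytes} bytes (${requestMi} MiB)"
```

In other words, the container was using roughly twice its 1.5Gi request when it was evicted.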

I am working with Anil to find the root cause.

Per our observations, applying resources.limits resolves the issue, so we decided to apply the settings by default.

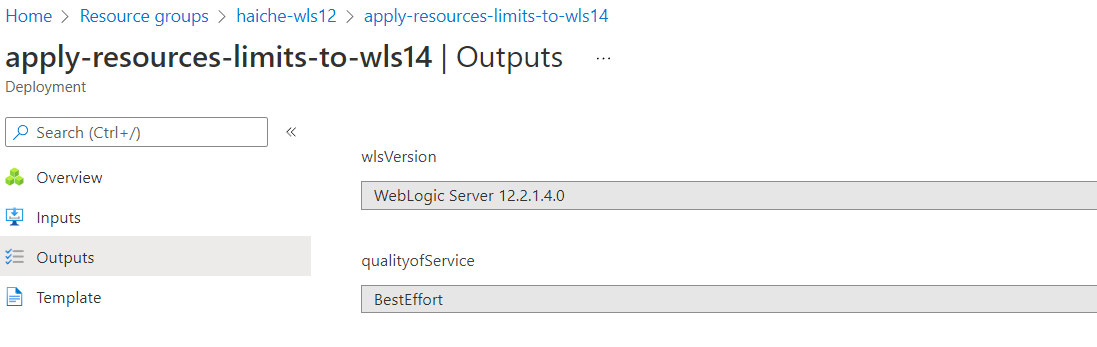

Because the issue does not occur on 12c, we agreed to apply the workaround only to 14c; applying it to other versions would only slow down the deployment.

Test

Test on WLS14c: https://github.com/galiacheng/weblogic-azure/actions/runs/1628854471

Test on WLS 12c: