This repository stores the notebooks referenced in the deep-utils repository.

For more information about deep-utils please check the link below:

deep-utils: https://github.com/pooya-mohammadi/deep_utils

This repository contains the most frequently used deep learning models and functions. deep-utils is still under heavy development, so many features may change in the future. Make sure to install the latest version from PyPI.

- About The Project

- Installation

- Vision

- Utils

- Tests

- Contributing

- License

- Collaborators

- Contact

- References

- Citation

Many deep learning toolkits are available on GitHub; however, we couldn't find one that would suit our needs. So, we created this improved one. This toolkit minimizes the deep learning teams' coding efforts to utilize the functionalities of famous deep learning models such as MTCNN in face detection, yolov5 in object detection, and many other repositories and models in various fields. In addition, it provides functionalities for preprocessing, monitoring, and manipulating datasets that can come in handy in any programming project.

What we have done so far:

- The outputs of all the models are standard NumPy arrays

- Single predict and batch predict are ready for all models

- Handy functions and tools are tested and ready to use

# pip: recommended

pip install -U deep-utils

# repository

pip install git+https://github.com/pooya-mohammadi/deep_utils.git

# clone the repo

git clone https://github.com/pooya-mohammadi/deep_utils.git deep_utils

pip install -U deep_utils

- minimal installation:

pip install deep-utils

- minimal vision installation:

pip install deep-utils[cv]

- tensorflow installation:

pip install deep-utils[tf]

- torch installation:

pip install deep-utils[torch]
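Since the project changes quickly, it can help to confirm which version pip actually installed. The following is a minimal sketch that uses only the Python standard library; the distribution name `deep-utils` is taken from the pip commands above, and the helper name `installed_version` is our own, not part of deep-utils.

```python
from importlib import metadata
from typing import Optional


def installed_version(dist_name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


# "deep-utils" is the distribution name used in the pip commands above.
version = installed_version("deep-utils")
print(version if version is not None else "deep-utils is not installed")
```

If this prints a version older than the one listed on PyPI, rerunning `pip install -U deep-utils` should bring it up to date.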

We support two subsets of models in Computer Vision.

- Face Detection

- Object Detection

We have gathered a rich collection of face detection models which are mentioned in the following list. If you notice any model missing, feel free to open an issue or create a pull request.

- After installing the library, import deep_utils and instantiate the model:

from deep_utils import face_detector_loader, list_face_detection_models

# This line will print all the available models

print(list_face_detection_models())

# Create a face detection model using MTCNN-Torch

face_detector = face_detector_loader('MTCNNTorchFaceDetector')

- The model is instantiated. Now let's detect the faces in an image:

import cv2

from deep_utils import show_destroy_cv2, Box, download_file, Point

# Download an image

download_file(
    "https://raw.githubusercontent.com/pooya-mohammadi/deep_utils/master/examples/vision/data/movie-stars.jpg")

# Load an image

img = cv2.imread("movie-stars.jpg")

# show the image. Press a button to proceed

show_destroy_cv2(img)

# Detect the faces

result = face_detector.detect_faces(img, is_rgb=False)

# Draw detected boxes on the image.

img = Box.put_box(img, result.boxes)

# Draw the landmarks

for landmarks in result.landmarks:
    Point.put_point(img, list(landmarks.logs()), radius=3)

# show the results

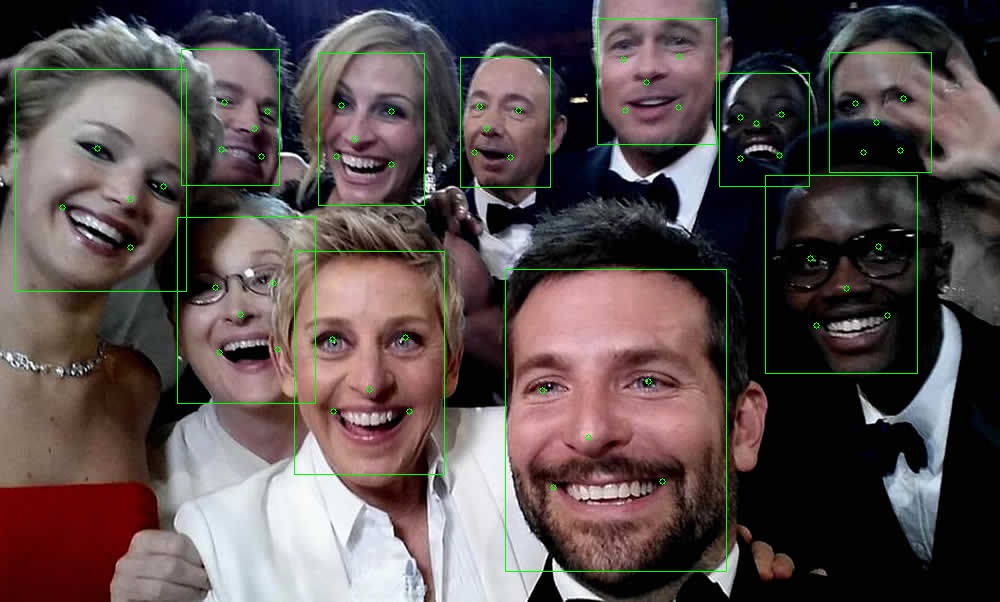

show_destroy_cv2(img)The result:

YOLOv5 is one of the most widely used object detection models. The training process is straightforward and the results are spectacular. However, using a trained model can be very challenging because of the several files that a YOLOv5 model needs in production. To tackle this issue, we have wrapped YOLOv5's models in a simple module whose usage is illustrated in the following section.

- After installing the library, import deep_utils and instantiate the model:

# import the model

from deep_utils import YOLOV5TorchObjectDetector

# instantiate with the default parameters

yolov5 = YOLOV5TorchObjectDetector()

# print the parameters

print(yolov5)

- Download and visualize the test image:

import cv2

from deep_utils import Box, download_file, Point, show_destroy_cv2

from PIL import Image

# Download an image

download_file("https://raw.githubusercontent.com/pooya-mohammadi/deep-utils-notebooks/main/vision/images/dog.jpg")

# Load an image

base_image = cv2.imread("dog.jpg")

# PIL.Image is used for visualization

Image.fromarray(base_image[...,::-1]) # convert to RGB

# visualize using OpenCV

# show_destroy_cv2(base_image)
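The `[..., ::-1]` indexing used above reverses the last (channel) axis, which turns OpenCV's BGR order into the RGB order that PIL expects. The sketch below shows this on a tiny hand-made array, so it needs only NumPy; the pixel values are made up for illustration.

```python
import numpy as np

# A hypothetical 1x2 image in BGR order: a pure-blue pixel and a pure-red pixel.
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# Reverse the channel axis: BGR -> RGB. This is a view, no pixel data is copied.
rgb = bgr[..., ::-1]

print(rgb[0, 0].tolist())  # blue pixel in RGB order: [0, 0, 255]
print(rgb[0, 1].tolist())  # red pixel in RGB order: [255, 0, 0]

# Some libraries require contiguous memory; an explicit copy provides that.
rgb_contiguous = np.ascontiguousarray(rgb)
```

Because the reversed array is a view with a negative stride, passing `np.ascontiguousarray(rgb)` is a safe choice when a consumer rejects non-contiguous input.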

- Detect and visualize Objects

# Detect the objects

# the image is opened by cv2, which results in a BGR image. Therefore, `is_rgb` is set to `False`

result = yolov5.detect_objects(base_image, is_rgb=False, confidence=0.5)

# Draw detected boxes on the image.

img = Box.put_box_text(base_image,
                       box=result.boxes,
                       label=[f"{c_n} {c}" for c_n, c in zip(result.class_names, result.confidences)])

# PIL.Image is used for visualization

Image.fromarray(img[...,::-1]) # convert to RGB

# visualize using OpenCV

# show_destroy_cv2(img)

DictNamedTuple is a custom data type in which we have added the methods of the dict type to the NamedTuple type. You have access to .get(), .values(), and .items() alongside all of the functionalities of a NamedTuple. Also, all the outputs of our models are DictNamedTuple objects, so you can modify and manipulate them easily. Let's see how to use it:

from deep_utils import dictnamedtuple

# create a new object

dict_object = dictnamedtuple(typename='letters', field_names=['firstname', 'lastname'])

# pass the values

instance_dict = dict_object(firstname='pooya', lastname='mohammadi')

# get items and ...

print("items: ", instance_dict.items())

print("keys: ", instance_dict.keys())

print("values: ", instance_dict.values())

print("firstname: ", instance_dict.firstname)

print("firstname: ", instance_dict['firstname'])

print("lastname: ", instance_dict.lastname)

print("lastname: ", instance_dict['lastname'])

# results

items: [('firstname', 'pooya'), ('lastname', 'mohammadi')]

keys: ['firstname', 'lastname']

values: ['pooya', 'mohammadi']

firstname: pooya

firstname: pooya

lastname: mohammadi

lastname: mohammadi
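To make the idea concrete, here is a minimal sketch of how dict-style access could be layered on top of `collections.namedtuple`. It is an illustration only, not the deep-utils implementation; the factory name `dict_like_namedtuple` and the class name `DictLike` are hypothetical.

```python
from collections import namedtuple


def dict_like_namedtuple(typename, field_names):
    """Build a namedtuple subclass that also supports dict-style access."""
    base = namedtuple(typename, field_names)

    class DictLike(base):
        __slots__ = ()  # keep instances as lightweight as plain tuples

        def __getitem__(self, key):
            # String keys look up fields by name; ints and slices behave like a tuple.
            if isinstance(key, str):
                if key not in self._fields:
                    raise KeyError(key)
                return getattr(self, key)
            return super().__getitem__(key)

        def keys(self):
            return list(self._fields)

        def values(self):
            return list(self)

        def items(self):
            return list(zip(self._fields, self))

        def get(self, key, default=None):
            return getattr(self, key) if key in self._fields else default

    DictLike.__name__ = typename
    return DictLike


Letters = dict_like_namedtuple("letters", ["firstname", "lastname"])
person = Letters(firstname="pooya", lastname="mohammadi")
print(person.items())       # [('firstname', 'pooya'), ('lastname', 'mohammadi')]
print(person["firstname"])  # pooya
print(person[1])            # mohammadi
```

Subclassing the generated namedtuple keeps positional indexing and unpacking intact while adding name-based lookup, which is the combination the text above describes.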

Tests are run on Python 3.8 and 3.9. deep-utils will probably run without errors on lower versions as well.

Note: Model tests are run on CPU devices provided by GitHub Actions. GPU-based models are tested manually by the authors.

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would improve this toolkit, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a ⭐️! Thanks again!

- Fork the Project

- Create your Feature Branch (git checkout -b feature/AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin feature/AmazingFeature)

- Open a Pull Request

Distributed under the MIT License. See LICENSE for more information.

The LICENSE of each model is located inside its corresponding directory.

- Pooya Mohammadi Kazaj

- Vargha Khallokhi

- Dorna Sabet

- Menua Bedrosian

- Alireza Kazemipour

Pooya Mohammadi:

- LinkedIn: www.linkedin.com/in/pooya-mohammadi

- Email: pooyamohammadikazaj@gmail.com

Project Link: https://github.com/pooya-mohammadi/deep_utils

- Tim Esler's facenet-pytorch repo: https://github.com/timesler/facenet-pytorch

Please cite deep-utils if it helps your research. You can use the following BibTeX entry:

@misc{deep_utils,

title = {deep_utils},

author = {Mohammadi Kazaj, Pooya},

howpublished = {\url{github.com/pooya-mohammadi/deep_utils}},

year = {2021}

}