-

-

Notifications

You must be signed in to change notification settings - Fork 3.5k

Much faster picture taking on Android, configurable #2505

base: master

Are you sure you want to change the base?

Conversation

…picture taking on Android

|

There is a world of difference between <200ms and ~2 seconds in how a user experiences photo capture in an app. Thanks so much for the contribution! Could you please mention which device your benchmark was performed on? |

|

@bkDJ Values were obtained on a Nokia 7.1 Android one. |

|

This is very interesting. Is there a lot of overhead for having the preview running? Also, was the measured time done with Camera1 implementation? Camera1 is quite fast right now, almost instant with the latest changes that removed unnecessary focus attempts. |

|

@cristianoccazinsp The overhead here comes from processing the frame preview (YUV to RGB and resizing). The overhead is minimal in eco and fast modes, bigger in boost. The proposed implementation works with Camera1 only. |

|

@Boris-c thanks for the update! My question about the overhead was mostly about the phone being usable (at least on average phones) while the preview is running. I have an application that overlays a bunch of stuff on top of the camera and it is critical for the UI/JS code to remain efficient even with the preview running. About Camera1, I do understand it is Camera1 only. My question was, did you run the tests with master? Because I'm far from seeing a 1.7s captures even on cheap devices, more like 0.5-1s at most since it now captures right away what's on the preview. I will give this branch a test if I have time later today. |

|

@cristianoccazinsp I did all my test using release 3.4.0. At what resolution do you get 0.5-1 second per capture? |

|

@Boris-c default resolution, and I'm even doing post processing with image resizing afterwards with another library. |

|

@cristianoccazinsp I think no overhead can be perceived by the user in eco and fast modes |

|

I've been testing on a Moto G5 (a very, very slow device, I expect this to be much faster with prod build) with debug/dev mode on:

Not sure where are the crashes coming from, did I do something wrong? All crashes are due to the same. Crash log Code snippet of how I'm using the Camera: |

|

It seems your crash happens in ResolveTakenPictureAsyncTask.java, in the section of the code that handles the option I developed that improvement last year, before that option was available. skipProcessing relies on receiving a byte array in Try to remove |

|

Still getting the same crash with skipProcessing={false}. I'm using your master branch directly from github, so I should be using exactly your code. |

|

Oh, but I see, they did something wrong, they just check Try to remove the option instead of setting it to false!... |

|

It should be |

|

You are absolutely right, that's actually a good catch/bug. I now see where you were getting such high times, if skipProcessing is not used, the camera is definitely very slow, but it is quite fast with skipProcessing. It is really not Android's camera API fault's but rather all the processing done by the library itself. New times, without skipProcessing: none: 2.4 seconds (against 1.1, holy cow skipProcessing makes a huge difference) I've noticed, however, picture quality is considerably lower with anything that's not set to "none". It is darker, blurrier, and lower resolution (a few hunder kbs lower) Are you willing to do fixes so it also works with skipProcessing? Have you also tried with back/front/wide lense cameras? Your code is not up to date to master so I can't test with the camera selection options. |

|

There's also another subtlety. When using any mode != 'none', on Android 10, the camera no longer makes the capture sound. I believe Android 10 is now copying iOS and any app that uses use of the camera API is forced to make a sound. Testing on a Pixel 2 I've also got: none / no skipProcessing: 1.1 seconds Again, the picture quality is severely reduced with eco/fast/boost (mostly light and size). I've gotta say it's a good trade off if you need very quick photos. The API might get a bit complicated with so many options (skip processing, fix orientation, scan mode). |

|

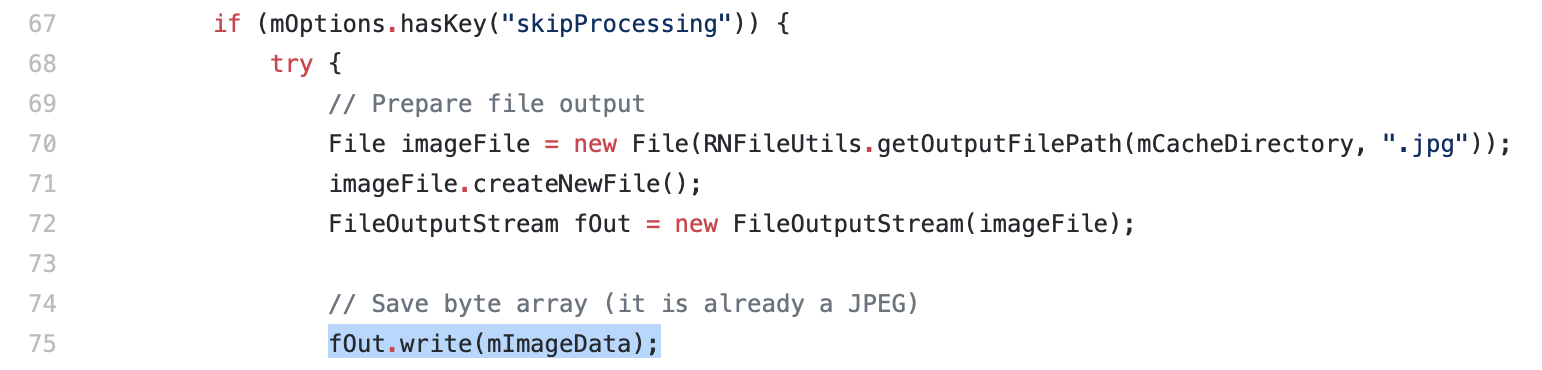

You can try, around line 75 of ResolveTakenPictureAsyncTask.java: ... as a replacement of ... (that's to test skipProcessing with eco, fast or boost) |

|

As for the quality of the picture, no idea. I get very good pictures on my device. |

|

Most likely related that one mode takes the picture from the camera, and another one just captures from the preview. The preview is always lower quality than the final capture. The change above fixes the crash with skipProcessing which is good. Do you think you can add that fix to your branch, and rebase from master to include all the latest updates? I would also definitely mention the differences when using any of those scan modes. Picture quality might suffer, there will be no shutter sound performed automatically by Android, and there might be a slight overhead. |

…act-native-camera * 'master' of https://github.com/react-native-community/react-native-camera: chore(release): 3.6.0 [skip ci] feat(android): Support to enumerate and select Camera devices (react-native-camera#2492) chore(release): 3.5.0 [skip ci] fix(android): Update Camera1 to not crash on invalid ratio (react-native-camera#2501) feat(ios): videoBitrate option for iOS (react-native-camera#2504) Fix jitpack.io maven link (react-native-camera#2497) Use a more appropriate orientation change event

…setting it to true

|

I didn't rebase, since I didn't put my changes in a branch. But I pulled from master. Then I implemented the changes needed to get the image as base64 even in "skipProcessing" mode, and allowed deactivating file saving in that mode. I also fixed the check of Regarding the missing shutter sound you mentioned, there is a prop, |

|

Last thing, we have an Android app used by hundreds of people (for research), who uploaded thousands of pictures of their food in various light conditions and with a whole range of devices, and we didn't notice any problems with picture quality in real life, using the implementation proposed in that PR. |

|

Thanks for the updates @Boris-c !. About picture quality, the difference is really not that significant. It becomes more obvious when using the focus feature since that makes a whole lot of changes to the lightning capture. Again, I think the difference is not too significant, but if you test it is noticeable and will vary from device to device based on the camera features. I'm still not sure where the difference comes from, but is definitely there! |

|

I don't have experience with telephoto and ultra wide cameras, nor did I test the front/back camera switch. But the code mainly hooks in I didn't do a side by side comparison like you did on Android. But I'll do one now and post it. |

|

Apparently, the same thing happens as on Android, i.e. the lack of post-processing is visible. It's currently very dark in my home, and the difference is noticeable when the picture is zoomed. The good news is that the similarity on both platforms will make it easier for the documentation ;) |

|

That's good to know. Do you have any timing samples? Remember to use skipProcessing as well since that flag makes a huge difference. |

|

Hey @Boris-c , I've found a few issues while testing on an iPhone 7.

Black photos on the first picture that is taken. Back and front cameras: |

|

Hi @cristianoccazinsp, here are my comments:

In Should I rewrite the logic here, I'd rather have a function that checks the need for the video data output, does the job of setting up a new one or removing an existing one, and call it from different places. The code that logs "failing real check" probably shouldn't be there in the first place, since didOutputSampleBuffer should never be called unless we want it to be called.

|

|

Hi @Boris-c , I'm not sure what's the best way to fix the "failing real check" issue. I personally don't quite understand the logic behind text/barcode recognition neither nor why those checks are needed. It would be great if you could fix it. The black screen, really happens all the time. Can you try to reproduce it? Make sure you're using all the camera features (camera switching, zoom, white balance, touch to focus, video recording, etc.). I'm also using |

|

@Boris-c sorry for the spam!. I've taken a video that shows the issue of a black screen. Now that you've added sound, it is even stranger! Looks like the picture is taken twice, but it is not the code or me taking the picture twice since you can see only one picture is added, and the same code captures only once with "none" mode. Even stranger, the issue doesn't happen when in landscape mode! "fast mode" on: "none" mode: Second issue. The pictures taken with this mode do not seem to be using the capture preset configuration very well. On the videos above, I'm also testing capturing a photo with the default settings, but also with the "High" UPDATE: Looks like the iOS code crops the image to match the preview size here (https://github.com/react-native-community/react-native-camera/blob/master/ios/RN/RNCamera.m#L670). This would explain the difference in image sizes when using one method or the other. "fast mode" / default and "High" pictureSize (look at the lamp): "none mode" / default and high pictureSize: RN Camera code that was used: |

* commit '0158ebfd39e053cdaf2385e7088074b375fa268d': chore(release): 3.8.0 [skip ci] doc(tidelift): add tidelift for enterprise feat(android): support null object of androidPermissionOptions to avoid request window (react-native-camera#2551) feat(ci): CircleCI Fix & Optimizations (react-native-camera#2550) feat(readme): improve tidelift feat(torch): Torch fixes for iOS and a few nil checks. (react-native-camera#2543) fix(ios): Honor captureAudio flag by not requesting audio input if set to false. (react-native-camera#2542) chore(release): 3.7.2 [skip ci] fix(ios): Remove flickering due to audio input (react-native-camera#2539)

|

@cristianoccazinsp I fixed the dark image issue, that was caused by the call to set video orientation (that triggers a light, white balance and focus check by the camera apparently). I also merged 3.8.0 |

|

@Boris-c were you also able to review the other issue related to image cropping? Do you think the time difference might be really just that the original take picture does that image cropping first? If you were to add that to the fast mode, would it still be faster? I'm not sure what's the best to do there, but I'm guessing that both fast and none modes should take identical pictures. |

|

@Boris-c Also, do you have an idea if these changes for Android might cause issues if the run/stop steps are moved to non UI threads? I'm testing some changes to improve the camera startup time and deal with ANRs when coming from background here: https://github.com/react-native-community/react-native-camera/compare/master...cristianoccazinsp:android-ui-thread?expand=1 Lastly, I'm also trying to test issues on iOS related to audio interruptions, do you think these changes might break when the session is updated due to audio interruptions? Changes here: https://github.com/react-native-community/react-native-camera/compare/master...cristianoccazinsp:ios-interruptions?expand=1 |

|

I don't think the time difference comes from images not being cropped. My guess is that something bad is happening in Android's public camera API (since the Camera app itself performs much faster than Avoiding the cropping of the preview frames to what the preview itself is showing is, in my opinion, impossible. Regarding audio interruptions, no idea!... |

|

The cropping is for iOS, not android.

For Android, I think the capture speed is the same for the native camera

apps. The difference is that RN code waits for the final picture to be

returned, while native apps use all the 3 or 4 events provided by by

android and release the camera for a new picture as soon as the first event

fires, and let's the rest of the processing to work in background. At least

that's how the one from Google works.

El mar., 22 de octubre de 2019 20:57, Boris Conforty <

notifications@github.com> escribió:

… I don't think the time difference comes from images not being cropped. My

guess is that something bad is happening in Android's public camera API

(since the Camera app itself performs much faster than takePicture()).

Avoiding the cropping of the preview frames to what the preview itself is

showing is, in my opinion, impossible.

Regarding audio interruptions, no idea!...

—

You are receiving this because you were mentioned.

Reply to this email directly, view it on GitHub

<#2505>,

or unsubscribe

<https://github.com/notifications/unsubscribe-auth/ALU263ETTTIMFLQIDAMSQELQP6HNXANCNFSM4I2JRGWQ>

.

|

|

About Android, I was referring to the speed, in the sense that the huge time it takes to get the result from takePicture() is probably caused by some implementation peculiarity on Android’s side, not to the picture cropping. And when I mean the Camera app, I mean the app provided with the system, which takes pictures much faster than what we can do (in native apps and RN apps, since the bottleneck is the native call to takePicture(), in RN as well). Some while ago I benchmarked the whole process in detail, from RN’s capture call to the callback in RN. takePicture() is the bad guy in the chain. That’s how I came to the idea of capturing the frame preview. |

|

is anyone in charge on this PR ? this also happened for my app now |

|

does this solves this issue #2577? |

I believe that PR will make things a bit easier (since there's no skip processing to worry about, it was in fact @Boris-c who suggested removing it), but this PR still makes capture much faster on Android since it skips the take picture calls altogether and uses a much different approach. Still think this would be a very good addition, as long as it is not the default behaviour due to all the extra caveats it might add. |

|

It would be nice if it could be merged into the master. |

|

Why is this not being merged? It's been over an year. |

|

we need to fix conflicts and have more people testing this |

|

@sibelius are there any discussions outside this pr for what should be tested before it goes do master? I'm willing to help. |

|

looks like there are conflicts |

|

the first thing would be to create a new PR without conflicts |

|

Hi @Boris-c , why the width and height result of takePictureAsync in your version |

Summary

Android's native camera takePicture() is very slow. I wasn't happy with it and many people complain too (see #1836 for instance).

Camera1 allows capturing frame previews. By processing frame previews we can avoid using takePicture() altogether.

The previews are in YUV and need to be transformed to RGB. The proposed implementation uses a render script for fast processing.

4 modes are proposed (as a RN prop, "camera1ScanMode"): "none", "eco", "fast" and "boost".

In my app, on my device, the benchmark is the following (average of 3 pictures):

These measurements were done in React Native, from the call to

capture()to the result being received after conversion to base64 and bridge transfer. The speed increase of the proposed change, if seen at a lower level (AndroidtakePicture()vs frame previews processing), is even more dramatic.I use the library to get pictures in base64. My tests did not cover all possible uses of the library.

Note that the min SDK upgrade to version 17 is needed for the render script.

Test Plan

I'll leave it to more experienced users of the library to test every possible use case.

What's required for testing (prerequisites)?

What are the steps to reproduce (after prerequisites)?

Compatibility

Checklist

README.mdCHANGELOG.mdexample/App.js)