
Getting started with SinaraML CV

By completing this tutorial, you will create a SinaraML Server, set up 2 example ML pipelines and train a model. After training the model, you will create model images:

- A model image with the binary model artifacts, which can be extracted.

- A model image with a REST service.

SinaraML CV components run on Linux. The following software should be installed:

- Unzip

- Git

- Python 3.6 or later (standard libs and pip)

- Proprietary NVIDIA GPU driver (the nouveau driver doesn't support CUDA)

- NVIDIA Container Toolkit

- Docker

When provisioning a VM, you usually have two options: a VM with a GPU preset where the drivers are already installed, or a VM with GPU passthrough where you install the drivers yourself by following the corresponding manual. Contact your cloud or on-prem support for details.

Git: use the official manual at https://git-scm.com/download/linux

Unzip on Ubuntu and Debian:

sudo apt-get install unzip

- NVIDIA GPU driver: use the official NVIDIA installation manual for your Linux distribution at https://docs.nvidia.com/datacenter/tesla/tesla-installation-notes/index.html#abstract

Only driver version 515 or later is suitable for running SinaraML with NVIDIA CUDA support.

- NVIDIA Container Toolkit: use the official NVIDIA installation manual for your Linux distribution at https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html#installing-the-nvidia-container-toolkit

- Docker: download and install Docker using the package manager of your Linux distribution

- Official guides are available at https://docs.docker.com/engine/install/#supported-platforms

You don't need to change the default Docker container runtime to "nvidia" for SinaraML Server to work; all available GPUs are attached to the container automatically.
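Once everything is installed, a quick sanity check can save time later. The following Python sketch is a hypothetical helper, not part of SinaraML: it looks for the command-line tools this tutorial uses and, when nvidia-smi is available, compares the driver's major version against the 515 minimum stated above. It assumes the tools are exposed under their usual command names (unzip, git, docker, nvidia-smi) and does not verify the NVIDIA Container Toolkit.

```python
import shutil
import subprocess

# Command-line tools used in this tutorial (git is needed for "git clone")
REQUIRED_TOOLS = ["unzip", "git", "docker", "nvidia-smi"]
# Minimum NVIDIA driver version for running SinaraML with CUDA support
MIN_DRIVER_MAJOR = 515


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def driver_major(version):
    """Extract the major number from a driver version such as '535.104.05'."""
    return int(version.strip().split(".")[0])


def installed_driver_version():
    """Ask nvidia-smi for the driver version reported for the first GPU."""
    output = subprocess.check_output(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        universal_newlines=True,  # return str (also works on Python 3.6)
    )
    return output.splitlines()[0]


def main():
    ok = True
    missing = missing_tools()
    if missing:
        print("Not found on PATH: " + ", ".join(missing))
        ok = False
    if "nvidia-smi" not in missing:
        try:
            version = installed_driver_version()
            too_old = driver_major(version) < MIN_DRIVER_MAJOR
        except (subprocess.CalledProcessError, OSError, IndexError, ValueError):
            print("Could not read the driver version with nvidia-smi")
            ok = False
        else:
            if too_old:
                print("NVIDIA driver {} is older than {}".format(version, MIN_DRIVER_MAJOR))
                ok = False
    print("All checks passed" if ok else "Some checks failed")
    return ok


if __name__ == "__main__":
    main()
```

The script only reports problems; it does not install anything.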

Use the SinaraML CLI (https://pypi.org/project/sinaraml/) to manage the SinaraML server. Install it with pip:

pip install sinaraml

If you see a warning like this at the end of the installation process:

WARNING: The script sinara is installed in '/home/testuser/.local/bin' which is not on PATH.

Consider adding this directory to PATH or, if you prefer to suppress this warning, use --no-warn-script-location.

Add $HOME/.local/bin to your PATH environment variable to enable the CLI commands:

export PATH=$PATH:$HOME/.local/bin

You may need to reload your shell or reboot your machine after installation to enable the CLI commands.

Perform the Linux post-installation steps for Docker Engine so that SinaraML CLI commands can run without sudo:

sudo groupadd -f docker

sudo usermod -aG docker $USER

Log out and log back in so that the new group membership takes effect. After installing the SinaraML CLI, you can use the 'sinara' command in the terminal.
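To confirm that the PATH change and the docker group membership are in effect, you can run a small Python check like the one below. It is a hypothetical helper, not part of the SinaraML CLI; it assumes the default pip user install location ~/.local/bin and a group named docker, as in the commands above.

```python
import grp
import os
import shutil


def dir_on_path(directory, path_value):
    """Return True if `directory` is one of the entries of a PATH-style string."""
    wanted = os.path.normpath(os.path.expanduser(directory))
    entries = [
        os.path.normpath(os.path.expanduser(entry))
        for entry in path_value.split(os.pathsep)
        if entry  # skip empty entries such as a trailing ':'
    ]
    return wanted in entries


def in_docker_group():
    """Return True if the current process already has the 'docker' group.

    A fresh 'usermod -aG docker' only shows up here after logging in again.
    """
    try:
        docker_gid = grp.getgrnam("docker").gr_gid
    except KeyError:  # the docker group does not exist
        return False
    return docker_gid == os.getgid() or docker_gid in os.getgroups()


if __name__ == "__main__":
    print("~/.local/bin on PATH:", dir_on_path("~/.local/bin", os.environ.get("PATH", "")))
    print("'sinara' command found:", shutil.which("sinara") is not None)
    print("docker group active:", in_docker_group())
```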

First, create a SinaraML personal server. If you plan to run your server on a local desktop, execute:

sinara server create

Note

Use the --shm_size SHM_SIZE option to set the Docker shared memory size. For optimal performance, it is recommended to set it to 1/6 of the computer's RAM (the rule of thumb assumes that VRAM is about 1/2 of RAM and that the batch size should be about 1/3 of VRAM).
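The 1/6 figure follows from the two ratios in the note: 1/2 (VRAM relative to RAM) × 1/3 (batch relative to VRAM) = 1/6. The sketch below computes that value on Linux and prints a matching create command. It assumes that --shm_size accepts a Docker-style size string such as 10g; check the CLI's help output for the exact format.

```python
import os

GIB = 1024 ** 3


def total_ram_bytes():
    """Total physical memory on Linux: page size times number of pages."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")


def recommended_shm_size(ram_bytes):
    """1/6 of RAM (1/2 for VRAM x 1/3 for the batch), in whole GiB, at least 1."""
    gib = ram_bytes // 6 // GIB
    return "{}g".format(max(gib, 1))


if __name__ == "__main__":
    size = recommended_shm_size(total_ram_bytes())
    print("sinara server create --shm_size " + size)
```

Rounding down to whole gigabytes keeps the value conservative; on a 64 GiB machine it suggests 10g.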

Or, if you use a remote machine, create a SinaraML remote server:

sinara server create --platform remote

For additional details, see Remote Platform.

When asked "Please, choose a Sinara for 1) ML or 2) CV projects:", enter "2".

This selects the CV option, and your server will be able to use all available NVIDIA GPUs.

Second, start the SinaraML server:

sinara server start

Note

By default, the setup scripts that create and remove a SinaraML server use Docker volumes for data, code and temporary data. For day-to-day usage, it is recommended to map folders on the local disk instead, by running the create command with the option:

sinara server create --runMode b

You will be asked to enter the host path where the server's '/data', '/tmp' and '/work' folders will be stored.

Important

Please read Known Issues to learn how to address problems you may encounter while running pipelines.

This tutorial demonstrates a quick-start CV pipeline for training a neural network model with the mmdetection framework.

Binary Serving assumes that a BentoService containing only the model artifact files is created; the REST API of that BentoService will not be usable.

The example CV pipeline trains a YoloX neural network detector from the mmdetection framework on open data from the COCO dataset.

The model is trained on a part of the COCO validation dataset - for the following types of objects: "person", "bicycle", "car", "motorcycle", "bus", "truck".
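To make the class selection concrete, here is a sketch of how COCO-format annotations could be narrowed down to these six classes using only the standard library. It is an illustration, not the code of the Data Load or Data Preparation steps; it assumes the standard COCO instances JSON layout (images, annotations and categories lists) and drops images that contain none of the target objects.

```python
import json

# The six object types used for training in this tutorial
TARGET_CLASSES = {"person", "bicycle", "car", "motorcycle", "bus", "truck"}


def load_coco(path):
    """Read a COCO-format annotation file such as instances_val2017.json."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def filter_coco(coco, class_names=TARGET_CLASSES):
    """Keep only the categories, annotations and images of the given classes."""
    categories = [c for c in coco["categories"] if c["name"] in class_names]
    kept_category_ids = {c["id"] for c in categories}
    annotations = [a for a in coco["annotations"] if a["category_id"] in kept_category_ids]
    # Images without any target object are dropped
    kept_image_ids = {a["image_id"] for a in annotations}
    images = [img for img in coco["images"] if img["id"] in kept_image_ids]
    return {"images": images, "annotations": annotations, "categories": categories}
```

For example, filter_coco(load_coco("instances_val2017.json")) would return the reduced dataset, assuming the COCO 2017 validation annotations have been downloaded locally.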

To make training quick, only the validation part of the COCO dataset is used, because of its small size (~1 GB).

The following example shows how to build a model serving pipeline that goes from a raw dataset to an ML model Docker container without a REST API.

The ML pipeline is based on the SinaraML Framework and tools. The resulting Docker image contains only the BentoService artifacts (a BentoArchive).

The pipeline includes 5 steps, which must be run sequentially:

- Data Load

- Data Preparation

- Model Train

- Model Pack

- Model Eval
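Because each step consumes the output of the previous one, the clone-and-run routine described for each step below can be scripted. The following sketch is a hypothetical convenience script, not part of SinaraML. It assumes that it is started from a terminal inside the SinaraML server's dev Jupyter environment (where the step.dev.py scripts are run), that git is available there, and that the repositories follow the obj_detect_<pipeline>-<step> naming used in this tutorial. By default it only prints the commands; pass --run to execute them, and add rest to use the REST Serving pipeline.

```python
import subprocess
import sys
from pathlib import Path

REPO_BASE = "https://github.com/4-DS"
# Steps in the order they must run; each step uses the previous step's output
STEPS = {
    "binary": ["data_load", "data_prep", "model_train", "model_pack", "model_eval"],
    "rest": ["data_load", "data_prep", "model_train", "model_pack", "model_eval", "model_test"],
}


def plan(pipeline):
    """Return (repo_dir, [clone_cmd, run_cmd]) for every step of a pipeline."""
    result = []
    for step in STEPS[pipeline]:
        repo = "obj_detect_{}-{}".format(pipeline, step)
        clone_cmd = ["git", "clone", "--recursive", "{}/{}.git".format(REPO_BASE, repo), repo]
        run_cmd = [sys.executable, "step.dev.py"]  # same interpreter as this script
        result.append((repo, [clone_cmd, run_cmd]))
    return result


def run(pipeline, workdir):
    for repo, (clone_cmd, run_cmd) in plan(pipeline):
        if not (workdir / repo).exists():  # skip cloning on a re-run
            subprocess.run(clone_cmd, cwd=str(workdir), check=True)
        # check=True raises on a non-zero exit code, so later steps never run after a failure
        subprocess.run(run_cmd, cwd=str(workdir / repo), check=True)


if __name__ == "__main__":
    args = sys.argv[1:]
    pipeline = "rest" if "rest" in args else "binary"
    if "--run" in args:
        run(pipeline, Path.cwd())
    else:  # dry run: only print the commands
        for repo, (clone_cmd, run_cmd) in plan(pipeline):
            print(" ".join(clone_cmd))
            print("(cd {} && {})".format(repo, " ".join(run_cmd)))
```

Stopping at the first failing step mirrors the requirement that the steps run sequentially: a later step would otherwise read missing or stale outputs.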

Warning

Creating a new pipeline from these examples is not recommended, since the examples can be outdated and use an old version of the SinaraML Library. Please see the Creating New Pipeline Tutorial.

The Data Load step downloads the COCO dataset from the internet and converts it to a SinaraML Archive, which Apache Spark can later read efficiently.

This step is used to load external raw data into the platform and convert it to the correct versioned store structure (SinaraML Archive).

This step is not portable across platforms. In general, it needs to be rewritten for a specific instance of the platform.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_binary-data_load.git
cd obj_detect_binary-data_load

- Run the step:

python step.dev.py

The Data Preparation step processes the data from the Data Load step: it checks and cleans the dataset, transforms the markup, splits the samples into train, valid and test sets, and reviews the result.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_binary-data_prep.git
cd obj_detect_binary-data_prep

- Run the step:

python step.dev.py
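The train/valid/test split can be illustrated with a short, deterministic sketch. The 80/10/10 ratios and the seed are hypothetical, not the values used by the obj_detect_binary-data_prep step. The split is done over image ids, so that all annotations of one image land in the same subset.

```python
import random


def split_ids(ids, valid_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle ids deterministically and split them into train/valid/test lists."""
    if valid_frac < 0 or test_frac < 0 or valid_frac + test_frac >= 1:
        raise ValueError("fractions must be non-negative and sum to less than 1")
    shuffled = sorted(ids)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(shuffled)
    n_valid = int(len(shuffled) * valid_frac)
    n_test = int(len(shuffled) * test_frac)
    valid = shuffled[:n_valid]
    test = shuffled[n_valid:n_valid + n_test]
    train = shuffled[n_valid + n_test:]
    return train, valid, test
```

Using a fixed seed makes the split reproducible across runs, which matters when later steps compare metrics between model versions.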

The Model Train step trains a model on the sets produced by the Data Preparation step.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_binary-model_train.git
cd obj_detect_binary-model_train

- Run the step:

python step.dev.py

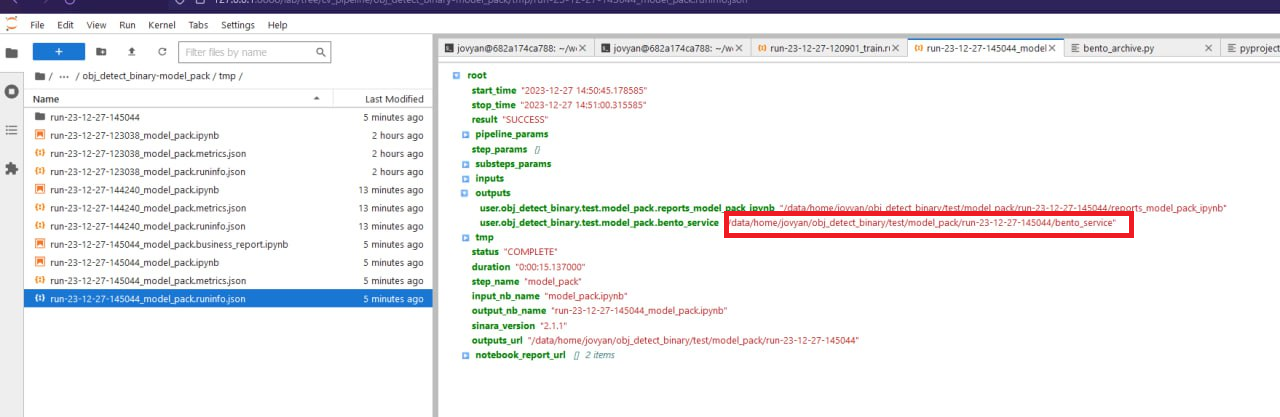

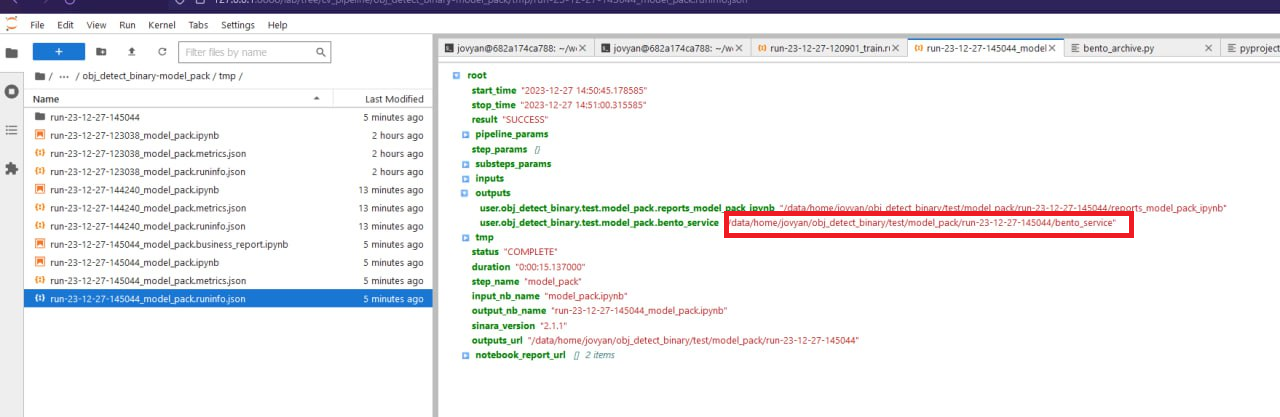

The Model Pack step packs the model into a BentoArchive using the BentoML library and SinaraML Library Binary Artifacts.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_binary-model_pack.git
cd obj_detect_binary-model_pack

- Run the step:

python step.dev.py

The Model Eval step tests the model loaded from the BentoArchive and builds metrics and graphs.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_binary-model_eval.git
cd obj_detect_binary-model_eval

- Run the step:

python step.dev.py
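Detection metrics are typically built on the Intersection over Union (IoU) between predicted and ground-truth boxes. The sketch below shows IoU and a simplified precision/recall at a single IoU threshold. It assumes boxes in (x1, y1, x2, y2) format and is not the metric code used by the Model Eval step, which may rely on mmdetection's COCO-style evaluation instead.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    intersection = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def precision_recall(pred_boxes, gt_boxes, threshold=0.5):
    """Greedy one-to-one matching at a single IoU threshold.

    pred_boxes should be sorted by confidence, highest first.
    """
    unmatched = list(gt_boxes)
    true_positives = 0
    for pred in pred_boxes:
        best = max(unmatched, key=lambda gt: iou(pred, gt), default=None)
        if best is not None and iou(pred, best) >= threshold:
            unmatched.remove(best)  # each ground-truth box can be matched only once
            true_positives += 1
    precision = true_positives / len(pred_boxes) if pred_boxes else 0.0
    recall = true_positives / len(gt_boxes) if gt_boxes else 0.0
    return precision, recall
```

Metrics such as mAP repeat this matching over many thresholds and confidence levels; the single-threshold version only shows the underlying idea.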

After running all 5 steps, we are ready to build a Docker image without a REST API. The model image acts as an archive that holds the BentoService with its artifacts, which we can later extract and use.

To build the model image:

- Make sure the SinaraML CLI is installed

- Create a Docker image for the model service by executing:

sinara model containerize

- Enter the path to the BentoService's model.zip inside your running dev Jupyter environment

- Enter the URL of the repository to push the image to (enter "local" to use the local Docker repository on the machine where Docker is installed)

- When the docker build command finishes, it outputs the model image name

To extract the artifacts from the image to a folder on the host system, run:

sinara model extract_artifacts

When prompted, enter the Docker image's full name with tag and the path where the extracted artifacts should be placed.
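Because the extract command expects the image's full name with tag, it can help to check a name before pasting it. The following sketch uses hypothetical helpers, not part of SinaraML: it splits a Docker image reference into name and tag, treating a colon before the last slash as a registry port rather than a tag separator, and uses docker image inspect to check whether the image exists locally. References that use @sha256 digests are not handled.

```python
import subprocess


def split_image_reference(reference):
    """Split 'registry[:port]/repo:tag' into (name, tag); tag is None if absent."""
    last_slash = reference.rfind("/")
    last_colon = reference.rfind(":")
    # A colon before the last slash belongs to a registry port, not to a tag
    if last_colon > last_slash:
        return reference[:last_colon], reference[last_colon + 1:]
    return reference, None


def has_explicit_tag(reference):
    """True if the reference carries a non-empty tag."""
    return split_image_reference(reference)[1] not in (None, "")


def image_exists_locally(reference):
    """True if 'docker image inspect' finds the image in the local Docker store."""
    try:
        result = subprocess.run(
            ["docker", "image", "inspect", reference],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
        )
    except FileNotFoundError:  # docker is not installed
        return False
    return result.returncode == 0
```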

Please read Known Issues to learn how to address problems you may encounter while running pipelines.

This tutorial demonstrates a quick-start CV pipeline for training a neural network model with the mmdetection framework.

REST Serving assumes that a BentoService with model artifacts and a REST API is created. The REST Serving tutorial is based on the Binary Serving tutorial, and its first 3 steps (data_load, data_prep and model_train) are almost the same.

Warning

Creating a new pipeline from these examples is not recommended, since the examples can be outdated and use an old version of the SinaraML Library. Please see the Creating New Pipeline Tutorial.

The Data Load step downloads the COCO dataset from the internet and converts it to a SinaraML Archive, which Apache Spark can later read efficiently.

This step is used to load external raw data into the platform and convert it to the correct versioned store structure (SinaraML Archive).

This step is not portable across platforms. In general, it needs to be rewritten for a specific instance of the platform.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_rest-data_load.git
cd obj_detect_rest-data_load

- Run the step:

python step.dev.py

The Data Preparation step processes the data from the Data Load step: it checks and cleans the dataset, transforms the markup, splits the samples into train, valid and test sets, and reviews the result.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_rest-data_prep.git
cd obj_detect_rest-data_prep

- Run the step:

python step.dev.py

The Model Train step trains a model on the sets produced by the Data Preparation step.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_rest-model_train.git
cd obj_detect_rest-model_train

- Run the step:

python step.dev.py

The Model Pack step packs the model into a BentoService using the BentoML library and SinaraML Library ONNX Artifacts.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_rest-model_pack.git
cd obj_detect_rest-model_pack

- Run the step:

python step.dev.py

The Model Eval step tests the model loaded from the BentoService and builds metrics and graphs.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_rest-model_eval.git
cd obj_detect_rest-model_eval

- Run the step:

python step.dev.py

The Model Test step tests the REST API of the BentoService.

To run the step:

- Clone the git repository:

git clone --recursive https://github.com/4-DS/obj_detect_rest-model_test.git
cd obj_detect_rest-model_test

- Run the step:

python step.dev.py

Important

The following commands should be executed in the host terminal.

After running all 6 steps, we are ready to build a Docker image with a REST API.

To build the model image:

- Make sure the SinaraML CLI is installed

- Create a Docker image for the model service by executing:

sinara model containerize

- Enter the path to the BentoService's model.zip inside your running dev Jupyter environment

- Enter the URL of the repository to push the image to (enter "local" to use the local Docker repository on the machine where Docker is installed)

- When the docker build command finishes, it outputs the model image name

TODO: run model service container and call REST method from python test file
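Until that part is written, the following sketch shows one possible way to call the service from Python using only the standard library. Everything service-specific in it is an assumption: that the container was started with something like docker run --rm -p 5000:5000 followed by the image name printed by sinara model containerize (5000 is the default port of BentoML 0.x API servers), that the API is named predict, and that it accepts raw image bytes. Check the model service's own API documentation for the real values.

```python
import urllib.request

# Hypothetical values: adjust them to match your model service
BASE_URL = "http://localhost:5000"
API_NAME = "predict"


def build_request(image_bytes, base_url=BASE_URL, api_name=API_NAME):
    """Build (without sending) a POST request that carries raw image bytes."""
    return urllib.request.Request(
        url="{}/{}".format(base_url.rstrip("/"), api_name),
        data=image_bytes,
        headers={"Content-Type": "application/octet-stream"},
        method="POST",
    )


def call_service(image_path, timeout=30.0):
    """Send an image file to the model service and return the raw response body."""
    with open(image_path, "rb") as f:
        request = build_request(f.read())
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()
```

For example, call_service("street.jpg") would send a local test image (the file name is hypothetical); the format of the returned body depends on how the model service was packed.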

To create your own pipeline, please read the Creating New Pipeline Tutorial.