
Improve Mean Average Precision performance #1389


wow, very nice boost!

I marked it as WIP for now because there is still something wrong with it. The initial results could be wrong due to an internal error that was not picked up, for instance some tensors being on the CPU and others on the GPU. I'm looking into it right now.


My apologies, @Borda. It seems that when I rebased onto master and force-pushed, a lot of unrelated changes ended up in the PR, so it now looks a bit overwhelming to review. It could also have been caused by the pre-commit hooks I ran.


@wilderrodrigues no problem :)


Just a quick update on this PR:


Can this one get some love, @Borda @SkafteNicki @justusschock @tchaton? :)


@wilderrodrigues sorry for the long turnaround on this review. Your PR looks good to me!

Thanks, @Borda. I've also been a bit away in the last few days, with a lot of work to catch up on before the holiday season. I will rebase the PR and wait for the merge to happen. :)


One more approval and we can merge this one, @Borda.

could we pls first fix the failing tests :) |

Oh yeah, sure. I haven't seen them. |


Hi @wilderrodrigues 👋 Out of curiosity: any chance you could run a substantial mAP calculation through py-spy and post the flamegraph here?

That's really great!

Hi @ddelange, sure. I'm busy with some last speed improvements and will run it once I push the final commits.


We can split it out into another PR, let's just get this green... last one is:


@wilderrodrigues, we value your work and thank you for your contribution 💜 could you pls revisit this PR with respect to the recently merged revert to COCO implementation #1327 and setting the torch version as an Internal option? 🐰

Pull request was converted to draft


Once again, thank you for all your effort! 💜

What does this PR do?

Fixes #1024

Update: The initial PR reported incorrect running times because of a silent issue: runs were being killed because some tensors were on a different device (CPU instead of GPU, and vice versa). The changes are still worthwhile, as performance improved considerably.
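Because the device mismatch described above went unnoticed until it killed the run, a cheap guard before any computation would have made it visible right away. A minimal sketch, assuming the list-of-dicts input format (`boxes`, `scores`, `labels`) that `MeanAveragePrecision` takes; `check_same_device` is a hypothetical helper, not part of torchmetrics.

```python
from typing import Dict, List

import torch


def check_same_device(
    preds: List[Dict[str, torch.Tensor]],
    target: List[Dict[str, torch.Tensor]],
) -> torch.device:
    """Return the single device shared by every input tensor, or raise ValueError.

    Hypothetical validation helper: it reports a CPU/GPU mix immediately with a
    readable message instead of letting it fail later inside the metric.
    """
    locations = {}
    for side, items in (("preds", preds), ("target", target)):
        for i, item in enumerate(items):
            for key, tensor in item.items():
                locations[f"{side}[{i}]['{key}']"] = tensor.device
    unique_devices = set(locations.values())
    if len(unique_devices) > 1:
        details = ", ".join(f"{name} on {dev}" for name, dev in locations.items())
        raise ValueError(f"Expected all tensors on one device, got: {details}")
    # Fall back to the CPU when no tensors were passed at all.
    return unique_devices.pop() if unique_devices else torch.device("cpu")
```

Returning the shared device also gives the caller a single value to pass as `device=` for any tensors it allocates afterwards.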

It seems that we faced a regression between version 0.6.0 and the latest releases. With the refactor away from pycocotools and the addition of new operations, the appropriate device was no longer being used for all tensors. That behaviour led to unnecessary transfers of computation between GPU and CPU.
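The regression pattern is easy to reproduce: by default, any `torch.tensor(...)`, `torch.zeros(...)` or `torch.arange(...)` call without `device=` allocates on the CPU, even when the inputs live on the GPU. The sketch below shows the fix in a hypothetical `flatten_detections` helper (illustrative only, not the actual torchmetrics code): every new tensor takes its device from the inputs.

```python
from typing import List, Tuple

import torch


def flatten_detections(scores_per_image: List[torch.Tensor]) -> Tuple[torch.Tensor, torch.Tensor]:
    """Concatenate per-image scores and record which image each score came from.

    Hypothetical helper; assumes a non-empty list of 1-D tensors on one device.
    """
    device = scores_per_image[0].device
    scores = torch.cat(scores_per_image)
    # Omitting device= below would allocate on the CPU; combining those tensors
    # with GPU inputs then either raises a device-mismatch error or forces an
    # extra host/device transfer.
    counts = torch.tensor([s.numel() for s in scores_per_image], device=device)
    image_idx = torch.repeat_interleave(
        torch.arange(len(scores_per_image), device=device), counts
    )
    return scores, image_idx
```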

Based on the code provided in the discussion of issue #1024, I also added a couple of unit tests covering computation on GPUs and CPUs. Since the results might vary slightly depending on the GPU/CPU model, I added a relative tolerance of 1.0 to cover the gap.
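A minimal sketch of such a CPU/GPU consistency check, assuming a simplified all-point interpolated AP for a single class as a stand-in for the full metric; `average_precision` and `check_ap_across_devices` are hypothetical names. `torch.testing.assert_close` accepts a result when |actual - expected| <= atol + rtol * |expected|, so an `rtol` of 1.0 lets the two results differ by as much as the expected value itself. The tighter defaults used here are an assumption and may need loosening for real GPU kernels.

```python
import torch


def average_precision(scores: torch.Tensor, is_tp: torch.Tensor, num_gt: int) -> torch.Tensor:
    """All-point interpolated AP for one class (simplified stand-in, assumes num_gt > 0)."""
    order = torch.argsort(scores, descending=True)
    tp = is_tp[order].to(torch.float64)
    tp_cum = torch.cumsum(tp, dim=0)
    fp_cum = torch.cumsum(1.0 - tp, dim=0)
    precision = tp_cum / (tp_cum + fp_cum)
    recall = tp_cum / num_gt
    # Precision envelope: running maximum taken from the highest recall backwards.
    precision = torch.flip(torch.cummax(torch.flip(precision, dims=[0]), dim=0).values, dims=[0])
    # Sum the precision times the recall gained at each detection.
    recall_prev = torch.cat([recall.new_zeros(1), recall[:-1]])
    return torch.sum((recall - recall_prev) * precision)


def check_ap_across_devices(rtol: float = 1e-6, atol: float = 1e-9, seed: int = 0) -> None:
    """Compare the CPU result with the CUDA result when a GPU is available."""
    generator = torch.Generator().manual_seed(seed)
    scores = torch.rand(1000, generator=generator)
    is_tp = torch.rand(1000, generator=generator) > 0.5
    num_gt = int(is_tp.sum()) + 10  # some ground truths are never matched
    expected = average_precision(scores, is_tp, num_gt)
    if torch.cuda.is_available():
        actual = average_precision(scores.cuda(), is_tp.cuda(), num_gt).cpu()
        # Passes when |actual - expected| <= atol + rtol * |expected|.
        torch.testing.assert_close(actual, expected, rtol=rtol, atol=atol)
```

On a CPU-only machine the check simply computes the reference value; the comparison runs only where CUDA is present.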

Some results:

Before (GPU):

After (GPU):

As has been pointed out, these computations might be faster when performed on the CPU. The important part is making sure that tensors are allocated on the proper device. Running the same tests on the CPU gave the following results:
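Deciding whether the CPU or the GPU is faster for a given workload requires timing that accounts for CUDA's asynchronous execution. A minimal sketch, using a hand-written pairwise `box_iou` as a stand-in workload (an assumption; the metric's real hot path may differ) and a hypothetical `time_on_device` helper:

```python
import time
from typing import Callable

import torch


def box_iou(boxes_a: torch.Tensor, boxes_b: torch.Tensor) -> torch.Tensor:
    """Pairwise IoU between (N, 4) and (M, 4) boxes in (x1, y1, x2, y2) format."""
    area_a = (boxes_a[:, 2] - boxes_a[:, 0]) * (boxes_a[:, 3] - boxes_a[:, 1])
    area_b = (boxes_b[:, 2] - boxes_b[:, 0]) * (boxes_b[:, 3] - boxes_b[:, 1])
    top_left = torch.max(boxes_a[:, None, :2], boxes_b[None, :, :2])
    bottom_right = torch.min(boxes_a[:, None, 2:], boxes_b[None, :, 2:])
    wh = (bottom_right - top_left).clamp(min=0)
    inter = wh[..., 0] * wh[..., 1]
    return inter / (area_a[:, None] + area_b[None, :] - inter)


def time_on_device(fn: Callable[..., torch.Tensor], *tensors: torch.Tensor,
                   device: str, repeats: int = 10) -> float:
    """Average wall-clock seconds per call of fn(*tensors) with all inputs on `device`."""
    dev = torch.device(device)
    moved = [t.to(dev) for t in tensors]  # transfer once, outside the timed loop
    fn(*moved)  # warm-up call (CUDA context and kernel initialisation)
    if dev.type == "cuda":
        torch.cuda.synchronize()  # kernels run asynchronously; wait before starting the clock
    start = time.perf_counter()
    for _ in range(repeats):
        fn(*moved)
    if dev.type == "cuda":
        torch.cuda.synchronize()  # make sure every timed kernel has finished
    return (time.perf_counter() - start) / repeats


if __name__ == "__main__":
    boxes = torch.rand(1000, 4)
    boxes[:, 2:] += boxes[:, :2]  # ensure x2 >= x1 and y2 >= y1
    devices = ["cpu"] + (["cuda"] if torch.cuda.is_available() else [])
    for name in devices:
        print(f"{name}: {time_on_device(box_iou, boxes, boxes, device=name) * 1e3:.2f} ms per call")
```

Moving the inputs once before the timed loop keeps host/device copies out of the measurement, so the numbers reflect compute only, which is the kind of cost this PR is trying to isolate.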

I will run some tests with our production model and share the deltas here.

Before submitting

PR review

Anyone in the community is free to review the PR once the tests have passed.

If we didn't discuss your PR in GitHub issues, there's a high chance it will not be merged.

Did you have fun?

Yep! This was fun. After trying different versions and commit hashes, I just decided to clone, fix and push the PR. It will help us a lot and I'm sure it will help out the community.

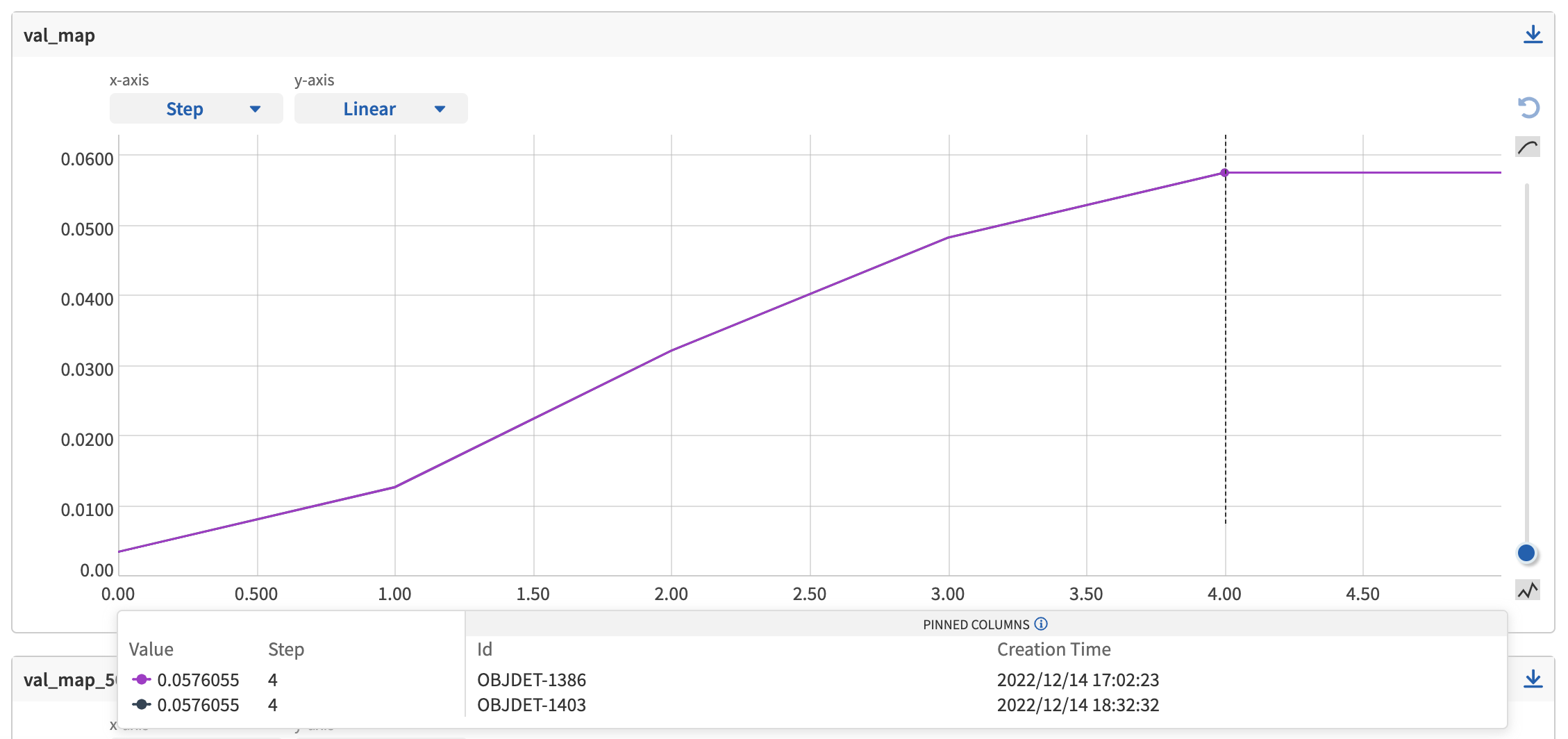

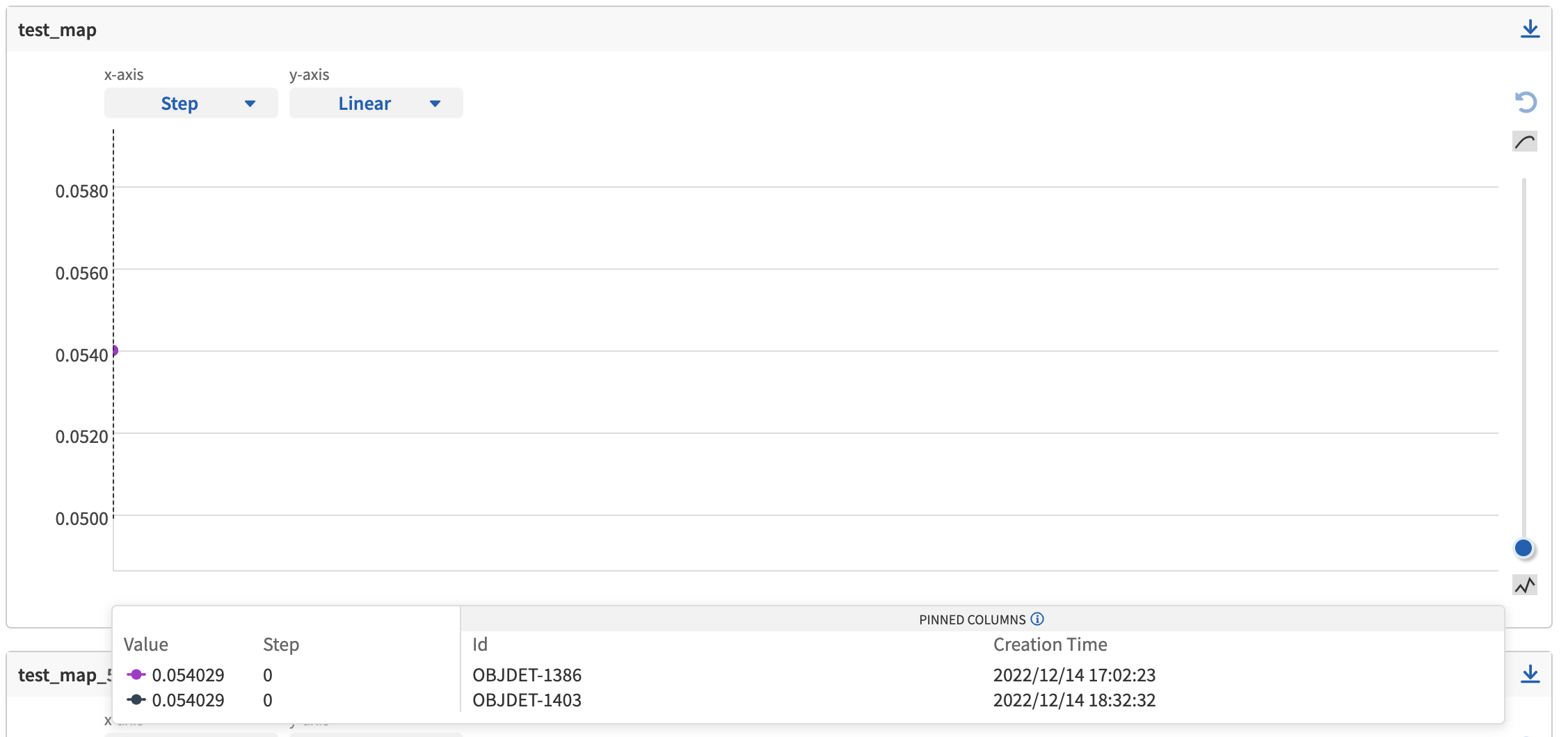

Test with real data

The pink line shows the same run using the master branch (which is already faster than the latest 0.11 release).

Ignore the flat line at the end of each run. That's just PyCharm keeping the GPU busy until I clicked stop.