Description

Environment

TensorRT Version: 7.1.3.0

GPU Type:

Nvidia Driver Version:

CUDA Version: 10.2.89

CUDNN Version: 8.0

Operating System + Version: Jetson Xavier, Ubuntu 18.04, JetPack 4.4 (BOARD: t168ref, EABI: aarch64)

Python Version (if applicable): 3.6

TensorFlow Version (if applicable):

PyTorch Version (if applicable): 1.7.0

Baremetal or Container (if container which image + tag):

Description

Output mismatch between PyTorch and TensorRT predictions.

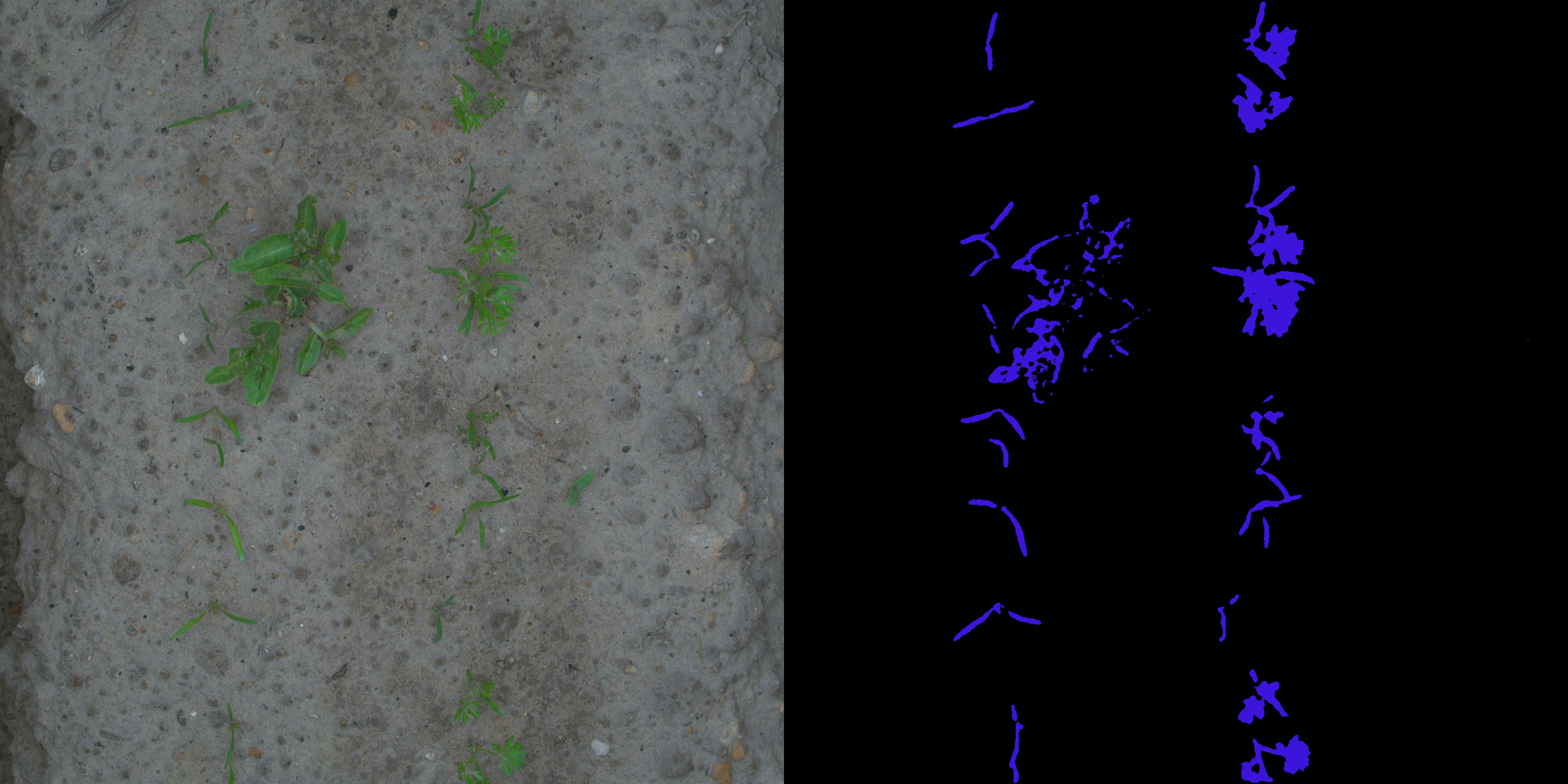

I have trained a semantic segmentation model consisting of a MobileNetV2 encoder and an aspp_residual decoder. The PyTorch outputs don't match the outputs I get after converting the ONNX model to TensorRT. PyTorch produces correct predictions, but the TensorRT predictions are all the same. I have compared the PyTorch and ONNX outputs using ONNX Runtime at several tolerance values, and they appear to match.
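For anyone re-running the comparison, the tolerance check described above can be sketched roughly as follows. This is a minimal NumPy-only illustration, not the script from the Drive folder: the function name `compare_at_tolerances` and the synthetic arrays are hypothetical stand-ins for the real PyTorch and ONNX Runtime outputs, and using the same value for both `rtol` and `atol` is an assumption.

```python
import numpy as np

def compare_at_tolerances(reference, candidate, tolerances=(1e-1, 1e-2, 1e-3)):
    """Check whether two output tensors agree at each tolerance.

    Hypothetical helper: returns ({tolerance: bool}, max_abs_diff).
    The same value is used for rtol and atol (an assumption).
    """
    reference = np.asarray(reference, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    if reference.shape != candidate.shape:
        raise ValueError(f"shape mismatch: {reference.shape} vs {candidate.shape}")
    max_abs_diff = float(np.max(np.abs(reference - candidate)))
    results = {
        tol: bool(np.allclose(reference, candidate, rtol=tol, atol=tol))
        for tol in tolerances
    }
    return results, max_abs_diff

# Synthetic stand-ins for the real model outputs (N, C, H, W).
rng = np.random.default_rng(0)
pytorch_out = rng.standard_normal((1, 3, 8, 8))
onnx_out = pytorch_out + 5e-3  # simulate a small numerical drift

results, max_abs_diff = compare_at_tolerances(pytorch_out, onnx_out)
for tol, ok in results.items():
    print(f"tolerance {tol:g}: {'match' if ok else 'MISMATCH'}")
print(f"max abs diff: {max_abs_diff:.6f}")
```

Reporting the maximum absolute difference alongside the pass/fail flags makes it easier to see how far apart two backends are, rather than only whether they cross a threshold.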

Relevant Files

I am attaching the predictions from PyTorch and TensorRT, along with the scripts, prediction images, and trained models needed to re-run the model. Also attached are the results of the PyTorch vs. ONNX comparison (for three tolerance values: 1e-1, 1e-2, and 1e-3).

Drive Link: https://drive.google.com/drive/folders/1RG2kWEpROgFQI7YbQBig13Rozi41RHr0?usp=sharing

Steps To Reproduce

- PyTorch prediction: python infer_img -l logs_pytorch/ -p models -i carot_2074.jpg --backend pytorch

- TensorRT prediction: python infer_img -l logs_tensorrt/ -p models -i carot_2074.jpg --backend tensorrt
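Once both commands have been run, one way to quantify the "all the same" symptom is to compare the per-pixel class maps from the two backends. The sketch below assumes each backend's raw output is available as a `(num_classes, H, W)` array of logits; that layout, the helper names `summarize_segmentation` and `pixel_agreement`, and the tiny synthetic arrays are all hypothetical and not taken from the attached scripts.

```python
import numpy as np

def summarize_segmentation(logits):
    """Return the argmax class map and a {class_id: pixel_count} histogram.

    Assumes logits has shape (num_classes, H, W).
    """
    class_map = np.argmax(logits, axis=0)
    classes, counts = np.unique(class_map, return_counts=True)
    return class_map, dict(zip(classes.tolist(), counts.tolist()))

def pixel_agreement(map_a, map_b):
    """Fraction of pixels where two class maps predict the same class."""
    return float(np.mean(map_a == map_b))

# Synthetic stand-in for a healthy prediction: three classes on a 4x4 image.
expected = np.array([[0, 0, 1, 1],
                     [0, 0, 1, 1],
                     [2, 2, 1, 1],
                     [2, 2, 1, 1]])
pytorch_logits = np.eye(3)[expected].transpose(2, 0, 1)  # one-hot logits, (3, 4, 4)

# Synthetic stand-in for a degenerate prediction: class 1 at every pixel.
trt_logits = np.zeros((3, 4, 4))
trt_logits[1] = 1.0

pt_map, pt_hist = summarize_segmentation(pytorch_logits)
trt_map, trt_hist = summarize_segmentation(trt_logits)

print("PyTorch class histogram: ", pt_hist)
print("TensorRT class histogram:", trt_hist)
print("TensorRT output is constant:", len(trt_hist) == 1)
print("pixel agreement:", pixel_agreement(pt_map, trt_map))
```

A histogram with a single class on the TensorRT side, together with a low agreement score, would confirm that the engine output is degenerate rather than merely offset by small numerical differences.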

Comparison

PyTorch Prediction

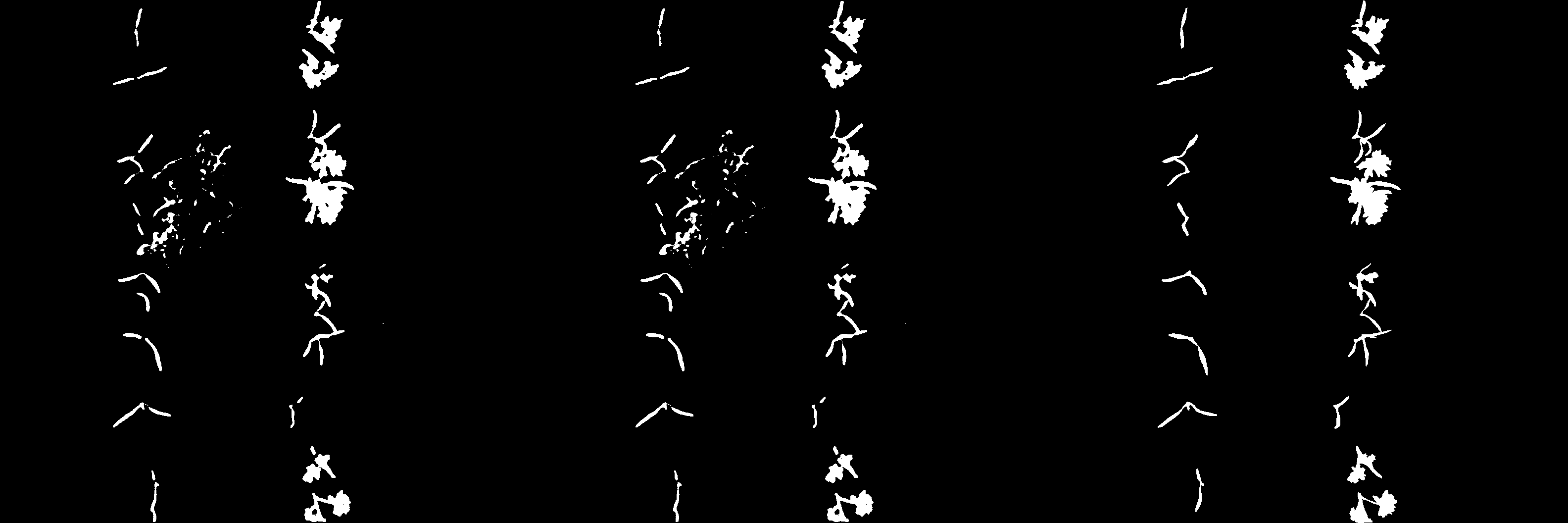

PyTorch vs. ONNX vs. ground truth predictions (1e-1 tolerance)