Description

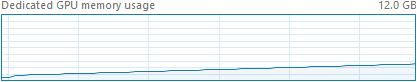

The GPU memory footprint of the program keeps growing when running a strided, 'VALID'-padded convolution with a dynamic input shape.

Environment

TensorRT Version: 7.0.0.11

GPU Type: Titan X

Nvidia Driver Version: 436.30

CUDA Version: 10.0

CUDNN Version: 7.6.3.30

Operating System + Version: Windows 10 1903

Python Version (if applicable): 3.7.6

TensorFlow Version (if applicable): 1.14.0

PyTorch Version (if applicable): N/A

Baremetal or Container (if container which image + tag): N/A

Relevant Files

Steps To Reproduce

- Create a TF model

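```python
import tensorflow as tf  # TensorFlow 1.14 (graph mode)

# Input with a dynamic spatial shape: batch 1, unknown height/width, 1 channel
x = tf.placeholder(tf.float32, [1, None, None, 1], name='x')

# 2x2 convolution, stride 2, 'VALID' padding (zero weights; only the shapes matter)
layer = tf.nn.conv2d(x, tf.zeros((2, 2, 1, 1)), (1, 2, 2, 1), 'VALID')

output = tf.reduce_sum(layer, axis=[1, 2, 3], name='y')

with tf.Session() as sess:
    # Serialize the graph to leak.pb in binary form
    g = tf.get_default_graph().as_graph_def()
    tf.train.write_graph(g, ".", "leak.pb", as_text=False)
```

- Convert to ONNX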

```shell
python -m tf2onnx.convert --input leak.pb --output leak.onnx --inputs x:0 --outputs y:0 --opset 11
```

- Build engine

```shell
trtexec --onnx=leak.onnx --saveEngine=leak.trt --explicitBatch --minShapes='x:0':1x2x2x1 --optShapes='x:0':1x128x128x1 --maxShapes='x:0':1x256x256x1
```

- Run inference

```cpp
#include <algorithm>
#include <array>
#include <fstream>
#include <iostream>
#include <iterator>
#include <random>
#include <vector>

#include <cuda_runtime_api.h>
#include <NvInfer.h>
#include <samples/common/buffers.h>

// Minimal logger that prints every TensorRT message
class MyLogger : public nvinfer1::ILogger
{
public:
    void log(Severity severity, char const * msg) override
    {
        std::cout << msg << std::endl;
    }
} logger;

int main()
{
    // Load the serialized engine built by trtexec
    std::ifstream input("leak.trt", std::ios::binary);
    std::vector<unsigned char> model(std::istreambuf_iterator<char>(input), {});

    auto runtime = nvinfer1::createInferRuntime(logger);
    auto engine = runtime->deserializeCudaEngine(model.data(), model.size());
    auto context = engine->createExecutionContext();

    auto x_idx = engine->getBindingIndex("x:0");
    auto y_idx = engine->getBindingIndex("y:0");

    samplesCommon::ManagedBuffer x{ nvinfer1::DataType::kFLOAT, nvinfer1::DataType::kFLOAT };
    samplesCommon::HostBuffer y_host(1, nvinfer1::DataType::kFLOAT);
    samplesCommon::DeviceBuffer y_device(1, nvinfer1::DataType::kFLOAT);

    // Random even spatial sizes in [2, 60]
    std::random_device rd;
    std::mt19937 gen(rd());
    std::uniform_int_distribution<> dis(1, 30);

    std::array<void *, 2> buffer;

    while (true)
    {
        // A new input shape on every iteration
        auto x_shape = nvinfer1::Dims4(1, dis(gen) * 2, dis(gen) * 2, 1);

        x.hostBuffer.resize(x_shape);
        std::fill_n(static_cast<float*>(x.hostBuffer.data()), samplesCommon::volume(x_shape), 0.f);
        x.deviceBuffer.resize(x_shape);
        cudaMemcpy(x.deviceBuffer.data(), x.hostBuffer.data(), x.hostBuffer.nbBytes(), cudaMemcpyHostToDevice);

        context->setBindingDimensions(x_idx, x_shape);

        buffer[x_idx] = x.deviceBuffer.data();
        buffer[y_idx] = y_device.data();

        context->executeV2(buffer.data());
    }

    return 0;
}
```

This results in a slow but steady increase of GPU memory.
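One way to put a number on the "slow but steady increase" is to record the device memory in use every N iterations of the inference loop (for example `total - free` from the CUDA runtime call `cudaMemGetInfo(&free, &total)`, or the per-process usage shown by `nvidia-smi`) and look at the trend of those samples. The sketch below covers only the trend check, so it runs without a GPU; `growth_stats`, `looks_like_leak`, `GrowthStats` and the sample values are hypothetical and not part of TensorRT or the samples code.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class GrowthStats:
    """Summary of a series of 'used GPU memory' samples (hypothetical helper)."""
    net_growth_bytes: int  # last sample minus first sample
    increases: int         # number of sample-to-sample increases
    decreases: int         # number of sample-to-sample decreases


def growth_stats(used_bytes: Sequence[int]) -> GrowthStats:
    """Count how often memory usage went up or down between consecutive samples."""
    if len(used_bytes) < 2:
        return GrowthStats(0, 0, 0)
    pairs = list(zip(used_bytes, used_bytes[1:]))
    increases = sum(1 for a, b in pairs if b > a)
    decreases = sum(1 for a, b in pairs if b < a)
    return GrowthStats(used_bytes[-1] - used_bytes[0], increases, decreases)


def looks_like_leak(stats: GrowthStats) -> bool:
    """Heuristic only: usage grew overall and never went back down."""
    return stats.net_growth_bytes > 0 and stats.decreases == 0


# Illustrative (made-up) samples in bytes, taken every N iterations
MiB = 2 ** 20
stats = growth_stats([100 * MiB, 102 * MiB, 102 * MiB, 105 * MiB, 108 * MiB])
print(stats, looks_like_leak(stats))
```

The heuristic is deliberately crude: a caching allocator can make usage plateau or step up before settling, so a long run with many samples says more than a short one.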

Note that the leak no longer occurs:

- if the convolution padding is changed to 'SAME', or
- if the stride is changed to (1, 1, 1, 1).

For these reasons, this issue may be different from #351, which has been fixed: #351 was demonstrated on a non-strided, padded convolution.
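For reference, the output size of the convolution in the reproduction can be worked out per spatial dimension from TensorFlow's 'VALID' / 'SAME' rules (dilation 1 assumed). This is a sketch for reasoning about shapes only; `conv_out_size` is a hypothetical helper, not a TensorFlow or TensorRT API.

```python
from typing import Tuple


def conv_out_size(size: int, kernel: int, stride: int, padding: str) -> Tuple[int, int, int]:
    """Return (output size, pad before, pad after) for one spatial dimension,
    following TensorFlow's 'VALID' / 'SAME' conventions with dilation 1."""
    if padding == "VALID":
        if size < kernel:
            raise ValueError("input smaller than kernel")
        return (size - kernel) // stride + 1, 0, 0
    if padding == "SAME":
        out = -(-size // stride)  # ceil(size / stride) in integer arithmetic
        total_pad = max((out - 1) * stride + kernel - size, 0)
        # TensorFlow puts the extra padding element (if any) at the end
        return out, total_pad // 2, total_pad - total_pad // 2
    raise ValueError(f"unknown padding {padding!r}")


# 2x2 kernel, stride 2, as in the model above
print(conv_out_size(5, 2, 2, "VALID"), conv_out_size(5, 2, 2, "SAME"))
```

With the 2x2 kernel and stride 2 used here, and the even input sizes (2 to 60) generated by the C++ loop, both 'VALID' and 'SAME' give an output of `H/2` with zero padding. At least for these shapes, the leak/no-leak difference therefore does not seem to come from different tensor sizes.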