-

Notifications

You must be signed in to change notification settings - Fork 9.2k

YARN-11448 [Federation] Stateless Router Secret Manager #5443

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

YARN-11448 [Federation] Stateless Router Secret Manager #5443

Conversation

…448-router-stateless-secret-manager

|

@goiri @slfan1989 can you please help review this PR ? |

|

@krishan1390 Thank you very much for your contribution, I will take time to look at this pr. I took a quick look at your description.

Multiple Routers will share and store the Delegation token, there is no updated across all router instances.

MemeoryStateStore is only used for verification and should not be used for production. SQLServerFederationStateStore will not swallow exceptions, and the client cannot complete verification with the old token.

We only cache tokens in MemeoryStateStore, but MemeoryStateStore is not a distributed storage and can only be used for test verification. It is recommended to use ZKFederationStateStore Or SQLServerFederationStateStore.

getAllToken is only used for test verification. Sorry, I have been busy recently, I will add a design document, DB storage Delegation Token, I refer to the design of Hive storage Delegation Token, this is a stable capability. |

| public abstract | ||

| class AbstractDelegationTokenSecretManager<TokenIdent | ||

| extends AbstractDelegationTokenIdentifier> | ||

| public abstract |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

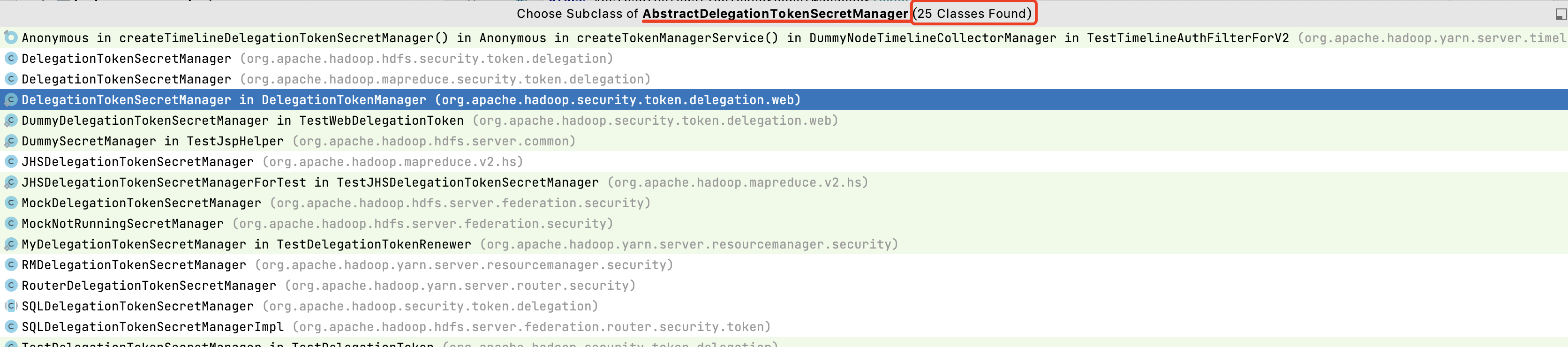

In general, this is a fairly fundamental class in Hadoop in general.

I would propose to do a separate JIRA to clean this class up.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't think any of these changes will affect any other functionality in any sub class - I verified the same .

And the changes are either very generic like encapsulation, exception handling, concurrency handling, etc - let me know if this still warrants a seperate JIRA.

|

💔 -1 overall

This message was automatically generated. |

| int newCurrentId; | ||

| synchronized (this) { | ||

| newCurrentId = incrementCurrentKeyId(); | ||

| newCurrentId = generateNewKeyId(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why should we change the method name?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

the actual requirement is to generate new keys and not to increment keys - this greatly simplifies implementation for various DB systems

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

We use an incremental method to generate new keys. For the database, we use for...update to ensure the increment. For zk, we use the global generator(SharedCount).

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes but there is no requirement to only increment keys 1 by 1. we only require to generate new unique keys. hence the name change to clearly reflect that requirement.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yes but there is no requirement to only increment keys 1 by 1. we only require to generate new unique keys. hence the name change to clearly reflect that requirement.

The performance of the database (Mysql) should be better, increase one by one, the performance should be ok.

ZK We are applying for multiple ids at one time.

Users can configure the parameter zk-dt-secret-manager.token.seqnum.batch.size to apply for multiple sequenceNum at a time.

ZookeeperFederationStateStore#incrSharedCount

private int incrSharedCount(SharedCount sharedCount, int batchSize)

throws Exception {

while (true) {

// Loop until we successfully increment the counter

VersionedValue<Integer> versionedValue = sharedCount.getVersionedValue();

if (sharedCount.trySetCount(versionedValue, versionedValue.getValue() + batchSize)) {

return versionedValue.getValue();

}

}

}

|

|

||

| @Override | ||

| public synchronized byte[] retrievePassword(TokenIdent identifier) | ||

| public byte[] retrievePassword(TokenIdent identifier) |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Why remove synchronized?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

it is not required because currentTokens is a concurrenthashmap so satisfies the happens before memory visibility requirements for this method.

|

Thanks for your feedback @slfan1989 . Please find my response below

That is not the actual behaviour currently. Each router instance has its own set of master keys (allKeys & currentKey - these are setup on service startup through startThreads() & updated in rollMasterKey()). Even though they are stored in database, master key isn't looked up from database but just returned from the in memory variables (allKeys & currentKey). So a router instance can't renew tokens generated from another router instance. And even delegation tokens are not consistently updated across router instances. If a delegation token is present in currentTokens variable in multiple router instances but updated in one router instance (on token renewal), the other router instances will use their own in memory variable currentTokens rather than look up the database and thus can say the token is expired.

Yes. By in memory variables I meant the class instance variables like currentTokens, currentKey, allKeys, etc. Even though SQLFederationStateStore throws an exception, all the current methods in RouterDelegationTokenSecretManager catches these exceptions and either returns or terminates the app rather than throwing it back & handling appropriately.

Yes by in memory variables I meant the class instance variables like currentTokens, currentKey, allKeys. Currently, it doesn't look at all variables in the database.

Yes currently thats the case, but since its a public API its an incorrect design to return the in memory instance variables in case of router - since anyone can rely on this.

No problem. I will provide the test cases to correctly show these issues better My main thought at a high level, is that by design a stateless router should make no assumptions of underlying state management (AbstractDelegationTokenSecretManager functionalities are heavily relying on it being a single source of truth rather than being distributed) |

For the client, the RM is blocked. For the client, the Router is the RM. When implementing this function, we should be close to the realization of the RM. I think Router's implementation is reasonable. RMDelegationTokenSecretManager#storeNewMasterKey RouterDelegationTokenSecretManager#storeNewMasterKey |

RouterDelegationTokenSecretManager#getTokenInfo AbstractDelegationTokenSecretManager#removeExpiredToken

|

In the previous comment, I have already explained that we get data from shared storage, so this described situation should not happen. Example: We have 3 routers, namely

|

|

💔 -1 overall

This message was automatically generated. |

…factoring of Abstract secret manager

| private long tokenMaxLifetime; | ||

| private long tokenRemoverScanInterval; | ||

| private long tokenRenewInterval; | ||

| private volatile DelegationKey currentKey; |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have set it to volatile to make sure currentKey changes are visible across threads

|

|

||

| Set<DelegationKey> rmDTMasterKeyState = routerRMSecretManagerState.getMasterKeyState(); | ||

| if (rmDTMasterKeyState.contains(delegationKey)) { | ||

| Map<Integer, DelegationKey> rmDTMasterKeyState = routerRMSecretManagerState.getMasterKeyState(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have replaced set with a map as we need to lookup objects from their unique key ids, rather than the object itself

| RMDelegationTokenIdentifier tokenIdentifier = | ||

| (RMDelegationTokenIdentifier) storeToken.getTokenIdentifier(); | ||

| Map<RMDelegationTokenIdentifier, RouterStoreToken> rmDTState = | ||

| Map<Integer, RouterStoreToken> rmDTState = |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I have replaced the key for the map with the id (sequence number) of the delegation token rather than the token identifier object itself - because token identifier objects can be generated repeatedly (as part of different requests) for the same sequence number

| * | ||

| * @return CurrentKeyId. | ||

| */ | ||

| int getCurrentKeyId(); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

have removed these methods because they aren't required for a stateless secret manager. they are only required to support recovery in RM / NN like systems with only 1 node generating tokens .

with stateless secret manager, there is no explicit recovery mechanism because by design the secret manager stores all data in database and thus these methods aren't required.

| @@ -1,201 +0,0 @@ | |||

| /** | |||

| * Licensed to the Apache Software Foundation (ASF) under one | |||

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

have moved this class to router.security package rather than router.secure package & refactored tests to test the public methods of router secret manager rather than testing it internally.

these test cases, validate the database storing / retrieval logic internally and this makes the test cases independent of the database implementations..

|

@slfan1989 @goiri let me try to summarise my changes better

This also makes the design more extendible for future use cases where Delegation token object contains more mutable data. I have added a bunch of test cases to showcase these edge cases better. It will be useful to comment on these individual test cases if any concerns.

|

|

💔 -1 overall

This message was automatically generated. |

|

🎊 +1 overall

This message was automatically generated. |

|

@krishan1390 Thank you very much for your contribution, I will take some time to read the code. |

| //Log must be invoked outside the lock on 'this' | ||

| logUpdateMasterKey(newKey); | ||

| synchronized (this) { | ||

| storeDelegationKey(newKey); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

While I understand that we shouldn't update currentKey when some other thread is using it, but does the storeDelegationKey(newKey) also need to be inside the synchronized block?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

actually even updating currentKey needn't be in synchronized block because currentKey is volatile so the update will be visible.

I had originally removed synchronized here but added it back to avoid the change in this PR and to make it seperately.

wanted to keep the PR focused specifically on whats required for reliable stateless setup - in this particular case, trying to store in DB first before updating currentKey

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Won't updating currentKey need to be synchronized? Because if some other thread is using this in createPassword() and in between lines identifier.setMasterKeyId(currentKey.getKeyId()) and createPassword(identifier.getBytes(), currentKey.getKey()); the currentKey changes, won't that result in inconsistencies?

| * Current master key will be stored in memory on each instance and will be used to generate new tokens. | ||

| * Master key will be looked up from the state store for Validation / renewal, etc of tokens. | ||

| * | ||

| * 2) Token Expiry - It doesn't take care of token removal on expiry. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Can we also mention about master key expiry?

| public void storeNewToken(RMDelegationTokenIdentifier identifier, | ||

| long renewDate) throws IOException { | ||

| try { | ||

| federationFacade.storeNewToken(identifier, renewDate); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

In the SQLFederationStateStore I see we are making 2 queries, one to add the new token, 2nd again to get the token. Is the 2nd query needed? (Not able to add review comment there so added here)

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yea thats not required. was planning to raise a seperate PR for changes in SQLFederationStateStore - will keep this PR focused on changes required for stateless delegation token management

| public void storeNewMasterKey(DelegationKey newKey) { | ||

| protected void storeNewMasterKey(DelegationKey newKey) throws IOException { | ||

| try { | ||

| federationFacade.storeNewMasterKey(newKey); |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is the federationFacade being initialized with actual conf (that points to Sql state store let's say). Because FederationStateStoreFacade.getInstance(); will always get an instance that is initialized with default conf.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

yea thats fair. let me correct it

| * @throws IOException raised on errors performing I/O. | ||

| */ | ||

| protected DelegationTokenInformation getTokenInfo(TokenIdent ident) { | ||

| protected DelegationTokenInformation getTokenInfo(TokenIdent ident) throws IOException { |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

This method is not just a KV lookup but actually compares all attributes of TokenIdent (maxDate, masterKeyId, owner, etc) - this is important because if we provide just a KV lookup, any user can create a TokenIdent object with a random key (sequence no) & get authenticated (RM just checks for presence of token for authentication).

Corresponding change needs to be done in stateless secret manager

|

@slfan1989 @goiri do you have any concerns on the PR ? we plan to merge it soon unless any concerns. |

|

We're closing this stale PR because it has been open for 100 days with no activity. This isn't a judgement on the merit of the PR in any way. It's just a way of keeping the PR queue manageable. |

Description of PR

JIRA - https://issues.apache.org/jira/browse/YARN-11448

Currently router secret manager requires routers to be stateful & with clients using sticky sessions.

Otherwise, there are several issues mentioned below which lead to the delegation token functionality not working across router instances

Eg:

A more scalable & maintainable framework for Router would be to be design it as a stateless service. Given database KV lookups are in the order of < 10 ms, it doesn't add any latency overhead and makes router easier to maintain. Plus a stateless router setup, with no assumptions of stickiness makes the router framework more generic.

Additionally, some of the functionality around master key ids, delegation token sequence numbers is implemented as globally autoincrement ids which too isn't feasible across all datastores. The actual requirement is to generate unique keys for master key ids / delegation tokens which is a much more simpler & generic solution. Plus certain apis like get sequence no / set sequence no aren't applicable for router and we can avoid providing them to make things much more simpler.

This patch addresses these functional concerns while working within the interfaces of AbstractDelegationTokenSecretManager.

As a later patch, we can create better delegation token interfaces to support both stateful & stateless secret managers.

How was this patch tested?

Unit Tests - Currently implemented 1 test - testNewTokenVerification - adding more test cases