

Put error message as tag into failed trace. #4000


Previously, the error message was not put into failed traces as a tag. Having it is nice and useful for troubleshooting within the request context in modern UIs such as Jaeger or Zipkin. This also includes a minor change to the primitive action flow, which previously did not close its trace as failed.

Signed-off-by: Tzu-Chiao Yeh <su3g4284zo6y7@gmail.com>

Branch updated from b66eb43 to e3c3899.

Codecov Report

| | master | #4000 | +/- |
|---|---:|---:|---:|
| Coverage | 85.9% | 80.82% | -5.09% |
| Files | 146 | 146 | |
| Lines | 7095 | 7102 | +7 |
| Branches | 443 | 439 | -4 |
| Hits | 6095 | 5740 | -355 |
| Misses | 1000 | 1362 | +362 |

Continue to review full report at Codecov.


Surfacing the error state in the trace would be useful. @sandeep-paliwal Thoughts?


    postedFuture andThen {
      case Success(_) => transid.finished(this, startLoadbalancer)
      case Failure(e) => transid.failed(this, startLoadbalancer, e.getMessage, logLevel = ErrorLevel)


Should the error log level be dropped here? An activation failure doesn't always imply a system failure.


Thanks for the reminder; we can use the default warn level, or I can match on exception types to pick different levels. However, I think logging here is quite redundant, because we've already logged this in doInvoke.

Should this get changed as well?

https://github.com/apache/incubator-openwhisk/blob/0242a60aeb976d070abda76a8ac2aa7e3306e393/core/controller/src/main/scala/whisk/core/controller/Actions.scala#L292

BTW, OpenTracing doesn't provide a standardized warn tag; is that fine?


I think that using the standard warn level would be fine.

The snippet you provided depicts a system error, so the error log level there is fine IMO.

As long as it doesn't bother anyone else, I'm fine with keeping the error tag for tracing. My main point was to avoid flooding the logs with messages caused by users, since that could significantly hamper performance.


Ah yes, it's a system error, thanks!
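One option raised in this thread is to match on exception types, so that only system failures are logged at error level while user-caused activation failures stay at warn level. A minimal sketch of that idea, assuming hypothetical exception classes (`ActionError`, `LoadBalancerError`) and a hypothetical `Level` type standing in for the levels such as `ErrorLevel` that the real code passes:

```scala
// Hypothetical log levels; the real code passes levels such as ErrorLevel.
sealed trait Level
case object WarnLevel extends Level
case object ErrLevel extends Level

// Hypothetical exception types for a user-caused vs. a system failure.
final class ActionError(msg: String) extends Exception(msg)
final class LoadBalancerError(msg: String) extends Exception(msg)

object FailureLevels {
  // Default to warn so user-caused failures don't flood the error log;
  // escalate only exceptions that indicate a system problem.
  def levelFor(e: Throwable): Level = e match {
    case _: LoadBalancerError => ErrLevel
    case _                    => WarnLevel
  }
}
```

Because the fallback case is `WarnLevel`, any exception type not listed explicitly is treated as a non-system failure; the trade-off is that each new kind of system error has to be added to the match before it is escalated.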

        }
    } andThen {
      case Success(_) => transid.finished(this, startActivation)
      case Failure(e) => transid.failed(this, startActivation, e.getMessage, logLevel = ErrorLevel)


The same question regarding the log level...
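Both hunks close the trace from an `andThen` callback. The sketch below shows why that pattern is safe: `andThen` runs the side effect once the future completes and returns a future holding the same result, so callers still observe the original failure. `TraceRecorder` and `TraceCallbacks.traced` are hypothetical stand-ins, not OpenWhisk's transaction-id API.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.ExecutionContext.Implicits.global
import scala.concurrent.duration._
import scala.util.{Failure, Success, Try}

// Hypothetical stand-in for transid.finished / transid.failed; it only
// records the last outcome so the behavior can be checked.
final class TraceRecorder {
  @volatile var outcome: Option[String] = None
  def finished(marker: String): Unit = { outcome = Some(s"$marker finished") }
  def failed(marker: String, message: String): Unit = { outcome = Some(s"$marker failed: $message") }
}

object TraceCallbacks {
  // andThen applies the callback after `f` completes and returns a future
  // with the SAME result, so a failure still propagates to the caller.
  def traced[T](f: Future[T], recorder: TraceRecorder, marker: String): Future[T] =
    f andThen {
      case Success(_) => recorder.finished(marker)
      case Failure(e) => recorder.failed(marker, e.getMessage)
    }
}
```

Awaiting the future returned by `traced` is enough to know the trace callback has already run, because `andThen` completes its result only after applying the callback.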


@chetanmeh Agreed. Having error information in the trace for failed invocations will be very useful.
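As a rough illustration of what the PR's `setErrorTags` helper does, here is a self-contained sketch against a hypothetical `StubSpan` class rather than the real `io.opentracing.Span`. `error` is a standard span tag in the OpenTracing semantic conventions; `message` is the tag name this PR chooses (the conventions define `message` as a log field, not a tag).

```scala
import scala.collection.mutable

// Hypothetical minimal span exposing the two setTag overloads used here;
// the real io.opentracing.Span also has a Number overload.
final class StubSpan {
  val tags: mutable.Map[String, Any] = mutable.Map.empty
  def setTag(key: String, value: String): StubSpan = { tags(key) = value; this }
  def setTag(key: String, value: Boolean): StubSpan = { tags(key) = value; this }
}

object ErrorTagging {
  // Same shape as the PR's helper: mark the span as failed with the
  // standard boolean "error" tag and attach the failure text as a tag.
  def setErrorTags(span: StubSpan, message: => String): Unit = {
    span.setTag("error", true)
    span.setTag("message", message)
  }
}
```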


    private def setErrorTags(span: Span, message: => String): Unit = {
      span.setTag("error", true)
      span.setTag("message", message)


Tags are usually added to make it easy to search for a specific span. Since the key in this case is always going to be "error", do you think it's a more appropriate case for span.log()?


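Hi @sandeep-paliwal, thanks for the suggestion.

I think the big difference between a tag and a log is the timestamp: a log entry is recorded at a point in time within the span, while a tag applies to the whole span. As you mentioned, a tag makes it much easier to search for a specific span and makes it explicit what's going on.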

I've tried following the error-capturing guidance in the OpenTracing data model specification, but in Jaeger it isn't very useful for seeing what's really going on.

Some code snippet:

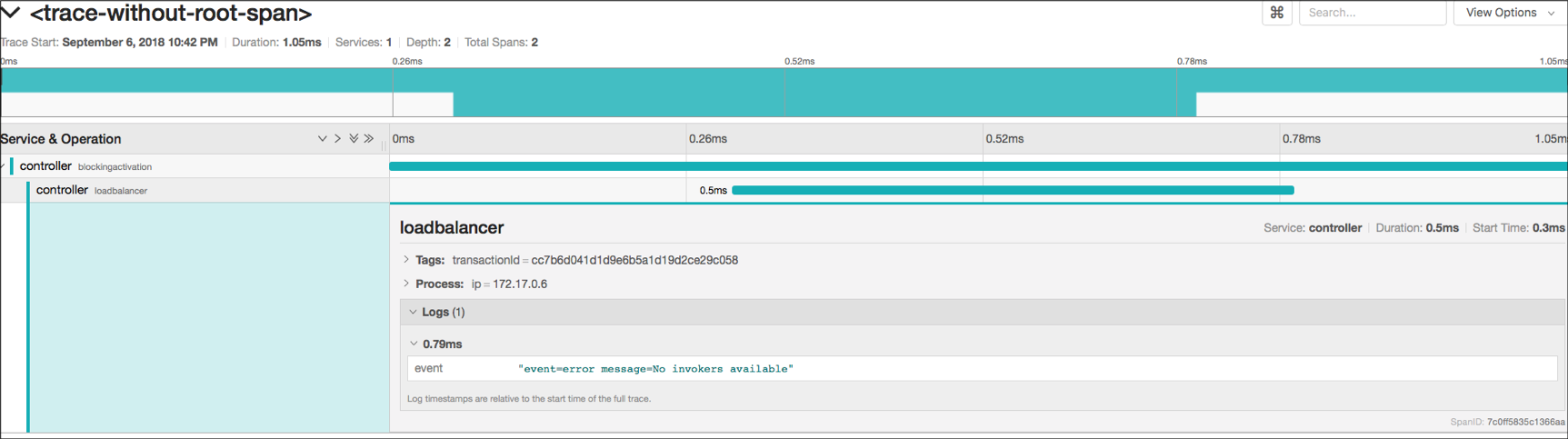

private def setErrorTags(span: Span, message: => String): Unit = {

span.log(Map("event" -> "error", "message" -> message).asJava)

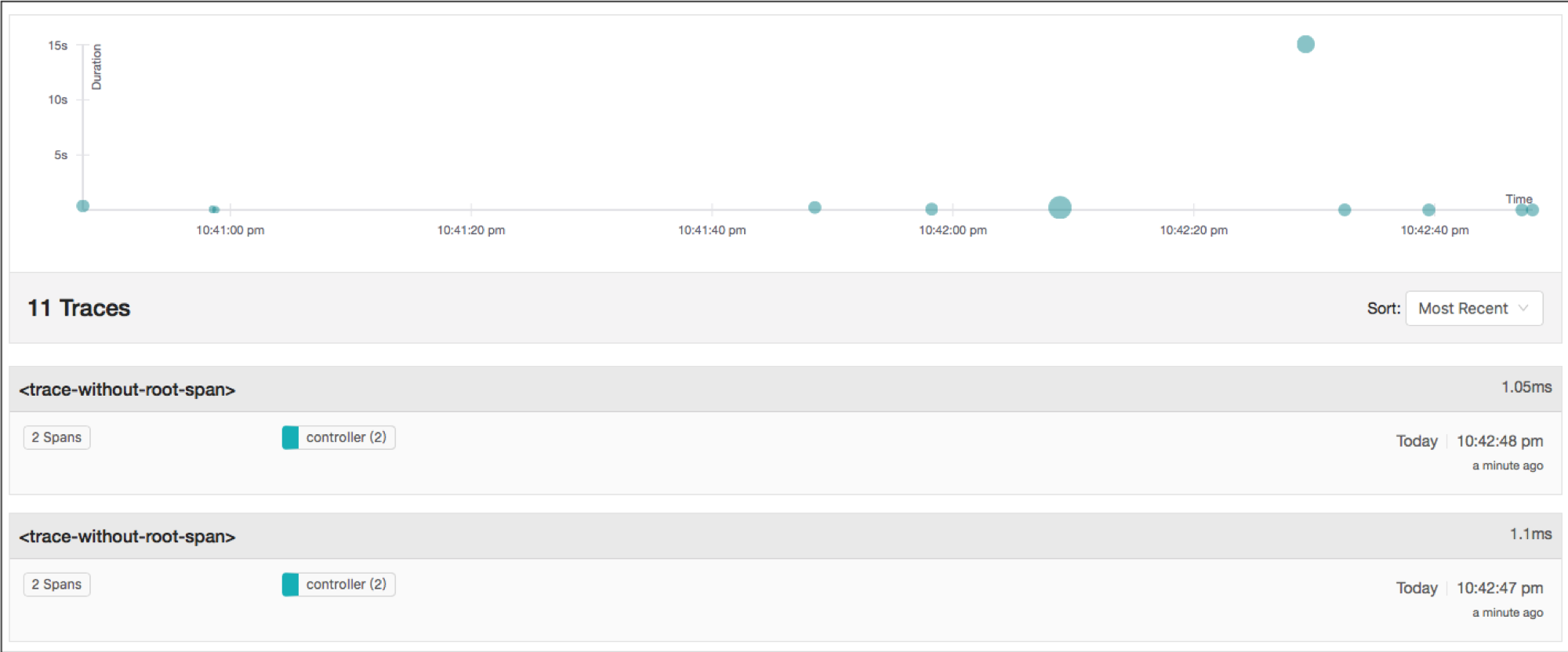

}Snapshot:

The error is not explicit.

Am I missing something? If not, an alternative is to still set error as a tag and attach the message as a log, so that we can drop the message tag field if necessary. WDYT?

i.e.

    import io.opentracing.Span
    import scala.collection.JavaConverters._

    private def setErrorTags(span: Span, message: => String): Unit = {
      span.setTag("error", true)
      span.log(Map("event" -> "error", "message" -> message).asJava)
    }
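The tag-plus-log proposal can be sketched with a hypothetical `RecordingSpan` that, like OpenTracing, keeps span-wide tags separate from timestamped log records. The boolean `error` tag marks the whole span as failed, while the message lands in a log event stamped with the time it was recorded. The injectable clock is an assumption that keeps the example deterministic.

```scala
import scala.collection.mutable

// Hypothetical span that keeps span-wide tags apart from timestamped log
// records, mirroring the setTag / log split in OpenTracing.
final case class LogRecord(timestampMicros: Long, fields: Map[String, String])

final class RecordingSpan(clockMicros: () => Long) {
  val tags: mutable.Map[String, Any] = mutable.Map.empty
  val logs: mutable.ArrayBuffer[LogRecord] = mutable.ArrayBuffer.empty
  def setTag(key: String, value: Boolean): Unit = { tags(key) = value }
  def log(fields: Map[String, String]): Unit = { logs += LogRecord(clockMicros(), fields) }
}

object TagPlusLog {
  // Searchable boolean "error" tag on the span; the message itself goes into
  // a timestamped log event using the "event" and "message" fields.
  def setErrorTags(span: RecordingSpan, message: => String): Unit = {
    span.setTag("error", true)
    span.log(Map("event" -> "error", "message" -> message))
  }
}
```

In this model the message is no longer a tag, which is exactly what makes it harder to search for: finding it means scanning every span's log records rather than reading one tag.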


Yeah, I see that just adding a log to the trace is not that helpful in the UI. I guess using a tag is the better option then. Is there a use case for searching all failed invocations with a specific error? In that case, having the error message as a tag would also be more useful than log().


Yeah, it might be useful for someone who knows the internal error messages. One drawback is polluting the tracing system if it's shared with systems other than OpenWhisk, but spans can be filtered by module. I think we can keep it as a tag for advanced usage, as you mentioned.
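To illustrate the search use case, the sketch below filters finished spans by a `component` tag (a standard OpenTracing tag, used here to stand in for the module filter), the `error` tag, and a substring of the `message` tag. `FinishedSpan` and `SpanSearch` are hypothetical; real backends such as Jaeger and Zipkin expose tag search through their own query interfaces.

```scala
// Hypothetical finished span as a tracing backend might store it,
// with all tag values rendered as strings.
final case class FinishedSpan(operation: String, tags: Map[String, String])

object SpanSearch {
  // Failed spans from one component whose "message" tag contains `text`.
  // Tags sit directly on each span, so this is a per-span lookup.
  def failedWith(spans: Seq[FinishedSpan], component: String, text: String): Seq[FinishedSpan] =
    spans.filter { s =>
      s.tags.get("component").contains(component) &&
      s.tags.get("error").contains("true") &&
      s.tags.get("message").exists(_.contains(text))
    }
}
```

Filtering on `component` first is what keeps OpenWhisk's error messages from mixing with failures reported by other services that share the same tracing backend.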

Signed-off-by: Tzu-Chiao Yeh <su3g4284zo6y7@gmail.com>

Branch updated from f022d8c to 53264d5.

chetanmeh left a comment


LGTM


I'm not sure everyone is on board with the tracing system, since its goals partly overlap with the transid we already have in the logs. IMHO, at the very least, traces shouldn't be confusing once a user decides to adopt tracing: whether or not an accurate error message exists, we should be able to tell which span failed.
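Relatedly, `Throwable.getMessage` can return `null` (for example for `new RuntimeException()`), so passing `e.getMessage` straight into a tag may record nothing useful. A minimal sketch of a hypothetical `describe` helper that falls back to the exception's class name, so a failed span still says what kind of failure occurred; this is a suggestion, not what the PR does.

```scala
object ErrorMessages {
  // Throwable.getMessage may be null or empty; fall back to the exception's
  // class name so a failed span still records what kind of failure occurred.
  def describe(e: Throwable): String =
    Option(e.getMessage).filter(_.nonEmpty).getOrElse(e.getClass.getName)
}
```

With such a helper, the failure branch would pass `ErrorMessages.describe(e)` where it currently passes `e.getMessage`; the `error` tag is set either way, so the span is still marked as failed.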

The tags follow the OpenTracing semantic conventions:

https://github.com/opentracing/specification/blob/master/semantic_conventions.md

Snapshots in Jaeger:

Signed-off-by: Tzu-Chiao Yeh su3g4284zo6y7@gmail.com
