-

Notifications

You must be signed in to change notification settings - Fork 28.9k

[SPARK-27468][Core][WEBUI] BlockUpdate replication event shouldn't overwrite storage level description in the UI #24398

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

|

Test build #104692 has finished for PR 24398 at commit

|

|

Jenkins, retest this please |

|

Test build #104694 has finished for PR 24398 at commit

|

| var storageLevel: String = weakIntern(info.storageLevel.description) | ||

| var memoryUsed = 0L | ||

| var diskUsed = 0L | ||

| var storageInfo: StorageLevel = new StorageLevel() |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I don't know this part well, but is it redundant with storageLevel above?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

The above was just a string representation of storage level. from StorageInfo we can get individual parameters including replication.

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

I see, but should we not just replace the field above with this richer object? or should this not use info.storageLevel as the initial value? maybe not, just jumped out at me as a question

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Yes. we can initialize storageInfo = info.storageLevel. But I'm not sure we can get rid of storageLevel, as there is a public method which sets the value. updated the code.

|

|

||

| if (updatedStorageLevel.isDefined) { | ||

| rdd.setStorageLevel(updatedStorageLevel.get) | ||

| // Replicated block update events will have `storageLevel.replication=1`. |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Is this a bug itself?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Needs more check, including impacts. Currently the fix is from UI side.

core/src/main/scala/org/apache/spark/status/AppStatusListener.scala

Outdated

Show resolved

Hide resolved

|

Test build #104708 has finished for PR 24398 at commit

|

|

Retest this please. |

|

Test build #104727 has finished for PR 24398 at commit

|

| // Default value of `storageInfo.replication = 1` and hence if | ||

| // `storeLevel.replication = 2`, the replicated events won't overwrite in the store. | ||

| val storageInfo = rdd.storageInfo | ||

| val isReplicatedBlockUpdateEvent = storageLevel.replication < storageInfo.replication && |

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

check if (storageLevel.isValid) before accessing storageLevel.*?

There was a problem hiding this comment.

Choose a reason for hiding this comment

The reason will be displayed to describe this comment to others. Learn more.

Hi, This line checks the storageLevel is valid or not.

spark/core/src/main/scala/org/apache/spark/status/AppStatusListener.scala

Lines 916 to 920 in d9bcacf

| val updatedStorageLevel = if (storageLevel.isValid) { | |

| Some(storageLevel.description) | |

| } else { | |

| None | |

| } |

updatedStorageLevel will be None. So, it won't come to this line (L-928).Thanks

|

After reading more of the storage code lately, I wonder if this code shouldn't just report the original storage level always. i.g., |

|

Thanks @vanzin . I have updated the code. |

|

Test build #105198 has finished for PR 24398 at commit

|

|

Retest this please |

|

Test build #105205 has finished for PR 24398 at commit

|

|

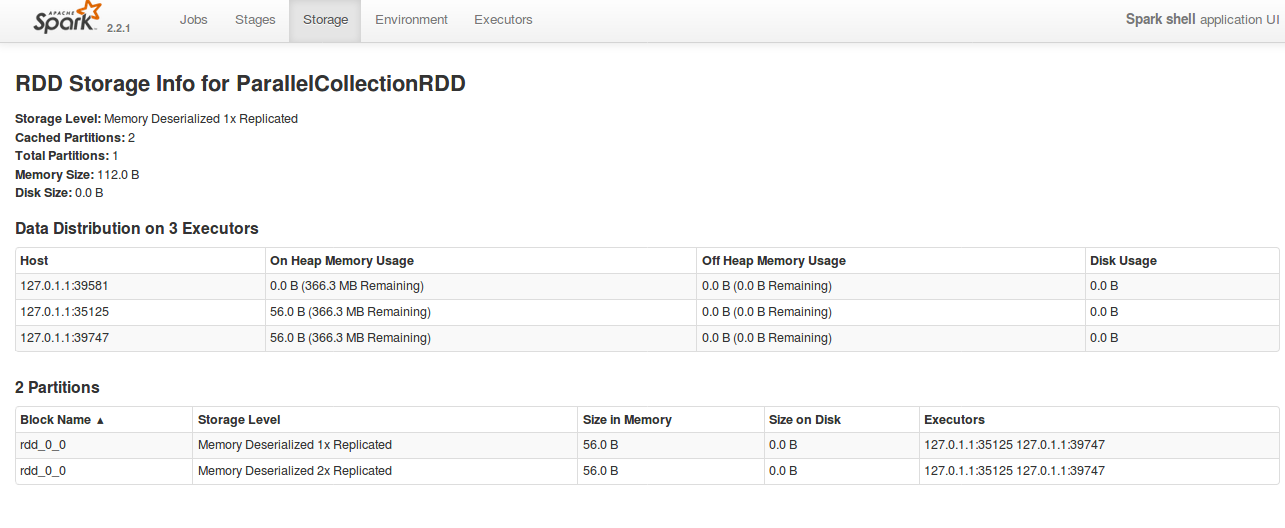

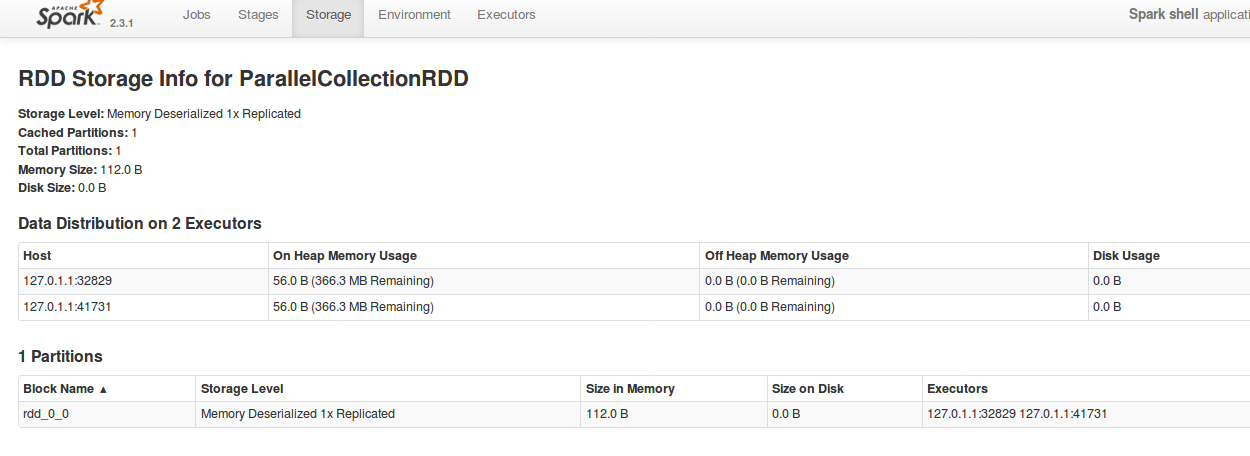

So now the RDD storage level is what the user requested, which is fine. But what about the per-partition storage level? With your change it's just the same as the RDD level. Right thing to do would be to look at the behavior in Spark 2.2 and see how per-partition storage levels worked (unless someone remembers without looking at the code). You may have to propagate the block update's storage level to the partition. |

|

Yes, as I thought, in 2.2 the partition storage level comes from the block update: |

|

@vanzin Yes. The behavior seems different compared to the 2.2 branch. I will update the PR. |

|

I created #25779 with a more complete fix for this, so closing this one. |

What changes were proposed in this pull request?

Test steps to reproduce this:

Events generated are shown like below

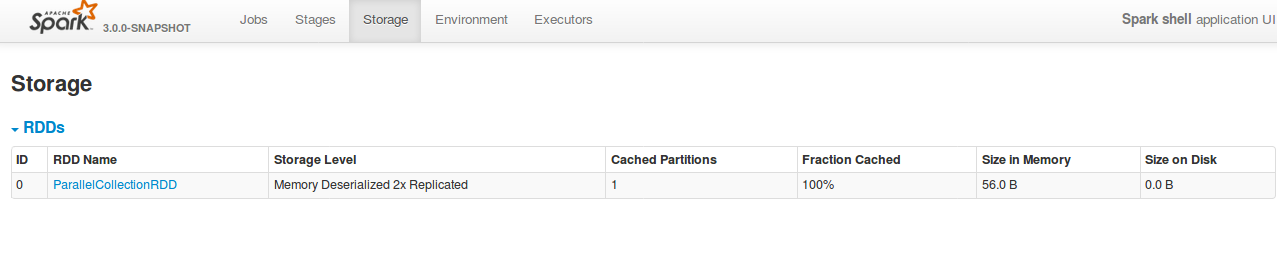

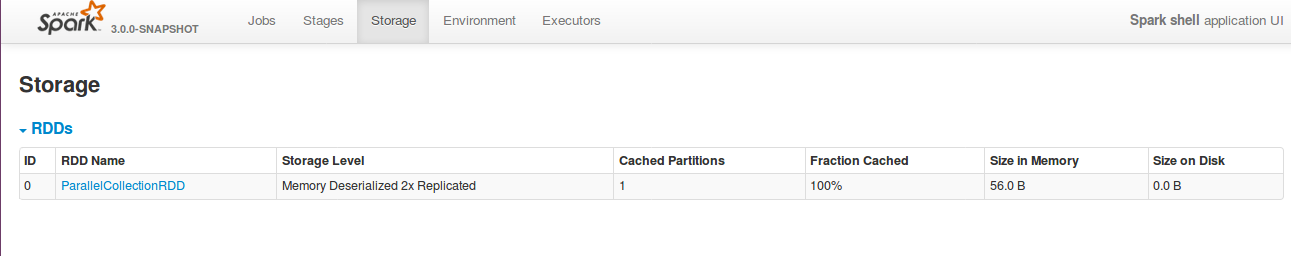

But in the UI, in the storage tab it displays in the description like,

"Memory Deserialized 1x Replicated", even though we have given replication as 2.

The root cause is that, the replication block update events will have replication factor 1. Hence in the AppStatusListener class, we overwrite whatever event comes later. If the replication event comes later, then we update replication factor as 1.

In the PR, I am fixing from the AppStatusListener class side, as we need to detect if the event is replication or not. Else we need to update the rdd store.

How was this patch tested?

Added UT and Manually tested.

Before patch:

After patch: