| OS: | Windows |

| Type: | A Windows PowerShell script |

| Language: | Windows PowerShell |

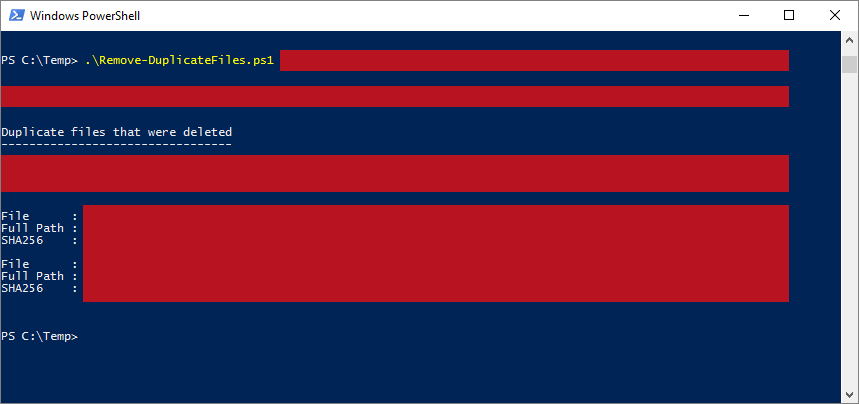

| Description: | Remove-DuplicateFiles searches for duplicate files in a directory specified with the -Path parameter. On machines that have PowerShell version 4 or later installed, the files in a folder are analysed with the built-in Get-FileHash cmdlet. On machines running PowerShell version 2 or 3, .NET Framework commands (and a function called Check-FileHash, which is based on Lee Holmes' Get-FileHash script in "Windows PowerShell Cookbook (O'Reilly)") are invoked instead to determine whether any duplicate files exist in a particular folder.

Multiple paths may be passed to the -Path parameter (separated with commas), and sub-directories may be included in the list of folders to process by adding the -Recurse parameter to the launching command. By default, Remove-DuplicateFiles removes files on a per-directory basis: each folder is treated as its own separate entity, and duplicate files are searched for and removed within one folder at a time. For example, if a file exists twice in Folder A and once in Folder B, by default only the second instance of the file in Folder A is deleted. To also delete the duplicate file in Folder B, add the -Global parameter to the launching command; Remove-DuplicateFiles then analyses all the items in every found directory in one go and compares every found file with every other.

If deletions are made, a log file (deleted_files.txt by default) is created in $env:temp, which points to the current temporary file location set in the system (for more information about $env:temp, please see the Notes section). The filename of the log file can be set with the -FileName parameter (a filename with a .txt ending is recommended), and the default output destination folder may be changed with the -Output parameter. When writing the log file, Remove-DuplicateFiles tries to preserve any pre-existing content rather than overwrite the specified file, so if the -FileName parameter points to an existing file, the new log data is appended to the end of that file.

To invoke a simulation run, in which no files are deleted under any circumstances, add the -WhatIf parameter to the launching command. If the -Audio parameter is used, an audible beep is emitted after Remove-DuplicateFiles has deleted one or more files. Please note that if any parameter value (after the parameter name itself) includes space characters, the value should be enclosed in quotation marks (single or double) so that PowerShell can interpret the command correctly. |
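The difference between the default per-directory comparison and the -Global comparison can be illustrated with a minimal sketch. This is not the script's actual code: the function names `Get-HashString` and `Find-DuplicateCandidate` are hypothetical, SHA256 is an assumed algorithm, and keeping the first file by sorted name is an assumed ordering. The sketch only lists candidate duplicates and deletes nothing.

```powershell
# Hypothetical helper: returns an uppercase hex SHA256 string for one file.
function Get-HashString {
    param([string]$FilePath)
    if (Get-Command Get-FileHash -ErrorAction SilentlyContinue) {
        # PowerShell 4 or later: use the built-in cmdlet.
        return (Get-FileHash -LiteralPath $FilePath -Algorithm SHA256).Hash
    }
    # PowerShell 2 or 3: fall back to .NET Framework classes.
    $sha = [System.Security.Cryptography.SHA256]::Create()
    $stream = [System.IO.File]::OpenRead($FilePath)
    try {
        return [System.BitConverter]::ToString($sha.ComputeHash($stream)) -replace '-', ''
    } finally {
        $stream.Dispose()
        $sha.Clear()
    }
}

# Hypothetical function: lists files that would count as duplicates.
# Without -Global, files are compared only within their own folder;
# with -Global, every file is compared with every other file.
function Find-DuplicateCandidate {
    param(
        [string[]]$Path,
        [switch]$Global
    )
    $files = foreach ($dir in $Path) {
        Get-ChildItem -LiteralPath $dir |
            Where-Object { -not $_.PSIsContainer } |
            ForEach-Object {
                New-Object PSObject -Property @{
                    FullName = $_.FullName
                    Folder   = $_.DirectoryName
                    Hash     = Get-HashString $_.FullName
                }
            }
    }
    # Group by hash alone (global) or by folder and hash (per directory).
    $keys = if ($Global) { 'Hash' } else { 'Folder', 'Hash' }
    $files | Group-Object -Property $keys |
        Where-Object { $_.Count -gt 1 } |
        ForEach-Object {
            # Assumption: keep the first file by name, report the rest.
            $_.Group | Sort-Object FullName | Select-Object -Skip 1
        }
}
```

With the Folder A and Folder B example above, calling `Find-DuplicateCandidate -Path $folderA, $folderB` would report only the second copy in Folder A, while adding `-Global` would also report the copy in Folder B.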


| Homepage: | https://github.com/auberginehill/remove-duplicate-files

Short URL: http://tinyurl.com/jv4jlbe |


| Version: | 1.2 |

| Sources: |

|


| Downloads: | For instance, Remove-DuplicateFiles.ps1, or everything as a .zip file. |


| 📖 | To open this code in Windows PowerShell, for instance: |
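One possible way to launch the script is sketched below, using only parameters named in the description (-Path, -Recurse, -WhatIf). The script location and the folder paths are hypothetical placeholders, and the parameters are passed with splatting so the call stays readable. Because -WhatIf is included, this would be a simulation run.

```powershell
# Hypothetical location of the downloaded script; adjust as needed.
$script = Join-Path (Join-Path $HOME 'Downloads') 'Remove-DuplicateFiles.ps1'

# Hypothetical folders to process. Values containing spaces are quoted.
$params = @{
    Path    = 'C:\Users\Example\My Photos', 'D:\Backup'
    Recurse = $true   # include sub-directories
    WhatIf  = $true   # simulation run: no files are deleted
}

# Roughly equivalent to:
#   .\Remove-DuplicateFiles.ps1 -Path 'C:\Users\Example\My Photos', 'D:\Backup' -Recurse -WhatIf
if (Test-Path -LiteralPath $script) {
    & $script @params
} else {
    Write-Warning "Script not found at $script"
}
```

Removing the `WhatIf` entry from the hashtable would turn the simulation into a real run, so it is worth reviewing the simulated output first.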


Find a bug? Have a feature request? Here is how you can contribute to this project:

| Bugs: | Submit bugs and help us verify fixes. |

| Feature Requests: | Feature requests can be submitted by creating an issue. |

| Edit Source Files: | Submit pull requests for bug fixes and features and discuss existing proposals. |