Closed

Dear Authors,

First and foremost, thank you for your exceptional work on this project!

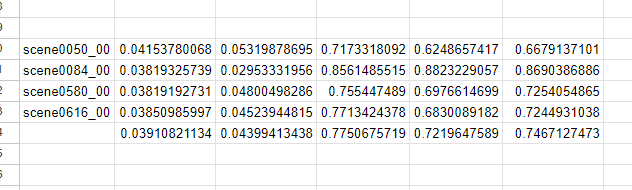

I have a few questions regarding the evaluation on ScanNet, as I've noticed a minor difference between the results provided in your paper and those I obtained using your pre-trained model.

Interestingly, the F-score I computed is higher than the 0.733 value provided in your paper. However, the other metrics, such as Accuracy and Precision, are slightly worse than the ones you reported.
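To make the discrepancy concrete, here is a minimal sketch of how I understand the F-score, assuming it is the usual harmonic mean of Precision and Recall. I have not confirmed that this matches what evaluate.py does, the helper name `f_score` is my own, and the numbers are purely illustrative, not values from the paper or from my run.

```python
def f_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (assumed definition)."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both are zero
    return 2 * precision * recall / (precision + recall)


# Illustrative numbers only (not from the paper or from my evaluation).
baseline = f_score(0.70, 0.60)  # higher precision, lower recall
shifted = f_score(0.68, 0.66)   # slightly lower precision, higher recall

# The F-score can rise even though precision dropped, because recall rose.
print(shifted > baseline)
```

If this definition applies, a higher F-score together with a lower Precision would mean that Recall is higher in my run than in the reported results.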

Could you let me know whether I might have made an error in the process? I used your pre-trained model and ran the `evaluate.py` script.

Thank you for your time and assistance. I'm looking forward to your response.
