Description

Describe the bug

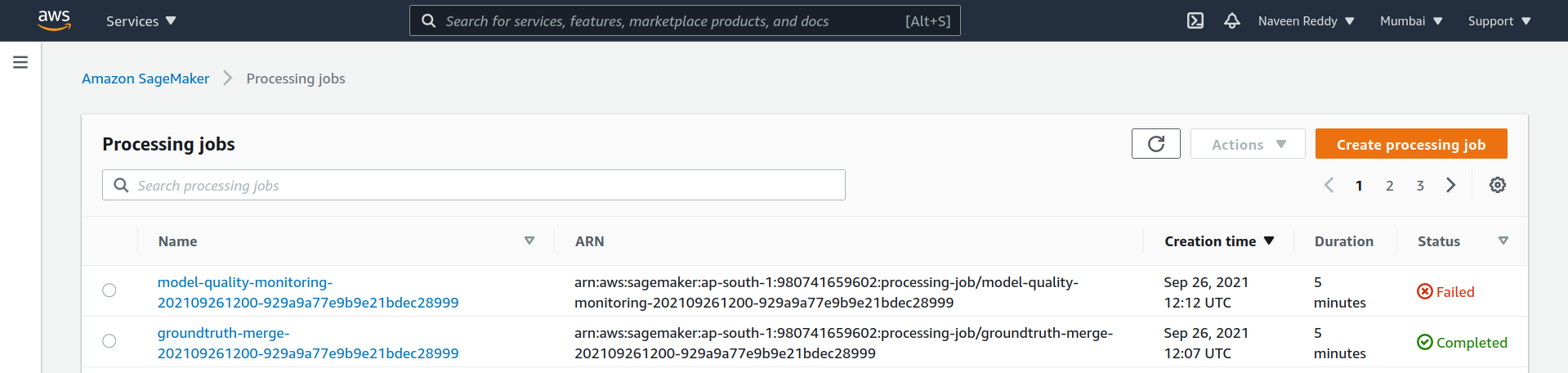

I have scheduled model-quality-monitoring jobs at an hourly frequency. In each run, the ground-truth-merge job completes without issues, but the model-quality-monitoring job exits with code 1 and this error:

Exception in thread "main" com.amazonaws.sagemaker.dataanalyzer.exception.Error: Exception while invoking preprocessor script.

I added print statements to my preprocessor.py script, and I presume the script was never run, because nothing was printed to my CloudWatch logs. So I think the container (or processing job) failed before the script was even run. What is stopping the container from using the script, and how do I fix this error?

To reproduce

I created a model-quality-monitoring schedule as described in this notebook from the sagemaker-examples repo, with a few changes to suit my use case. I have a regression problem (the target variable is continuous), and here is all the code, relevant files, and screenshots.

Here's the code I am using to schedule the monitoring job and to create the alarm in CloudWatch:

## code is almost entirely from the example notebook from sagemaker-examples-repository linked above.

import boto3
from sagemaker import get_execution_role, Session
from sagemaker.model_monitor import (
    CronExpressionGenerator,  # needed for the hourly schedule expression below
    EndpointInput,
    ModelQualityMonitor,
)

# create clients
sm_boto_client = boto3.client('sagemaker')
sm_session = Session()
cw_boto_client = boto3.Session().client("cloudwatch")

# initialize relevant variables
endpoint_name = 'ch-mqm-ep-try31'
try_num = 34

# make a monitoring schedule name
ch_monitor_schedule_name = f"ch-mqm-sched-try{try_num}-sor"

# create an EndpointInput instance
endpointInput = EndpointInput(
    endpoint_name=endpoint_name,
    destination="/opt/ml/processing/input_data",
    inference_attribute='data',
)

# create ModelQualityMonitor instance
ch_mqm_try34_sor = ModelQualityMonitor(
    role=get_execution_role(),
    instance_count=1,
    instance_type="ml.m5.xlarge",
    volume_size_in_gb=30,
    max_runtime_in_seconds=1800,
    sagemaker_session=sm_session,
)

# create the schedule now
# (preprocessor_s3_uri is the S3 URI of preprocessor.py; its definition is not shown here)
mon_sched_suffix = f"ch-mqm-ep-try{try_num}-sor"
ch_mqm_try34_sor.create_monitoring_schedule(
    monitor_schedule_name=ch_monitor_schedule_name,
    endpoint_input=endpointInput,
    record_preprocessor_script=preprocessor_s3_uri,
    output_s3_uri=f's3://api-trial/{mon_sched_suffix}_monitoring_schedule_outputs',
    problem_type="Regression",
    ground_truth_input=f's3://api-trial/{endpoint_name}_groundtruths',
    constraints='s3://api-trial/ch-mqm-ep-25-5_mqm_artifacts/constraints.json',
    schedule_cron_expression=CronExpressionGenerator.hourly(),
    enable_cloudwatch_metrics=True,
)

# create the CloudWatch alarm now
alarm_name = f"MODEL_QUALITY_R2_try{try_num}_sor"
alarm_desc = (
    "Trigger a CloudWatch alarm when the r2 score drifts away from the baseline constraints"
)
model_quality_r2_drift_threshold = (
    0.7238122  # Setting this threshold purposefully low to see the alarm quickly.
)
metric_name = "r2"
namespace = f"aws/sagemaker/Endpoints/model-metrics-try{try_num}-sor"

# put the alarm in CloudWatch now
cw_boto_client.put_metric_alarm(
    AlarmName=alarm_name,
    AlarmDescription=alarm_desc,
    ActionsEnabled=True,
    MetricName=metric_name,
    Namespace=namespace,
    Statistic="Average",
    Dimensions=[
        {"Name": "Endpoint", "Value": endpoint_name},
        {"Name": "MonitoringSchedule", "Value": ch_monitor_schedule_name},
    ],
    Period=600,
    EvaluationPeriods=1,
    DatapointsToAlarm=1,
    Threshold=model_quality_r2_drift_threshold,
    ComparisonOperator="LessThanOrEqualToThreshold",
    TreatMissingData="breaching",
)

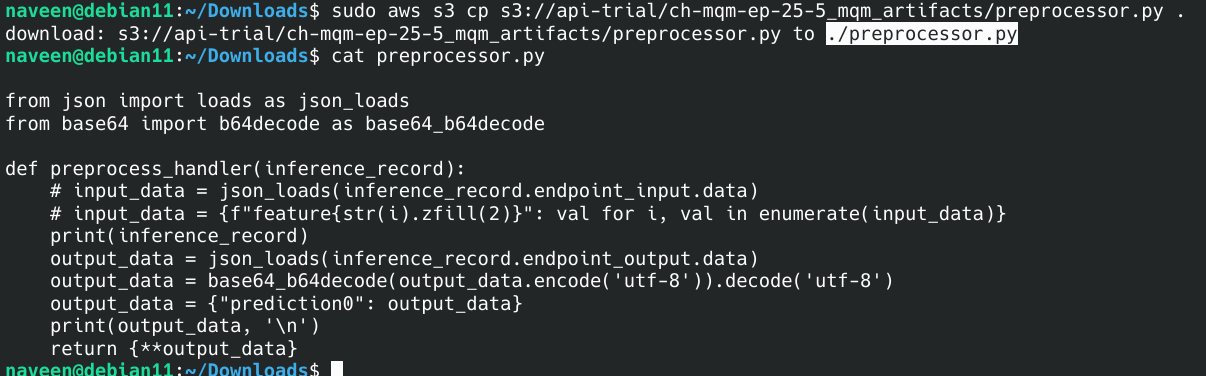

Here's my "preprocessor.py" file:

from json import loads as json_loads
from base64 import b64decode as base64_b64decode

def preprocess_handler(inference_record):
    # input_data = json_loads(inference_record.endpoint_input.data)
    # input_data = {f"feature{str(i).zfill(2)}": val for i, val in enumerate(input_data)}
    print(inference_record)
    output_data = json_loads(inference_record.endpoint_output.data)
    output_data = base64_b64decode(output_data.encode('utf-8')).decode('utf-8')
    output_data = {"prediction0": output_data}
    print(output_data, '\n')
    return {**output_data}
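One way to rule the script itself in or out is to call a handler locally with a stand-in record. The sketch below is only an illustration under stated assumptions: `make_record` and `preprocess_handler_decode_only` are hypothetical names, the `SimpleNamespace` object merely imitates the `endpoint_output.data` attribute and is not the container's real record class, and it assumes the handler receives the same Base64 text that appears in the captured output (the sample value is taken from the merged ground truth file quoted in this report). Under that assumption, calling `json.loads` on the raw payload raises before any decoding happens, so decoding first may be worth trying.

```python
import base64
import json
from types import SimpleNamespace


def make_record(output_b64):
    """Build a stand-in record (hypothetical shape, not the container's class)."""
    return SimpleNamespace(
        endpoint_input=None,
        endpoint_output=SimpleNamespace(data=output_b64, encoding="BASE64"),
    )


def preprocess_handler_decode_only(inference_record):
    """Hypothetical variant: Base64-decode the output without json.loads."""
    raw = inference_record.endpoint_output.data
    decoded = base64.b64decode(raw).decode("utf-8")
    return {"prediction0": decoded}


record = make_record("MjAyNTkyLjA=")

# The handler in this report calls json.loads on the payload first.
# If the payload is still Base64 text (assumption), that call raises.
try:
    json.loads(record.endpoint_output.data)
    json_loads_raises = False
except json.JSONDecodeError:
    json_loads_raises = True

result = preprocess_handler_decode_only(record)
print(json_loads_raises, result)
```

If the container already decodes the payload before calling the handler, this check does not apply, and the cause lies elsewhere.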

Here are a couple of lines from the merged ground truth file:

{"eventVersion":"0","groundTruthData":{"data":170300.0,"encoding":"CSV"},"captureData":{"endpointOutput":{"data":"MjAyNTkyLjA=","encoding":"BASE64","mode":"OUTPUT","observedContentType":"text/html; charset=utf-8"}},"eventMetadata":{"eventId":"0faaafcf-5b6c-4dd9-8831-f734eed9aaa0","inferenceId":"60b48ef7-4609-4322-ac5d-e72ccd5d9492","inferenceTime":"2021-09-26T06:40:40Z"}}

{"eventVersion":"0","groundTruthData":{"data":152600.0,"encoding":"CSV"},"captureData":{"endpointOutput":{"data":"MTQ5NzU2LjA=","encoding":"BASE64","mode":"OUTPUT","observedContentType":"text/html; charset=utf-8"}},"eventMetadata":{"eventId":"7400f3e7-0b00-47c2-b39e-efd9cc155a45","inferenceId":"907f0ada-a61b-476e-8a6c-a6d284a249da","inferenceTime":"2021-09-26T06:40:42Z"}}
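For reference, the fields in these merged lines can be read with the standard library alone. This is a minimal sketch, not part of the monitoring job: it parses the first line shown above, Base64-decodes `captureData.endpointOutput.data`, and pairs the prediction with the ground truth value. The variable names are illustrative.

```python
import base64
import json

# First merged line, copied verbatim from the file excerpt above.
merged_line = '{"eventVersion":"0","groundTruthData":{"data":170300.0,"encoding":"CSV"},"captureData":{"endpointOutput":{"data":"MjAyNTkyLjA=","encoding":"BASE64","mode":"OUTPUT","observedContentType":"text/html; charset=utf-8"}},"eventMetadata":{"eventId":"0faaafcf-5b6c-4dd9-8831-f734eed9aaa0","inferenceId":"60b48ef7-4609-4322-ac5d-e72ccd5d9492","inferenceTime":"2021-09-26T06:40:40Z"}}'

record = json.loads(merged_line)
endpoint_output = record["captureData"]["endpointOutput"]

# The captured prediction is Base64-encoded text, not JSON.
if endpoint_output["encoding"] != "BASE64":
    raise ValueError("unexpected encoding: " + endpoint_output["encoding"])

prediction = float(base64.b64decode(endpoint_output["data"]).decode("utf-8"))
ground_truth = float(record["groundTruthData"]["data"])

print(prediction, ground_truth)
```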

Expected behavior

I expect the job to use the preprocessor script I provided when creating the schedule, calculate the R2 (R^2) metric, and update the alarm in CloudWatch accordingly.

Screenshots or logs

Here's the screenshot of both the jobs:

Here's the screenshot of log of the failed model-quality-monitoring job:

Please tell me if any other logs are needed.

System information

A description of your system. Please provide:

(I am doing all of this from SageMaker Studio: scheduling the jobs and running all the scripts. I am also not using any custom Docker images.)

- SageMaker Python SDK version: 2.59.3 (on sagemaker studio)

- Framework name (eg. PyTorch) or algorithm (eg. KMeans): sagemaker-model-monitor-analyzer (for a scheduled model-quality-monitoring job)

- Framework version: (I don't know the version of the container, it had no docker tag)

- Python version: 3.7.10 (default, Jun 4 2021, 14:48:32) (printed by sys.version on Studio)

- CPU or GPU: CPU

- Custom Docker image (Y/N): N

Additional context

- Since the error is about preprocessing script note being run, here it is in S3 with the code I have shown above:

- I have also tried another scheduling job with naming my script

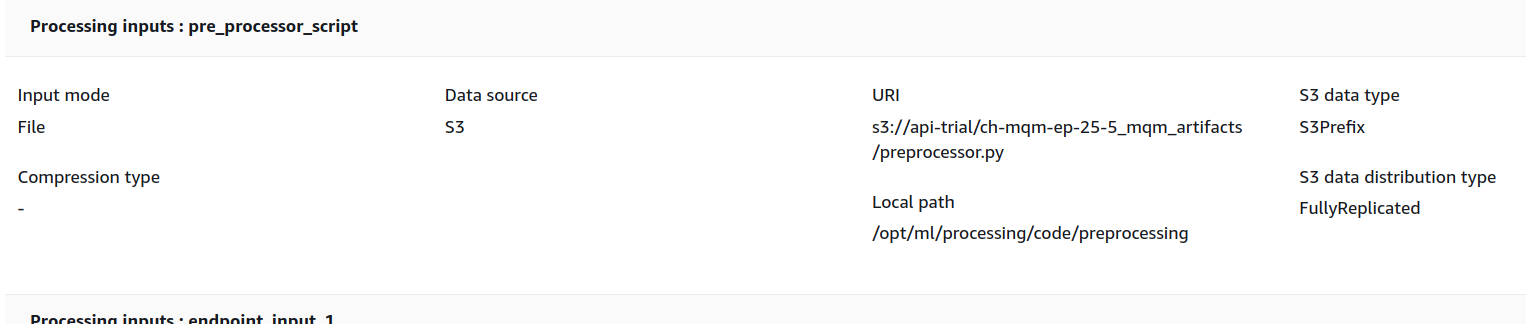

preprocessing.py, and that generated same error too. - in the details of job, on sagemaker console, you can see the script being fetched for scheduled-monitoring job here:

How do I fix this error?