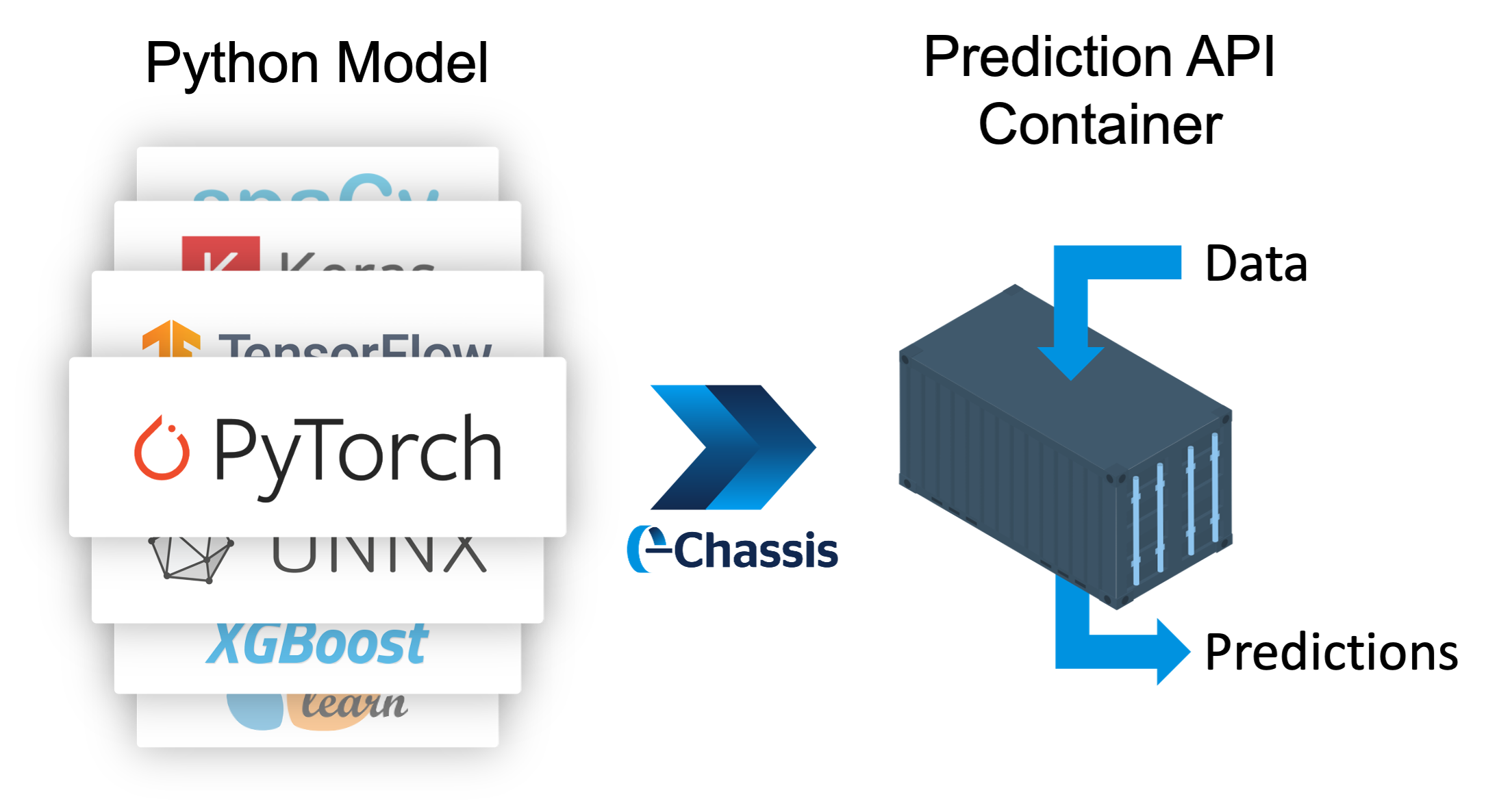

Chassis turns ML models written in Python into containerized prediction APIs in just minutes. We built it to be an easier way to put our models into containers and ship them to production.

Chassis picks up right where your training code leaves off and builds containers for a variety of target architectures. This means that after completing a single Chassis job, you can run your models in the cloud, on-prem, or on a fleet of edge devices (Raspberry Pi, NVIDIA Jetson Nano, Intel NUC, etc.).
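To make "picks up right where your training code leaves off" more concrete, here is a minimal sketch of the kind of bytes-in, bytes-out prediction function that a containerized prediction API can wrap. It uses only the Python standard library. The names `toy_model` and `predict`, the `"input"` and `"results.json"` keys, and the averaging "model" are assumptions for illustration, not Chassis's documented interface.

```python
from __future__ import annotations

import json
from typing import Mapping


def toy_model(features: list[float]) -> float:
    """Hypothetical stand-in for a trained model: returns the mean of the features."""
    return sum(features) / len(features)


def predict(input_bytes: Mapping[str, bytes]) -> dict[str, bytes]:
    """Decode a JSON payload, run the model, and encode the result as JSON bytes."""
    # Assumed convention: the request carries a JSON list of numbers under "input".
    features = json.loads(input_bytes["input"])
    score = toy_model(features)
    # Assumed convention: the response is JSON bytes under "results.json".
    return {"results.json": json.dumps({"score": score}).encode("utf-8")}


if __name__ == "__main__":
    sample = {"input": json.dumps([1.0, 2.0, 3.0]).encode("utf-8")}
    print(predict(sample))
```

Keeping the function's inputs and outputs as raw bytes means the same code can sit behind any transport, which is what makes it straightforward to package into a container.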

- Turns models into containers, automatically
- Creates easy-to-use prediction APIs
- Builds containers locally on Docker or as a K8s service
- Chassis containers run on Docker, containerd, Modzy, and more
- Compiles for both x86 and ARM processors
- Supports GPU batch processing
- No missing dependencies, perfect for edge AI
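Because containers can target both x86 and ARM, it can help to know which image architecture a given device needs. The sketch below maps the value of `platform.machine()` to the platform strings that Docker uses (`linux/amd64`, `linux/arm64`, `linux/arm/v7`). The `docker_platform` helper is hypothetical and is not part of Chassis. The mapping covers only common machine names.

```python
from __future__ import annotations

import platform

# Common platform.machine() values mapped to Docker platform strings.
_DOCKER_PLATFORMS = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",
    "aarch64": "linux/arm64",
    "arm64": "linux/arm64",
    "armv7l": "linux/arm/v7",
}


def docker_platform(machine: str | None = None) -> str:
    """Return the Docker platform string for a machine name (default: this host)."""
    name = (machine or platform.machine()).lower()
    try:
        return _DOCKER_PLATFORMS[name]
    except KeyError:
        raise ValueError(f"unrecognized machine type: {name!r}") from None


if __name__ == "__main__":
    print(docker_platform())
```

Running this on a target device (for example, a 64-bit Raspberry Pi) indicates which architecture variant of an image that device should pull.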

Install Chassis on your machine or in a virtual environment via PyPI:

`pip install "chassisml[quickstart]"`
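After installing, one way to confirm that the package is visible to the current interpreter is to query its installed version with the standard library's `importlib.metadata`. This is a minimal sketch. The `installed_version` helper is a hypothetical name, and the check only reports whether a distribution called `chassisml` is installed, not whether Docker or other build prerequisites are available.

```python
from __future__ import annotations

from importlib import metadata


def installed_version(dist_name: str) -> str | None:
    """Return the installed version of a distribution, or None if it is absent."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version("chassisml")
    if version:
        print(f"chassisml {version} is installed")
    else:
        print("chassisml is not installed in this environment")
```

Running the check inside the same virtual environment used for `pip install` avoids false negatives caused by a different interpreter being on the path.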


Framework-specific examples:

- 🤗 Diffusers
- 🤗 Transformers
- Coming soon...

Join the #chassisml channel on Modzy's Discord server, where our maintainers meet to plan changes and improvements.

We also have a #chassis-model-builder Slack channel on the MLOps.community Slack!

- Bradley Munday 💻 🤔 🚧 💬
- Seth Clark 🖋 📖 📆
- Clayton Davis 💻 📖 🤔 📆
- Nathan Mellis 🤔 🚇 💻
- saumil-d 💻 📖 ✅ 🤔
- lukemarsden 📖 📆 🤔 📢 📹
- Carlos Millán Soler 💻
- Douglas Holman 💻
- Phil Winder 🤔
- Sonja Hall 🎨