🌟 Generative UI: LLMs are Effective UI Generators

Yaniv Leviathan, Dani Valevski, Matan Kalman, Danny Lumen

Eyal Segalis, Eyal Molad, Shlomi Pasternak, Vishnu Natchu, Valerie Nygaard, Srinivasan (Cheenu) Venkatachar, James Manyika, Yossi Matias

Google Research

📄 Paper

AI models excel at creating content, but typically render it with static, predefined interfaces. Specifically, the output of LLMs is often a markdown “wall of text”. Generative UI is a long-standing promise in which the model generates not just the content, but the interface itself. Until now, Generative UI was not possible in a robust fashion.

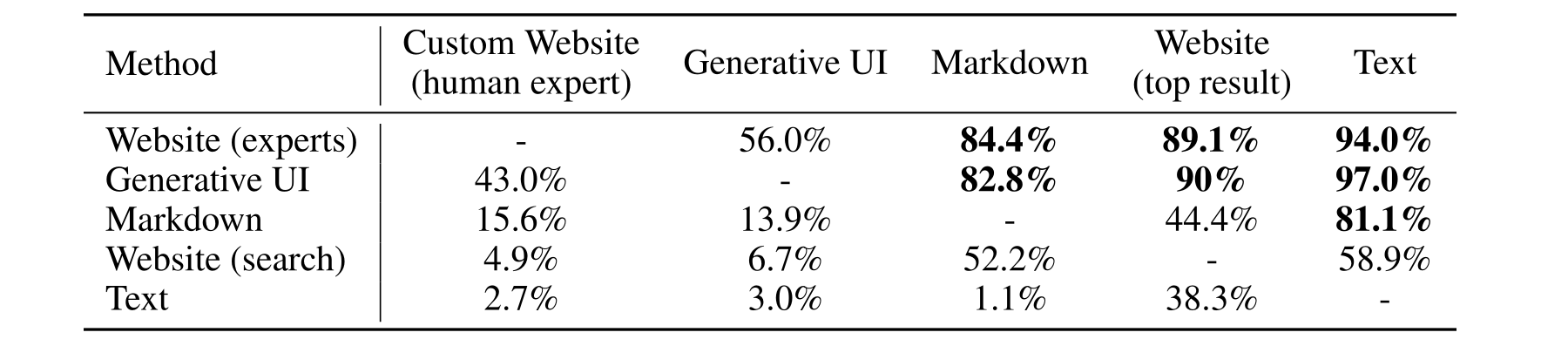

We demonstrate that when properly prompted and equipped with the right set of tools, a modern LLM can robustly produce high-quality custom UIs for virtually any prompt. When generation speed is ignored, results generated by our implementation are overwhelmingly preferred by humans over the standard LLM markdown output.

In fact, while the results generated by our implementation are worse than those crafted by human experts, they are at least comparable in 44% of cases. We show that this ability for robust Generative UI is emergent, with substantial improvements over previous models.

We also create and release PAGEN, a novel dataset of expert-crafted results to aid in evaluating Generative UI implementations, as well as the results of our system for future comparisons.

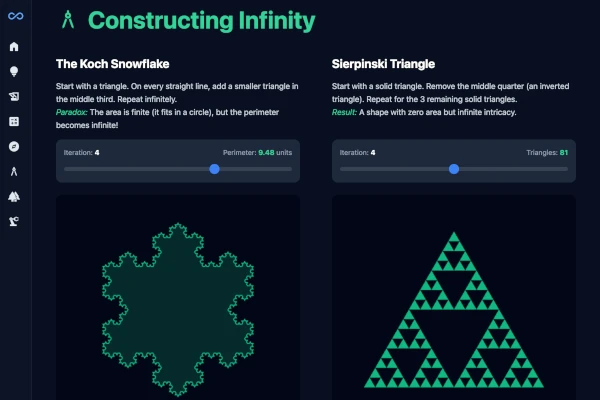

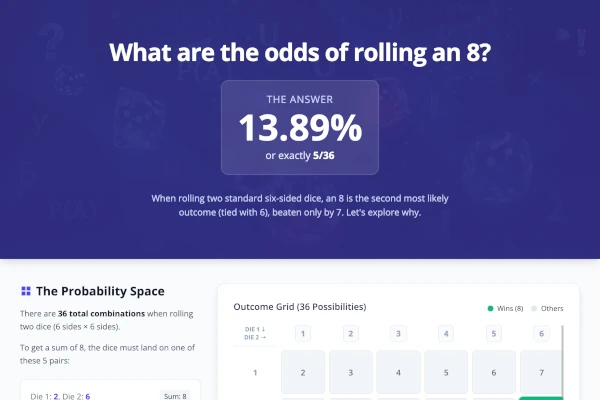

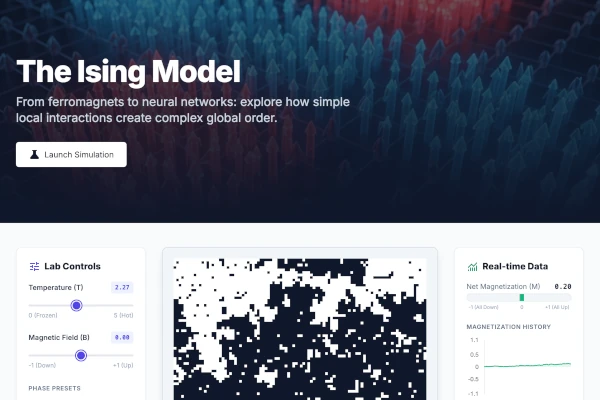

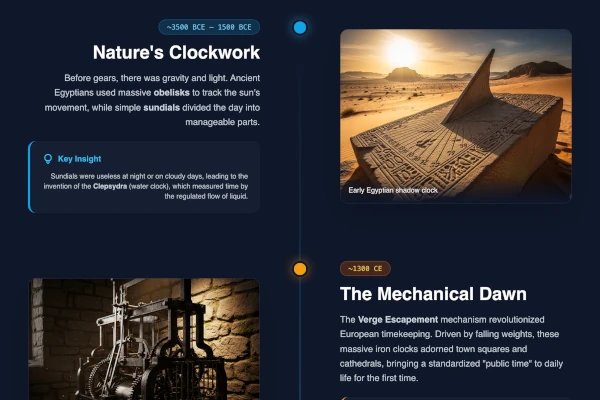

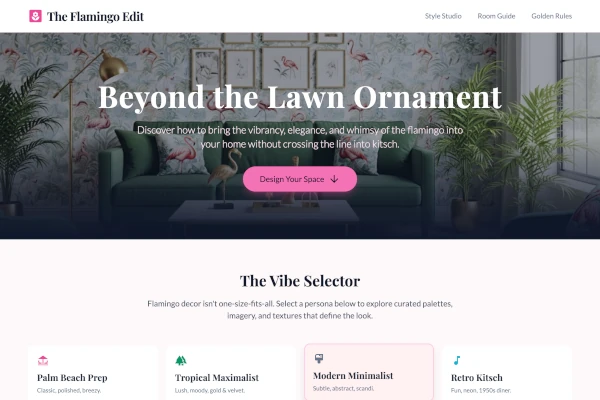

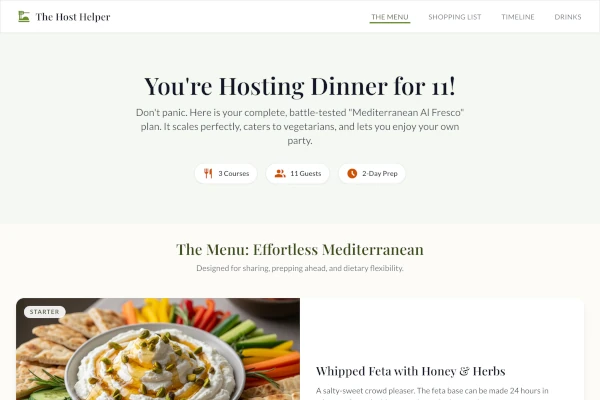

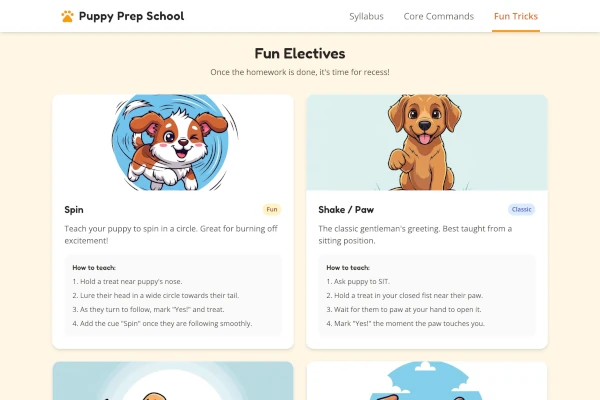

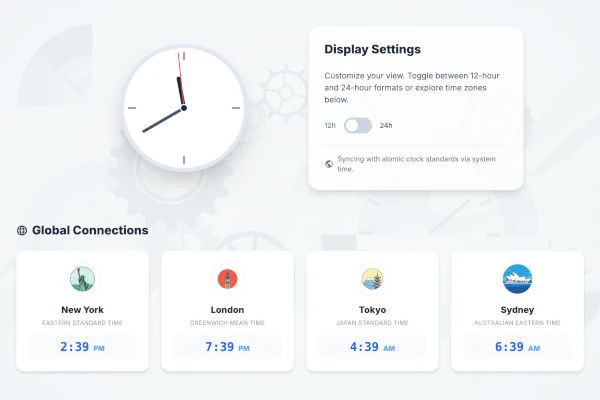

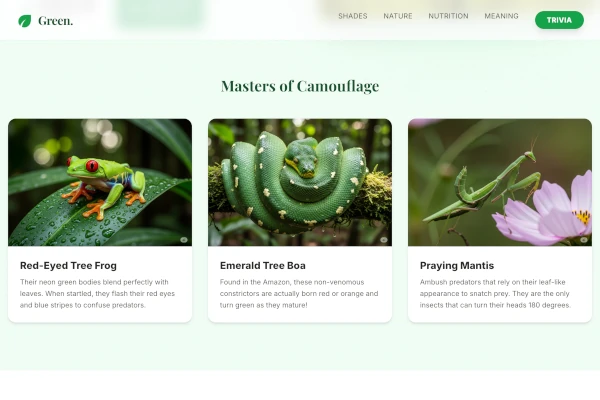

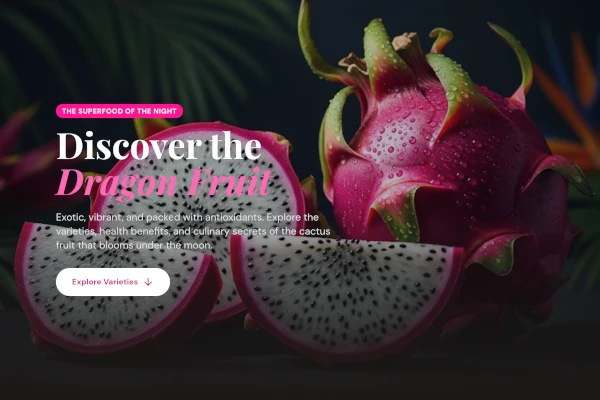

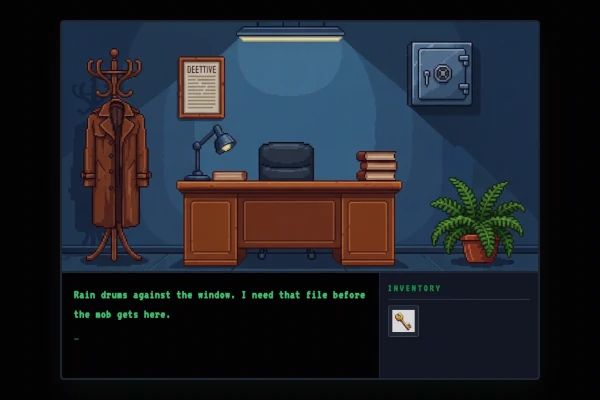

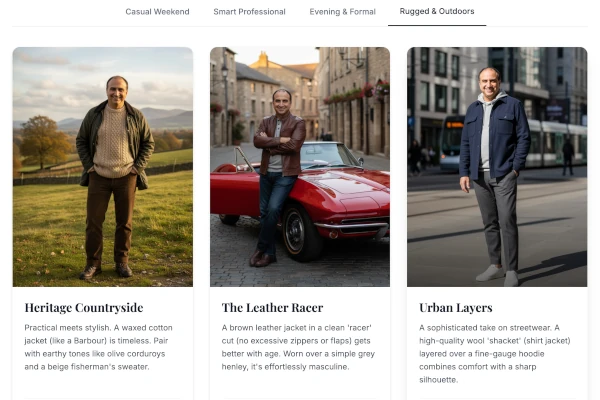

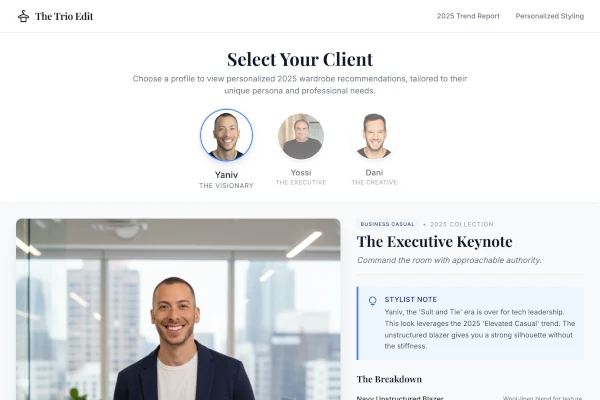

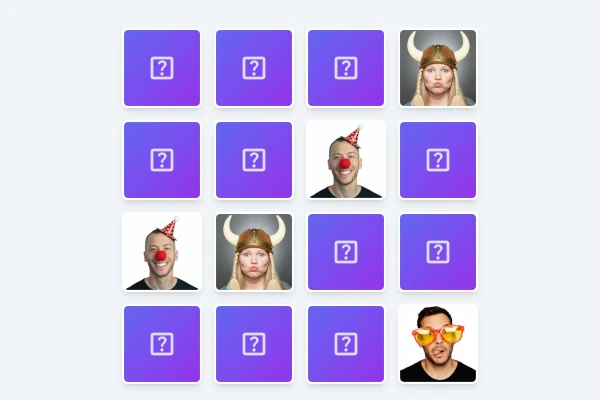

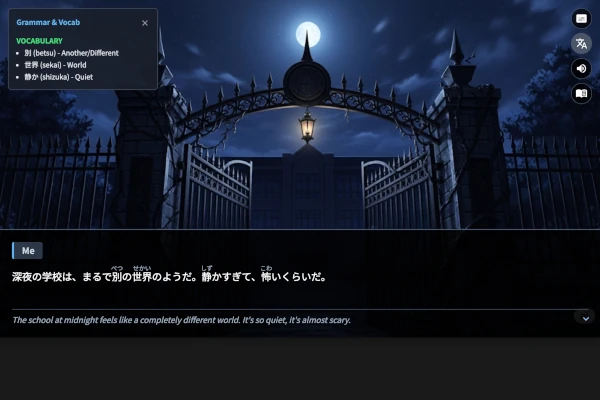

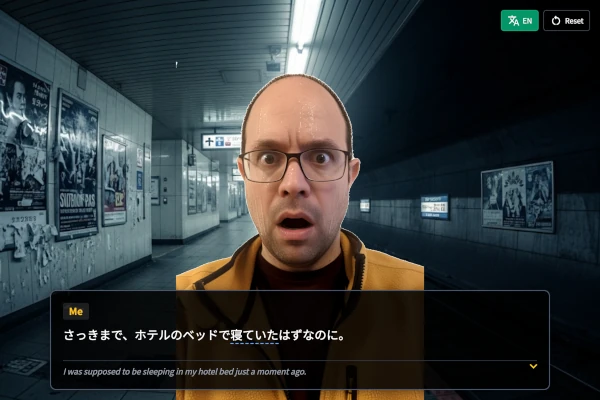

Generative UI can be useful in a wide range of scenarios. In this section you can find examples generated automatically by our implementation from a user prompt. Click on an example to access the generated result.

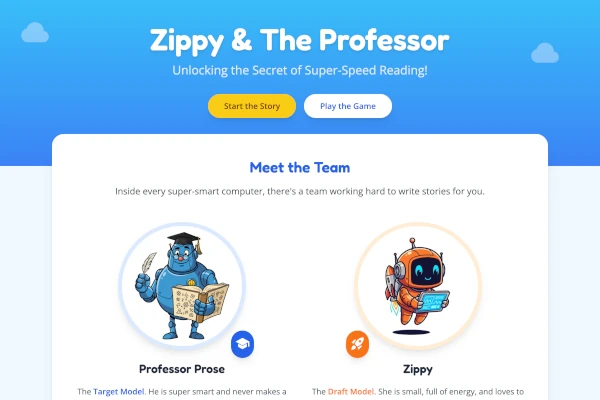

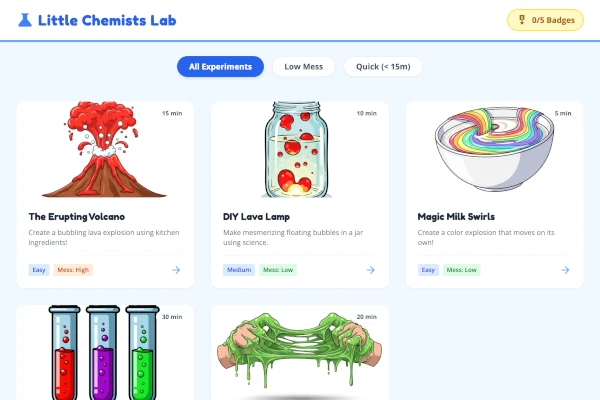

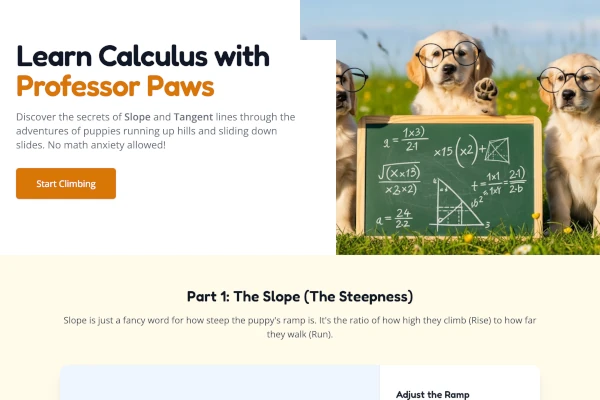

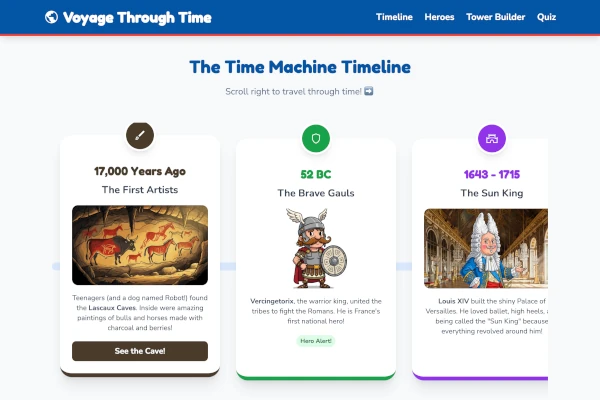

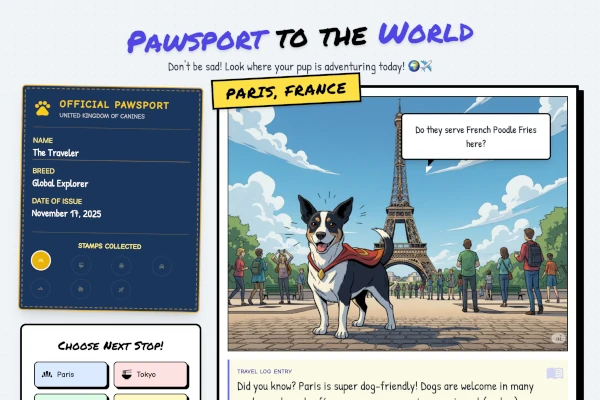

One category in which generative UI is particularly helpful is education.

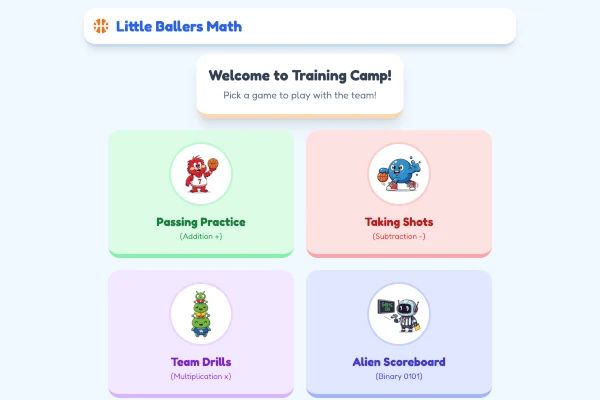

Generative UI excels at extreme customization. For example, here are results for education for children:

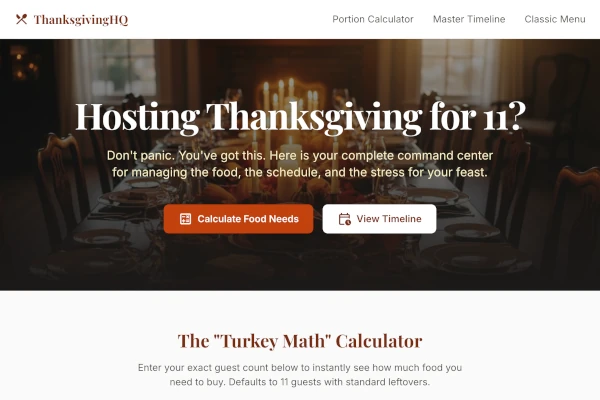

Generative UI can also be helpful for practical tasks:

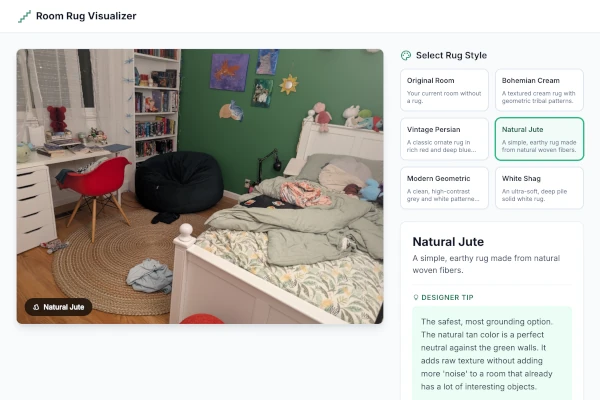

Generative UI can generate surprising results even for simple queries:

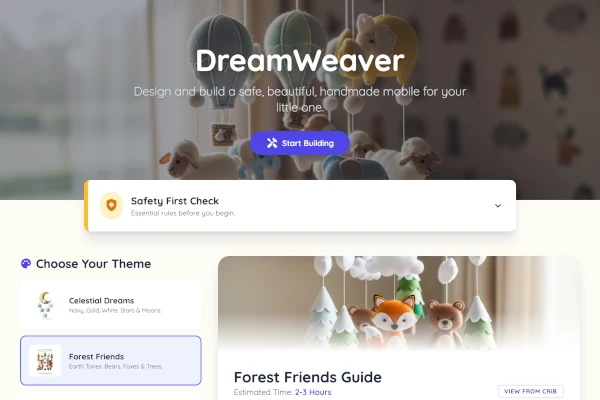

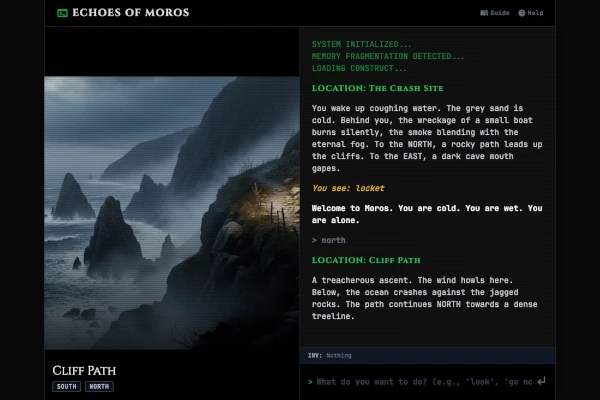

Generative UI can also generate fun applications and games:

Our Generative UI implementation results in a fully generated web page that is rendered as-is in the user's browser. To that end, we employ three main components:

- A server which exposes several endpoints enabling access to key tools, such as image generation and search.

- Carefully crafted system instructions for Gemini, which include the goal, planning guidelines, examples and more.

- A set of post-processors which fix a set of common issues that couldn't be fixed with the system instructions alone.
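
To make the flow between these components concrete, here is a minimal Python sketch of how a generated page might pass through a chain of post-processors. It is an illustration under stated assumptions, not our actual implementation: the `SYSTEM_INSTRUCTIONS` placeholder, the `generate_page` function, the `model` callable, and the two example post-processors (`strip_markdown_fence`, `ensure_doctype`) are all hypothetical names chosen for this example, and the real system instructions and fixes are described in the paper.

```python
import re
from typing import Callable, Iterable

# Hypothetical placeholder; the real system instructions include the goal,
# planning guidelines, examples and more.
SYSTEM_INSTRUCTIONS = "You generate a complete, self-contained HTML page."

# A post-processor takes the generated HTML and returns a repaired version.
PostProcessor = Callable[[str], str]
# The model is abstracted as a callable: (system_instructions, prompt) -> raw output.
Model = Callable[[str, str], str]


def strip_markdown_fence(html: str) -> str:
    """Remove a wrapping markdown code fence if the model emitted one (assumed issue)."""
    match = re.match(r"^\s*```(?:html)?\s*\n(.*?)\n```\s*$", html, flags=re.DOTALL)
    return match.group(1) if match else html


def ensure_doctype(html: str) -> str:
    """Prepend a doctype when the page lacks one (assumed issue)."""
    if html.lstrip().lower().startswith("<!doctype"):
        return html
    return "<!DOCTYPE html>\n" + html


def generate_page(prompt: str, model: Model,
                  post_processors: Iterable[PostProcessor]) -> str:
    """Query the model once, then apply each post-processor in order."""
    page = model(SYSTEM_INSTRUCTIONS, prompt)
    for fix in post_processors:
        page = fix(page)
    return page
```

Keeping each fix as a small, independent function means a new common failure can be handled by appending one more post-processor, without touching the system instructions.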

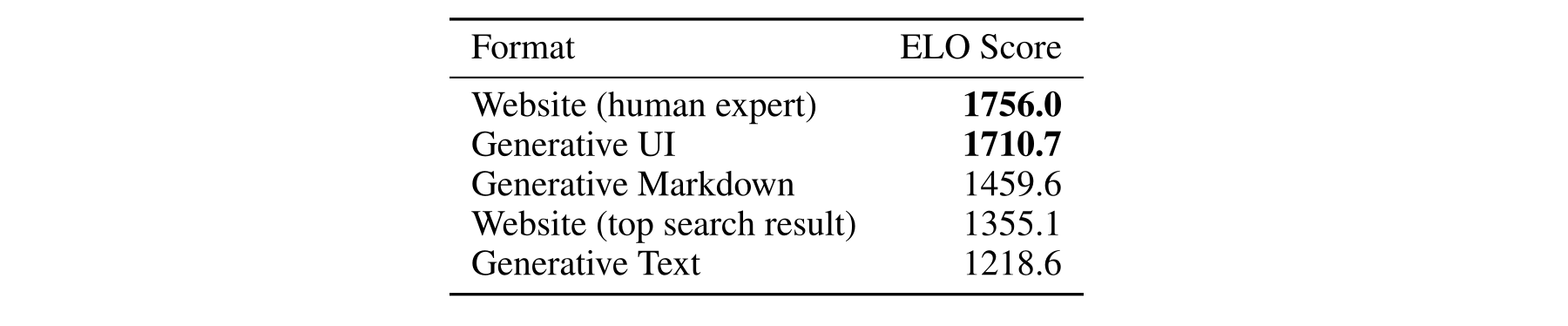

We evaluate user preference across several different result formats:

- A custom website crafted for the prompt by a human expert

- The top Google Search result for the query

- Text (LLM output without markdown)

- Standard LLM output (in markdown format)

- Our Generative UI implementation

We randomly sampled 100 prompts from LMArena and collected pairwise preferences from human raters, sending each result to two raters.

Generative UI obtains an Elo score of 1710.7, indicating a strong user preference over all other formats, except human experts. See more details in the paper.
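
For readers unfamiliar with how pairwise preferences turn into a single rating, the following is a minimal sketch of a standard sequential Elo update. It is not necessarily the exact procedure used in the paper: the K-factor of 32, the initial rating of 1500, the function names, and the format labels in the usage note are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A is preferred over B under the standard Elo model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def elo_from_pairs(comparisons: Iterable[Tuple[str, str]],
                   k: float = 32.0,
                   initial: float = 1500.0) -> Dict[str, float]:
    """Compute Elo ratings from (winner, loser) pairs, processed in order.

    k and initial are illustrative defaults, not values from the paper.
    """
    ratings: Dict[str, float] = defaultdict(lambda: initial)
    for winner, loser in comparisons:
        # The winner gains more when the win was less expected.
        delta = k * (1.0 - expected_score(ratings[winner], ratings[loser]))
        ratings[winner] += delta
        ratings[loser] -= delta
    return dict(ratings)
```

For example, `elo_from_pairs([("generative_ui", "markdown")])` moves two equally rated formats 16 points apart in each direction. Because sequential Elo depends on the order of comparisons, a common practice is to average ratings over several random shuffles of the rater judgments.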

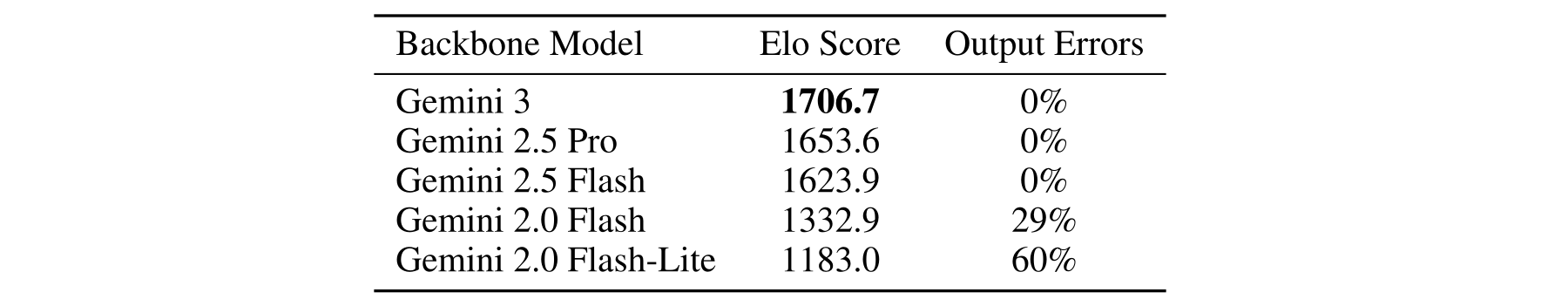

Generative UI is an emergent capability of newer models: we see strong user preference and drastically fewer errors for results generated with the new Gemini models.

This work would not have been possible without the valuable contributions, insightful suggestions, support, feedback and encouragement from Yoav Tzur, Zak Tsai, Hen Fitoussi, Amir Zait, Oren Litvin, Christopher Haire, Liat Ben-Rafael, Ronit Levavi Morad, Kristen Chui, William Li, Ivan Kelber, Chloe Jia, Ryan Allen, Maryam Sanglaji, Tanya Sinha, Josh Woodward, Jeff Dean, and the Theta Labs, Google Research, Google Search, and Gemini teams, and, as always, our families.