I haven't looked into this in detail yet, but I suspect there might be something wrong with the new large model released by OpenAI. Keep in mind that this is very anecdotal evidence at the moment, so I might be completely wrong.

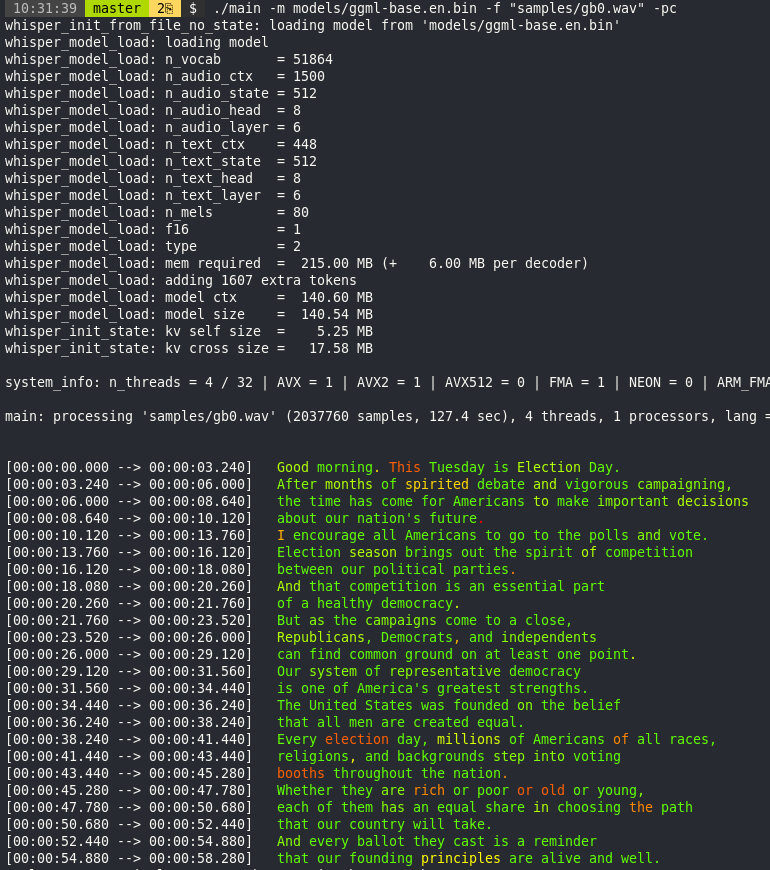

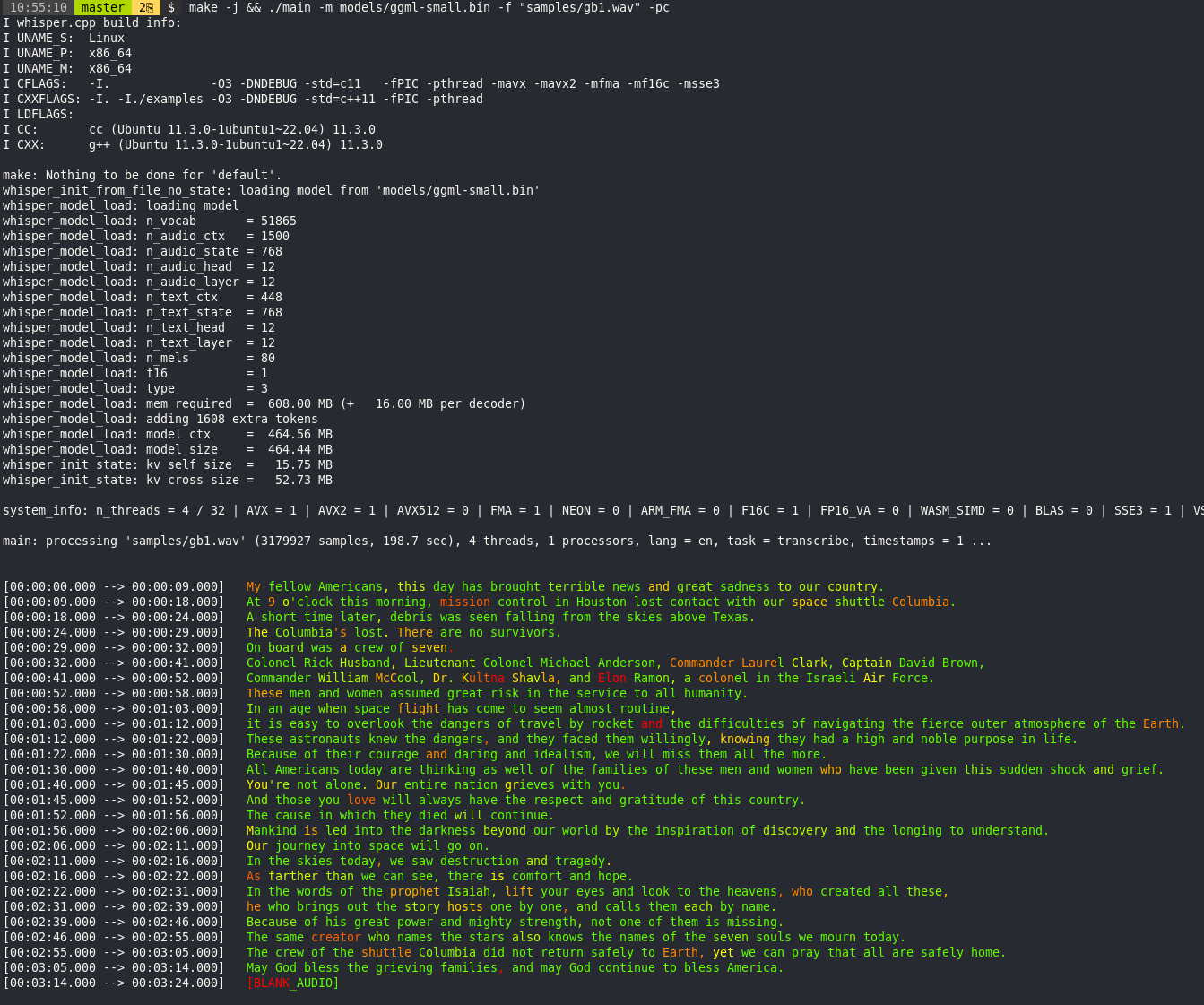

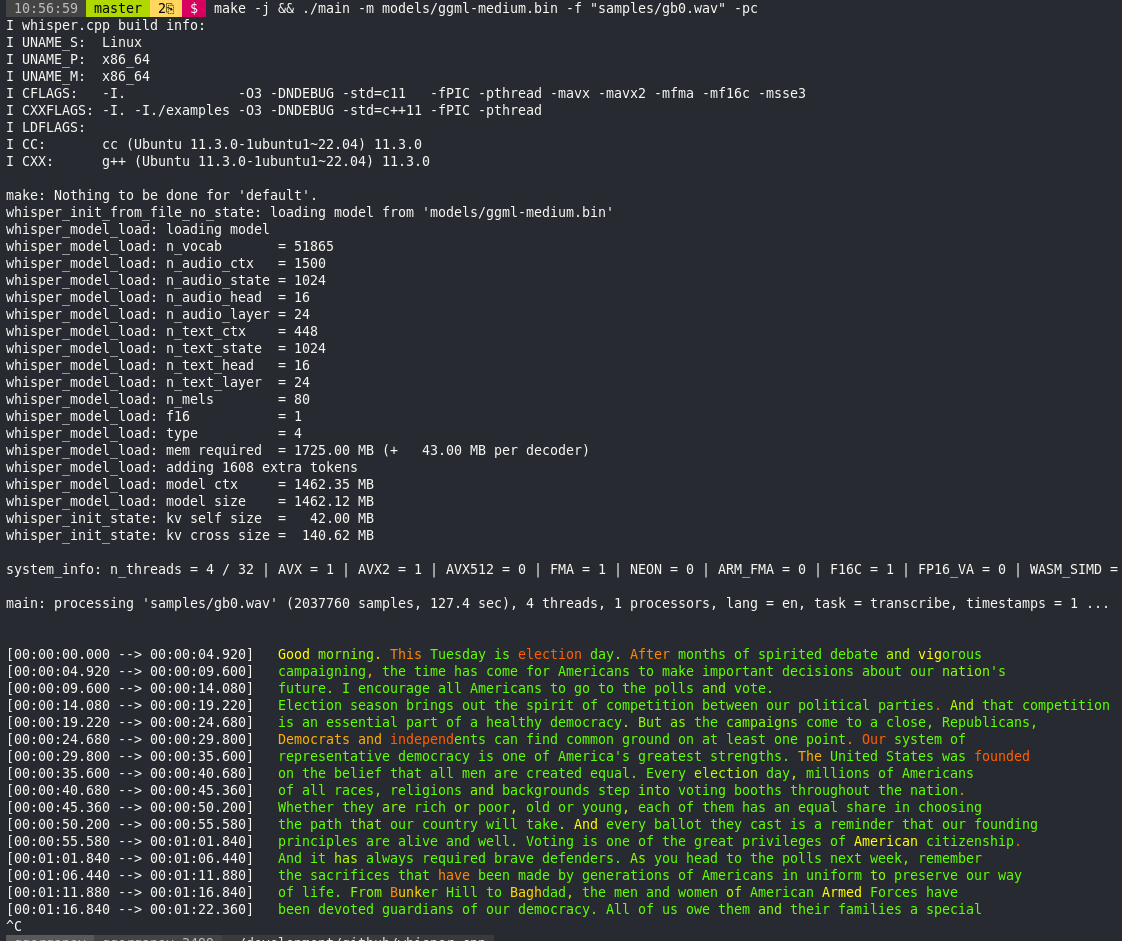

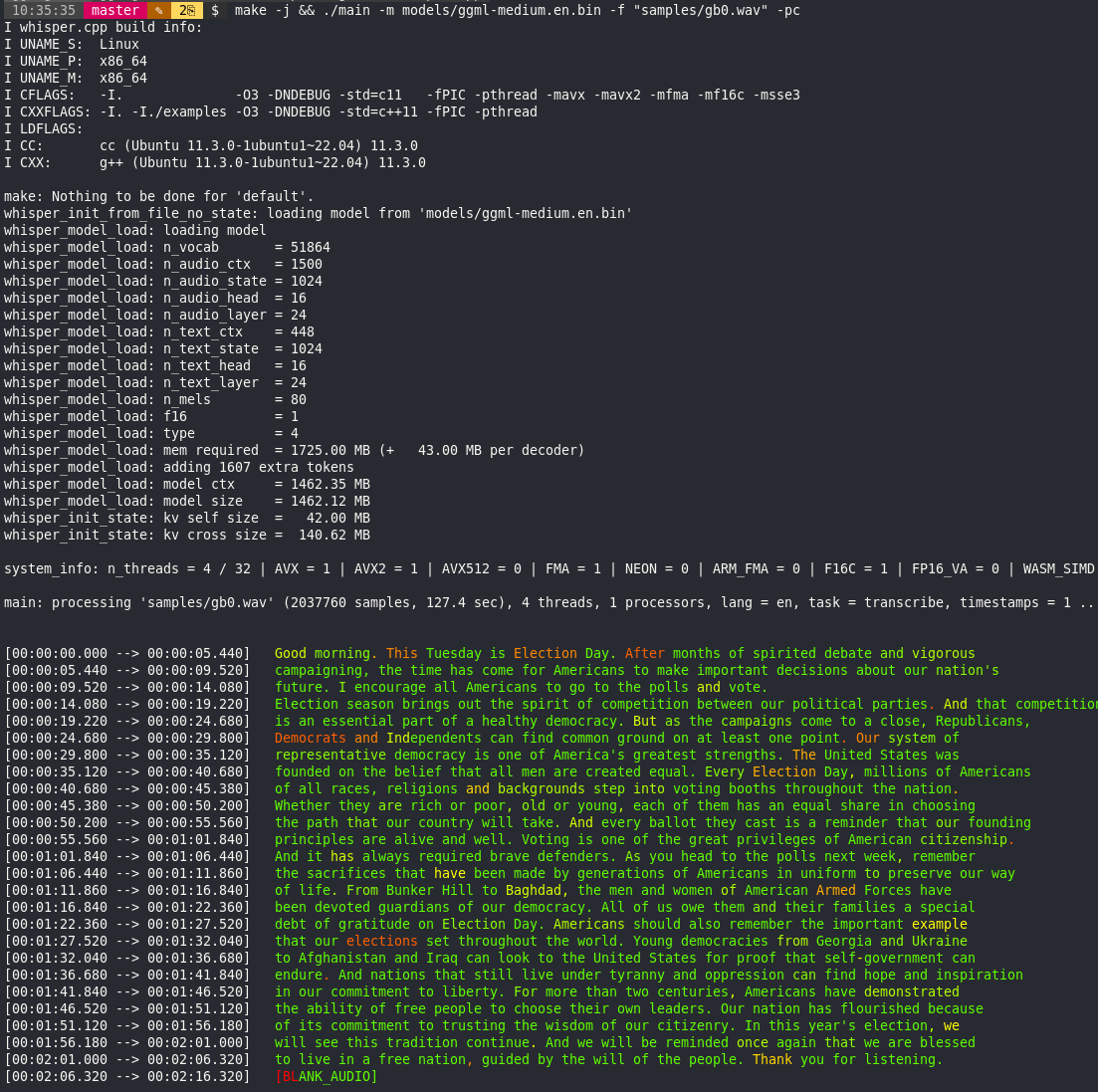

Running the `main` example with color coding of the token probabilities enabled (`-pc`), we normally get the following results:

base.en

small.en

small

medium

medium.en

However, this is what the color coding looks like when using the new large model (i.e. v2):

large

For comparison, this is the same run using the old large model (i.e. v1):

large-v1

So somehow the logits with v2 seem to be all over the place, which is not observed with any of the other models.

I still need to double-check all these observations, but I think there is something not quite right with large-v2.
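One way to make this less anecdotal would be to compare the per-token probabilities numerically instead of judging the colors by eye. The following is only a minimal sketch in Python, assuming the token probabilities of each run have already been exported into plain lists. The function name `summarize_token_probs`, the `low_threshold` parameter, and the sample values are hypothetical and not part of the project.

```python
from statistics import mean, pstdev


def summarize_token_probs(probs, low_threshold=0.5):
    """Summarize the per-token probabilities of one transcription run.

    `probs` is assumed to be a list of floats in [0, 1], one per token.
    `low_threshold` is an arbitrary cut-off chosen for this sketch.
    """
    if not probs:
        raise ValueError("probs must not be empty")
    if any(p < 0.0 or p > 1.0 for p in probs):
        raise ValueError("probabilities must lie in [0, 1]")
    low_count = sum(1 for p in probs if p < low_threshold)
    return {
        "mean": mean(probs),
        "stdev": pstdev(probs),  # population standard deviation
        "low_fraction": low_count / len(probs),
    }


if __name__ == "__main__":
    # Placeholder values for illustration only, not measured output.
    steady_run = [0.90, 0.95, 0.92, 0.97]
    erratic_run = [0.90, 0.20, 0.80, 0.10]
    print("steady: ", summarize_token_probs(steady_run))
    print("erratic:", summarize_token_probs(erratic_run))
```

If the v2 probabilities really are erratic, a run on the same audio should show a noticeably higher `stdev` and `low_fraction` than the other models.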
