A real-time facial emotion detection system that uses MediaPipe Face Mesh and the TensorFlow.js KNN Classifier to classify emotions from facial expressions. It provides web-based training and Python-based real-time detection.

- Problem Statement

- Solution

- Features

- Technology Stack

- Project Structure

- Quick Start

- Usage

- Screenshots

- Contributing

- License

Traditional emotion detection systems often require:

- Complex setup - Difficult installation and configuration

- Limited customization - Pre-trained models with fixed emotions

- Poor accuracy - Generic models that don't adapt to individual users

- No real-time capability - Static image analysis only

- Platform limitations - Tied to specific frameworks or languages

This project provides a hybrid approach combining:

- Interactive data collection - Users train their own custom emotion models

- Real-time feedback - See emotions detected as you collect training data

- Export capability - Save trained models for use in other applications

- Cross-platform - Works in any modern web browser

- Live video analysis - Real-time emotion detection from webcam

- Custom model support - Use models trained in the web interface

- Visual feedback - Emotion overlay with confidence scores

- Cross-platform - Works on Windows, macOS, and Linux

- Real-time face detection using MediaPipe Face Mesh

- Custom emotion training with 30+ predefined emotions

- Interactive data collection with visual feedback

- KNN Classifier for personalized emotion recognition

- Model export to JSON format for Python usage

- Beautiful UI with glassmorphism design

- Responsive layout for desktop and mobile

- Real-time emotion detection from webcam feed

- Custom model loading from exported JSON files

- Visual emotion overlay with emoji and confidence scores

- Display of all emotion percentages for detailed analysis

- Screenshot capture with timestamp

- MediaPipe integration with OpenCV fallback

- Cross-platform compatibility
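The overlay and screenshot features listed above can be illustrated with a small sketch. This is not the project's code: the emoji table, the fallback emoji, the `emotion` filename prefix, and both function names are hypothetical, chosen only to show how a label and confidence score might be turned into overlay text and a timestamped filename.

```python
from datetime import datetime

# Hypothetical mapping; the project's real table covers 30+ emotions.
EMOJI_BY_EMOTION = {
    "happy": "😊",
    "sad": "😢",
    "angry": "😠",
    "surprised": "😲",
}
FALLBACK_EMOJI = "🙂"  # assumed default for labels missing from the table


def overlay_text(label, confidence):
    """Build the text shown next to the face, e.g. emoji, label, percent."""
    emoji = EMOJI_BY_EMOTION.get(label.lower(), FALLBACK_EMOJI)
    return f"{emoji} {label} ({confidence:.0%})"


def screenshot_filename(now=None, prefix="emotion"):
    """Return a timestamped PNG filename such as emotion_20240102_030405.png."""
    now = now or datetime.now()
    return f"{prefix}_{now:%Y%m%d_%H%M%S}.png"


print(overlay_text("happy", 0.87))
print(screenshot_filename(datetime(2024, 1, 2, 3, 4, 5)))
```

Looking up the emoji with a fallback keeps the overlay working when a user trains a custom emotion that has no entry in the table.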

- 52 blend shape scores per face from MediaPipe Face Mesh, used as a 52-dimensional feature vector

- K-Nearest Neighbors classification for emotion prediction

- Real-time processing at 30 FPS

- Confidence scoring for prediction reliability

- Dynamic emoji mapping for 30+ emotions

- Export/import functionality for model portability
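To make the classification step concrete, here is a minimal from-scratch sketch of K-Nearest Neighbors over 52-dimensional vectors, with the vote share used as the confidence score. The project lists scikit-learn for KNN on the Python side; this standard-library version is only meant to show the mechanics. The function name, `k=3`, and the synthetic training data are assumptions, not values taken from the project.

```python
import math
import random
from collections import Counter

NUM_BLENDSHAPES = 52  # length of each feature vector


def knn_predict(samples, labels, query, k=3):
    """Classify `query` by majority vote among its k nearest samples.

    Returns (best_label, confidence, percentages), where confidence is the
    fraction of the nearest neighbors that voted for the winning label.
    """
    order = sorted(range(len(samples)), key=lambda i: math.dist(samples[i], query))
    nearest = order[:k]
    votes = Counter(labels[i] for i in nearest)
    percentages = {
        label: 100.0 * votes.get(label, 0) / len(nearest)
        for label in sorted(set(labels))
    }
    best_label, best_votes = votes.most_common(1)[0]
    return best_label, best_votes / len(nearest), percentages


# Synthetic stand-in data: "happy" vectors have high scores, "sad" low ones.
rng = random.Random(0)
happy = [[rng.uniform(0.6, 1.0) for _ in range(NUM_BLENDSHAPES)] for _ in range(20)]
sad = [[rng.uniform(0.0, 0.4) for _ in range(NUM_BLENDSHAPES)] for _ in range(20)]
train_samples = happy + sad
train_labels = ["happy"] * 20 + ["sad"] * 20

label, confidence, percentages = knn_predict(
    train_samples, train_labels, [0.8] * NUM_BLENDSHAPES
)
print(label, confidence, percentages)
```

Because KNN stores the training samples themselves rather than learned weights, a trained model can be moved between applications by exporting the labeled vectors.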

- HTML5 - Structure and layout

- CSS3 - Styling with glassmorphism effects

- JavaScript (ES6+) - Application logic

- MediaPipe Tasks Vision - Face landmark detection

- TensorFlow.js - KNN Classifier implementation

- Material Design Components - UI components

- Python 3.8+ - Core language

- OpenCV - Computer vision and video processing

- MediaPipe - Face mesh detection (Python 3.8-3.11)

- scikit-learn - KNN Classifier

- NumPy - Numerical computations

- Node.js - Local development server

- http-server - CORS-enabled file serving

- Git - Version control

- Virtual environments - Dependency isolation

VisionEmojiClassification/

├── index.html # Main web application

├── script.js # Web application logic

├── style.css # Application styling

├── package.json # Node.js dependencies

├── start-server.sh # Server startup script

├── SERVER_SETUP.md # Server setup instructions

├── README.md # Project documentation

├── .gitignore # Git ignore rules

└── pythonTest/ # Python application

    ├── main.py # Real-time detection script

    ├── demo_realtime.py # Demo without trained model

    ├── requirements.txt # Python dependencies

    ├── requirements-mediapipe.txt # Full dependencies

    ├── setup_env.sh # Automated setup (macOS/Linux)

    ├── setup_env.bat # Automated setup (Windows)

    ├── setup.py # Manual setup verification

    ├── README.md # Python usage documentation

    ├── QUICKSTART.md # Quick setup guide

    └── .gitignore # Python-specific ignore rules

# Clone the repository

git clone <repository-url>

cd VisionEmojiClassification

# Start local server

npm install

./start-server.sh

# Open browser to http://localhost:8080

# Navigate to Python directory

cd pythonTest

# Automated setup (macOS/Linux)

./setup_env.sh

# Or manual setup

python3 -m venv emotion_env

source emotion_env/bin/activate

pip install -r requirements.txt

# Run real-time detection

python main.py path/to/exported_model.json

- Open the web application in your browser

- Enable webcam and allow camera permissions

- Select emotions from the dropdown menu

- Collect training data by making facial expressions

- Train the model when you have enough samples

- Export the model to JSON format

- Activate virtual environment

- Run the detection script with your exported model

- Make facial expressions in front of the webcam

- View real-time predictions with confidence scores

- Save screenshots or analyze emotion percentages
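For readers who want to load an exported model in their own Python code, the sketch below shows one way it might look. The JSON schema here is an assumption: this README does not specify the export format, so the example supposes a single object that maps each emotion label to a list of 52-value feature vectors. Check a real exported file and adjust the loader before relying on it; `load_exported_model` is a hypothetical helper, not part of `main.py`.

```python
import json
import tempfile
from pathlib import Path


def load_exported_model(path, expected_dim=52):
    """Read labeled feature vectors from a JSON file.

    Assumed (hypothetical) schema: {"label": [[v1, ..., v52], ...], ...}
    Returns (samples, labels) as two parallel lists.
    """
    data = json.loads(Path(path).read_text(encoding="utf-8"))
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object mapping labels to vectors")
    samples, labels = [], []
    for label, vectors in data.items():
        for vector in vectors:
            if len(vector) != expected_dim:
                raise ValueError(
                    f"{label}: expected {expected_dim} values, got {len(vector)}"
                )
            samples.append([float(v) for v in vector])
            labels.append(label)
    return samples, labels


# Demo with a tiny file written to a temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    demo_path = Path(tmp) / "exported_model.json"
    demo_model = {"happy": [[0.9] * 52], "sad": [[0.1] * 52, [0.2] * 52]}
    demo_path.write_text(json.dumps(demo_model), encoding="utf-8")
    samples, labels = load_exported_model(demo_path)

print(len(samples), labels)
```

Validating the vector length at load time gives a clear error when the file path is right but the file comes from a different tool or an older export.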

# Test the setup without a trained model

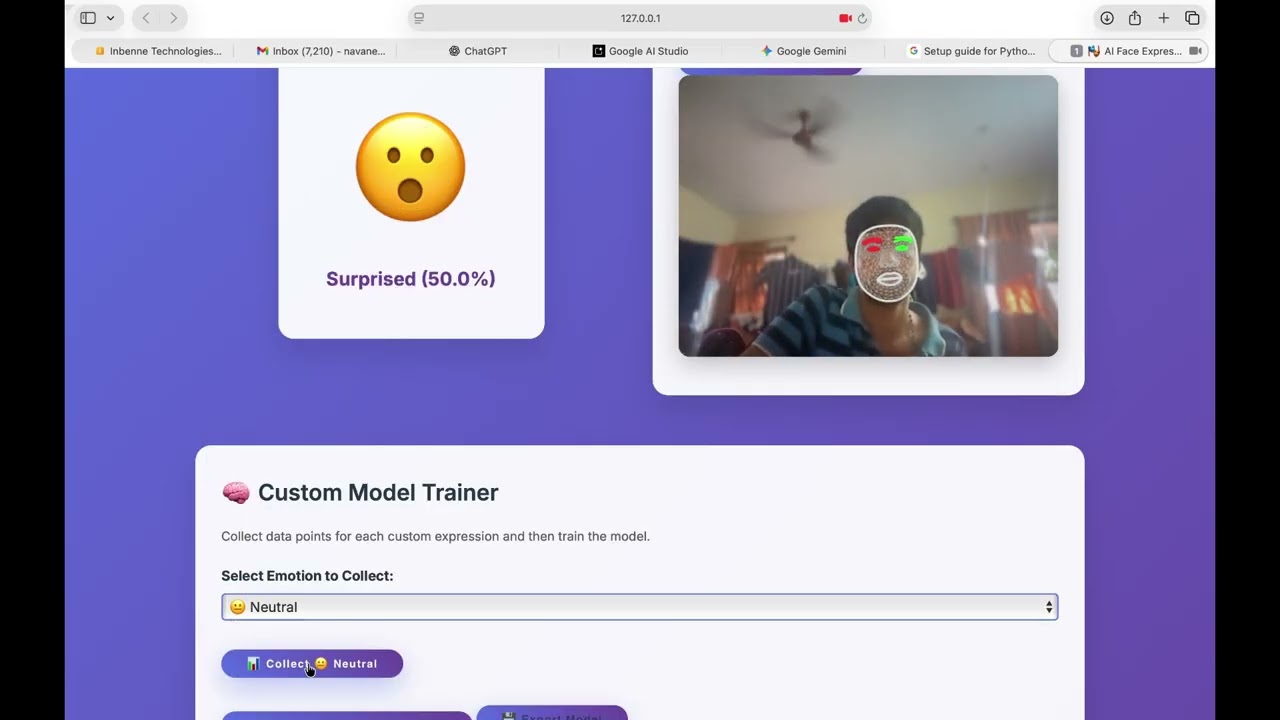

python demo_realtime.pyWatch the project in action! This video demonstrates the complete workflow from training custom emotions to real-time detection.

Click the image above to watch the full demo on YouTube

- Web Application Training - Interactive emotion collection interface

- Real-time Detection - Live emotion classification with confidence scores

- Python Application - Cross-platform real-time video analysis

- Model Export/Import - Seamless workflow between web and Python

- Custom Emotions - Training personalized emotion recognition models

- Training Interface - Interactive emotion collection

- Real-time Detection - Live emotion display

- Model Export - Save trained models

- Real-time Video Feed - Webcam with emotion overlay

- Confidence Display - All emotion percentages

- Face Detection - Green mesh overlay

- Add new emotions to the dropdown menu

- Collect diverse samples for better accuracy

- Balance training data across all emotions

- Export multiple models for different use cases

- Load models in your own Python applications

- Batch processing of images or videos

- API development using Flask or FastAPI

- Data analysis with pandas and matplotlib

- CORS errors - Serve the app from the local server instead of opening it via the file:// protocol

- MediaPipe installation - Requires Python 3.8-3.11

- Webcam permissions - Allow camera access in browser

- Model loading - Ensure JSON file path is correct

- Good lighting - Face the light source for better detection

- Stable position - Avoid rapid head movements

- Sufficient training - Collect 50+ samples per emotion

- Balanced data - Equal samples across all emotions
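The last two tips can be checked automatically before training. The sketch below counts samples per emotion and reports classes that fall under the 50-sample guideline or that are badly out of balance. The function name and the `max_ratio=1.5` imbalance threshold are assumptions made for illustration; the project does not define a specific ratio.

```python
from collections import Counter


def check_training_balance(labels, min_samples=50, max_ratio=1.5):
    """Return a list of warnings about sample counts per emotion.

    min_samples follows the 50+ samples guideline above.
    max_ratio is a hypothetical limit on largest/smallest class size.
    """
    counts = Counter(labels)
    warnings = []
    for label, n in sorted(counts.items()):
        if n < min_samples:
            warnings.append(
                f"{label}: only {n} samples, collect at least {min_samples}"
            )
    if counts:
        smallest, largest = min(counts.values()), max(counts.values())
        if largest > max_ratio * smallest:
            warnings.append(
                f"unbalanced data: largest class has {largest} samples, "
                f"smallest has {smallest}"
            )
    return warnings


collected = ["happy"] * 60 + ["sad"] * 20
for message in check_training_balance(collected):
    print(message)
```

Balance matters for KNN in particular: a class with many more stored samples is more likely to supply the nearest neighbors, which biases predictions toward it.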

We welcome contributions! Please see our contributing guidelines:

- Fork the repository

- Create a feature branch

- Make your changes

- Add tests if applicable

- Submit a pull request

- Additional emotion mappings

- Improved UI/UX design

- Performance optimizations

- Documentation improvements

- Cross-platform compatibility

This project is licensed under the MIT License - see the LICENSE file for details.

- ✅ Free to use - Anyone can use this software for any purpose

- ✅ Free to modify - You can change the code as needed

- ✅ Free to distribute - Share the software with others

- ✅ Commercial use - Use in commercial projects

- ✅ Attribution required - Must include the original license and copyright notice

Perfect for open-source projects that want maximum compatibility and adoption!

- MediaPipe - Google's framework for face mesh detection

- TensorFlow.js - Machine learning in the browser

- OpenCV - Computer vision library

- scikit-learn - Machine learning toolkit

If you encounter any issues or have questions:

- Check the troubleshooting section

- Review the documentation

- Open an issue on GitHub

- Contact the maintainers

Built with ❤️ for the AI/ML community

Making emotion detection accessible, customizable, and real-time for everyone.