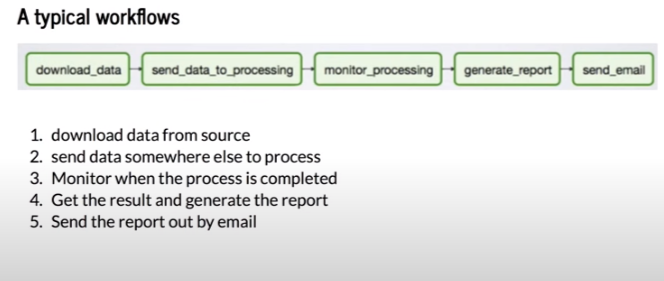

Airflow: a data pipeline management framework, and how it can help us solve the problems of traditional ETL.

Airflow is a platform that lets you build, run, and monitor workflows (pipelines).

A workflow is a sequence of scheduled tasks triggered by an event.

Airflow is used to handle big data pipelines.
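To make the "sequence of tasks" idea concrete, here is a minimal plain-Python sketch (not Airflow's API) that models a workflow as tasks plus the upstream tasks each one depends on, and derives a valid run order with the standard library's `graphlib.TopologicalSorter` (Python 3.9+). The task names and the `run_order` helper are hypothetical. In Airflow itself a workflow is a DAG (directed acyclic graph) of tasks, with dependencies typically written as `extract >> transform >> load`; that is why a cycle is treated as an error here.

```python
from graphlib import TopologicalSorter

# Hypothetical extract -> transform -> load workflow: each task maps to the
# set of upstream tasks that must finish before it may start.
workflow = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}


def run_order(dag: dict[str, set[str]]) -> list[str]:
    """Return one valid execution order for the tasks in `dag`.

    Raises graphlib.CycleError if the dependencies contain a cycle,
    since a cyclic workflow could never finish.
    """
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    print(run_order(workflow))  # ['extract', 'transform', 'load']
```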

The traditional ETL approach:

- Write a script to pull data from a DB and send it to HDFS for processing.

- Schedule the script as a cron job.
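For reference, the traditional approach can be sketched as a small Python script. This is a minimal sketch under stated assumptions: an in-memory SQLite database (standard `sqlite3` module) stands in for the real source DB, a CSV file in a temporary directory stands in for the data that would be sent to HDFS, and the table, query, and `extract_to_csv` function are hypothetical.

```python
import csv
import sqlite3
import tempfile
from pathlib import Path


def extract_to_csv(conn: sqlite3.Connection, query: str, out_path: Path) -> int:
    """Pull rows from the source DB with `query` and write them, with a
    header row, to a CSV file. Returns the number of data rows written."""
    cursor = conn.execute(query)
    header = [col[0] for col in cursor.description]
    rows = cursor.fetchall()
    with out_path.open("w", newline="") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return len(rows)


if __name__ == "__main__":
    # An in-memory SQLite DB stands in for the real source database.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)])
    with tempfile.TemporaryDirectory() as tmp:
        out = Path(tmp) / "orders.csv"
        n = extract_to_csv(conn, "SELECT id, amount FROM orders ORDER BY id", out)
        print(f"wrote {n} rows to {out}")
        # A real job would now upload the file to HDFS,
        # e.g. `hdfs dfs -put orders.csv /some/hdfs/dir` (path hypothetical).
```

Scheduling it is then a single crontab entry such as `0 2 * * * python3 /path/to/extract.py` (daily at 02:00; the path is hypothetical). Nothing in this setup retries a failed run, records how long it took, or knows which job should run before it.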

Problems with this approach:

- Failures: if a job fails, it has to be retried (how many times? how often?).

- Monitoring: did the job pass or fail, and how long did it take?

- Dependencies:

  - Data dependencies: the upstream data may be missing.

  - Execution dependencies: job 2 must run only after job 1 has finished.

- Scalability: there is no centralized scheduler across the different cron machines.

- Deployment: new changes have to be deployed constantly.

- Processing historical data: backfilling/rerunning jobs over historical data.
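Several of these problems can be illustrated with a plain-Python sketch of what a scheduler has to do for each task. This is not Airflow code: `run_with_retries`, `wait_for`, and `backfill_dates` are hypothetical helpers, and the parameter names `retries`, `retry_delay`, `timeout`, and `poke_interval` are borrowed from Airflow's task and sensor arguments only to make the connection visible.

```python
import time
from datetime import date, timedelta
from pathlib import Path
from typing import Callable


def run_with_retries(task: Callable[[], object], retries: int = 3,
                     retry_delay: float = 1.0) -> dict:
    """Failures + monitoring: run `task`, retrying up to `retries` extra times
    with a fixed `retry_delay` (seconds) between attempts, and report the
    outcome, the number of attempts used, and the total elapsed time."""
    if retries < 0:
        raise ValueError("retries must be >= 0")
    start = time.monotonic()
    status = "failed"
    attempt = 0
    for attempt in range(1, retries + 2):  # first try plus `retries` retries
        try:
            task()
            status = "success"
            break
        except Exception:  # a real scheduler would also log the error
            if attempt <= retries:
                time.sleep(retry_delay)
    return {"status": status, "attempts": attempt,
            "duration_s": time.monotonic() - start}


def wait_for(path: Path, timeout: float = 10.0, poke_interval: float = 0.5) -> bool:
    """Data dependency: poll every `poke_interval` seconds until upstream data
    at `path` exists, giving up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if path.exists():
            return True
        time.sleep(poke_interval)
    return path.exists()


def backfill_dates(start: date, end: date) -> list[date]:
    """Historical data: every daily date from `start` to `end` inclusive,
    i.e. the runs needed to rerun a daily job over a past period."""
    return [start + timedelta(days=i) for i in range((end - start).days + 1)]


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky() -> None:
        # Hypothetical task that fails twice, then succeeds.
        calls["n"] += 1
        if calls["n"] < 3:
            raise RuntimeError("transient failure")

    print(run_with_retries(flaky, retries=3, retry_delay=0.1))
    print(backfill_dates(date(2024, 1, 30), date(2024, 2, 2)))
```

With cron, every script has to reimplement logic like this on its own; Airflow instead handles retries, run status and duration, waiting on upstream data, and backfills centrally for all tasks.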

Allows you to run many containers simultaneously.

Containers are lightweight: they don't need a hypervisor, so you can run more containers on the same hardware than you could virtual machines.

Benefits:

- No more manually managing and maintaining dependencies and deployments.

- Easy to share and deploy different versions and environments.

- Keep track of versions through GitHub tags and releases.

- Easy deployment from testing to production environments.