SVD docs improved #54002

💊 CI failures summary and remediations: As of commit 37e2f4b (more details on the Dr. CI page):

🕵️ 2 new failures recognized by patterns. The following CI failures do not appear to be due to upstream breakages:

Maybe worth preserving the link to the gauge theory blog post in some Notes section?

I explained the problems in simpler terms in the last ``.. note::`` block. In my opinion, the post was too abstract for a reader not used to the notation of differential geometry / physics.

torch/_torch_docs.py

For torch.linalg.svd we should clarify what actually happens. When we say the gradient is only "well-defined" in certain cases, we're not telling the reader what torch.svd() or torch.linalg.svd() actually does. Does it compute the gradient when the gradient is well-defined? What does it compute when the gradient is not well-defined? Does it throw a runtime error?

That is a great question. The answer is: I do not know.

I have been trying to understand this problem better, which led to the discussion in #47599 (comment).

It turns out to be a surprisingly tricky problem, and I do not understand it well enough just yet. I will come back to this once I do.

Appreciating what we don't know is a great start.

Maybe we can unblock this PR by:

- leaving this issue open for future resolution

- saying that produced gradients are undefined unless the grad passed to svd has certain properties (this would require some wordsmithing)

torch/linalg/__init__.py

What does "unstable" mean?

Close to singular. Basically, the gradient has a 1. / (s_i - s_j) term, where the s_i are the singular values, so it becomes very large when two singular values are close to each other.
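A minimal sketch of where that term comes from, assuming the commonly used formulation of the real SVD backward pass in which the singular-vector gradients are weighted by a matrix with F[i][j] = 1 / (s_j^2 - s_i^2) for i != j. Since s_j^2 - s_i^2 = (s_j - s_i)(s_j + s_i), each entry contains the 1 / (s_i - s_j) factor. The helper names `svd_backward_factors` and `max_abs` are hypothetical and are not PyTorch functions.

```python
def svd_backward_factors(s):
    """Hypothetical helper: F[i][j] = 1 / (s[j]**2 - s[i]**2) for i != j, 0 on the diagonal.

    Assumed to mirror the coefficient matrix of the standard real SVD backward
    formula; it is an illustration, not PyTorch's implementation.
    """
    n = len(s)
    F = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                # s_j^2 - s_i^2 = (s_j - s_i)(s_j + s_i): the 1 / (s_i - s_j) behaviour
                F[i][j] = 1.0 / (s[j] ** 2 - s[i] ** 2)
    return F


def max_abs(F):
    """Largest absolute entry of a list-of-lists matrix."""
    return max(abs(x) for row in F for x in row)


well_separated = svd_backward_factors([3.0, 1.0])
nearly_repeated = svd_backward_factors([1.0 + 1e-3, 1.0])

print(max_abs(well_separated))   # 0.125
print(max_abs(nearly_repeated))  # roughly 500
```

Shrinking the gap between the two singular values makes the factors grow without bound; with exactly repeated values the expression is undefined (this sketch would raise ZeroDivisionError).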

Can we clarify that in the documentation? Or maybe we should consider adding a glossary to the linalg docs?

I've added a better explanation now, as is done in the documentation of symeig. I will open an issue to discuss how to make the documentation of torch.linalg more consistent, covering both the style and things like this one.

I like a lot of what this PR does and think it makes the writing clearer. The mathematical formatting is very jarring, however. Check out: https://11545664-65600975-gh.circle-artifacts.com/0/docs/linalg.html. Maybe we can make the inline mathematical expressions blend more naturally with the surrounding text? There are a few places where I would like our docs to be clearer, but this PR doesn't need to improve upon them.

|

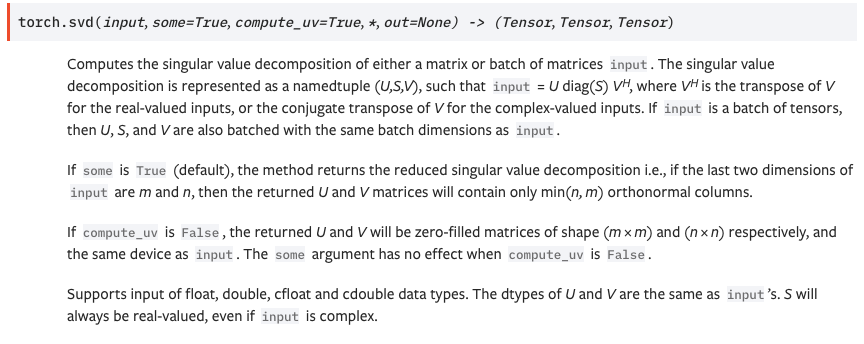

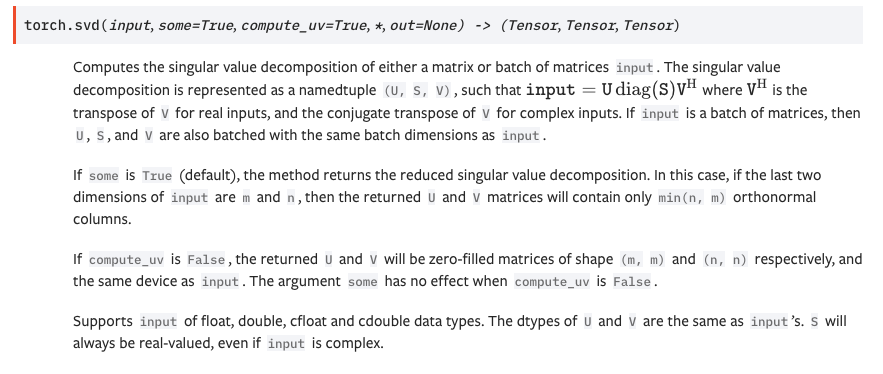

Links to rendered documentation from this PR: Screenshots of the main text |

About the code rendering, fair enough. I will change it back to double backquotes and Unicode. That being said, it would be good to know how to write decent maths that uses variables (e.g. the inputs / outputs of the function) with MathJax. In this case the formula is simple enough to be written in Unicode, but more complex formulae may not be. For example, the formulae in …

About the code highlighting, @IvanYashchuk raised the point that when you write equations such as … I think it is better to unify everything that's a variable / formula to use double backticks. I believe this is important because, for example, in this SVD documentation we sometimes write …

It would be nice to have more guidelines for how to write our documentation. cc @brianjo

Maybe we can tweak the rendering with an add-on or setting? But without pursuing that I think we should try to make the docs look reasonable given our current system.

:attr:`input` is the best we have, I think. Maybe we should just be more careful not to use the word "input" except to refer to the input argument?
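A minimal sketch of how the conventions discussed in this thread could sit side by side in a docstring, assuming Sphinx with the standard `:attr:` and `:math:` roles: `:attr:` for the argument, double backticks for variables and short formulas, and `:math:` for TeX. The function `svd_doc_example` is hypothetical, and which convention PyTorch should adopt was still an open question at this point in the discussion.

```python
def svd_doc_example(input, full_matrices=True):
    r"""Hypothetical docstring mixing the three styles discussed above.

    Computes the singular value decomposition of :attr:`input`.

    Returns ``(U, S, Vh)`` such that ``input = U @ diag(S) @ Vh``.

    The same identity written with TeX: :math:`A = U \operatorname{diag}(S) V^{H}`.
    """
    raise NotImplementedError("documentation example only")


# Sphinx roles such as :attr: and :math: are stored verbatim in __doc__;
# they are only interpreted when the documentation is built.
print(svd_doc_example.__doc__)
```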

My suggestions for torch.linalg documentation formatting:

I agree about the "Use of TeX" part. About the code highlighting, in my opinion it's better to always go for double backticks.

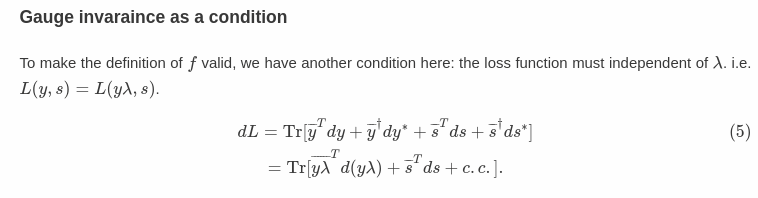

Cons:

About the technical references, I agree. That being said, I do not think the link to those formulas on gauges is very useful here. I think the set of people who do not know that the SVD is not unique, but do know what a gauge is and would not be scared by the following formula, is basically empty: … I believe this link should go as a comment in … Surely you can explain this lack of uniqueness as a gauge-invariance problem, as the Stiefel manifold is a principal bundle over the Grassmannian and the SVD is a map onto a product of Grassmannians, but this is overkill. I think it is better to explain that the SVD chooses subspaces, not bases of orthonormal vectors. To represent those subspaces we need to choose a basis, but any basis will do. In particular, we can take a basis and rotate it inside the subspace, and that gives another basis of the same subspace. This is even explained in the Wikipedia article on the SVD.

This all boils down to choosing the level of mathematical background we assume of the reader and writing for that level. When it comes to linear algebra, I think it is safer to stay on the conservative side.
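The basis-rotation argument above can be checked numerically. This is a minimal sketch using NumPy's `np.linalg.svd` (which uses the same `(U, S, Vh)` convention as `torch.linalg.svd`) as a stand-in; the helper `rotation_in_first_two_coords` is hypothetical. It builds a real matrix with a repeated singular value, rotates the corresponding left and right singular vectors by the same angle, and shows that the singular vectors change while the decomposition still reproduces the matrix.

```python
import numpy as np


def rotation_in_first_two_coords(theta, n=3):
    """Hypothetical helper: an n x n rotation acting only on the first two coordinates."""
    c, s = np.cos(theta), np.sin(theta)
    R = np.eye(n)
    R[:2, :2] = [[c, -s], [s, c]]
    return R


# A real matrix whose largest singular value (2) is repeated.
A = np.diag([2.0, 2.0, 1.0])
U, S, Vh = np.linalg.svd(A)  # S is sorted in descending order: [2, 2, 1]

# Rotate the basis of the repeated-singular-value subspace by the same angle
# on both sides: U2 = U R and V2 = V R, hence Vh2 = V2^T = R^T Vh.
R = rotation_in_first_two_coords(0.7)
U2 = U @ R
Vh2 = R.T @ Vh

print(np.allclose(U2, U))                     # False: different singular vectors
print(np.allclose(U2 @ np.diag(S) @ Vh2, A))  # True: same decomposition of A
```

Both `(U, S, Vh)` and `(U2, S, Vh2)` are valid SVDs of `A`, so any quantity depending on the individual vectors in that block, rather than on the subspace they span, is not determined by `A` alone.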

I welcome the debate on how best to format the docs, but I would like to suggest we move it to its own issue and not block this PR. That leaves the question of how to format this PR. Would it be OK, @lezcano, if we try to minimize the disruption of weird whitespace for now, follow up by fixing that issue and creating a standard, and then possibly revisit this PR to impose that standard?

I agree with you on the complexity of the "gauge-based" explanation vs. one using bases, which are familiar to anyone who has taken a course in linear algebra.

Sure, let's move this discussion somewhere else. That being said, I do not know when to use backticks. I see these cases:

For the last case, should it be:

Again, I'm happy to format this PR in whichever way you see fit. I just do not know what the formatting should be.

Simpler explanation in the warnings

Improve the writing

Sorry for that.

I have not been able to split the long line. I really hope flake8 does not complain. If it does, I do not know how to solve it. I found this SO post, but I have not managed to reproduce the behaviour it shows. Edit: It looks like flake8 does not complain. I've also added a warning making explicit the only cases in which the backward pass is correct, and I've polished the explanation of why it fails in the other cases.

Moving the discussion about the formatting here: #54878

Cool! Always great to have better docs.

@mruberry has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

What do you think of the test failure, @lezcano?

Wow, that's a new one. I'll have a look in a second.

@mruberry has imported this pull request. If you are a Facebook employee, you can view this diff on Phabricator.

…``Vh`` in ``linalg.svd``, always use double backticks...) ``U`` and ``V`` are not well-defined when the input is complex, or when it is real but has repeated singular values. The previous one pointed to a somewhat obscure post on gauge theory.
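For the complex case, a minimal sketch of why ``U`` and ``V`` are not well-defined, again using NumPy's `np.linalg.svd` as a stand-in for `torch.linalg.svd` (same `(U, S, Vh)` convention). Multiplying the k-th left and right singular vectors by the same unit complex number e^{iφ_k} yields different singular vectors that reconstruct the same matrix. The random matrix and the particular phases are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((4, 3)) + 1j * rng.standard_normal((4, 3))
U, S, Vh = np.linalg.svd(A, full_matrices=False)

# Multiply the k-th left and right singular vectors by the same phase e^{i phi_k}.
phases = np.exp(1j * np.array([0.3, -1.2, 2.0]))
U2 = U * phases                    # column k of U times phases[k]
Vh2 = phases.conj()[:, None] * Vh  # V2 = V * phases, so row k of Vh times conj(phases[k])

print(np.allclose(U2, U))                     # False: different singular vectors
print(np.allclose(U2 @ np.diag(S) @ Vh2, A))  # True: same decomposition of A
```

The reconstruction is unchanged because diag(e^{iφ}) diag(S) diag(e^{-iφ}) = diag(S); only quantities that are invariant under these phase changes are determined by the input.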